Slow query performance when bulk inserting

Floris Robbemont

We are experiencing slow queries while running a large bulk insert. The total is approximately 12 million documents.

Without the bulk insert everything is fast (< 100 ms). But during the bulk insert, queries on indexes related to it (those covering the same collection the bulk insert writes to) slow down to 8 seconds! The index we're querying is pretty basic: it's map-only, with no LoadDocument calls. It does have 9 different sort columns defined.

The load on the server is not that high during the bulk insert (20-30%). It has 16 cores and 16 GB RAM. The bulk insert is being done from a different machine. All storage is RAID 10 across 6 SSDs.

I'm wondering why this is happening. I don't mind stale data, but 8 seconds per query cannot be right. I also expected the performance to be higher with this kind of hardware.

Is there anything we can do about this?

Oren Eini (Ayende Rahien)

Hibernating Rhinos Ltd

Oren Eini l CEO l Mobile: + 972-52-548-6969

Office: +972-4-622-7811 l Fax: +972-153-4-622-7811

--

You received this message because you are subscribed to the Google Groups "RavenDB - 2nd generation document database" group.

To unsubscribe from this group and stop receiving emails from it, send an email to ravendb+unsubscribe@googlegroups.com.

For more options, visit https://groups.google.com/d/optout.

Floris Robbemont

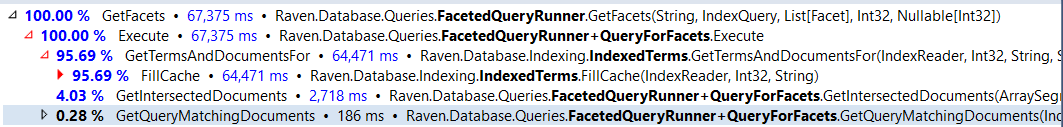

But it's mostly the facets query that takes more than 8 seconds. The results query takes about a second.

If we use the direct query URL in a browser it's still very slow during the bulk. So that would mean it's not client related.
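The browser check above can be repeated from a script, which makes it easier to compare timings before and during the bulk insert. The following is only a minimal sketch: the helper names (`time_calls`, `summarize`, `fetch`) are made up for illustration and are not part of RavenDB, and the query URL is passed in on the command line rather than assumed.

```python
import statistics
import sys
import time
import urllib.request


def time_calls(fn, repeats=10):
    """Call fn() `repeats` times and return each call's duration in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Return the min, median and max of the measured durations (seconds)."""
    return {
        "min": min(durations),
        "median": statistics.median(durations),
        "max": max(durations),
    }


def fetch(url):
    """Issue one GET and read the full body so the whole response is timed."""
    with urllib.request.urlopen(url, timeout=60) as response:
        response.read()


def main(argv):
    # The URL is whatever direct query URL you would paste into the browser.
    if len(argv) < 2:
        print("usage: python time_query.py <query-url>")
        return
    stats = summarize(time_calls(lambda: fetch(argv[1])))
    print({name: round(value, 3) for name, value in stats.items()})


if __name__ == "__main__":
    main(sys.argv)
```

Running it once against the facets URL and once against the results URL, both with and without the bulk insert active, gives four sets of numbers to compare.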

Oren Eini (Ayende Rahien)

Flo...@lucrasoft.nl

On Thursday, 23 March 2017 at 01:18:44 UTC+1, Oren Eini wrote:

Can you run this under a profiler?

On Thu, Mar 23, 2017 at 2:03 AM, Floris Robbemont <florisr...@hotmail.nl> wrote:

No. The only thing we're doing is NoTracking and NoCaching. Also a SelectFields call.
