GridCRF

155 views

Skip to first unread message

Roshan Santhosh

Jan 13, 2016, 2:02:03 AM1/13/16

to pystruct

I plan on using GridCRF for a image segmentation problem. But I am not able to understand the format in which the input has to be given.

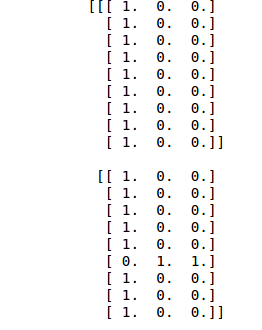

A single sample has to be of the form (width,height,n_classes). However for the "Learning 2d interactions" example, the x input is given like this

Why is the final array of the form [1 0 0] and [0 1 1] ? I understand that there are 3 classes but I dont see how this labelling relates to that. Any help would be appreciated.

A single sample has to be of the form (width,height,n_classes). However for the "Learning 2d interactions" example, the x input is given like this

Why is the final array of the form [1 0 0] and [0 1 1] ? I understand that there are 3 classes but I dont see how this labelling relates to that. Any help would be appreciated.

Andreas Mueller

Jan 14, 2016, 3:12:39 PM1/14/16

to pyst...@googlegroups.com

What do you mean by "final array"?

A sample is not of the form (width, height, n_classes).

The sample is (width, height, n_features), and the labels are (width, height).

I just realized there was a typo in the docstring of the GridCRF and DirectedGridCRF where it said "n_states".

I'm building a fixed version right now.

Cheers,

Andy

A sample is not of the form (width, height, n_classes).

The sample is (width, height, n_features), and the labels are (width, height).

I just realized there was a typo in the docstring of the GridCRF and DirectedGridCRF where it said "n_states".

I'm building a fixed version right now.

Cheers,

Andy

--

You received this message because you are subscribed to the Google Groups "pystruct" group.

To unsubscribe from this group and stop receiving emails from it, send an email to pystruct+u...@googlegroups.com.

For more options, visit https://groups.google.com/d/optout.

Roshan Santhosh

Jan 14, 2016, 3:51:14 PM1/14/16

to pystruct

By final array, I meant the features. So in this example, the feature for node in first row and first column is [1 0 0] and the feature for row 1 , column 6 is [0 1 1]. How are we supposed to interpret these features?

Andreas Mueller

Jan 14, 2016, 4:05:05 PM1/14/16

to pyst...@googlegroups.com

The 2nd and 3rd features are actually identical and you could just

drop the third one (it's there for historical reasons).

The first feature indicates "background" and the second feature indicates "foreground", so being part of either a side or the center of a cross.

The task here is to learn to distinguish whether a foreground pixel is the center or not.

The first feature indicates "background" and the second feature indicates "foreground", so being part of either a side or the center of a cross.

The task here is to learn to distinguish whether a foreground pixel is the center or not.

Roshan Santhosh

Jan 15, 2016, 6:41:13 AM1/15/16

to pystruct

For a classification problem with 3 classes, does the feature vector also have to be of length 3 and do the values necessarily have to be integers?

For the Latent Dynamics CRF (toy cross) problem , you have used vectors of length 2 with either 0 or 1 as its values. This has been compared to the unary potential for a particular class to be present at the node. For example, node 0 has a feature [1 0 ] indicating that its more likely a background pixel. Can we instead also have features like [0.75 0.25].

Also given a different problem, can we use a vector of length n as a feature for the node?

2) The GridCRF for toy cross problem gives 12 weights. Can you explain what these weights correspond to? Are these unary/ edge potentials/ latent edge potentials?

For the Latent Dynamics CRF (toy cross) problem , you have used vectors of length 2 with either 0 or 1 as its values. This has been compared to the unary potential for a particular class to be present at the node. For example, node 0 has a feature [1 0 ] indicating that its more likely a background pixel. Can we instead also have features like [0.75 0.25].

Also given a different problem, can we use a vector of length n as a feature for the node?

2) The GridCRF for toy cross problem gives 12 weights. Can you explain what these weights correspond to? Are these unary/ edge potentials/ latent edge potentials?

Andreas Mueller

Jan 15, 2016, 12:26:05 PM1/15/16

to pyst...@googlegroups.com

On 01/15/2016 06:41 AM, Roshan Santhosh

wrote:

For a classification problem with 3 classes, does the feature vector also have to be of length 3 and do the values necessarily have to be integers?

No.

For the Latent Dynamics CRF (toy cross) problem , you have used vectors of length 2 with either 0 or 1 as its values. This has been compared to the unary potential for a particular class to be present at the node. For example, node 0 has a feature [1 0 ] indicating that its more likely a background pixel. Can we instead also have features like [0.75 0.25].

Yes.

Also given a different problem, can we use a vector of length n as a feature for the node?

yes.

The reason why the features are more or less the classes in the examples are that these are toy problems.

In real problems, there is usually a much more complex relationship.

2) The GridCRF for toy cross problem gives 12 weights. Can you explain what these weights correspond to? Are these unary/ edge potentials/ latent edge potentials?

Which one?

GridCRF has first (n_features x n_states) unary potentials, then (n_states x n_states) pairwise potentials,

as the docs explain here:

http://pystruct.github.io/generated/pystruct.models.GraphCRF.html#pystruct.models.GraphCRF

Roshan Santhosh

Jan 17, 2016, 2:51:27 AM1/17/16

to pystruct

got it . Thanks

1)

For the Latent Variable Hierarchical CRF, you have used a random initialization of the hidden weights like this

Whats the rationale for using such an initialization? And why random 2 and 3's in the end of each vector. Are the random 2 and 3's to represent the classes of the hidden layer?

Does this change affect the running time of the model? I am running this model on 5 samples of 28*28 images (MNIST) and have added hidden variables for each block of 7*7. So 16 additional edges because of the latent variables. However the model seems to be stuck on iteration 0. Without the latent variables, the model runs sufficiently fast.

H_init = [np.hstack([y.ravel(), np.random.randint(2, 4, size=2 * 2)]) for y in Y]

Whats the rationale for using such an initialization? And why random 2 and 3's in the end of each vector. Are the random 2 and 3's to represent the classes of the hidden layer?

2)

I ran a similar model as the above example without defining H_init

My code was instead of

latent_svm.fit(X_,Y_flat)

latent_svm.fit(X_,Y_flat,H_init)A model which took additional diagonal edges (no latent variables) ran quite fast too. This is surprising since both methods simply add new edges to the model. While one is fast , the other (with latent variables) is seemingly stuck on iteration 0. Is there any reason for this?

Andreas Mueller

Jan 19, 2016, 11:53:57 AM1/19/16

to pyst...@googlegroups.com

On 01/17/2016 02:51 AM, Roshan Santhosh

wrote:

got it . Thanks

1)For the Latent Variable Hierarchical CRF, you have used a random initialization of the hidden weights like this

H_init = [np.hstack([y.ravel(), np.random.randint(2, 4, size=2 * 2)]) for y in Y]

Whats the rationale for using such an initialization? And why random 2 and 3's in the end of each vector. Are the random 2 and 3's to represent the classes of the hidden layer?

Yes. (can you link to the code again?)

There are no "node types" in PyStruct at the moment. So all variables are treated equally. Some are observed and some are not. For the hidden nodes to have different

semantics, they need to have different variables. The observed variables here have states 0 and 1, and the hidden have 2 and 3.

I think the reason I used random initialization is that the default initialization is using k-means. For this particular example, k-means finds the "right"

hidden states, so the latent SVM has nothing to do any more. I wanted to show that it can actually learn the right states from random initialization.

This should probably be made more clear in the example. A pull request would be very welcome.

There are no "node types" in PyStruct at the moment. So all variables are treated equally. Some are observed and some are not. For the hidden nodes to have different

semantics, they need to have different variables. The observed variables here have states 0 and 1, and the hidden have 2 and 3.

I think the reason I used random initialization is that the default initialization is using k-means. For this particular example, k-means finds the "right"

hidden states, so the latent SVM has nothing to do any more. I wanted to show that it can actually learn the right states from random initialization.

This should probably be made more clear in the example. A pull request would be very welcome.

2)I ran a similar model as the above example without defining H_initMy code wasinstead of

Leaving out H_init will provide smarter initialization, so it should

make the model run faster.

Does this change affect the running time of the model? I am running this model on 5 samples of 28*28 images (MNIST) and have added hidden variables for each block of 7*7. So 16 additional edges because of the latent variables. However the model seems to be stuck on iteration 0. Without the latent variables, the model runs sufficiently fast.

Iteration 0 here means fitting one structured SVM with the initial

values of the latent nodes. What inference method are you using? I

just saw the example uses lp, which

is silly and will be quite slow.

Have you turned on verbosity to see what's going on in the inner loop of fitting the fully observed SVM in the first step?

is silly and will be quite slow.

Have you turned on verbosity to see what's going on in the inner loop of fitting the fully observed SVM in the first step?

A model which took additional diagonal edges (no latent variables) ran quite fast too. This is surprising since both methods simply add new edges to the model. While one is fast , the other (with latent variables) is seemingly stuck on iteration 0. Is there any reason for this?

Using latent variables means iteratively fitting many structured

SVMs, and will always be slower -- at least using the methods

implemented in PyStruct.

HTH,

Andy

HTH,

Andy

Roshan Santhosh

Jan 21, 2016, 12:42:02 AM1/21/16

to pystruct

I have set the verbose parameter to 1 in the OneSlackSSVM function. That just shows :

LATENT SVM ITERATION 0Training 1-slack dual structural SVMiteration 0

I was using the lp inference method as given in the example. I am new to inference methods and am not very sure about the properties of each of the inference methods. Would be helpful if you could do complete the "Tips on choosing an Inference algorithm" section. Which inference algorithm do you suggest I should use?

Message has been deleted

Message has been deleted

Message has been deleted

Message has been deleted

Andreas Mueller

Jan 21, 2016, 12:21:58 PM1/21/16

to pyst...@googlegroups.com

Yeah AD3 should work better or possibly QPBO.

The problem is caused by the way the latent nodes are implemented.

For the latent node crf you are using, there is a deterministic relationship between the hidden nodes and the visible nodes (multiple hidden states correspond to one visible state).

However, the solution for the hidden variables that you got doesn't respect this relationship.

This is caused by the inference being approximate.

You have the following options:

- enforce the consistency between the latent and observed variables more by changing the max_entry in models/latent_node_crf.py line 235 (replace 1e2 by a larger value?)

- change the "raise ValueError" to simply a warning in models/latent_node_crf.py line 242 and hope that it doesn't mess up learning too much?

- use an exact inference method, like ('ad3', {'branch_and_bound': True})

Hth.

I'm sorry, this part of the code is not very robust at the moment and can surely be improved.

Let me know if any of these solutions work for you.

Cheers,

Andy

The problem is caused by the way the latent nodes are implemented.

For the latent node crf you are using, there is a deterministic relationship between the hidden nodes and the visible nodes (multiple hidden states correspond to one visible state).

However, the solution for the hidden variables that you got doesn't respect this relationship.

This is caused by the inference being approximate.

You have the following options:

- enforce the consistency between the latent and observed variables more by changing the max_entry in models/latent_node_crf.py line 235 (replace 1e2 by a larger value?)

- change the "raise ValueError" to simply a warning in models/latent_node_crf.py line 242 and hope that it doesn't mess up learning too much?

- use an exact inference method, like ('ad3', {'branch_and_bound': True})

Hth.

I'm sorry, this part of the code is not very robust at the moment and can surely be improved.

Let me know if any of these solutions work for you.

Cheers,

Andy

On 01/21/2016 01:22 AM, Roshan Santhosh

wrote:

Surely. Since I am going to work with CRF's I can probably help with the documentation and examples. I will go through the inference methods and create a small documentation on them which could be put in the "Tips on choosing an Inference algorithm" section.

Also I am using the ad3 inference algorithm now and its running pretty fast. However when it reaches LATENT SVM ITERATION 1 it exits with an error saying "Inconsistent h and y in latent node CRF".What could be causing this problem?

--

Roshan Santhosh

Feb 2, 2016, 1:27:19 AM2/2/16

to pystruct

1)

Suppose I create a GraphCRF in which I add the edges for nearest 8-neighbors for all pixels. Does adding additional edges to the nearest 15-neighbors make any difference to the CRF as such.

Is it right to assume that addition of the 8-neighbors for all nodes will essentially connect all nodes of the graph. And so additional edges for each node doesnt really add much to improve the CRF?

2)

I recently used CRF's for a image denoising application. I began with a very basic GraphCRF. Ran it using lp, ad3 and qpbo inference algorithms and all three gave really good results.

However, I try running the same example with the latent variables and the preformance was relatively poor. Any reasons why this would happen? I believed adding hidden variables adds modelling power to the CRF

Andreas Mueller

Feb 2, 2016, 3:27:34 PM2/2/16

to pyst...@googlegroups.com

On 02/02/2016 01:27 AM, Roshan Santhosh wrote:

> 1)

> Suppose I create a GraphCRF in which I add the edges for nearest

> 8-neighbors for all pixels. Does adding additional edges to the

> nearest 15-neighbors make any difference to the CRF as such.

> Is it right to assume that addition of the 8-neighbors for all nodes

> will essentially connect all nodes of the graph. And so additional

> edges for each node doesnt really add much to improve the CRF?

>

model more powerful (though you should probably encode the distance or

edge type somehow in edge features).

It also makes the inference harder / longer, because there are more edges.

What do you mean by "essentially connect all nodes"? That the graph is

connected? Well you could make the graph connected by just having two

edges on each node and doing a space-filling curve

(like a snake going up and down). Clearly that would be a worse model,

though.

>

> 2)

> I recently used CRF's for a image denoising application. I began with

> a very basic GraphCRF. Ran it using lp, ad3 and qpbo inference

> algorithms and all three gave really good results.

> However, I try running the same example with the latent variables and

> the preformance was relatively poor. Any reasons why this would

> happen? I believed adding hidden variables adds modelling power to the CRF

Hidden nodes make the problem much harder to learn, in particular they

make it non-convex.

Roshan Santhosh

Feb 15, 2016, 10:59:28 PM2/15/16

to pystruct

1)

What I meant is this.

1 2 3 4

5 6 7 8

9 10 11 12

Suppose in the above graph, I add a node between all 4-neighborhood pairs. So this will include edges between (1,5) and (5,9). Is there any point in then adding an edge between 1 and 9?. Will the connectivity between 1 and 5 and 5 and 9 , ensure connectivity between 1 and 9 as well? Will adding (1,9) as an edge be a redundant exercise?

2)

I am working on a problem involving 3D images. The volumes are of size 240x240x155. A 3D CRF model had memory issues as the dataset was too huge. So I am running 2D CRF models with individual layers as training samples. So each training sample has 240*240 nodes. It has 2 classes. The CRF model has 4-neighborhood connectivity. The training of this CRF model is also time consuming.

Could you suggest which learner and inference method would be best for this purpose?

Also I would like to better understand the training process. What are the parameters new constraints, cutting plane objective and primal objective? Are there any resources that explain these?

Andy

Feb 18, 2016, 8:02:36 PM2/18/16

to pyst...@googlegroups.com

On 02/15/2016 10:59 PM, Roshan Santhosh

wrote:

1)What I meant is this.

1 2 3 45 6 7 89 10 11 12

Suppose in the above graph, I add a node between all 4-neighborhood pairs. So this will include edges between (1,5) and (5,9). Is there any point in then adding an edge between 1 and 9?. Will the connectivity between 1 and 5 and 5 and 9 , ensure connectivity between 1 and 9 as well? Will adding (1,9) as an edge be a redundant exercise?

It's not redundant, depending on your edge features. You should make

sure you understand what it means.

What do you mean by "ensure connectivity between 1 and 9"?

You might be interested in this paper:

https://www.robots.ox.ac.uk/~vgg/rg/papers/kraehenbuehl__nips2012__densecrf.pdf

What do you mean by "ensure connectivity between 1 and 9"?

You might be interested in this paper:

https://www.robots.ox.ac.uk/~vgg/rg/papers/kraehenbuehl__nips2012__densecrf.pdf

2)I am working on a problem involving 3D images. The volumes are of size 240x240x155. A 3D CRF model had memory issues as the dataset was too huge. So I am running 2D CRF models with individual layers as training samples. So each training sample has 240*240 nodes. It has 2 classes. The CRF model has 4-neighborhood connectivity. The training of this CRF model is also time consuming.

I would suggest you look into supervoxels, for example SLIC. That

will likely allow you to do a CRF on the 3D data.

Could you suggest which learner and inference method would be best for this purpose?

Also I would like to better understand the training process. What are the parameters new constraints, cutting plane objective and primal objective? Are there any resources that explain these?

The references explain them ;)

Look into the paper by Joachims.

If you work on CRF for vision, you should probably just buy the book by Nowozin and Lampert that I referenced. It's great, goes relatively slow, and gives a ton of detail.

Look into the paper by Joachims.

If you work on CRF for vision, you should probably just buy the book by Nowozin and Lampert that I referenced. It's great, goes relatively slow, and gives a ton of detail.

Reply all

Reply to author

Forward

0 new messages