ORC Performance issue


Sivaramakrishnan Narayanan

Jul 9, 2014, 12:51:30 AM7/9/14

to presto...@googlegroups.com

@Dain - will your ORC changes help fix this perf issue?

I have a 10 node cluster and store_sales table from TPCDS of scale 1000 in ORC format. I run this query:

presto:default> select count(*) from tpcds_orc_1000.store_sales where ss_customer_sk=100;

_col0

-------

245

(1 row)

Query 20140709_042916_00020_dh8f9, FINISHED, 11 nodes

Splits: 1,705 total, 1,705 done (100.00%)

0:25 [2.88B rows, 38.2GB] [113M rows/s, 1.5GB/s]

It takes 25 seconds and the total number of rows selected is only 245.

Now, when I run this query:

presto:default> select * from tpcds_orc_1000.store_sales where ss_customer_sk=100;

<snip output>

Query 20140709_043309_00002_2xdkp, FINISHED, 11 nodes

Splits: 1,705 total, 1,705 done (100.00%)

3:23 [2.88B rows, 38.2GB] [14.2M rows/s, 193MB/s]

This seems like an unreasonable jump in execution time. I would hope that other columns are read only when necessary.

Now when I run this query, which selects no rows, this also takes 3+ minutes.

presto:default> select * from tpcds_orc_1000.store_sales where ss_customer_sk<0;

ss_sold_date_sk | ss_sold_time_sk | ss_item_sk | ss_customer_sk | ss_cdemo_sk | ss_hdemo_sk | ss_addr_sk | ss_store_sk | ss_promo_sk | ss_ticket_number | ss_quantity | ss_w

-----------------+-----------------+------------+----------------+-------------+-------------+------------+-------------+-------------+------------------+-------------+-----

(0 rows)

Query 20140709_044512_00002_jbjbz, FINISHED, 12 nodes

Splits: 1,705 total, 1,705 done (100.00%)

3:11 [2.88B rows, 38.2GB] [15.1M rows/s, 205MB/s]

Dain Sundstrom

Jul 9, 2014, 8:55:39 PM7/9/14

to presto...@googlegroups.com

Yes.

There are two systems in the ORC code that can help with this.

First, the code can push down the "ss_customer_sk=100" predicate into the reader. The ORC file contains the min and max of every column for each 10k row group. Depending on the distribution of the "ss_customer_sk" column, you may be able to skip reading most files. For the TPC-H data, the push down doesn't work for any of the queries because the data generator uses a normal distribution and every 10k row group ends up containing the full range.
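To make the min/max idea concrete, here is a minimal sketch of row-group pruning for an equality predicate. The class names `RowGroupStats` and `RowGroupPruner` are made up for illustration and are not the classes used in the ORC branch; the sketch only assumes that each row group exposes a min and max for the filtered column.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical per-row-group statistics for one integer column. */
final class RowGroupStats {
    final long min;
    final long max;

    RowGroupStats(long min, long max) {
        this.min = min;
        this.max = max;
    }
}

final class RowGroupPruner {
    private RowGroupPruner() {}

    /** Returns the indexes of the row groups whose [min, max] range could contain the value. */
    static List<Integer> candidateRowGroups(List<RowGroupStats> stats, long value) {
        List<Integer> candidates = new ArrayList<>();
        for (int i = 0; i < stats.size(); i++) {
            RowGroupStats s = stats.get(i);
            if (s.min <= value && value <= s.max) {
                candidates.add(i); // the value may be present, so this group must be read
            }
        }
        return candidates;
    }
}
```

If the column is clustered, most row groups fall outside the range and are skipped. If every row group spans the full range, as described above for the generated data, every group is a candidate and nothing is saved.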

The second system in the new ORC reader code is lazy block decoding. This delays the decoding of the columns until the data is actually used in the query. For this query, you would only decode the "ss_customer_sk" column. The current code is only a first step, as it will still transfer the data for all of the selected columns, meaning you save on CPU but not on network and disk IO. As part of future work, I'd like to predictively pull in the columns we expect to be used eagerly and the rest lazily.
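As a rough illustration of lazy decoding (not the actual reader code), the sketch below wraps a column in a block that decodes its values only on first access. `LazyBlock` and its `Supplier`-based decoder are assumptions made for this example.

```java
import java.util.function.Supplier;

/** A column block whose values are decoded only when first accessed. */
final class LazyBlock {
    private final Supplier<long[]> decoder;
    private long[] values; // stays null until the first access

    LazyBlock(Supplier<long[]> decoder) {
        this.decoder = decoder;
    }

    long get(int position) {
        if (values == null) {
            values = decoder.get(); // pay the decoding cost once, on demand
        }
        return values[position];
    }

    boolean isDecoded() {
        return values != null;
    }
}
```

A filter that touches only the "ss_customer_sk" block would leave every other block undecoded unless a row actually matches and the remaining columns are needed for output.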

In the meantime, RCFile uses lazy loading, so this query should be fast in that format.

-dain

Sivaramakrishnan Narayanan

Jul 9, 2014, 11:31:28 PM7/9/14

to presto...@googlegroups.com, Shubham Tagra

Perfect - these are exactly the things we are looking for! We'll test out the branch and come back with any questions. BTW, last time we checked, the branch depended on an airlift snapshot version which wasn't in the maven repository - any suggestions?

--

You received this message because you are subscribed to the Google Groups "Presto" group.

To unsubscribe from this group and stop receiving emails from it, send an email to presto-users...@googlegroups.com.

For more options, visit https://groups.google.com/d/optout.

Dain Sundstrom

Jul 10, 2014, 1:10:21 AM7/10/14

to presto...@googlegroups.com, sta...@qubole.com

I believe I have pushed snapshots for everything, but if you run into a missing one, you can check out and build the jars using: https://github.com/airlift/ Also, let me know so I can update the snaps.

-dain

Sivaramakrishnan Narayanan

Jul 10, 2014, 2:33:29 AM7/10/14

to presto...@googlegroups.com

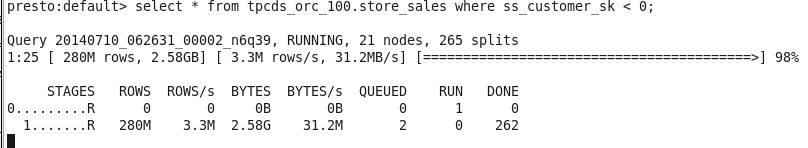

One other perf issue I noticed recently.

This happens very consistently. There are one or two splits that are queued while there are idle nodes. The splits eventually finish, but they hold up the entire query's execution. Here's a snapshot. Notice that there are 21 nodes, 0 running and 2 queued splits. This smells like a bug of some sort - have you seen this happen?

coordinator=true

datasources=jmx,hive

http-server.http.port=8081

discovery-server.enabled=true

discovery.uri=http://localhost:8411

node-scheduler.include-coordinator=false

task.max-memory=3000MB

task.shard.max-threads=4

query.schedule-split-batch-size=3000

query.max-pending-splits-per-node=100

Siva

Dain Sundstrom

Jul 10, 2014, 3:06:26 AM7/10/14

to presto...@googlegroups.com

Definitely looks like a bug. Given that it is reporting nothing is running on the worker, my guess is the coordinator is having trouble sending the splits to the worker. I would look to see if the worker is getting PUT requests in the HTTP log (and of course look for error messages in the logs). The JMX stats are also helpful for debugging. They can tell you what the task executor on the worker believes is pending. If none of that works, and you can't reproduce on a machine with a debugger, I would grab a heap dump and use YourKit to see the real state of the schedulers on the coordinator and worker (the trick in YourKit is to search for the task id in strings).

-dain

Shubham Tagra

Jul 11, 2014, 7:58:48 AM7/11/14

to presto...@googlegroups.com

Dain, I am testing out your changes and here are some observations:

- I can see a definite improvement in case of hdfs but the performance degrades by a great factor in case of s3.

- Does not work with orc files that were created via hive.11

- This is just to confirm, is the predicate pushdown to reader already implemented? From a glance of your changes I did not see it while creating the reader.

-- - Shubham

Dain Sundstrom

Jul 11, 2014, 12:30:15 PM7/11/14

to presto...@googlegroups.com

On Friday, July 11, 2014 4:58:48 AM UTC-7, Shubham Tagra wrote:

> Dain, I am testing out your changes and here are some observations:

> - I can see a definite improvement in case of hdfs but the performance degrades by a great factor in case of s3.

How are you measuring performance, and what are you using for the baseline? RCFile? Existing ORC?

My guess is the read shape is making S3 perform poorly. The way ORC works is we advance through the file in 10k row chunks. For each 10k chunk we determine which streams are needed and fetch all of them. Depending on how the ORC file is laid out, this could be one large read or many small reads. I have very little experience tuning S3. Can you describe how the system works, what is fast/slow, and how you work around these? Once I understand these, I can tweak the heuristics. For example, in ORC you can end up with a read plan that looks like: read x MB, skip y MB, read z MB. Should we consolidate that into 1 read? Should we read these in parallel? If we are doing parallel, at what size should we split a read chunk into two parallel reads?
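One way to frame the "read x MB, skip y MB, read z MB" question is as a merge of nearby byte ranges. The sketch below is only an illustration under assumed names (`ByteRange`, `ReadPlanner`, `maxGapBytes`); the right gap threshold is exactly the open tuning question and is not answered here.

```java
import java.util.ArrayList;
import java.util.List;

/** A contiguous region of a file: [offset, offset + length). */
final class ByteRange {
    final long offset;
    final long length;

    ByteRange(long offset, long length) {
        this.offset = offset;
        this.length = length;
    }

    long end() {
        return offset + length;
    }
}

final class ReadPlanner {
    private ReadPlanner() {}

    /**
     * Merges neighbouring ranges when the gap between them is at most maxGapBytes.
     * Expects the input to be sorted by offset.
     */
    static List<ByteRange> consolidate(List<ByteRange> sortedRanges, long maxGapBytes) {
        List<ByteRange> merged = new ArrayList<>();
        for (ByteRange next : sortedRanges) {
            if (!merged.isEmpty()) {
                ByteRange last = merged.get(merged.size() - 1);
                if (next.offset - last.end() <= maxGapBytes) {
                    // Reading through the gap is assumed cheaper than a separate read
                    long end = Math.max(last.end(), next.end());
                    merged.set(merged.size() - 1, new ByteRange(last.offset, end - last.offset));
                    continue;
                }
            }
            merged.add(next);
        }
        return merged;
    }
}
```

A larger `maxGapBytes` trades wasted bytes for fewer round trips, which may suit a high-latency store; a smaller value keeps reads tight, which may suit local disks.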

> - Does not work with orc files that were created via hive.11

I tested the code by writing files with "hive.exec.orc.write.format=11", so there must be something different about the writers. I don't have a Hive 11 installation, so can you send me a small data set in ORC 11 and something like CSV?

> - This is just to confirm, is the predicate pushdown to reader already implemented? From a glance of your changes I did not see it while creating the reader.

When creating the OrcDataStream in https://github.com/dain/presto/blob/custom-orc/presto-hive/src/main/java/com/facebook/presto/hive/OrcDataStreamFactory.java , the tuple domain is passed in. This is used to prune the segments. This code doesn't use any of the pruning logic from the Hive code (it seems to be pretty slow and buggy).

-dain

Shubham Tagra

Jul 14, 2014, 8:58:40 AM7/14/14

to presto...@googlegroups.com

On 11-07-2014 22:00, Dain Sundstrom wrote:

> On Friday, July 11, 2014 4:58:48 AM UTC-7, Shubham Tagra wrote:

> > Dain, I am testing out your changes and here are some observations:

> > - I can see a definite improvement in case of hdfs but the performance degrades by a great factor in case of s3.

> How are you measuring performance, and what are you using for the baseline? RCFile? Existing ORC?

Against existing ORC.

> My guess is the read shape is making S3 perform poorly. The way ORC works is we advance through the file in 10k row chunks. For each 10k chunk we determine which streams are needed and fetch all of them. Depending on how the ORC file is laid out, this could be one large read or many small reads. I have very little experience tuning S3. Can you describe how the system works, what is fast/slow, and how you work around these? Once I understand these, I can tweak the heuristics. For example, in ORC you can end up with a read plan that looks like: read x MB, skip y MB, read z MB. Should we consolidate that into 1 read? Should we read these in parallel? If we are doing parallel, at what size should we split a read chunk into two parallel reads?

I ran some experiments on a small dataset: count(*) takes ~5sec on hdfs but ~30sec against s3.

I collected some data around it, and it is the seeks that are killing the performance. Seeks are quite costly in s3; in this case we spent the majority of the time in seek calls.

> > - Does not work with orc files that were created via hive.11

> I tested the code by writing files with "hive.exec.orc.write.format=11", so there must be something different about the writers. I don't have a Hive 11 installation, so can you send me a small data set in ORC 11 and something like CSV?

Attaching a file with a small ORC dataset created in hive.11, and the DDL as well. The error occurs because StripeStatistics is not defined in hive.11.

> > - This is just to confirm, is the predicate pushdown to reader already implemented? From a glance of your changes I did not see it while creating the reader.

> When creating the OrcDataStream in https://github.com/dain/presto/blob/custom-orc/presto-hive/src/main/java/com/facebook/presto/hive/OrcDataStreamFactory.java , the tuple domain is passed in. This is used to prune the segments. This code doesn't use any of the pruning logic from the Hive code (it seems to be pretty slow and buggy).

But I could see the domain set according to the filter clause. Maybe I have your old branch; I will resync and check.

> -dain

-- - Shubham

to...@avocet.io

Jul 14, 2014, 1:36:45 PM7/14/14

to presto...@googlegroups.com

On Friday, July 11, 2014 5:30:15 PM UTC+1, Dain Sundstrom wrote:

> My guess is the read shape are making s3 perform poorly. The way ORC

> works is we advance through the file in 10k row chunks. For each 10k

> chunk we determine which streams are needed and fetch all of them.

> Depending on how the ORC file is laid out, this could be one large read

> or many small reads. I have very little experience tuning S3, can you

> describe how the system works, what is fast/slow and how you work around

> these.

I'm a big-data back-end engineer and have been using s3 in this way for about two years. I'm no expert, and I've not particularly investigated s3, but I can't help but have picked up some knowledge on the way.

So take this with a pinch of salt and do some serious investigation of your own.

An individual download connection will only run at about 1 megabyte/second. However, s3 scales completely - the aggregate bandwidth will certainly saturate your network card, and we're talking about a 10 GBit network card here. As such, run as many parallel connections as you can. OTOH, I think that testing was done with many files, not one file with many connections. Ultimately the files are on hard disks - but there are multiple copies - so in the end, it could be the equivalent of a hard disk with, say, three drives and RAID 1 (full mirror).
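To illustrate the "many parallel connections" advice, here is a minimal sketch that splits one byte range into chunks and fetches them concurrently. `RangeFetcher` is a hypothetical stand-in for "open a connection and read this range"; it is not an S3 client API, and the chunk size and parallelism are placeholders that would need measurement.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Hypothetical stand-in for fetching [offset, offset + length) over its own connection. */
interface RangeFetcher {
    byte[] fetch(long offset, int length) throws Exception;
}

final class ParallelRangeReader {
    private ParallelRangeReader() {}

    /** Fetches the range in chunkSize pieces using up to parallelism concurrent fetches. */
    static byte[] read(RangeFetcher fetcher, long offset, int length, int chunkSize, int parallelism)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(parallelism);
        try {
            List<Future<byte[]>> parts = new ArrayList<>();
            for (int start = 0; start < length; start += chunkSize) {
                final long chunkOffset = offset + start;
                final int chunkLength = Math.min(chunkSize, length - start);
                parts.add(pool.submit(() -> fetcher.fetch(chunkOffset, chunkLength)));
            }
            // Reassemble the chunks in submission order
            byte[] result = new byte[length];
            int position = 0;
            for (Future<byte[]> part : parts) {
                byte[] bytes = part.get();
                System.arraycopy(bytes, 0, result, position, bytes.length);
                position += bytes.length;
            }
            return result;
        } finally {
            pool.shutdown();
        }
    }
}
```

A real reader would likely share one pool across reads rather than create one per call; the per-call pool here just keeps the example self-contained.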

However - Hive will of course let you bucket to your heart's content, and I think in s3 each bucket file will be hashed to a different set of three hard disks. Ditto for all partitions. If users by these means have lots of individual files, you could get some astounding aggregate bandwidth going on.

One significant issue with s3 is the time taken for "directory listing". If you have a bucket with say 15k files in the root, you're looking at like a minute to get a full list. I have no idea to what extent you'd need this kind of information in Presto or Hive, though, rather than just accessing files directly.

Individual connection set-up time is going to be quite long, I think, but I don't know this for sure by any means.

Feel free to email me if you need to.

Shubham Tagra

Jul 14, 2014, 2:22:24 PM7/14/14

to presto...@googlegroups.com

Correction to my last statement: I meant to write "I could not see the domain set according to the filter clause."

-- - Shubham

Dain Sundstrom

Jul 14, 2014, 3:11:25 PM7/14/14

to presto...@googlegroups.com

On Monday, July 14, 2014 5:58:40 AM UTC-7, Shubham Tagra wrote:

> On 11-07-2014 22:00, Dain Sundstrom wrote:

> > On Friday, July 11, 2014 4:58:48 AM UTC-7, Shubham Tagra wrote:

> > > Dain, I am testing out your changes and here are some observations:

> > > - I can see a definite improvement in case of hdfs but the performance degrades by a great factor in case of s3.

> > How are you measuring performance, and what are you using for the baseline? RCFile? Existing ORC?

> Against existing ORC

> > My guess is the read shape is making S3 perform poorly. The way ORC works is we advance through the file in 10k row chunks. For each 10k chunk we determine which streams are needed and fetch all of them. Depending on how the ORC file is laid out, this could be one large read or many small reads. I have very little experience tuning S3. Can you describe how the system works, what is fast/slow, and how you work around these? Once I understand these, I can tweak the heuristics. For example, in ORC you can end up with a read plan that looks like: read x MB, skip y MB, read z MB. Should we consolidate that into 1 read? Should we read these in parallel? If we are doing parallel, at what size should we split a read chunk into two parallel reads?

> I ran some experiments on small dataset which takes ~5sec for count(*) in hdfs while ~30sec for count(*) against s3.

> Collected some data around it and it is the seeks which are killing the performance. Seeks are quite costly in s3, in this case we spent majority of the time in seek calls.

Interesting. Count(*) is not a great test case as in Presto it can be satisfied completely using stripe/file metadata (I'm not sure I added this optimization in).

So say we are running a query that only needs data from one column, like SELECT stddev(x) FROM y. If y has at least two columns, we will end up with reads that look like:

...xxx.....xxx.....xxxx...

Currently, the code sends the following commands to HDFS:

    open(pos)
    read(length)
    seek(length)
    read(length)
    seek(length)
    read(length)
    close();

IIRC the current S3 file system has:

    if ((in != null) && (pos > position)) {
        // seeking forwards
        long skip = pos - position;
        if (skip <= max(in.available(), MAX_SKIP_SIZE.toBytes())) {
            // already buffered or seek is small enough
            if (in.skip(skip) == skip) {
                position = pos;
                return;
            }
        }
    }

If the code falls out of the if statement, it closes the current stream and opens a new one. MAX_SKIP_SIZE is 1 MB. Should we reduce or increase that size? In your test case if the table is really wide, it might be opening a new connection for each segment read.

Does anyone know the relative latency of opening a new connection to S3 vs the latency of transferring 1 MB?
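A back-of-the-envelope way to think about that question: skipping forward by reading and discarding bytes costs roughly skipBytes / throughput, while reopening costs roughly one connection setup. The sketch below computes the break-even skip size under that simple model. The class name and the numbers in the usage note are placeholders for illustration, not measurements from this thread.

```java
final class SkipOrReopen {
    private SkipOrReopen() {}

    /**
     * Largest skip (in bytes) for which reading through the gap is no slower than
     * reopening, assuming cost(skip) = bytes / throughput and cost(reopen) = latency.
     */
    static long breakEvenSkipBytes(long connectLatencyMillis, long throughputBytesPerSecond) {
        return connectLatencyMillis * throughputBytesPerSecond / 1000;
    }

    /** True when skipping within the open stream is expected to be at least as fast. */
    static boolean shouldSkip(long skipBytes, long connectLatencyMillis, long throughputBytesPerSecond) {
        return skipBytes <= breakEvenSkipBytes(connectLatencyMillis, throughputBytesPerSecond);
    }
}
```

For example, with an assumed 50 ms setup time and an assumed 20,000,000 bytes/second of per-connection throughput, the break-even is 1,000,000 bytes; with slower connections the break-even shrinks proportionally, which would argue for a smaller MAX_SKIP_SIZE.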

> > - Does not work with orc files that were created via hive.11

> > I tested the code by writing files with "hive.exec.orc.write.format=11", so there must be something different about the writers. I don't have a Hive 11 installation, so can you send me a small data set in ORC 11 and something like CSV?

> Attaching a file for small orc dataset created in hive.11, attached ddl as well. Error is occurring because StripeStatistics not defined in hive.11

Should be an easy fix. Thanks.

> > - This is just to confirm, is the predicate pushdown to reader already implemented? From a glance of your changes I did not see it while creating the reader.

> > When creating the OrcDataStream in https://github.com/dain/presto/blob/custom-orc/presto-hive/src/main/java/com/facebook/presto/hive/OrcDataStreamFactory.java , the tuple domain is passed in. This is used to prune the segments. This code doesn't use any of the pruning logic from the Hive code (it seems to be pretty slow and buggy).

> But I could see the domain set according to the filter clause. Maybe I have your old branch, will resync and check

On Monday, July 14, 2014 11:22:24 AM UTC-7, Shubham Tagra wrote:

Correction in last statement, meant to write "I could not see the domain set according to the filter clause."

The code should be there in the main Hive connector code. I'll double check to see if I missed something, but you get the idea of what I am doing.

-dain

Dain Sundstrom

Jul 14, 2014, 3:14:20 PM7/14/14

to presto...@googlegroups.com, to...@avocet.io

Interesting. My plan is to replace the current code in my ORC branch with one that performs the reads in parallel and to possibly predict the next reads and read ahead. Sounds like that will work really well with S3.

Do you know how long it takes to establish a new S3 connection? If it takes a long time, is there any way to speed up this process or to cache and reuse the connections?

-dain

Sivaramakrishnan Narayanan

Jul 14, 2014, 11:05:32 PM7/14/14

to presto...@googlegroups.com, Toby Douglass

We use jets3t and we've found establishing a connection can take ~500ms to even a second sometimes. You might find these posts useful - we've detailed some of the optimizations we've done for Hadoop/Hive + S3:

http://www.quora.com/How-does-Qubole-improve-S3-performance

http://qubole-eng.quora.com/Optimizing-Hadoop-for-S3

BTW, one thing to note is that S3 punishes you for opening too many connections too often, and we've seen Presto hit that already. S3 also punishes you for keeping a connection open for too long without reading. We're happy to help out with S3-specific stuff, as that is our bread-and-butter use case.

Sivaramakrishnan Narayanan

Jul 14, 2014, 11:09:05 PM7/14/14

to presto...@googlegroups.com, Toby Douglass

Sorry, I meant 50ms to open a connection, not 500ms... but I have seen 1s occasionally.

Toby Douglass

Jul 15, 2014, 7:19:55 AM7/15/14

to Sivaramakrishnan Narayanan, presto...@googlegroups.com

On Tue, Jul 15, 2014 at 4:09 AM, Sivaramakrishnan Narayanan <snara...@qubole.com> wrote:

> Sorry I meant 50ms to open a connection, not 500ms.. but I have seen 1s occasionally.

Right - but exceptional very long delays are typical of anything over the network.

The info about s3 responding to too many connections is fascinating! I can certainly see Amazon degrading service to connections which are not reading; it doesn't earn them money. They don't charge per second of connection, only on bytes.

Shubham Tagra

Jul 15, 2014, 11:13:50 AM7/15/14

to presto...@googlegroups.com

We are using jets3t, and the seek() method there is the cause of all the slowness: it closes the existing stream and opens a new one irrespective of the position being sought.

PrestoS3FileSystem's seek() handles the case where the position is already where we want it, and should work fine.

-- - Shubham

Dain Sundstrom

Jul 16, 2014, 2:18:05 PM7/16/14

to presto...@googlegroups.com

Would it be easy to repeat your test using PrestoS3FileSystem?

-dain

Shubham Tagra

Jul 17, 2014, 8:10:35 AM7/17/14

to presto...@googlegroups.com

Repeated with PrestoS3FileSystem. Performance was again bad: it took a long time for the query which runs in 30sec with the changes to jets3t in our system.

I think the root cause is still the same. This is the method that readFully eventually calls in a while loop:

    public int read(long position, byte[] buffer, int offset, int length)
            throws IOException {
        synchronized (this) {
            long oldPos = getPos();
            int nread = -1;
            try {
                seek(position);
                nread = read(buffer, offset, length);
            } finally {
                seek(oldPos);
            }
            return nread;
        }
    }

The seek(oldPos) in the finally block always resets the stream back to where it started.
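To show why the restoring seek hurts, here is a small self-contained model (not the Hadoop or Presto classes) that counts how often the stream has to be repositioned. `SeekCountingStream` and both read methods are hypothetical names; on S3 each repositioning may mean closing and reopening the connection.

```java
/** In-memory model of a seekable stream that counts repositioning operations. */
final class SeekCountingStream {
    private final byte[] data;
    private long position;
    private int repositionCount;

    SeekCountingStream(byte[] data) {
        this.data = data;
    }

    void seek(long pos) {
        if (pos != position) {
            repositionCount++; // on S3 this may mean a new connection
            position = pos;
        }
    }

    int read(byte[] buffer, int offset, int length) {
        int n = (int) Math.min(length, data.length - position);
        if (n <= 0) {
            return -1;
        }
        System.arraycopy(data, (int) position, buffer, offset, n);
        position += n;
        return n;
    }

    /** Positional read that restores the previous position, as in the method quoted above. */
    int readAndRestore(long pos, byte[] buffer, int offset, int length) {
        long oldPos = position;
        try {
            seek(pos);
            return read(buffer, offset, length);
        } finally {
            seek(oldPos);
        }
    }

    /** Positional read that leaves the stream where the read ended. */
    int readAndStay(long pos, byte[] buffer, int offset, int length) {
        seek(pos);
        return read(buffer, offset, length);
    }

    int repositionCount() {
        return repositionCount;
    }
}
```

Three back-to-back 10-byte reads at offsets 0, 10 and 20 reposition the restoring variant five times and the non-restoring variant not at all. Whether dropping the restore is safe depends on whether any caller relies on the position being preserved.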

-- - Shubham

Dain Sundstrom

Jul 17, 2014, 12:50:59 PM7/17/14

to presto...@googlegroups.com

Thanks! I’ll remove that.

-dain

Robin Verlangen

Jul 22, 2014, 4:42:01 PM7/22/14

to presto...@googlegroups.com

This article might be interesting, not really in-depth but might be the right direction for running on S3: http://www.qubole.com/optimizing-hadoop-for-s3-part-1/

Best regards,

Robin Verlangen

Chief Data Architect

Sivaramakrishnan Narayanan

Aug 14, 2014, 9:56:23 AM8/14/14

to presto...@googlegroups.com

As an update - we've been using your ORC changes successfully for the last few days. Things seem to be generally good. One thing though: when selecting many columns, we're seeing some pretty large s3 penalties - I'm guessing it's seeks. There's probably an optimization in there where, if you're reading more than x% of the columns, you might as well read the stripe sequentially.
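The "more than x%" idea could be expressed as a simple threshold check. This sketch measures the fraction in bytes rather than in column count, which is an assumption on my part, and `StripeReadStrategy` and `threshold` are illustrative names only.

```java
final class StripeReadStrategy {
    private StripeReadStrategy() {}

    /**
     * Returns true when the selected streams cover at least the given fraction of the
     * stripe, in which case one sequential read of the whole stripe avoids the seeks.
     */
    static boolean readStripeSequentially(long selectedBytes, long stripeBytes, double threshold) {
        if (stripeBytes <= 0) {
            return false; // nothing to read, so no reason to switch strategy
        }
        return (double) selectedBytes / stripeBytes >= threshold;
    }
}
```

The threshold itself would need tuning per storage system, since the cost of a seek differs so much between HDFS and S3.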

Sivaramakrishnan Narayanan

Aug 14, 2014, 12:47:35 PM8/14/14

to presto...@googlegroups.com

Ran into an issue reading ORC from S3 - after running a query in a loop, I started seeing "Too many open files" exception. Also noticed that many TCP connections with S3 were in ESTABLISHED and CLOSE_WAIT long after the query finished. This patch seemed to fix it for me: it just closes a couple of streams opened by OrcReader. I wasn't able to reproduce the issue anymore.

--Siva

    diff --git a/presto-hive/src/main/java/com/facebook/presto/hive/orc/OrcReader.java b/presto-hive/src/main/java/com/facebook/presto/hive/orc/OrcReader.java
    index b05f4fb..8e95245 100644
    --- a/presto-hive/src/main/java/com/facebook/presto/hive/orc/OrcReader.java
    +++ b/presto-hive/src/main/java/com/facebook/presto/hive/orc/OrcReader.java
    @@ -129,11 +129,15 @@ public class OrcReader
             Slice metadataSlice = completeFooterSlice.slice(0, metadataSize);
             InputStream metadataInputStream = new OrcInputStream(metadataSlice.getInput(), compressionKind, bufferSize);
             this.metadata = Metadata.parseFrom(metadataInputStream);
    +        metadataInputStream.close();

             // read footer
             Slice footerSlice = completeFooterSlice.slice(metadataSize, footerSize);
             InputStream footerInputStream = new OrcInputStream(footerSlice.getInput(), compressionKind, bufferSize);
             this.footer = Footer.parseFrom(footerInputStream);
    +        footerInputStream.close();
    +
    +        file.close();
         }
     }
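One possible variation on the patch above, sketched with hypothetical names: the explicit close() calls are skipped if parsing throws, whereas try-with-resources closes the stream on both the success and the failure path. `FooterParsing` and its `Parser` interface stand in for the Metadata.parseFrom / Footer.parseFrom calls and are not part of the branch.

```java
import java.io.IOException;
import java.io.InputStream;

final class FooterParsing {
    private FooterParsing() {}

    /** Hypothetical stand-in for a parseFrom(InputStream) style method. */
    interface Parser<T> {
        T parse(InputStream in) throws IOException;
    }

    /** Parses the stream and closes it afterwards, even if parsing throws. */
    static <T> T parseAndClose(InputStream in, Parser<T> parser) throws IOException {
        try (InputStream stream = in) {
            return parser.parse(stream);
        }
    }
}
```

The same pattern would apply to the underlying file handle, assuming nothing else needs it after the footer has been read.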

Zhenxiao Luo

Aug 14, 2014, 5:47:25 PM8/14/14

to presto...@googlegroups.com

Hi Dain and Siva,

Did you see this problem when running Presto on OrcFile?

I am running a simple select count(*), and get:

java.lang.IndexOutOfBoundsException: Index: 0

at java.util.Collections$EmptyList.get(Collections.java:3212)

at com.facebook.presto.hive.orc.OrcRecordReader.<init>(OrcRecordReader.java:97)

at com.facebook.presto.hive.orc.OrcReader.createRecordReader(OrcReader.java:153)

at com.facebook.presto.hive.OrcDataStreamFactory.createNewDataStream(OrcDataStreamFactory.java:63)

at com.facebook.presto.hive.HiveDataStreamProvider.createNewDataStream(HiveDataStreamProvider.java:83)

at com.facebook.presto.hive.ClassLoaderSafeConnectorDataStreamProvider.createNewDataStream(ClassLoaderSafeConnectorDataStreamProvider.java:43)

at com.facebook.presto.split.DataStreamManager.createNewDataStream(DataStreamManager.java:57)

at com.facebook.presto.operator.TableScanOperator.addSplit(TableScanOperator.java:135)

at com.facebook.presto.operator.Driver.processNewSource(Driver.java:251)

at com.facebook.presto.operator.Driver.processNewSources(Driver.java:216)

at com.facebook.presto.operator.Driver.access$300(Driver.java:52)

at com.facebook.presto.operator.Driver$DriverLockResult.close(Driver.java:491)

at com.facebook.presto.operator.Driver.updateSource(Driver.java:194)

at com.facebook.presto.execution.SqlTaskExecution$DriverSplitRunnerFactory.createDriver(SqlTaskExecution.java:585)

at com.facebook.presto.execution.SqlTaskExecution$DriverSplitRunnerFactory.access$1700(SqlTaskExecution.java:552)

at com.facebook.presto.execution.SqlTaskExecution$DriverSplitRunner.processFor(SqlTaskExecution.java:673)

at com.facebook.presto.execution.TaskExecutor$PrioritizedSplitRunner.process(TaskExecutor.java:444)

at com.facebook.presto.execution.TaskExecutor$Runner.run(TaskExecutor.java:578)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:744)

stripeStats is empty when reading this ORCFile.

Thanks,

Zhenxiao

Sivaramakrishnan Narayanan

Aug 14, 2014, 9:05:41 PM8/14/14

to presto...@googlegroups.com

ORC files created from hive 0.11 have empty stripe stats. We also found that tupledomains weren't being passed in - and that had a perf impact. I'll send you a patch with our changes in a few hours.
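A minimal sketch of the kind of guard that avoids the IndexOutOfBoundsException reported above: when a file carries no stripe statistics, pruning is skipped and the stripe is read. `StripePruning` and the `long[] {min, max}` representation are assumptions for illustration, not the patch being referred to.

```java
import java.util.List;

final class StripePruning {
    private StripePruning() {}

    /**
     * Decides whether a stripe must be read for an equality predicate on one column.
     * Each entry of stripeMinMax is {min, max}; the list may be empty or shorter than
     * the stripe count when the writer did not record statistics.
     */
    static boolean shouldReadStripe(List<long[]> stripeMinMax, int stripeIndex, long value) {
        if (stripeIndex >= stripeMinMax.size()) {
            return true; // no statistics available, so the stripe cannot be pruned
        }
        long[] minMax = stripeMinMax.get(stripeIndex);
        return minMax[0] <= value && value <= minMax[1];
    }
}
```

Falling back to "read everything" keeps such files readable, at the cost of losing pruning for them.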

Zhenxiao Luo

Aug 14, 2014, 9:10:49 PM8/14/14

to presto...@googlegroups.com

So nice of you. Yes, the ORC table I created is from Hive 0.11, which has empty stripe stats.

I also tried creating a table using createTableAsSelect in Presto, by setting the default file storage as ORC, then hit this:

Query 20140815_005618_00020_bmwme failed: loader constraint violation in interface itable initialization: when resolving method "com.facebook.presto.hive.OrcDataStream.isBlocked()Lcom/google/common/util/concurrent/ListenableFuture;" the class loader (instance of com/facebook/presto/server/PluginManager$SimpleChildFirstClassLoader) of the current class, com/facebook/presto/hive/OrcDataStream, and the class loader (instance of sun/misc/Launcher$AppClassLoader) for interface com/facebook/presto/operator/Operator have different Class objects for the type mmon/util/concurrent/ListenableFuture; used in the signature

java.lang.LinkageError: loader constraint violation in interface itable initialization: when resolving method "com.facebook.presto.hive.OrcDataStream.isBlocked()Lcom/google/common/util/concurrent/ListenableFuture;" the class loader (instance of com/facebook/presto/server/PluginManager$SimpleChildFirstClassLoader) of the current class, com/facebook/presto/hive/OrcDataStream, and the class loader (instance of sun/misc/Launcher$AppClassLoader) for interface com/facebook/presto/operator/Operator have different Class objects for the type mmon/util/concurrent/ListenableFuture; used in the signature

Did you see this before?

Thanks a lot,

Zhenxiao

Sivaramakrishnan Narayanan

Aug 14, 2014, 11:59:48 PM8/14/14

to presto...@googlegroups.com

Attaching two patches over Dain's ORC branch that we're using internally.

I did run into the problem you mentioned as well. I managed to get around the problem by excluding the guava and airlift dependencies from presto-hive. I'm not sure if it is the right solution, but it works :). Edit the dependency on presto-hive in presto-hive-cdh4/pom.xml (assuming you're using that one).

    <dependency>
        <groupId>com.facebook.presto</groupId>
        <artifactId>presto-hive</artifactId>
        <exclusions>
            <exclusion>
                <groupId>commons-logging</groupId>
                <artifactId>commons-logging</artifactId>
            </exclusion>
            <exclusion>
                <groupId>commons-codec</groupId>
                <artifactId>commons-codec</artifactId>
            </exclusion>
            <exclusion>
                <groupId>com.google.guava</groupId>
                <artifactId>guava</artifactId>
            </exclusion>
            <exclusion>
                <groupId>io.airlift</groupId>
                <artifactId>units</artifactId>
            </exclusion>
        </exclusions>
    </dependency>

Zhenxiao Luo

Aug 15, 2014, 3:09:02 AM8/15/14

to presto...@googlegroups.com

Thank you so much, Siva. Let me take a try.

Thanks,

Zhenxiao
