How to read all records from table(> 10 million records) and serve each record as chunk response

Sivakumar Raja

Controller:

@Transactional(readOnly=true)

public Result fetchAll() {

try {

final Iterator<String> sourceIterator = Summary.fetchAll();

response().setHeader("Content-disposition", "attachment; filename=Summary.csv");

Source<String, ?> s = Source.from(() -> sourceIterator);

return ok().chunked(s.via(Flow.of(String.class).map(i -> ByteString.fromString(i+"\n")))).as(Http.MimeTypes.TEXT);

} catch (Exception e) {

return badRequest(e.getMessage());

}

}

Service:

public static Iterator<String> fetchAll() {

StatelessSession session = ((Session) JPA.em().getDelegate()).getSessionFactory().openStatelessSession();

org.hibernate.Query query = session.createQuery("select l.id from Summary l")

.setFetchSize(Integer.MIN_VALUE).setCacheable(false).setReadOnly(true);

ScrollableResults results = query.scroll(ScrollMode.FORWARD_ONLY);

return new models.ScrollableResultIterator<>(results, String.class);

}

Iterator:

public class ScrollableResultIterator<T> implements Iterator<T> {

private final ScrollableResults results;

private final Class<T> type;

public ScrollableResultIterator(ScrollableResults results, Class<T> type) {

this.results = results;

this.type = type;

}

@Override

public boolean hasNext() {

return results.next();

}

@Override

public T next() {

return type.cast(results.get(0));

}

}For test purpose, i am having 1007 records in my table, whenever i call this end point, it always return only 503 records.

Enabled AKKA log level to DEBUG and tried it again, it logs the following line for 1007 times

2016-07-25 19:55:38 +0530 [DEBUG] from org.hibernate.loader.Loader in application-akka.actor.default-dispatcher-73 - Result row: From the log i confirm that it fetching all, but couldn't get where the remaining one got left.

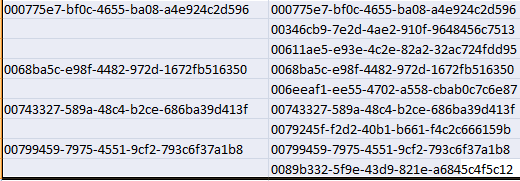

I run the same query in my workbench and i export it to a file locally and compared it with the file generated by the end point, kept LHS record generated from end point and RHS file exported from Workbench. First row matches, second and third didn't match. After that it got matches for alternate records until the end.

Please correct me, if am doing anything wrong and suggest me is this the correct approach for generating CSV for large db records.

Thanks,

Sivakumar