New blog post: A consulting POV: Stop thinking about Data Warehouses!

Pedro Alves

Jun 27, 2017, 10:25:14 AM

to pentaho-...@googlegroups.com

---------------------

A consulting POV: Stop thinking about Data Warehouses!

What I am writing here is the materialization of a line of thought that started bothering me a couple of years ago. While I implemented project after project, built ETLs, optimized reports and designed dashboards, I couldn’t help thinking that something didn’t quite make sense, but I couldn’t quite see what. When I tried to explain it to someone, I just got blank stares…

Eventually things started to make more sense to me (which is far from saying they actually make sense, as I’m fully aware my brain is, hum, let’s just say a little bit messed up!) and I ended up realizing that I’d been looking at the challenges from the wrong perspective. And while this may seem a very small change in mindset (especially if I fail to get the message across, which may very well happen), the implications are huge: not only did it change our methodology for implementing projects in our services teams, it’s also guiding Pentaho’s product development and vision.

A few years ago, in a blog post far, far away...

A couple of years ago I wrote a blog post called “Kimball is getting old”. It focused on one fundamental point: technology was evolving to a point where just looking at the concept of an enterprise data warehouse (EDW) seemed restrictive. After all, the end users care only about information; they couldn’t care less about what gets the numbers in front of them. So I proposed that we should apply a very critical eye to our problem, and that maybe, sometimes, Kimball’s DW, with its star schemas, snowflakes and all that jazz, wasn’t the best option and we should choose something else…

But I wasn’t completely right…

I’m still (more than ever?) a huge proponent of the top-down approach: focus on usability, focus on the needs of the user, provide him a great experience. All the rest follows. All of that is still spot on.

But I made 2 big mistakes:

1. I confused data modelling with data warehousing

2. I kept seeing data sources conceptually as the unified, monolithic source of every insight

Data Modelling – the semantics behind the data

Kimball was a bloody genius! My mistake here was actually due to the fact that he is way smarter than everyone else. Why do I say this? Because he didn’t come up with one, but with two groundbreaking ideas...

First, he realized that the value of data, business-wise, comes when we stop considering it as just zeros and ones and start treating it as business concepts. That’s what Data Modelling does: by adding semantics to raw data, it immediately gives the data a meaning that makes sense to a wide audience of people. And this is the part that I erroneously dismissed. This is still spot on! All his concepts of dimensions, hierarchies, levels and attributes are relevant first and foremost because that’s how people think.

And then, he immediately went prescriptive and told us how we could map those concepts to database tables and answer the business questions with relational database technology with concepts like star schemas, snowflake, different types of slowly changing dimensions, aggregation techniques, etc.

He did such a good job that he basically shaped how we worked. How many of us were involved in projects where we were told to build data warehouses to give all possible answers when we didn’t even know the questions? I’m betting a lot; I certainly did that. We were taught to provide answers without focusing on understanding the questions.

Project complexity is growing exponentially

Classically, a project implementation was simply about reporting on the past. We can’t do that anymore. If we want our project to succeed, it can’t just report on the past: it also has to describe the present and predict the future.

There’s also the explosion in the amount of data available.

IoT brought us an entire new set of devices that are generating data we can collect.

Social media and behavior analysis brought us closer to our users and customers.

In order to be impactful (regardless of how “impact” is defined), a BI project has to trigger operational actions: schedule maintenance, trigger alerts, prevent failures. So, bring on all those data scientists with their predictive and machine learning algorithms...

On top of that, in the past, we might have been successful at convincing our users that it’s perfectly reasonable to wait a couple of hours for that monthly sales report that processed a couple of gigabytes of data. We all know that’s changed; if they can search the entire internet in less than a second, why would they wait minutes for a “small” report? And let’s face it, they’re right…

The consequence? It’s getting much more complex to define, architect, implement, manage and support a project that needs more data, more people, more tools.

Am I making all of this sound like a bad thing? On the contrary! This is a great problem to have! In the past, BI systems were confined to delivering analytics. We’re now given the chance to have a much bigger impact in the world! Figuring this out is actually the only way forward for companies like Pentaho: We either succeed and grow, or we become irrelevant. And I certainly don’t want to become irrelevant!

IT’s version of Heisenberg’s Uncertainty Principle: Improving both speed and scalability??

So how do we do this?

My degree is actually in Physics (don’t pity me, it took me a while but I eventually moved away from that), and even though I’m a really crappy physicist, I do know some of the basics…

One of the best-known results in physics is Heisenberg’s Uncertainty Principle: you cannot know both the position and the momentum (loosely, the speed) of a (sub-)atomic particle with full precision. You can, however, know one of them precisely at the expense of the other.

I’m very aware this analogy is a little bit silly (to say the least), but it’s at least vivid enough in my mind to make me realize that in IT we can’t expect to solve both the speed and the scalability issue – at least not to a point where we have a one-size-fits-all approach.

There have been spectacular improvements in distributed computing technologies, but all of them have their pros and cons. The days when one database was good for all use cases are long gone.

So what do we do for a project where we effectively need to process a bunch of data and at the same time it has to be blazing fast? What technology do we choose?

Thinking “data sources” slightly differently

When we think about data sources, there are 2 traps most of us fall into:

1. We think of them as a monolithic entity (e.g. Sales, Human Resources, etc.) that holds all the information relevant to a topic

2. We think of them from a technology perspective

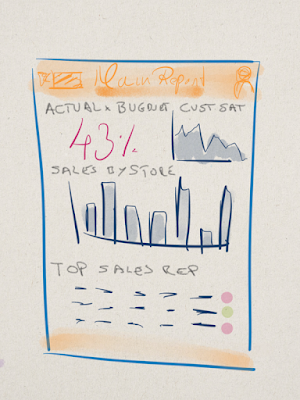

Let me try to explain this through an example. Imagine the following customer requirement, shown here in the format of a dashboard, although it could very well be any other delivery format (yeah, ’cause a dashboard, a report, a chart, whatever, is just the way we choose to deliver the information):

Pretty common, hum?

The classical approach

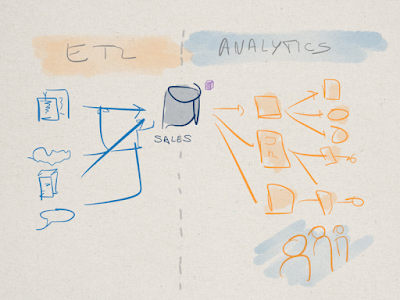

When thinking about this (common) scenario from the classical implementation perspective, the first instinct would be to start designing a data warehouse (it doesn’t even need to be an EDW per se; it could be Hadoop, a NoSQL source, etc.). We would build our ETL process (with PDI or whatever) from the source systems, and there would always be a modelling stage so we could get to our Sales data source that could answer all kinds of questions.

After that is done, we’d be able to write the necessary queries to generate the numbers our fictitious customer wants.

And after a while, we would implement a solution architecture diagram similar to this, that I’m sure looks very similar to everything we’ve all been doing in consulting:

Our customer gets the numbers he wants; he’s happy and successful. So successful that he expands, does a bunch of acquisitions, and gets so much data that our system starts to become slow. The sales “table” never stops growing. It’s a pain to do anything with it… Part of our dashboard takes a while to render… we’re able to optimize part of it, but other areas become slow.

In order to optimize the performance and allow the system to scale, we consider changing the technology: from relational databases to column-store databases, to NoSQL data stores, all the way to Hadoop, in a permanent effort to keep things scaling and fast…

The business’ approach

Let’s take a step back. Looking at our requirements, the main KPI the customer wants to know is:

How much did I sell yesterday and how is that compared to budget?

It’s one number he’s interested in.

Look at the other elements: he wants the top reps for the month. He wants a chart for the MTD sales. How many data points is that? 30 tops? I’m being simplistic on purpose, but the thing is that it is extremely stupid to force ourselves to always go through all the data when the vast majority of the questions aren’t a big data challenge in the first place. Answering them may need big data processing and orchestration, but certainly not at runtime.
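To make the "30 data points" argument concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `daily_totals` helper, the randomly generated transactions and the month chosen are hypothetical, and a real project would run this kind of roll-up in whatever batch tool it already uses. The point is only that the heavy pass over the raw data happens ahead of time, and the chart then reads a handful of values.

```python
from collections import defaultdict
from datetime import date
import random


def daily_totals(transactions):
    """Roll raw (day, amount) transactions up to one total per day."""
    totals = defaultdict(int)
    for day, amount in transactions:
        totals[day] += amount
    # Sort by day so the result can feed an MTD chart directly.
    return dict(sorted(totals.items()))


# Hypothetical raw data: 100,000 sales transactions spread over one month.
random.seed(0)
transactions = [
    (date(2017, 6, random.randint(1, 30)), random.randint(1, 500))
    for _ in range(100_000)
]

# Batch step, run ahead of time (not when the dashboard renders).
mtd_sales = daily_totals(transactions)

print(len(transactions), "raw rows ->", len(mtd_sales), "data points for the chart")
```

At render time the dashboard only needs `mtd_sales`, which can never hold more than one entry per day of the month.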

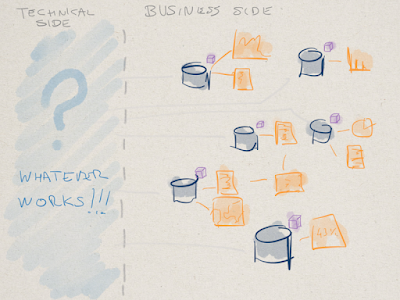

So here’s how I’d address this challenge

I would focus on the business question. I would not do a single Sales data source. Instead, I’d define the following Business Data Sources (sorry, I’m not very good at naming stuff...), and I’d force myself to define them in a way where each of them contains (or outputs) a small set of data (a few million records at most):

· ActualVsBudgetThisMonth

· CustomerSatByDayAndStore

· SalesByStore

· SalesRepsPerformance

Then I’d implement these however I needed! Materialized, unmaterialized, database or Hadoop, whatever worked. But through this exercise we define a clear separation between where all the data is and the most common questions we need to answer in a very fast way.
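As a rough illustration of that separation, the sketch below derives three of the Business Data Sources named above from one raw fact set. The rows, the budget figure and the `build_*` helper names are all made up for the example, and plain Python stands in for whatever technology would really hold the data.

```python
from collections import defaultdict

# Hypothetical raw fact rows: (store, sales_rep, amount).
# In a real project this is the big store that keeps growing.
RAW_SALES = [
    ("Lisbon", "Ana", 120),
    ("Lisbon", "Rui", 80),
    ("Porto", "Ana", 200),
    ("Porto", "Marta", 50),
    ("Lisbon", "Marta", 300),
]


def build_sales_by_store(rows):
    """One total per store."""
    totals = defaultdict(int)
    for store, _rep, amount in rows:
        totals[store] += amount
    return dict(totals)


def build_sales_reps_performance(rows, top_n=3):
    """Top reps by total sales, best first."""
    totals = defaultdict(int)
    for _store, rep, amount in rows:
        totals[rep] += amount
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


def build_actual_vs_budget(rows, budget):
    """A single actual-versus-budget record."""
    actual = sum(amount for _store, _rep, amount in rows)
    return {"actual": actual, "budget": budget, "variance": actual - budget}


# Each business data source is small and answers one known question.
business_data_sources = {
    "SalesByStore": build_sales_by_store(RAW_SALES),
    "SalesRepsPerformance": build_sales_reps_performance(RAW_SALES),
    "ActualVsBudgetThisMonth": build_actual_vs_budget(RAW_SALES, budget=700),
}

for name, data in business_data_sources.items():
    print(name, data)
```

The dashboard would read only from `business_data_sources`; `RAW_SALES` stays available for anyone who needs to explore questions nobody has asked yet.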

Does something like this give us the liberty to answer all the questions? Absolutely not! But, at least for me, it doesn’t make a lot of sense to optimize a solution to give answers when I don’t even know what the questions are. And the big data store is still there somewhere for the data scientists to play with.

Like I said, while the differences may seem very subtle at first, here are some advantages I found of thinking through solution architecture this way:

· Faster to implement – since our business data sources’ signature is much smaller and well identified, it’s much easier to fill in the blanks

· Easier to validate – since the data sources are smaller, they are easier to validate with the business stakeholders as we lock them down and move on to other business data sources

· Technology agnostic – note that at no point did I mention technology choices. Think of these data sources as an API

· Easier to optimize – since we split one big data source into multiple smaller ones, they become easier to maintain, support and optimize
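One way to picture the "data source as an API" idea from the list above is the following Python sketch. It is only an assumption about how such a contract could look: the `SalesByStore` protocol, both backend classes and `render_store_widget` are hypothetical names, not something Pentaho or PDI provides.

```python
from __future__ import annotations

from typing import Protocol


class SalesByStore(Protocol):
    """The contract the dashboard depends on: store name -> total sales."""

    def fetch(self) -> dict[str, int]: ...


class MaterializedSalesByStore:
    """Serves a result that a batch job computed earlier."""

    def __init__(self, snapshot: dict[str, int]) -> None:
        self._snapshot = dict(snapshot)

    def fetch(self) -> dict[str, int]:
        return dict(self._snapshot)


class OnDemandSalesByStore:
    """Aggregates raw (store, amount) rows every time it is called."""

    def __init__(self, rows: list[tuple[str, int]]) -> None:
        self._rows = rows

    def fetch(self) -> dict[str, int]:
        totals: dict[str, int] = {}
        for store, amount in self._rows:
            totals[store] = totals.get(store, 0) + amount
        return totals


def render_store_widget(source: SalesByStore) -> list[str]:
    """The consumer only knows the contract, not the technology behind it."""
    return [f"{store}: {total}" for store, total in sorted(source.fetch().items())]


rows = [("Lisbon", 500), ("Porto", 100), ("Porto", 150)]
on_demand = OnDemandSalesByStore(rows)
materialized = MaterializedSalesByStore({"Lisbon": 500, "Porto": 250})

print(render_store_widget(on_demand))
print(render_store_widget(materialized))
```

Swapping one backend for the other changes nothing for the widget, which is the sense in which the data source stays technology agnostic and can be optimized on its own.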

Concluding thoughts

Give it a try – this will seem odd at first, but it forces us to think differently. We spend so much time worrying about the technology that more often than not we forget what we’re here to do in the first place…

-pedro
