OLSR: Routing Table entries get (randomly) lost

whatev...@yahoo.de

Dear all,

I’m working on a setup using INETMANET (my version: https://github.com/inetmanet/inetmanet/tree/4116c0c3711657a22a16c8007ecc411eae0cb874) and OMNeT++ 4.1.

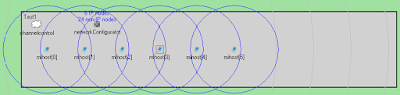

This is my setup, a simple mesh chain of 6 nodes:

OLSR is deployed as the routing protocol. I’m using the standard MobileManetRoutingHost node with Null Mobility. I only added throughput meters above and below the IP layer. Node0 sends UDP packets at a TX rate of 200 kbps.

Looking at the received throughput, I find that in 2 of 5 runs there is a notable gap in transmission during which no packets are received. The blue graph is the sender (TX) and the red one is the receiver (RX).

In the event log I can see that node0 is generating ICMP error messages with the code "Host not reachable".

To further isolate the problem, I’ve ported the Routing Table Recorder from the current INET framework. The routing table log shows that permanent, identical entries are deleted and immediately reinserted at very short intervals. Here is an extract:

| Type | ID | Time | RTs Owner | | GW | Netmask | Dst IP |
|---|---|---|---|---|---|---|---|
| -R | 1215462 | 46,297337 | sihost[4] | 8 | 10.0.1.1 | 255.255.255.255 | 10.0.4.1 |
| -R | 1215462 | 46,297337 | sihost[4] | 8 | 10.0.2.1 | 255.255.255.255 | 10.0.4.1 |
| -R | 1215462 | 46,297337 | sihost[4] | 8 | 10.0.3.1 | 255.255.255.255 | 10.0.4.1 |
| -R | 1215462 | 46,297337 | sihost[4] | 8 | 10.0.4.1 | 255.255.255.255 | 10.0.4.1 |
| -R | 1215462 | 46,297337 | sihost[4] | 8 | 10.0.6.1 | 255.255.255.255 | 10.0.6.1 |
| +R | 1215462 | 46,297337 | sihost[4] | 8 | 10.0.6.1 | 255.255.255.255 | 10.0.6.1 |
| +R | 1215462 | 46,297337 | sihost[4] | 8 | 10.0.4.1 | 255.255.255.255 | 10.0.4.1 |
| +R | 1215462 | 46,297337 | sihost[4] | 8 | 10.0.3.1 | 255.255.255.255 | 10.0.4.1 |
| +R | 1215462 | 46,297337 | sihost[4] | 8 | 10.0.2.1 | 255.255.255.255 | 10.0.4.1 |
| +R | 1215462 | 46,297337 | sihost[4] | 8 | 10.0.1.1 | 255.255.255.255 | 10.0.4.1 |

-R means that an entry was deleted and +R that it was created.

Shortly before the break occurs, the extract looks like this:

| Type | ID | Time | RTs Owner | | GW | Netmask | Dst IP |
|---|---|---|---|---|---|---|---|
| -R | 1232840 | 46,7175671 | sihost[0] | 4 | 10.0.6.1 | 255.255.255.255 | 10.0.2.1 |
| -R | 1232840 | 46,7175671 | sihost[0] | 4 | 10.0.5.1 | 255.255.255.255 | 10.0.2.1 |
| -R | 1232840 | 46,7175671 | sihost[0] | 4 | 10.0.4.1 | 255.255.255.255 | 10.0.2.1 |
| -R | 1232840 | 46,7175671 | sihost[0] | 4 | 10.0.3.1 | 255.255.255.255 | 10.0.2.1 |
| -R | 1232840 | 46,7175671 | sihost[0] | 4 | 10.0.2.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1232840 | 46,7175671 | sihost[0] | 4 | 10.0.2.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1232840 | 46,7175671 | sihost[0] | 4 | 10.0.3.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1232840 | 46,7175671 | sihost[0] | 4 | 10.0.4.1 | 255.255.255.255 | 10.0.2.1 |

Five entries are deleted but only three are added. The same pattern repeats later on:

| Type | ID | Time | RTs Owner | | GW | Netmask | Dst IP |
|---|---|---|---|---|---|---|---|
| -R | 1237974 | 50,0278942 | sihost[0] | 4 | 10.0.4.1 | 255.255.255.255 | 10.0.2.1 |
| -R | 1237974 | 50,0278942 | sihost[0] | 4 | 10.0.3.1 | 255.255.255.255 | 10.0.2.1 |
| -R | 1237974 | 50,0278942 | sihost[0] | 4 | 10.0.2.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1237974 | 50,0278942 | sihost[0] | 4 | 10.0.2.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1237974 | 50,0278942 | sihost[0] | 4 | 10.0.3.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1237974 | 50,0278942 | sihost[0] | 4 | 10.0.4.1 | 255.255.255.255 | 10.0.2.1 |
| -R | 1238380 | 50,269501 | sihost[0] | 4 | 10.0.4.1 | 255.255.255.255 | 10.0.2.1 |
| -R | 1238380 | 50,269501 | sihost[0] | 4 | 10.0.3.1 | 255.255.255.255 | 10.0.2.1 |
| -R | 1238380 | 50,269501 | sihost[0] | 4 | 10.0.2.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1238380 | 50,269501 | sihost[0] | 4 | 10.0.2.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1238380 | 50,269501 | sihost[0] | 4 | 10.0.3.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1238380 | 50,269501 | sihost[0] | 4 | 10.0.4.1 | 255.255.255.255 | 10.0.2.1 |

After a while, the table is complete again:

| Type | ID | Time | RTs Owner | | GW | Netmask | Dst IP |
|---|---|---|---|---|---|---|---|
| -R | 1239218 | 50,7668667 | sihost[0] | 4 | 10.0.4.1 | 255.255.255.255 | 10.0.2.1 |
| -R | 1239218 | 50,7668667 | sihost[0] | 4 | 10.0.3.1 | 255.255.255.255 | 10.0.2.1 |
| -R | 1239218 | 50,7668667 | sihost[0] | 4 | 10.0.2.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1239218 | 50,7668667 | sihost[0] | 4 | 10.0.2.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1239218 | 50,7668667 | sihost[0] | 4 | 10.0.3.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1239218 | 50,7668667 | sihost[0] | 4 | 10.0.4.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1239218 | 50,7668667 | sihost[0] | 4 | 10.0.5.1 | 255.255.255.255 | 10.0.2.1 |
| +R | 1239218 | 50,7668667 | sihost[0] | 4 | 10.0.6.1 | 255.255.255.255 | 10.0.2.1 |

The formerly missing entries are back. But between 46,71 and 50,76 seconds (roughly 4 seconds) these entries are not available, which leads to massive packet loss in the WMN.
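Counting deletions and insertions per event makes such incomplete rebuilds easy to spot in a long log. The following is a minimal C++ sketch, assuming the recorder output has been exported as whitespace-separated lines whose first four fields are the type (`-R` or `+R`), the event ID, the time, and the owner, as in the extracts above. `EventBalance` and `tallyRouteChanges` are hypothetical names introduced for this illustration; they are not part of INET or INETMANET.

```cpp
#include <cassert>
#include <istream>
#include <map>
#include <sstream>
#include <string>
#include <utility>

// Number of routes deleted and added within one recorder event.
struct EventBalance {
    int deleted = 0;
    int added = 0;
    int net() const { return added - deleted; }  // negative: entries went missing
};

// Key: (owner, event ID). Both names are assumptions of this sketch.
using EventKey = std::pair<std::string, long>;

// Reads lines of the assumed form
//   <+R|-R> <event id> <time> <owner> ...
// and tallies deletions and insertions per (owner, event ID).
// The time is kept as text because the log uses a decimal comma.
std::map<EventKey, EventBalance> tallyRouteChanges(std::istream& in) {
    std::map<EventKey, EventBalance> result;
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        std::string type, time, owner;
        long eventId = 0;
        if (!(fields >> type >> eventId >> time >> owner))
            continue;  // skip empty or malformed lines
        if (type != "-R" && type != "+R")
            continue;  // ignore record types other than route changes
        EventBalance& balance = result[EventKey(owner, eventId)];
        if (type == "-R")
            ++balance.deleted;
        else
            ++balance.added;
    }
    return result;
}
```

Applied to the extract for event 1232840, the tally for sihost[0] is five deletions and three insertions, so `net()` is -2. Any event with a negative `net()` marks a point where the table shrank.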

If I do multiple runs, this problem occurs in approximately 40% of them.

The frequent routing table updates occur throughout the whole simulation time and are the same in all runs.

A shorter chain with four nodes has smaller gaps; three nodes work fine.

With the same setup using BATMAN, the routing table reaches a steady state after 12 seconds, with no further changes.

Does someone know why this happens or has experienced the same effect? Is there a known bug for this in the INETMANET version I’m using?

My workaround would be to modify

`void RoutingTable::addRoute(const IPRoute *entry)` and `bool RoutingTable::deleteRoute(const IPRoute *entry)`

in such a way that entries are only added if they are new and existing entries are not deleted.
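A variant of this idea that still removes routes which are genuinely gone is to compare the installed routes with the freshly computed ones and apply only the difference. The sketch below is a standalone illustration in C++, not INETMANET code: `Route`, `RouteDiff`, and `diffRoutes` are hypothetical names, and the real `IPRoute` class has more fields than the three compared here.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <set>
#include <string>
#include <tuple>
#include <vector>

// Hypothetical stand-in for a routing table entry (not INETMANET's IPRoute).
struct Route {
    std::string dest;
    std::string netmask;
    std::string gateway;

    // Strict ordering over all three fields, so two routes compare equal
    // only if destination, netmask, and gateway all match.
    bool operator<(const Route& other) const {
        return std::tie(dest, netmask, gateway) <
               std::tie(other.dest, other.netmask, other.gateway);
    }
};

struct RouteDiff {
    std::vector<Route> toDelete;  // installed, but no longer computed
    std::vector<Route> toAdd;     // computed, but not yet installed
};

// Returns only the entries that actually changed between the installed
// table and the newly computed one. Unchanged routes appear in neither list,
// so they are never deleted and reinserted.
RouteDiff diffRoutes(const std::set<Route>& installed,
                     const std::set<Route>& computed) {
    RouteDiff diff;
    std::set_difference(installed.begin(), installed.end(),
                        computed.begin(), computed.end(),
                        std::back_inserter(diff.toDelete));
    std::set_difference(computed.begin(), computed.end(),
                        installed.begin(), installed.end(),
                        std::back_inserter(diff.toAdd));
    return diff;
}
```

With this approach an identical recomputation produces two empty lists, while a route that really disappeared still ends up in `toDelete`, so a loss of connectivity remains visible in the table.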

Kind regards, Johannes

Alfonso Ariza Quintana

You are using a very old version. If you don’t delete the routes, how can you determine a loss of connectivity?


whatev...@yahoo.de

Thanks for your reply!

The current implementation of OLSR updates the routing table in the same way (only a short test, with inetmanet-2.2 and OMNeT++ 4.6).

The solution for the "frequent update" problem was:

and set DelOnlyRtEntriesInrtable_ = true.

But the other problem is still present: if I do multiple runs, in some of them the entry for mihost5 is missing from mihost0's routing table.

I use opp_runall for batch execution, but the whole batch stops at the first failed ASSERT(). Is this intended behavior?

...

Alfonso Ariza Quintana

I can imagine conditions that could produce this loss of connectivity. If the traffic is heavy, several consecutive "hello" messages may be lost, in which case the node removes the corresponding routing table entries. Because hello messages are sent as broadcasts, it is relatively easy to lose several of them.

A linear network increases the probability, because all traffic has to travel over the same links, and self-interference does the rest.

Solving this type of problem is somewhat complex. The first change is to increase the number of hello messages that a node may lose before it declares a link unusable; the second is to add a link-layer feedback mechanism. The first modification reduces the problem, and the second allows lost links to be removed quickly.
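The two changes can be pictured with a small C++ sketch. It is only an illustration under stated assumptions: `LinkMonitor` and its members are hypothetical names, not INETMANET API, and the hold time is modelled simply as the hello interval multiplied by the number of hellos that may be missed. For orientation, RFC 3626 proposes a HELLO_INTERVAL of 2 s and a NEIGHB_HOLD_TIME of 3 x REFRESH_INTERVAL (6 s) as defaults; whether the simulated OLSR module uses exactly these values is not verified here.

```cpp
#include <cassert>

// Tracks the state of one neighbour link.
// All names are illustrative; this is not the INETMANET OLSR implementation.
class LinkMonitor {
  public:
    // allowedMisses: how many hello intervals may pass without a HELLO
    // before the link is declared unusable.
    LinkMonitor(double helloInterval, int allowedMisses)
        : holdTime_(helloInterval * allowedMisses) {
        assert(helloInterval > 0 && allowedMisses > 0);
    }

    // A HELLO from the neighbour arrived at time `now`:
    // extend the link lifetime and clear any earlier failure report.
    void onHello(double now) {
        expiry_ = now + holdTime_;
        failed_ = false;
    }

    // Link-layer feedback: the MAC gave up retransmitting a unicast frame,
    // so the link is dropped immediately instead of waiting for the timeout.
    void onTxFailure() { failed_ = true; }

    // The link is usable until the hold time runs out
    // or the link layer reports a failure.
    bool isUsable(double now) const { return !failed_ && now < expiry_; }

  private:
    double holdTime_;
    double expiry_ = 0.0;
    bool failed_ = false;
};
```

A larger `allowedMisses` keeps routes alive through short bursts of lost hellos, at the cost of reacting more slowly to a real link break; the `onTxFailure()` path compensates for that by removing a broken link as soon as unicast traffic over it fails.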

Date: Sat, 21 Feb 2015 09:08:09 -0800

From: omn...@googlegroups.com

To: omn...@googlegroups.com

CC: aari...@hotmail.com

Subject: Re: [Omnetpp-l] OLSR: Routing Table entries get (randomly) lost