Robust tests for graphics packages


Tom Breloff

Sep 21, 2015, 9:39:30 AM

to julia-dev

I'd like to make my testing robust for Plots.jl (especially since I'll want to know if plotting backends change)... What is the recommended way to test packages that produce graphics? I suppose I can test a lot by generating plots, writing them to PNG, and then checking that the raw bytes are equal to some known (correct) PNG? Is there an accepted best practice here? What would be the best way to compare the files? Is there any good way to test that a GUI window is working properly?

Any advice is appreciated. Thanks.

Tim Holy

Sep 21, 2015, 9:49:08 AM

to juli...@googlegroups.com

This is an important issue, and I think it's fair to say we don't have a great solution. Both Winston and Compose do something like this in their tests. Unfortunately, both projects have issues with different versions of julia---or different versions of underlying libraries like libcairo on the maintainer's vs Travis' machines---giving minor differences in output.

Images has an ssd method that computes the sum of squared differences---perhaps we should use that? Or do it manually in combination with imfilter_gaussian, to blur out any differences that affect alignment on the scale of a pixel or two?
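A minimal plain-Julia sketch of the sum-of-squared-differences idea, assuming both images are already loaded as equally sized numeric arrays (for example grayscale values in [0, 1]). `ssd` and `relative_ssd` here are hypothetical local helpers, not the Images.jl `ssd` method, and normalizing by the images' mean energy (so one relative tolerance can be reused across image sizes) is an added assumption.

```julia
# Sum of squared differences between two equally sized numeric arrays.
function ssd(a::AbstractArray{<:Real}, b::AbstractArray{<:Real})
    size(a) == size(b) ||
        throw(DimensionMismatch("image sizes differ: $(size(a)) vs $(size(b))"))
    return sum(abs2, Float64.(a) .- Float64.(b))
end

# Relative version: SSD divided by the mean energy of the two images,
# so a single tolerance can be reused for images of different sizes.
function relative_ssd(a::AbstractArray{<:Real}, b::AbstractArray{<:Real})
    energy = (sum(abs2, Float64.(a)) + sum(abs2, Float64.(b))) / 2
    return energy == 0 ? 0.0 : ssd(a, b) / energy
end
```

A test could then check something like `relative_ssd(img1, img2) < 1e-3`, with the tolerance chosen per backend.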

Best,

--Tim


Tom Breloff

Sep 21, 2015, 10:12:50 AM

to juli...@googlegroups.com

Tim: can you produce a minimally working example of how you would compare 2 images (using something like your 2nd paragraph) to within 99.9% similarity using Images.jl? Ideally the correctness number is flexible, so you could validate exact matches and approximate matches with the same method.

Should I start a new package (can add to JuliaGraphics) to streamline these sorts of comparison/tests so that other packages can use them?

Tim Holy

Sep 21, 2015, 10:48:10 AM

to juli...@googlegroups.com

I don't think we need a whole package for this, because it's only a handful of lines. If you want, we could add a @test_images_approx_eq_eps macro to Images.jl. Or we could add it to ImageView and, in cases where they differ, make it pop up a window that displays the two images :-).

Here's a demo:

```julia
# Get set up
using Images, TestImages
img1 = testimage("mandrill")

# Let's make img2 a single-pixel circular shift of img1
img2 = shareproperties(img1, img1[:,[size(img1,2);1:size(img1,2)-1]])

# Here's the calculation
img1f = float32(img1)  # to make sure Ufixed8 doesn't overflow
img2f = float32(img2)
imgdiff = img1f - img2f
imgblur = imfilter_gaussian(imgdiff, [1,1])  # gaussian with sigma=1pixel
err = sum(abs, imgblur)
pow = (sum(abs, img1f) + sum(abs, img2f))/2
if err > 0.001*pow
    error("img1 and img2 differ")
end
```

This example gives a 6% difference, which is over your threshold. If you blur by sigma=2 pixels, the error drops to 2.6%.

The idea behind blurring is that shifts result in adjacent negative and positive values, which cancel when you blur.
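The demo above relies on the 2015-era Images.jl API (`float32`, `shareproperties`, `imfilter_gaussian`). The same blur-then-sum idea can be sketched without any packages. This is a minimal sketch assuming grayscale images stored as plain matrices, with a hand-written separable Gaussian (truncated at 3 sigma, edge pixels replicated) standing in for `imfilter_gaussian`; all helper names are hypothetical. A one-pixel-wide line shifted by one column makes the cancellation visible: the difference image has adjacent +1 and -1 columns, which the blur largely cancels.

```julia
# Normalized 1-D Gaussian kernel truncated at 3 sigma.
function gaussian_kernel(sigma::Real)
    r = ceil(Int, 3sigma)
    k = [exp(-x^2 / (2sigma^2)) for x in -r:r]
    return k ./ sum(k)
end

# Separable Gaussian blur of a matrix; out-of-range indices are clamped
# to the nearest edge pixel (replicate padding). sigma <= 0 means no blur.
function blur(img::AbstractMatrix{<:Real}, sigma::Real)
    sigma <= 0 && return float.(img)
    k = gaussian_kernel(sigma)
    r = length(k) ÷ 2
    m, n = size(img)
    tmp = zeros(Float64, m, n)
    out = zeros(Float64, m, n)
    for j in 1:n, i in 1:m   # blur along dimension 1
        tmp[i, j] = sum(k[t + r + 1] * img[clamp(i + t, 1, m), j] for t in -r:r)
    end
    for j in 1:n, i in 1:m   # blur along dimension 2
        out[i, j] = sum(k[t + r + 1] * tmp[i, clamp(j + t, 1, n)] for t in -r:r)
    end
    return out
end

# Summed |blurred difference| divided by the mean summed magnitude of the
# two images, mirroring the err/pow ratio in the demo.
function relative_error(a::AbstractMatrix{<:Real}, b::AbstractMatrix{<:Real}; sigma = 1)
    d = blur(Float64.(a) .- Float64.(b), sigma)
    pow = (sum(abs, a) + sum(abs, b)) / 2
    return sum(abs, d) / pow
end

# Usage: a one-pixel-wide vertical line and a copy shifted by one column.
img1 = zeros(16, 16); img1[:, 8] .= 1.0
img2 = circshift(img1, (0, 1))
for s in (0, 1, 2)
    println("sigma = $s: relative error = ", relative_error(img1, img2; sigma = s))
end
```

With `sigma = 0` the relative error is exactly 2 (every line pixel is counted twice); it falls as `sigma` grows, because the adjacent positive and negative columns are averaged together.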

--Tim


Tom Breloff

Sep 21, 2015, 2:12:07 PM

to juli...@googlegroups.com

I like the idea of adding the underlying comparison logic to Images.

In regards to ImageView, it might be cool to be able to show the images side by side, along with a third image that is the "difference between each pixel" (I'm not exactly sure what that means... maybe a grayscale image using `colordiff` on each pixel color?) I don't know ImageView well, but based on my assumptions this would be a relatively easy thing to make. Thoughts?
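One possible reading of a per-pixel "difference" image, as a sketch: images stored as m×n×3 arrays of RGB values in [0, 1], with the grayscale difference taken as the Euclidean RGB distance scaled to [0, 1]. This swaps in plain RGB distance for `colordiff` (which in Colors.jl computes a perceptual color difference); `difference_image` is a hypothetical helper, and displaying the result next to the two inputs is left to ImageView.

```julia
# Per-pixel difference map for two RGB images stored as m×n×3 arrays with
# channel values in [0, 1]. Returns an m×n grayscale array in [0, 1]:
# 0 means identical pixels, 1 means black vs. white.
function difference_image(a::AbstractArray{<:Real,3}, b::AbstractArray{<:Real,3})
    size(a) == size(b) || throw(DimensionMismatch("image sizes differ"))
    size(a, 3) == 3 || throw(ArgumentError("expected 3 color channels"))
    d = sqrt.(sum(abs2, Float64.(a) .- Float64.(b); dims = 3)) ./ sqrt(3)
    return dropdims(d; dims = 3)
end
```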

catc...@bromberger.com

Sep 21, 2015, 2:20:40 PM

to julia-dev

There's a related use case here with graphs and compressed file formats / visualizations that may argue for a more general solution. It would be great to be able to take a file of arbitrary format and do comparisons on it. (Too complicated for a first cut?)

Seth.

Tim Holy

Sep 21, 2015, 2:43:36 PM

to juli...@googlegroups.com

On Monday, September 21, 2015 02:12:03 PM Tom Breloff wrote:

> I like the idea of adding the underlying comparison logic to Images.
>
> In regards to ImageView, it might be cool to be able to show the images
> side by side, along with a third image that is the "difference between each
> pixel" (I'm not exactly sure what that means... maybe a grayscale image
> using `colordiff` on each pixel color?) I don't know ImageView well, but
> based on my assumptions this would be a relatively easy thing to make.
> Thoughts?

Yep, pretty easy. I can try to tackle this in the next day or two; if I forget, file an issue at Images.

--Tim

Tim Holy

Sep 21, 2015, 2:44:20 PM

to juli...@googlegroups.com

If you mean byte-level comparisons, that's easy. But also relatively unrelated to the solution Tom and I are converging on, which is quite specific to image comparisons.
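For the byte-level case, a sketch using only Base Julia (the helper names are hypothetical). As noted earlier in the thread, exact byte equality is brittle when library versions differ between machines, so it suits outputs that are expected to be bit-for-bit reproducible.

```julia
# True only if the two files have identical contents.
same_bytes(path1::AbstractString, path2::AbstractString) =
    filesize(path1) == filesize(path2) && read(path1) == read(path2)

# Index of the first differing byte, or `nothing` if the files are identical.
# If one file is a prefix of the other, returns the index just past the shorter one.
function first_mismatch(path1::AbstractString, path2::AbstractString)
    b1, b2 = read(path1), read(path2)
    for i in 1:min(length(b1), length(b2))
        b1[i] != b2[i] && return i
    end
    return length(b1) == length(b2) ? nothing : min(length(b1), length(b2)) + 1
end
```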

--Tim


Simon Danisch

Sep 21, 2015, 5:44:42 PM

to julia-dev

I wanted to try this at some point: http://pdiff.sourceforge.net/

I also opened an issue about it to not forget it:

Chris Foster

Sep 21, 2015, 6:45:51 PM

to juli...@googlegroups.com

I used pdiff a lot when I used to hack on the aqsis renderer a while back. It was quite useful in the regression test suite where we wanted to render out a whole bunch of different scenes and check that they matched a set of reference images. (Page describing the aqsis image regression test suite: https://github.com/aqsis/aqsis/wiki/Regression-Testing. Test suite code: https://svn.code.sf.net/p/aqsis/svn/trunk/testing/regression/ (yikes, is that an svn repo!?))

Having said that, I'm not sure pdiff is quite what you want: it was designed for comparing output from 3D software renderers where you typically use stochastic light integration to compute a solution to the rendering equation. In the film rendering world, if you can get away with a cheaper approximation which is perceptually the same, then you're winning.

If it's just rendering of 2D vector graphics or 3D OpenGL without sophisticated lighting, you may be better off just looking at the image differences and comparing with some simple absolute thresholds.
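The "simple absolute thresholds" approach can be sketched as: count the values whose absolute difference exceeds a per-pixel tolerance, and fail only if more than a given fraction of them do. The function name is hypothetical, and the default `pixel_tol` and `max_fraction` are placeholder values, not recommendations.

```julia
# Pass if at most `max_fraction` of the values differ by more than `pixel_tol`
# (absolute difference, for arrays with entries in [0, 1]).
function within_thresholds(a::AbstractArray{<:Real}, b::AbstractArray{<:Real};
                           pixel_tol = 0.02, max_fraction = 0.001)
    size(a) == size(b) || return false
    nbad = count(i -> abs(a[i] - b[i]) > pixel_tol, eachindex(a, b))
    return nbad <= max_fraction * length(a)
end
```

Keeping the two knobs separate makes it possible to tolerate a few badly antialiased edge pixels without also tolerating a small global shift in color.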

I remember a discussion about image differencing on the OpenImageIO list a while back. In particular, this comment from Larry Gritz:

> I haven't been very satisfied by the Yee (--pdiff) method. More specifically, although it sounds good theoretically, in practice I just haven't found any situations in which it's a superior approach to just using regular diffs with carefully thought-out thresholds and percentages.

See the thread here:

http://lists.openimageio.org/pipermail/oiio-dev-openimageio.org/2014-August/013434.html

Cheers,

~Chris


J Luis

Sep 22, 2015, 6:14:14 AM

to julia-dev

In GMT we use GraphicsMagick to compare PostScript figures. The output is a PNG. I have implemented this in my work-in-progress port of GMT; see an example in

https://github.com/joa-quim/GMT.jl/blob/master/src/gmtest.jl


Gunnar Farnebäck

Sep 22, 2015, 10:49:45 AM

to julia-dev

A common approach today, if you want more leeway than pixelwise comparison, is the Structural Similarity Index (SSIM),

https://en.wikipedia.org/wiki/Structural_similarity

Not necessarily worth the added complexity though.
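For reference, SSIM combines luminance, contrast, and structure terms: SSIM(x, y) = ((2 μx μy + C1)(2 σxy + C2)) / ((μx² + μy² + C1)(σx² + σy² + C2)), with C1 = (0.01 L)² and C2 = (0.03 L)² for dynamic range L. The sketch below is a simplified global variant that treats the whole image as a single window; the standard index averages this statistic over local (typically Gaussian-weighted) windows, so the numbers are only indicative. `global_ssim` is a hypothetical helper.

```julia
# Simplified SSIM over the whole image as one window (values in [0, L]).
function global_ssim(x::AbstractArray{<:Real}, y::AbstractArray{<:Real}; L::Real = 1.0)
    size(x) == size(y) || throw(DimensionMismatch("image sizes differ"))
    n = length(x)
    n >= 2 || throw(ArgumentError("need at least two pixels"))
    xs = Float64.(vec(x)); ys = Float64.(vec(y))
    mux = sum(xs) / n
    muy = sum(ys) / n
    varx = sum(abs2, xs .- mux) / (n - 1)
    vary = sum(abs2, ys .- muy) / (n - 1)
    covxy = sum((xs .- mux) .* (ys .- muy)) / (n - 1)
    C1 = (0.01 * L)^2   # stabilizing constants with K1 = 0.01, K2 = 0.03
    C2 = (0.03 * L)^2
    return ((2 * mux * muy + C1) * (2 * covxy + C2)) /
           ((mux^2 + muy^2 + C1) * (varx + vary + C2))
end
```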


Tom Breloff

Sep 22, 2015, 10:59:44 AM

to juli...@googlegroups.com

SSIM looks relatively straightforward... Could we add that to Images as well? I'm happy to add it to my todo list.

Andreas Lobinger

Sep 25, 2015, 9:47:59 AM

to julia-dev

Hello colleagues,

On Monday, September 21, 2015 at 11:44:42 PM UTC+2, Simon Danisch wrote:

> I wanted to try this at some point: http://pdiff.sourceforge.net/
>
> I also opened an issue about it to not forget it:

libcairo has its own copy of pdiff (in test/pdiff in the cairo source tree), which it uses for extensive regression testing. Judging from the header of the main program, it is an earlier version of pdiff. It first compares the image sizes, then the raw binary data, and only then does a perceptual comparison.
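The staged order described here (size, then binary, then perceptual) can be sketched in Julia as follows. Only the staging is taken from the description; the last stage is a plain mean-absolute-difference check standing in for pdiff's perceptual model, and `staged_compare` with its `tol` default is hypothetical.

```julia
# Staged comparison: 1. sizes, 2. exact equality, 3. a tolerance test.
# Stage 3 is a simple mean absolute difference, NOT pdiff's perceptual metric.
# Returns (passed, stage_that_decided).
function staged_compare(a::AbstractArray{<:Real}, b::AbstractArray{<:Real}; tol = 1e-3)
    size(a) == size(b) || return (false, :size)
    a == b && return (true, :identical)
    mad = sum(abs, Float64.(a) .- Float64.(b)) / length(a)
    return (mad <= tol, :tolerance)
end
```

The cheap checks run first, so identical outputs never pay for the expensive comparison.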

Andreas Lobinger

Sep 25, 2015, 9:48:46 AM

to julia-dev

Did you come to a conclusion on what to use? I'd be interested in sharing the effort.

Tom Breloff

Sep 25, 2015, 10:02:05 AM

to juli...@googlegroups.com

I haven't come to any conclusions yet. If you could produce some sample tests using Cairo's pdiff it would be helpful.

Andreas Lobinger

Sep 26, 2015, 7:06:01 AM

to julia-dev

Hello colleague,

On Friday, September 25, 2015 at 4:02:05 PM UTC+2, Tom Breloff wrote:

> I haven't come to any conclusions yet. If you could produce some sample tests using Cairo's pdiff it would be helpful.

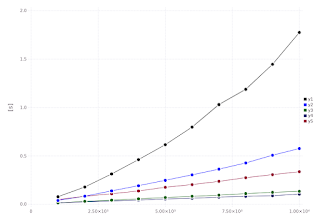

cairos pdiff (build with /test/pdiff make perceptualdiff) is replying as difference between this

and this

(small gaussian blur in the middle of the picture):

```
lobi@orange3:~/cairo_int/cairo-1.14.0/test/pdiff$ ./perceptualdiff ~/cairo-speed-lines.png ~/cairo-speed-lines1.png -verbose
Field of view is 45.000000 degrees
Threshold pixels is 100 pixels
The Gamma is 2.200000
The Display's luminance is 100.000000 candela per meter squared
PASS: Images are perceptually indistinguishable
```

Tom Breloff

Sep 26, 2015, 8:38:57 AM

to juli...@googlegroups.com

Thanks. Can this be automated? Or do we have to scrape/parse the command line output?

Andreas Lobinger

Sep 26, 2015, 11:26:08 AM

to julia-dev

On Saturday, September 26, 2015 at 2:38:57 PM UTC+2, Tom Breloff wrote:

> Thanks. Can this be automated? Or do we have to scrape/parse the command line output?

The main program (main() in perceptualdiff.c) returns a status, so running it in a shell and getting the status should be easy...
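Calling the binary from Julia and using only its exit status could look like the following sketch. It assumes `perceptualdiff` is on the PATH and exits with status 0 on PASS and non-zero on FAIL; the thread only says that `main` returns a status, so the actual exit codes should be checked before relying on this. `external_compare` is a hypothetical helper.

```julia
# Run an external image-comparison program and report the result via its
# exit status. Assumes exit status 0 means "images equivalent".
function external_compare(img1::AbstractString, img2::AbstractString;
                          exe::AbstractString = "perceptualdiff")
    cmd = `$exe $img1 $img2`
    # `success` returns false on a non-zero exit status; it throws if the
    # program cannot be started at all.
    return success(cmd)
end
```

In a test file this could then be used as `@test external_compare("out.png", "ref.png")`, with no need to parse the printed PASS/FAIL line.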

However, to get the program you need a full local cairo build, and that would pull in a lot of dependencies. Initially I thought about building the compare function into a library and just attaching to it via ccall. But my recent experiences with BinDeps have cooled my optimism about using external libraries with Julia.

But having looked at the paper and around the software: why not re-implement the compare function in Julia?
