Google Bot Is Still the enemy...

Brandon Wirtz

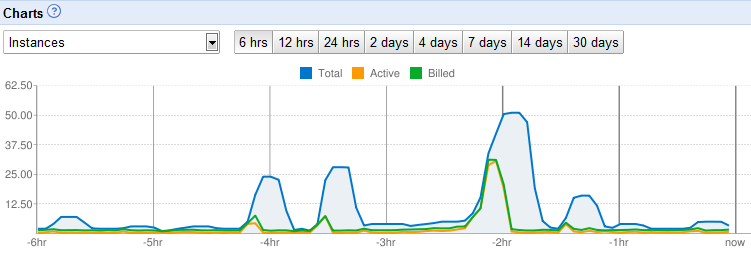

Those are F4 instances. The app averages 2.1 instance hours per hour at normal levels. We usually see Googlebot spike us to 6 for 15 minutes. I believe this spike was 35 F4s. (For those of you who run free apps, this is 10 days of free quota in 15 minutes.) We are currently getting hit like this every 30 hours.

F4s served the pages 2.5x faster on average (mainly because with more memory we get a lot more cache hits). Faster pages meant a faster bot crawl. At peak we were serving just shy of 100 Googlebot requests per second.

We really appreciate that the Crawl team helps us prove that we could serve 8.6M users a day with our product (and against unique page requests, no less), but it would be nice if they could do it once a month rather than once a day.
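For anyone wondering where the 8.6M figure comes from, it appears to be the peak crawl rate extrapolated over a full day. A quick check, assuming the roughly 100 requests per second were sustained for 24 hours and each request counts as one user (both are assumptions for illustration, not measurements):

```python
# Extrapolate the peak Googlebot rate quoted above to a full day.
# Assumption: the ~100 req/s peak is sustained for 24 hours.
SECONDS_PER_DAY = 24 * 60 * 60

peak_requests_per_second = 100
requests_per_day = peak_requests_per_second * SECONDS_PER_DAY

print(f"{requests_per_day:,} requests per day")  # 8,640,000 requests per day
```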

Anand Mistry

Pieter Coucke

Have you looked into using Sitemaps (http://www.sitemaps.org/protocol.html#changefreqdef) to hint at how often to crawl your site? Google, Bing, and Yahoo all recognise the sitemaps protocol, even though they may act on it differently.
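As a rough illustration of the suggestion above, here is a minimal sketch that generates a sitemap with a `changefreq` hint on every URL. The function name `build_sitemap` and the example URL are made up for this sketch; the element names and the allowed `changefreq` values come from the sitemaps.org protocol. Crawlers treat the value as a hint only.

```python
import xml.etree.ElementTree as ET

# Namespace and allowed values as defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
VALID_CHANGEFREQ = {"always", "hourly", "daily", "weekly",
                    "monthly", "yearly", "never"}


def build_sitemap(urls, changefreq="monthly"):
    """Return sitemap XML (as a string) with one <url> entry per URL.

    Hypothetical helper for illustration; changefreq is only a hint
    and crawlers may ignore it.
    """
    if changefreq not in VALID_CHANGEFREQ:
        raise ValueError(f"invalid changefreq: {changefreq!r}")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc in urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "changefreq").text = changefreq
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # example.com is a placeholder domain.
    print(build_sitemap(["http://www.example.com/page1"]))
```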

--To view this discussion on the web visit https://groups.google.com/d/msg/google-appengine/-/Kg4DY93V0C8J.

You received this message because you are subscribed to the Google Groups "Google App Engine" group.

To post to this group, send email to google-a...@googlegroups.com.

To unsubscribe from this group, send email to google-appengi...@googlegroups.com.

For more options, visit this group at http://groups.google.com/group/google-appengine?hl=en.

Pieter Coucke

Brandon Wirtz

We set very long expirations in both the headers and the sitemap. We also tried killing the sitemap (since we were getting crawled anyway and could see the crawler winding through links). When you are assigned a “special crawl rate” because you are on Google infrastructure, you don’t get any control. We have observed the bots going through every page in the sitemap one at a time, fetching each as fast as it could be served, then looping back to the start when they reached the end of the sitemap. I built an app as a “playground” for the bot, and if I told it that the change frequency was hourly with 100k pages, it would consume 5 F1 Python 2.5 instances 24 hours a day.

We have tried to put code to slow down how fast we serve pages to google bot, but that is almost as expensive as serving the page, and when the bot really beats on us, the wait state backs up legit requests.

We tried making sure we served Googlebot the oldest version of pages we have so that it wouldn’t see changes.

In a test environment we served 500 errors, which got the pages removed from the index.

We tried redirecting only Googlebot to the naked domain, which is not hosted on GAE. That resulted in us crushing the naked-domain server AND getting the pages listed wrong in Google, despite setting a preference in Webmaster Tools to always show www in results.

We tried using the MSN robots.txt setting to throttle crawling.
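For readers unfamiliar with it, the setting is presumably the `Crawl-delay` directive (an assumption; the post does not name it). The sketch below uses Python's standard `urllib.robotparser` to show what such a rule looks like to a crawler that honors it. The robots.txt content is invented for illustration. As far as I know, Googlebot does not act on `Crawl-delay`, which would be consistent with it not helping here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking msnbot to wait 10 seconds between fetches.
ROBOTS_TXT = """\
User-agent: msnbot
Crawl-delay: 10

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The delay applies only to the user agent the rule names.
msnbot_delay = parser.crawl_delay("msnbot")
googlebot_delay = parser.crawl_delay("Googlebot")

print("msnbot:", msnbot_delay)
print("Googlebot:", googlebot_delay)
```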

We tried to come up with a way to give alternate DNS to Google so that it would let us set the crawl rate in Webmaster Tools. Doing so causes Apps for Domains to disassociate your domain, because it can’t detect that you are still hosting on GAE.

We tried attaching HUGE amounts of CSS/style data to make the pages big so that Google would throttle back the crawls, the idea being that we could push the data to the buffer but hit the bit budget for the crawl… All that did was up our bandwidth usage.

--

sb

There is no need to index the thing more than once a month.

>We have tried to put code to slow down how fast we serve pages to google bot, but that is almost as expensive as serving the page...

What happens if you only serve the google bot once a second and the rest of the time you give it timeouts rather than 500 errors?
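To make the question concrete, here is a minimal sketch of that kind of throttle: a per-process gate that lets at most one Googlebot request through per interval and tells the caller to refuse the rest. The class name `CrawlerThrottle` and the user-agent substring check are assumptions for illustration only. What to send on refusal (a timeout, a 503, something else) is left to the caller, and the state is per instance, so on GAE each instance would throttle independently.

```python
import time


class CrawlerThrottle:
    """Allow at most one Googlebot request per `min_interval` seconds.

    Hypothetical helper; state is held in memory, so it is not shared
    across instances or processes.
    """

    def __init__(self, min_interval=1.0, clock=time.monotonic):
        self.min_interval = min_interval
        self.clock = clock          # injectable for testing
        self._last_served = None    # time of the last Googlebot request served

    def should_serve(self, user_agent):
        # Naive check: user-agent strings can be spoofed.
        if "googlebot" not in user_agent.lower():
            return True  # never throttle other visitors
        now = self.clock()
        if (self._last_served is None
                or now - self._last_served >= self.min_interval):
            self._last_served = now
            return True
        return False  # caller decides how to refuse this request


if __name__ == "__main__":
    throttle = CrawlerThrottle(min_interval=1.0)
    ua = "Mozilla/5.0 (compatible; Googlebot/2.1)"
    print(throttle.should_serve(ua))  # first bot request is served
    print(throttle.should_serve(ua))  # an immediate second one is refused
```

Even in the refused case the request still reaches an instance, which is the cost described in the quoted message above.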

>

> From: google-a...@googlegroups.com

> [mailto:google-a...@googlegroups.com] On Behalf Of Anand Mistry

> Sent: Monday, January 09, 2012 3:58 AM

> To: google-a...@googlegroups.com

> Subject: [google-appengine] Re: Google Bot Is Still the enemy...

>

> Have you looked into using Sitemaps (http://www.sitemaps.org/protocol.html#changefreqdef) to hint at how often to crawl your site? Google, Bing, and Yahoo all recognise the sitemaps protocol, even though they may act on it differently.
