BDD is TDD + DbC

Larry Rix

Jun 14, 2021, 11:46:48 AM

to Eiffel Users

I have really enjoyed being linked up with the videos from Dave Farley of Continuous Delivery on YouTube.

It seems that Dave was there from the outset of the notion of BDD, which until now, I had dismissed out-of-hand—BUT—no longer! In listening to what Mr. Farley has stated quite a bit in his videos, I am now of a mind that the notion of BDD is actually Design-by-Contract within a TDD (AutoTest) context.

The following video is a result of that realization. I am hoping you all will agree.

You can find the inspiration video from Dave on a link on the YouTube video above.

Enjoy!

Ian Joyner

Jun 14, 2021, 7:49:41 PM

to eiffel...@googlegroups.com

Hi Larry,

Very good and interesting. Clear presentations give others ideas of where to go next.

I think BDD and TDD really do unit tests. The checking in TDD is in the test code, but that is unnecessary in DbC since that is stated with the routine. But DbC still needs a unit test harness which is BDD.

Commenting out the source lines seems a test of the DbC tests. Could that be done in the runtime itself (like turning off assertions), so people don’t need to manually comment out code (which could lead to an error of forgetting to turn them back on)?

Then there is regression vs ad-hoc testing. Unit tests are used in regression. In contrast interpreted languages are really good for ad-hoc tests. That is good for teaching since students can test their learning, but professional programmers like that too without having to write separate tests, thus speeding up the development cycle.

Sorry, a few thoughts in there that you triggered.

Ian

clan...@earthlink.net

Jun 18, 2021, 12:49:28 PM

to Eiffel Users

I think that asserting that BDD = TDD + DBC is not correct.

The first directive of Test Driven Design is the notion that a specification can be created via the use of tests. In other words, express your requirements through unit tests. Write the test first, fail, fix the implementation until the test works, proceed to next test. Note this means expressing requirements in code, which many consider questionable. When I try this approach using AutoTest it is not very cooperative.

Since you are using tests to discover what the design of your abstractions is, you often need to use Mock objects to determine what the dependencies are, then inject them into your abstraction. Eiffel is rather strict with what you can create with Mocks.

Behaviour Driven Design connects the behavior constrained by business rules to the creation of unit tests. So it sits on top of TDD. The advantage is that users, domain experts, etc. can be involved in the process, not just programmers, testers, and architects. A given "feature", a business concept, generates scenarios which lead to the discovery of abstractions. The scenarios can be translated into unit tests via the use of Gherkin and associated software tools.

Design by Contract is used to implement Abstract Data Types. The method used to make it easier for non-programmers to use is called BON, Business Object Notation, if I recall correctly. The objects are described with commands, queries, and constraints. There are layers of more and more formal refinements which one can go forward and backward in as needed. The output is the universe of Eiffel objects complete with pre-conditions, post-conditions, and invariants.

When you dig into the testing library of AutoTest there is some code to implement an Eiffel interpreter. I think this was an initial effort to create the playground-like environment of Pharo, or the REPL of Clojure. If EiffelStudio were to finish this, along with adding sophisticated support for Mock objects, I would be quite pleased.

Carl

Larry Rix

Jun 19, 2021, 7:27:45 PM

to Eiffel Users

Hi Carl,

Dave Farley was a part of the group of people who came up with BDD. Farley states quite clearly that BDD is not something different from TDD, but is "TDD better explained". They came up with BDD as a form of guidance on how to implement TDD code testing production code.

The common notion of BDD has been captured in the "Given-When-Then" notion (and notation as used in certain products like Cucumber). This pattern of Given-When-Then is precisely the notion of Design-by-Contract.

Given = the precondition-state that must hold true before the "When" (do ... end) is executed. The "Then" are the post-condition results and resulting state that must hold true after the "When" has been executed. This is a standard Hoare Triple of {P}C{Q}. Same dance—different words.
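
To make the mapping concrete, here is a minimal sketch, written in Python rather than Eiffel so it can be run standalone. The `Account` class and its `withdraw` routine are hypothetical, and plain `assert` statements stand in for Eiffel's `require` and `ensure` clauses.

```python
class Account:
    """Hypothetical supplier class, used only for illustration."""

    def __init__(self, balance: int) -> None:
        self.balance = balance

    def withdraw(self, amount: int) -> None:
        # Given (P): the precondition, what Eiffel would state under `require`.
        assert 0 < amount <= self.balance, "precondition violated"
        old_balance = self.balance

        # When (C): the command body, Eiffel's `do ... end`.
        self.balance -= amount

        # Then (Q): the postcondition, what Eiffel would state under `ensure`.
        assert self.balance == old_balance - amount, "postcondition violated"


account = Account(100)   # Given an account holding 100
account.withdraw(30)     # When 30 is withdrawn
print(account.balance)   # Then the balance is 70
```

Read top to bottom, the body of `withdraw` is the {P}C{Q} triple, and the three lines at the bottom are the same triple phrased as a scenario.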

AutoTest is simply a tool within EiffelStudio for executing test code. That code is there for at least two primary purposes. The most primary is to prepare-to-call and then call the operation ("When") under-test. The preconditions of DbC are simply code representing final QA-test assertions that must hold true for the feature to execute without defect.

That BDD has found a notation like Gherkin in Cucumber is a happy circumstance. It allows non-technical people to write specifications that can be translated (compiled) from the BDD specification into possibly two code targets: First—at least a skeletal deferred version of a class. Second—possible test code that makes a call to the generated deferred class feature.

It is quite clear that BDD does in fact represent a pragmatic application of DbC code executed and exercised by TDD code.

Kindest regards,

Larry

clan...@earthlink.net

Jun 19, 2021, 8:44:43 PM

to Eiffel Users

I agree that many of the parts map to one another between BDD and DBC but I think the emphasis is different.

The BDD process is pictured as follows: you have a meeting of the "three amigos" to discuss a new feature. They are a product person, a developer, and a tester. They agree to limit their meeting to about 25 minutes. They use cards to describe the feature. The new feature leads to some business rules. The rules lead to scenarios which demonstrate the rule. The scenarios describe the post-conditions. Also questions which can't be resolved come up. If any of the piles of cards gets too high you know your problem is too big.

Each of the scenarios is translated into the Given-When-Then pattern. A software tool then can create the skeleton of test cases that express these clauses. These are run in the test tool. They should fail. You implement code until the tests pass. This forms your design.

I don't know if it is the best design method out there. Bertrand Meyer wrote a book about how he doesn't like the agile methods very much. The thing that is nice is that you create a framework of tests that you can run when you refactor code to see if you have broken something.

Another aspect of this approach is using Mock objects. These are good for when you don't have the interface for a class worked out, so you fake results until enough pieces are in place to figure it out. Mock objects fit into the test structure and are controlled by them. In the past I have worked on trying to create Mock objects in Eiffel and it wasn't very easy. The calling patterns used in DBC make it tricky.

Larry Rix

Jun 20, 2021, 8:42:23 AM

to Eiffel Users

On Saturday, June 19, 2021 at 8:44:43 PM UTC-4 clan...@earthlink.net wrote:

> I agree that many of the parts map to one another between BDD and DBC but I think the emphasis is different.

They do map quite well. The emphasis is different for sure. The Given-When-Then specs are helpful and useful for "shaping" design thinking. It is quite obvious that for simple BDD specifications, a lexer/parser can be written where Eiffel code can be successfully generated directly from the specification text. See the attached documents.

> The BDD process is pictured as follows: you have a meeting of the "three amigos" to discuss a new feature. They are a product person, a developer, and a tester. They agree to limit their meeting to about 25 minutes. They use cards to describe the feature. The new feature leads to some business rules. The rules lead to scenarios which demonstrate the rule. The scenarios describe the post-conditions. Also questions which can't be resolved come up. If any of the piles of cards gets too high you know your problem is too big.

If the BDD terminology holds—then—scenarios (in basic form) consist of Given-When-Then, so they describe far more than just the post-conditions. You are correct that in Cucumber's Gherkin, the following BNF-spec is roughly true:

Feature ::= [{Rule}*]

Rule ::= {Example}*

** Example = Scenario (synonymous)

Scenario ::= {Step}+

Step ::= {Given | When | Then | And | But}*

** Only When and Then are required. Also, And and But must be preceded by Given, When, or Then.
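
As a rough illustration of the two notes attached to the grammar, the following Python sketch checks a scenario's step keywords. It is not a Gherkin parser; the function name `check_scenario` is made up here, and it assumes "preceded by" means that some Given, When, or Then appears anywhere earlier in the scenario (so an And may follow another And).

```python
PRIMARY = {"Given", "When", "Then"}
CONTINUATION = {"And", "But"}


def check_scenario(steps):
    """Return a list of problems found in a scenario's step keywords."""
    problems = []
    seen_primary = False
    for position, keyword in enumerate(steps, start=1):
        if keyword in PRIMARY:
            seen_primary = True
        elif keyword in CONTINUATION:
            # And/But only make sense as continuations of an earlier step.
            if not seen_primary:
                problems.append(
                    f"step {position}: {keyword} has no preceding Given, When, or Then"
                )
        else:
            problems.append(f"step {position}: unknown keyword {keyword}")
    # Per the note above, only When and Then are treated as required.
    for required in ("When", "Then"):
        if required not in steps:
            problems.append(f"missing required {required} step")
    return problems


print(check_scenario(["Given", "And", "When", "Then", "But"]))
print(check_scenario(["And", "When", "Then"]))
```

An empty list means the keyword sequence satisfies both notes; anything else names the offending step.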

I can agree that if your pile of cards is numerous, your problem space is not granular enough. This is a matter of choosing to start somewhere and then breaking the problem down.

> Each of the scenarios is translated into the Given-When-Then pattern. A software tool then can create the skeleton of test cases that express these clauses. These are run in the test tool. They should fail. You implement code until the tests pass. This forms your design.

A software tool (e.g. lexer/parser) can generate an implementation (deferred) stub along with a basic implemented class (see attached). From that same BDD-spec, it can also generate a basic set of unit tests on the primary Command features (Scenarios) from the BDD-spec text.

You are correct that such test code exercising the generated production code ought to now run and fail. The programmer is then responsible to provide the "passing implementation".

> I don't know if it is the best design method out there. Bertrand Meyer wrote a book about how he doesn't like the agile methods very much. The thing that is nice is that you create a framework of tests that you can run when you refactor code to see if you have broken something.

That is true of all regression and integration testing in general. One does not need BDD for that. The important part of BDD is providing the "Three Amigos" with a place to begin.

Bertrand has suggested that the basic Eiffel code (e.g. deferred classes) provide a suitable stand-in for BDD specifications. This can work well if your non-technical BA (e.g. "product person") is shadowed by a programmer. Another choice is to train your BA in basic Eiffel programming, allowing them to write the deferred classes themselves, alone, without assistance. Try that in reality! I have. It doesn't work well. Most BA people barely manage to handle the BA job itself correctly, much less the mind-meld required to add even basic programming skills. This is the core idea of "Right-tool-Right-job": the BA is a non-technical person who needs a non-technical tool (e.g. Notepad or other text editors).

We can all dance around whether we like Agile methods or not. The matter has become obfuscated and confused over the years to where we might debate the matter forever. That's a waste of time, in my not-so-humble opinion :-)

> Another aspect of this approach is using Mock objects. These are good for when you don't have the interface for a class worked out, so you fake results until enough pieces are in place to figure it out. Mock objects fit into the test structure and are controlled by them. In the past I have worked on trying to create Mock objects in Eiffel and it wasn't very easy. The calling patterns used in DBC make it tricky.

And finally—Rubber meet Road, Road let me introduce you to Rubber!

The BDD specifications (as raw text with a grammar) will not be written in a way that code and tests can be generated and auto-executed the first time out. The text written by the BA will most likely need a programmer to review and make suggestions for modifications. They will most likely bounce that back-and-forth for a while until both are satisfied with the result. As a part of this process, the programmer will attempt to run the BDD-lexer/parser and then look at the code generated to see if it makes sense. This will be his or her litmus test that the BDD-spec is finally workable further down the CI/CD pipeline.

Over time, the BA will learn from this exchange of ideas how to best write his or her BDD specs. They might even learn (even indirectly) some of the expectations of the programmer (e.g. how to name things properly, structures that work, and so on).

I listened to a podcast interview of a young lady working as a BA for the NAIC who went through this learning curve with her team (BA + Programmer + QA) and this was precisely her experience. She mentioned her lack of technical knowledge and lack of desire to become a programmer. She mentioned her need to lean on the Programmer and QA person(s) to help her shape her work product correctly to the intended target (code generation and test generation—be it automated or manual). Either way, if I had any inclination of sitting this gal down and trying to teach her basic Eiffel as a "spec language" for BA's, her talk squashed that hope in about 45 minutes of listening to what she had to say.

But—I am digressing from your primary and excellent point—MOCK OBJECTS! In my personal experience, the world is messy. On the one hand, there are certain mocks that can be easily written or even generated. The Gherkin grammar that allows some basic test-data mocking, which is (in turn) used to generate mock test objects, is intriguing. On the other hand, my experience has also encountered the complex test-setup code, where the code written to prepare for a testable state is far more complex than the test itself. There is a code smell there, yes?

Because of these things—I think it is imperative to get the design very close to correct from the start. An ounce of prevention (correct-first designs) is worth a pound of cure (later refactors of poor designs). Because of the non-technical minds of most BA people, I am inclined to think that BDD specifications are a very useful tool to translate user desire into blueprint specifications that allow programmers to get the code right from the start.

This fact is borne out by Namcook in various studies and papers: getting defects out at the requirements production stage has a tremendous impact on the software quality later on. Each step along the delivery pipeline has the ability to either produce or retard defects. Design-by-Contract is one of those tools for defect reduction. However, DbC is only as good as the requirement specifications driving it. Therefore, if there is an earlier way to describe the Client-Supplier (BDD) relationship, then we ought to take it! We will be rewarded with better quality and fewer defects overall.

CONCLUSION
===========

Your point that BDD is bigger than just generating code (it's about the design) is spot on! However—products like Cucumber with Gherkin are demonstrating value—that is—better and higher quality specifications, some of which can be used to auto-generate both code and tests of that code—at least in skeletal form.

My hope is for such tools to show up in EiffelStudio. I would like to see ES grow to where it is more than a programmer's IDE, but is a team-based portal tool that facilitates each team player with the proper tools in the team context and along the product delivery pipeline. To this end, ES needs not only its IDE, but an EiffelStudio Server, which helps to coordinate the efforts of the team. It ought also be able to reach out, integrate, and use the facilities of tools like GitHub and GitLab and others (e.g. think Microservices and Cloud delivery tools). It ought also play nice with other QA tools like playback-and-record tools for GUI testing and so on.

Kindest regards,

Larry

Larry Rix

Jun 20, 2021, 8:47:59 AM

to Eiffel Users

Stupid Google failed to add the attachments mentioned above.

Here they are (hopefully this time)!

Note that the BDD-spec is not Gherkin specifically, but is Gherkin-inspired. I have modified it somewhat to provide the needed information for appropriate Eiffel code and test generation. Hopefully, the added bits as they relate to the generated code are self-evident.

clan...@earthlink.net

Jun 20, 2021, 3:27:38 PM

to Eiffel Users

I think the problem with what you have created here is that it has implementation intertwined with design.

BDD is supposed to describe behavior, not implementation. So all the bracketed material in your BDD spec should be removed.

The output of the BDD spec (Gherkin) should be a test implementation, which should run in AutoTest.

We run into problems already. I think AutoTest treats every method as a test and calls it. I don't even know if there is any order to it.

I would think that the tests would look like:

a command that is the scenario name,

a require for each given, which is in turn a predicate feature

a query for each when, and a conjunction of their results with some kind of assertion coded in

an ensure clause with a predicate for each then.

It might be that AutoTest only calls commands; that would be a lucky coincidence. You could hide everything else in predicates.

Carl

clan...@earthlink.net

Jun 20, 2021, 4:53:21 PM

to Eiffel Users

To follow up a little: I've been experimenting with AutoTest. It turns out a test method is executed if it is exported to ANY, takes no parameters, and returns no result. So my pattern from above should work.

Carl

Larry Rix

Jun 20, 2021, 5:35:03 PM

to Eiffel Users

On Sunday, June 20, 2021 at 3:27:38 PM UTC-4 clan...@earthlink.net wrote:

> I think the problem with what you have created here is that it has implementation intertwined with design.

The created code has no implementation beyond the API. The design as presented in the BDD spec is expressed in the API.

> BDD is supposed to describe behavior, not implementation. So all the bracketed material in your BDD spec should be removed.

There is no implementation. Only API. The only implementation to be found is in the calls to class command features in the test code. That is not production implementation, nor design.

Let's ensure we have a good working definition of "behavior". This is encapsulated in the Client-Supplier Relationship. The contractual relationship of Rights-vs-Obligations for Client and Supplier is precisely what we expect of their behavior. The "how" of how they get their jobs done is the undetermined implementation detail. Nothing in the BDD spec, nor in the generated code says "how" each command (scenario/example) accomplishes its job. The only code present is descriptively named stubs for command and DbC assertion queries, which semantically tell the story of the Client-Supplier relationship alone. That's the point of BDD. :-)

> The output of the BDD spec (Gherkin) should be a test implementation, which should run in AutoTest.

This is what is there.

> We run into problems already. I think AutoTest treats every method as a test and calls it. I don't even know if there is any order to it.

This is a detail of deciding what needs to be called from a test versus not.

> I would think that the tests would look like:
>
> a command that is the scenario name,

This is what is demonstrated.

> a require for each given, which is in turn a predicate feature

This is what is demonstrated.

> a query for each when, and a conjunction of their results with some kind of assertion coded in

This is what is demonstrated.

> an ensure clause with a predicate for each then.

This is what is demonstrated.

> It might be that AutoTest only calls commands; that would be a lucky coincidence. You could hide everything else in predicates.

This is certainly a great goal to have!

Larry Rix

Jun 20, 2021, 5:40:26 PM

to Eiffel Users

You are precisely correct about classes that AutoTest recognizes and the features it recognizes as "test code". At the class level, it needs to first inherit from EQA_TEST_SET. From there, you are correct: exported to ANY, no arguments, and no Result.

clan...@earthlink.net

Jun 20, 2021, 5:42:27 PM

to Eiffel Users

So you agree that you don't need any bracketed statements in the BDD spec? Why are they there?

You should look at what you have implemented again. The test code has no require or ensure clauses.

Carl

Larry Rix

Jun 21, 2021, 11:01:46 AM

to Eiffel Users

On Sunday, June 20, 2021 at 5:42:27 PM UTC-4 clan...@earthlink.net wrote:

> So you agree that you don't need any bracketed statements in the BDD spec? Why are they there?

I do not agree at all. The square-bracketed identifiers are there to provide an identifier name for whatever grammatical token it is representing. This will be used to generate feature names.

> You should look at what you have implemented again. The test code has no require or ensure clauses.

Test code is not what needs require (precondition) and ensure (post-condition) assertions. The production code is where that is applied. Test code serves one of at least two purposes:

1. It exists to be the calling Client of the production code under test. As such, it needs no assertions of its own. However, as the Client-caller it is responsible for creating an instance of the class with the feature to be tested and to ensure the Supplier preconditions are met before calling.

2. It exists to test Supplier results beyond the scope of the Supplier post-condition ensure assertions.
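
A small sketch of both purposes, again in Python with `unittest` standing in for AutoTest. The supplier class and the contracts shown on it are assumptions made for illustration; only the names `maker_starts_game_with` and "silky" come from the spec discussed in this thread.

```python
import unittest


class WordGuessGame:
    """Hypothetical supplier; the contracts live here, not in the test."""

    def __init__(self):
        self.word = None
        self.is_started = False

    def maker_starts_game_with(self, word):
        assert not self.is_started, "require: no game in progress"
        assert word, "require: word is not empty"
        self.word = word
        self.is_started = True
        assert self.is_started, "ensure: game is started"


class WordGuessGameTest(unittest.TestCase):
    def test_maker_starts_game_with(self):
        # Purpose 1: act as the client. Create the supplier and make sure
        # its precondition holds before calling the feature under test.
        game = WordGuessGame()
        self.assertFalse(game.is_started)
        game.maker_starts_game_with("silky")
        # Purpose 2: check a result the postcondition does not cover.
        self.assertEqual(game.word, "silky")


suite = unittest.defaultTestLoader.loadTestsFromTestCase(WordGuessGameTest)
result = unittest.TestResult()
suite.run(result)
print(result.wasSuccessful())
```

The test method itself carries no contract of its own; it only arranges a valid call and then looks past what the supplier already guarantees.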

Larry Rix

Jun 21, 2021, 11:11:31 AM

to Eiffel Users

However, you do bring up an excellent point, and that is the placement of the [Identifier] part. I do not have to place it after, but could place it between the Keyword and the Descriptive_text, such that:

Line ::= Keyword ":" "[" Identifier "]" Descriptive_text

For example, instead of ...

Title: Guess the word game [WORD_GUESS_GAME]

... one would write:

Title: [WORD_GUESS_GAME] Guess the word game

The other choice is to leave it to the writer to determine if the Identifier comes before or after the Descriptive_text.

Either way—the result is the same. The ID tag is there to give the parser/lexer/generator a meaningful Eiffel identifier and the Descriptive_text becomes Eiffel comments as they relate to where the Identifier is applied.
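
For what it is worth, accepting the Identifier in either position is cheap for a parser. Here is a minimal Python sketch using a regular expression; the pattern and the `parse_line` helper are illustrative assumptions, not part of any existing tool.

```python
import re

# Keyword ":" then an optional [Identifier] before and/or after the text.
LINE = re.compile(
    r"^(?P<keyword>\w+):\s*"
    r"(?:\[(?P<id_before>\w+)\]\s*)?"
    r"(?P<text>.*?)"
    r"(?:\s*\[(?P<id_after>\w+)\])?\s*$"
)


def parse_line(line):
    """Split a spec line into (keyword, identifier, descriptive_text)."""
    match = LINE.match(line)
    if match is None:
        raise ValueError(f"not a spec line: {line!r}")
    identifier = match["id_before"] or match["id_after"]
    return match["keyword"], identifier, match["text"].strip()


print(parse_line("Title: Guess the word game [WORD_GUESS_GAME]"))
print(parse_line("Title: [WORD_GUESS_GAME] Guess the word game"))
```

Both placements yield the same keyword, identifier, and descriptive text, so the choice can be left to the writer without complicating the generator.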

clan...@earthlink.net

Jun 21, 2021, 11:57:33 AM

to Eiffel Users

The problem is that it doesn't fit the Gherkin grammar, so you are inventing something new.

When you say you are testing production code, that means you are defining implementation, at the BDD level.

Carl

clan...@earthlink.net

Jun 21, 2021, 12:00:28 PM

to Eiffel Users

I don't know if you have looked at the BON method of design. You can find the book about it online. It fits extremely closely with Eiffel. A fellow named Kiniry (?) had some classes online about creating Java tools implementing BON. He used to post on this forum.

Carl

Larry Rix

Jun 21, 2021, 12:09:56 PM

to Eiffel Users

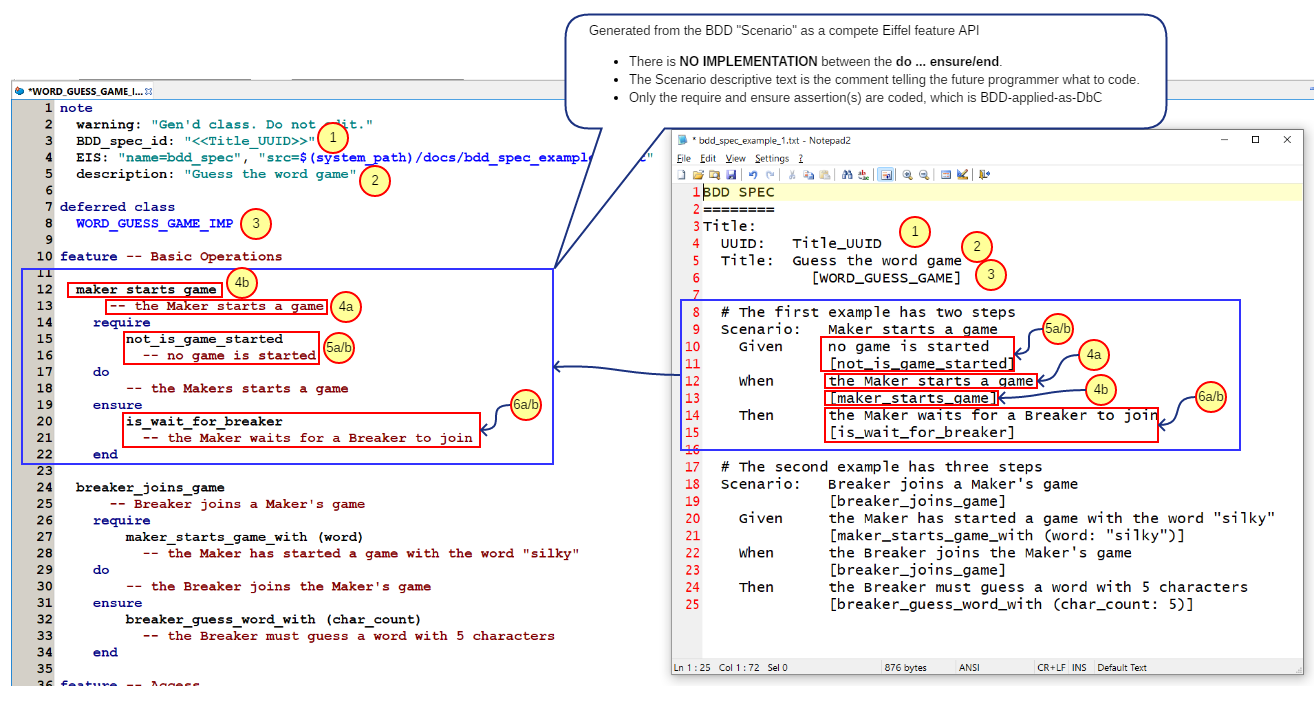

Please allow me to demonstrate through an illustration how the BDD spec is transformed into Eiffel code per my envisioned parser/lexer.

NOTE: Each numeral in a circle represents how the data from the specification is transferred into the generated Eiffel class.

1. The BDD specification is given a UUID to ensure the generated class is forever linked to its source BDD specification regardless of refactoring or renaming.

2. The BDD spec Title transfers to the "description" of the class note clause.

3. The Title square-bracketed identifier is used to name the Eiffel class.

4. The square-bracketed Identifier is used for the feature name (4a) and the Descriptive_text is used as the feature comment (4b).

5. The Given_part Descriptive_text is used as a comment for the lone precondition assertion provided. The square-bracketed part is the BOOLEAN feature created as stub code.

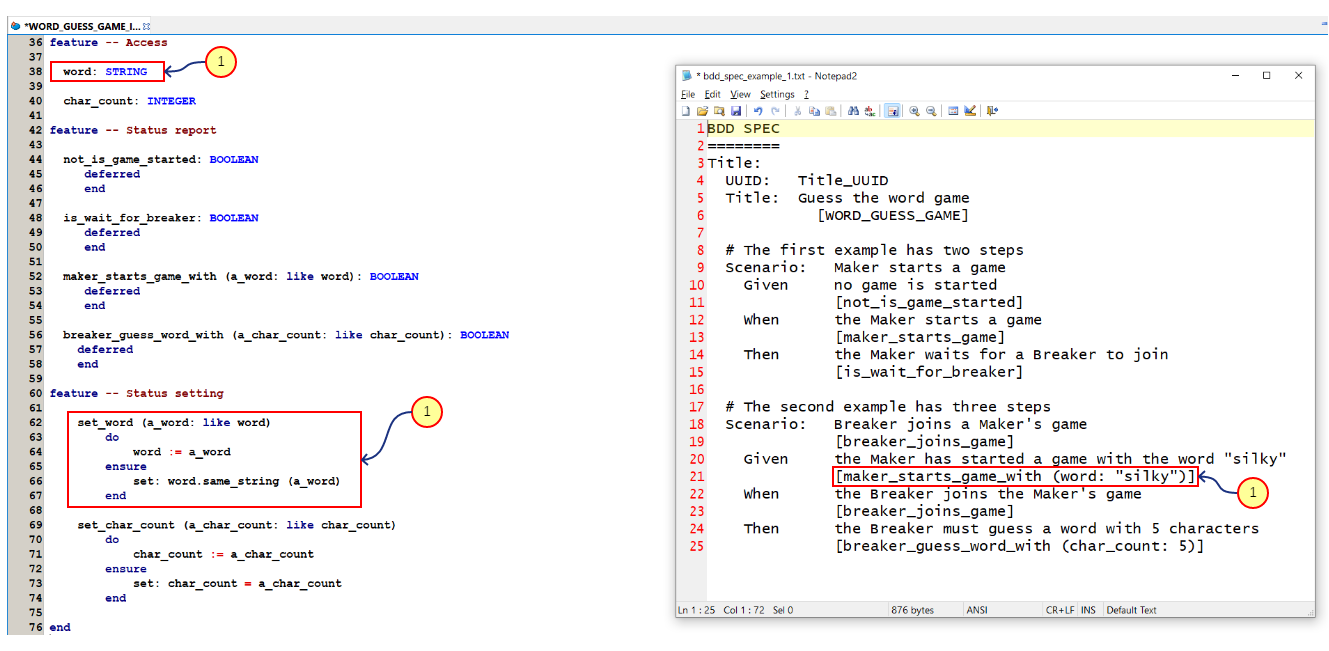

The parser/lexer/generator (i.e., a BDD compiler) can also use the BDD notation I am designing to detect basic and complex types. See below.

In this example, the `maker_starts_game_with' has an argument example, where "silky" is parsed to mean that a feature named `word' is of type STRING. Therefore, the emitted code creates a word: STRING property with an accompanying setter (e.g. set_word). This will be true of all basic types (e.g. those with clear manifest constants like STRING, BOOLEAN, INTEGER, REAL, DOUBLE, and even TUPLE). Complex types will be detectable and generatable based on type names of BDD specs (see WORD_GUESS_GAME above). Therefore, one might pass a game: WORD_GUESS_GAME, where the parser/lexer searches through the BDD specs looking for that "class" specification and then makes the appropriate reference to ensure type-safety.
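As a rough illustration of the type detection described here, the following Python sketch guesses an Eiffel basic type from a sample value and emits an attribute with a setter as text. The function names, the literal patterns, and the exact layout of the emitted Eiffel are assumptions made for illustration; the actual generator may work quite differently.

```python
import re


def infer_eiffel_type(sample: str) -> str:
    """Guess an Eiffel basic type from a sample value written in a spec."""
    s = sample.strip()
    if len(s) >= 2 and s[0] == '"' and s[-1] == '"':
        return "STRING"
    if s.lower() in ("true", "false"):
        return "BOOLEAN"
    if re.fullmatch(r"[+-]?\d+", s):
        return "INTEGER"
    if re.fullmatch(r"[+-]?\d+\.\d+", s):
        return "REAL"  # assumption: could equally be mapped to DOUBLE
    if s.startswith("[") and s.endswith("]"):
        return "TUPLE"
    raise ValueError(f"no basic type recognized for {sample!r}")


def attribute_with_setter(name: str, sample: str) -> str:
    """Emit Eiffel text for an attribute and its setter (hypothetical layout)."""
    eiffel_type = infer_eiffel_type(sample)
    return "\n".join([
        f"\t{name}: {eiffel_type}",
        "",
        f"\tset_{name} (a_{name}: {eiffel_type})",
        "\t\tdo",
        f"\t\t\t{name} := a_{name}",
        "\t\tensure",
        f"\t\t\t{name}_set: {name} = a_{name}",
        "\t\tend",
    ])


print(attribute_with_setter("word", '"silky"'))
```

Complex types such as WORD_GUESS_GAME are deliberately left out of this sketch, since they need a lookup across the other BDD specs rather than a pattern match on a literal.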

Larry Rix

Jun 21, 2021, 12:15:11 PM6/21/21

to Eiffel Users

On Monday, June 21, 2021 at 11:57:33 AM UTC-4 clan...@earthlink.net wrote:

The problem is that it doesn't fit the Gherkin grammar, so you are inventing something new.

And this is bad, why again? :-) ... where is the universal First Book of Gherkin for the Gospel of BDD? Is Gherkin flawless? I think not. But that's me, right? :-)

When you say you are testing production code, that means you are defining implementation, at the BDD level.

Not at all (see the provided illustrations). There is no implementation being provided. There is an API with nothing between the "do ... end" (or "do ... ensure"). Implementation code is not equal to everything beyond test code. Implementation is that code that provides the How and not the What. The API is empty and has no implementation (How) code at all. The API describes the What (is happening)—that is—the behavior at a high level with only the Design-by-Contract rules to guide the correct way for a Client to call and the rules by which a Supplier knows when it has done a proper job.

Larry Rix

Jun 21, 2021, 12:21:13 PM6/21/21

to Eiffel Users

On Monday, June 21, 2021 at 12:00:28 PM UTC-4 clan...@earthlink.net wrote:

I don't know if you have looked at the BON method of design. You can find the book about it online.

Yes. BON is built into Eiffel Studio. BON is great. It is a wonderful tool for what it is, but it is not a tool for non-technical people like BA's and QA's. If you want to glaze over the eyes and minds of a BA, just show them BON or Eiffel code (or both). Show them a BDD specification and they will immediately "get it". :-)

It fits extremely closely with Eiffel.

Yes, it does. Bertrand and others at Eiffel Software knew this. It is why BON is built in as a tool in Eiffel Studio. :-)

A fellow named Kinery (?) had some classes online about creating Java tools implementing BON. He used to post on this forum.

BON is language-agnostic—or at least it is language-friendly. So, that BON can model Java is not surprising. I think I have seen that somewhere back in the deep dark history of my personal experience. :-)

Larry Rix

Jun 21, 2021, 12:33:27 PM6/21/21

to Eiffel Users

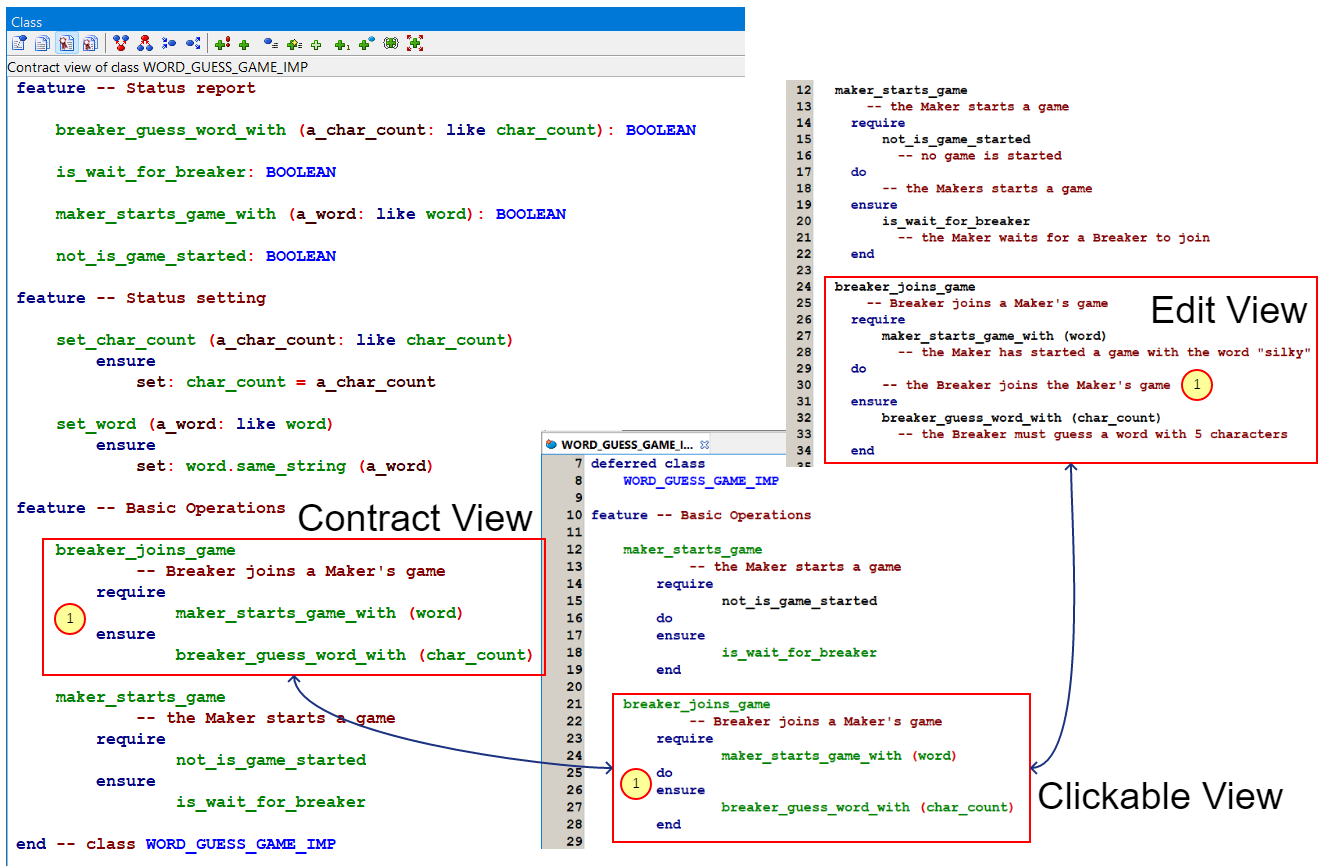

Here is another way to see that there is NO IMPLEMENTATION CODE at all. Only the API. At each place labeled as #1 (circled number), you will see that there is no implementation code given at all. The only code found is the API (i.e., the name of the feature plus the assertion code for require preconditions and ensure post-conditions).

clan...@earthlink.net

Jun 21, 2021, 3:10:45 PM6/21/21

to Eiffel Users

EiffelStudio doesn't really implement BON as described in the book. ES shows the class chart in a UML-like form, and you can edit features and the like. The textual form from the book has an assertion language built into it. Also, it has a reversible design, meaning that with the right tool, you can work at an informal level, refine it to a more formal one, and go backward and forward with changes being moved back and forth. I don't know if anybody ever implemented it, in any language. There was somebody in York who worked on it, if I remember correctly.

I think the process you have come up with is more or less akin to what I was suggesting. My goal would be not to have any bracketed phrases and to generate all code based on the scenario clause wording. This would all be placed in the test class. The Cucumber version does this -- using only method calls. The trick of using attributes to save parameters should work in test code also.

The interpretation of the word "Given" is an interesting question. Do you mean this was the responsibility of the client, as in the Eiffel world, or is it something that is going to be made to happen, which is more of a behavior-friendly idea? The "when" implementation starts in the same place no matter which you do, and ensure works the same in both methods.

Unfortunately, while this all makes for an interesting discussion between us, I don't think it really proceeds very far. The Eiffel folks are already busy enough.

Carl

Larry Rix

Jun 22, 2021, 12:04:44 PM6/22/21

to Eiffel Users

Outstanding response!

On Monday, June 21, 2021 at 3:10:45 PM UTC-4 clan...@earthlink.net wrote:

EiffelStudio doesn't really implement BON as described in the book. ES shows the class chart in a UML-like form, and you can edit features and the like. The textual form from the book has an assertion language built into it. Also, it has a reversible design, meaning that with the right tool, you can work at an informal level, refine it to a more formal one, and go backward and forward with changes being moved back and forth. I don't know if anybody ever implemented it, in any language. There was somebody in York who worked on it, if I remember correctly.

This is excellent news (e.g. BON has a textual version or grammar). If that is the case, then like you, I am surprised that no one wrote a parser/generator against it where code can be produced from the BON notation! That's worth exploring. Thank you for the tip!

I think the process you have come up with is more or less akin to what I was suggesting.

I thought so as well, which was part of my confusion. I am glad we are tracking together again! :-)

My goal would be not to have any bracketed phrases and to generate all code based on the scenario clause wording. This would all be placed in the test class. The Cucumber version does this -- using only method calls. The trick of using attributes to save parameters should work in test code also.

RE: [Bracketed_text]: the reason I chose to add this feature to the grammar is that sentences make for horrible feature names (routines, attributes, properties, methods, et al.). So, the idea behind the Bracketed_text is to force the BDD spec writer to summarize his/her long-winded descriptive text (sentence) in a smaller, more compact thing that can be used as a reasonable feature name in generated code. That way, the spec writer can be as verbose as they feel necessary, but it frees the parser/lexer/generator from having to either generate equally verbose names or try to do that slimming down in some stupid way that ends up being just as horrid as a long verbose name built from a sentence.

The interpretation of the word "Given" is an interesting question. Do you mean this was the responsibility of the client, as in the Eiffel world, or is it something that is going to be made to happen, which is more of a behavior-friendly idea?

The Given part is very much a cousin of the Then part in that they both have stateful conditions, but the question is: What is the scope of those conditions and who is responsible?

For example: Some of the precondition statefulness is under the control of the calling Client code. Another part of it is the overall setup state (e.g. building mocks and so on). The client caller will be "set down in the middle" of an existing state, where it inherits that by virtue of the running software. Another part of the state will be its direct responsibility. So, to say it another way: There is precondition state that is indirectly surrounding the Client when it calls and then there is directly controlled and responsible-for state that belongs to the Client caller.

The same is found in the resulting state. The post-conditions that the Supplier must adhere to may be a smaller subset of the overall resulting post-condition state. For example: The supplier may be responsible for building a list of widget things, but it is not responsible for how many widgets are on the list. We could explore this point ad nauseam. The fact would remain that the supplier post-condition assertions do not tell the whole story of the overall results of its work. Therefore, the TDD test code picks up at that point. So, the Supplier produces a list and the TDD test code looks at that list and further decides if the resulting list meets certain assertion rules.

The "when" implementation starts in the same place no matter which you do, and ensure works the same in both methods.

That is correct! The When part has an API with precondition and post-condition rules, but one does not need to provide the "How" of it, only the fact that it does do it (ultimately) and the preconditions and post-conditions that must hold true on either side of its execution.

Unfortunately, while this all makes for an interesting discussion between us, I don't think it really proceeds very far. The Eiffel folks are already busy enough.

I honestly do not need them to do anything. :-)

I am part way through writing the BDD-spec parser/lexer/compiler/generator as I am writing this to you. I already have some test code that is consuming a BDD specification and producing a token list, which will then feed a code-generator (that's the next part for me to write). As soon as I have that working, I will share that code with everyone to see what everyone thinks.

GOAL: The goal will be to have a tool available to Eiffel programmers/BAs who can then build BDD specifications, have them syntax-checked and code generated according to a resulting design pattern, and then utilize that code however they see fit in their CI/CD pipeline. The hope will be that the BDD specs will then serve as fodder for QA and Acceptance Testing. What will be most interesting to me is to give this thing a shot on points where the code is dependent on external input (e.g. files from a server or keystrokes and mouse actions).

Kindest regards,

Larry

Larry Rix

Jun 22, 2021, 12:21:48 PM6/22/21

to Eiffel Users

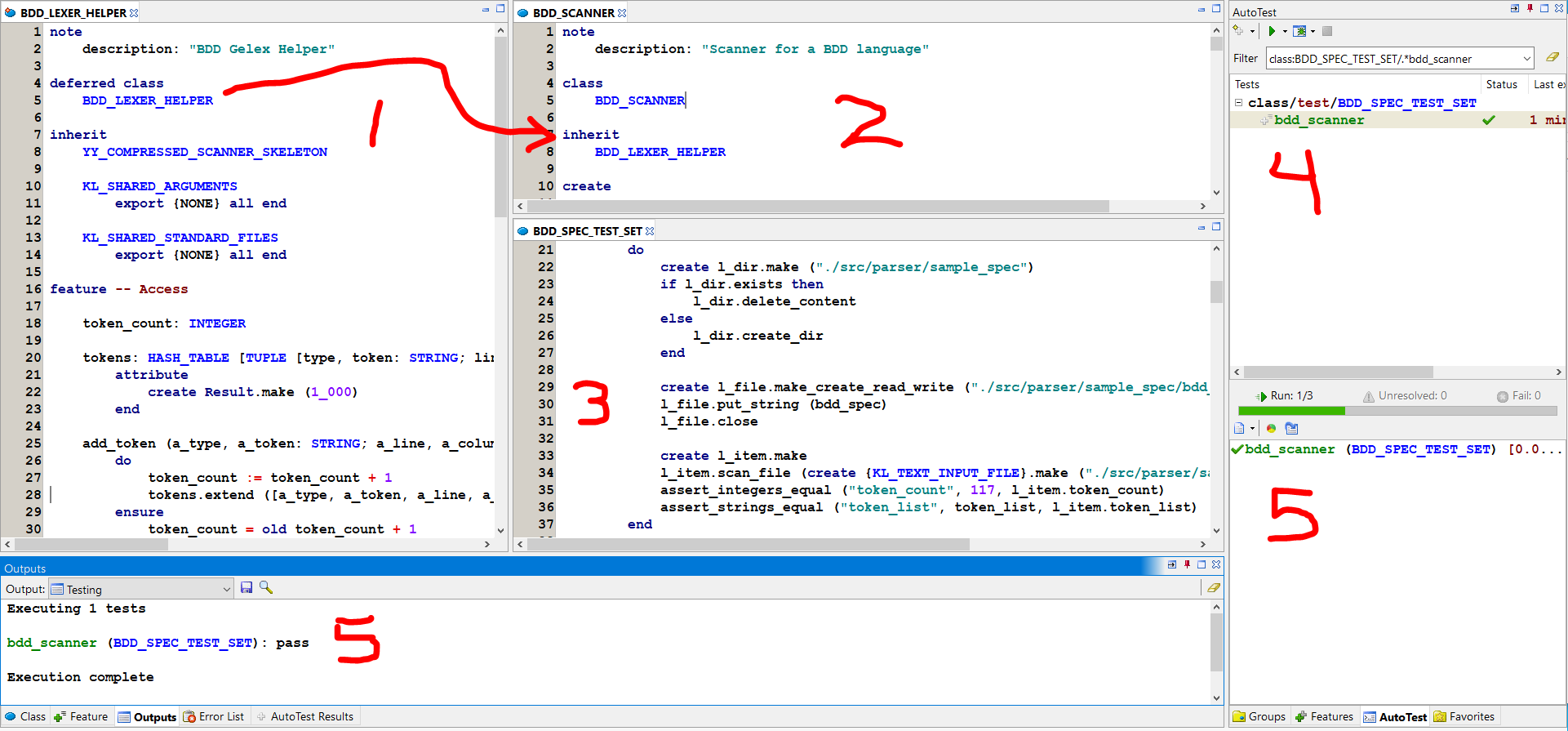

In the accompanying illustration, you will find a screenshot of my working SCANNER (so far). The BDD_SCANNER class is what was generated by Gelex (Gobo Eiffel Lex). It inherits from BDD_LEXER_HELPER, which has the code to parse a source file (BDD spec text file) and produce a list of "tokens". These tokens can then be used to sequentially generate all of the needed Eiffel code that represents the BDD specification as production code, plus the TDD code calling and exercising it. The generated classes will be deferred, where the receiving programmer will be responsible for providing the necessary implementation code to the API (both deferred production and test code).

1. BDD_LEXER_HELPER = The code added to the BDD_SCANNER

2. BDD_SCANNER = The code generated by Gelex, which is the parser.

3. BDD_SPEC_TEST_SET = The TDD tests I am using to ensure that the SCANNER is doing the right thing (see further illustrations below)

The other illustrations are attached for your convenience.

Larry Rix

Jun 22, 2021, 12:30:52 PM6/22/21

to Eiffel Users

Note that the SCANNER specification is really rough and not ready for prime-time (even alpha testing). So, don't read too much into the grammar spec just yet.

For example: the SENTENCE does not work (Words are gathered up instead). So, I have a choice. I'd like to get SENTENCE working so that WORD becomes superfluous and not needed. In that case, I can just know what the sentences are.

There is much work yet to do based on some form of the Gherkin specification, which is serving as an inspiration in the entire process (but is not "gospel" as already indicated before).

I really want to get to a place where one can refer to other specs (classes with routines/properties) and have the code generated accordingly. I am imagining a program that identifies all of the BDD spec files, parses each of them in turn, builds a cross-reference table between them, and then (if everything is found and accounted for) starts generating code.
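The two-pass idea (collect every spec, cross-reference, then generate only if everything resolves) might be sketched in Python as follows. This assumes each spec has already been parsed into the list of type names it mentions; `build_cross_reference` and the GAME_SESSION spec are hypothetical names used only for illustration.

```python
from typing import Dict, List

# Types the generator would recognize without needing another spec.
BASIC_TYPES = {"STRING", "BOOLEAN", "INTEGER", "REAL", "DOUBLE", "TUPLE"}


def build_cross_reference(specs: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Map each spec to the other specs it refers to.

    `specs` maps a spec identifier to the type names used in that spec.
    Raises LookupError if a non-basic type has no matching spec, so that
    code generation only starts when everything is accounted for.
    """
    xref: Dict[str, List[str]] = {}
    missing: Dict[str, List[str]] = {}
    for name, used_types in specs.items():
        refs = [t for t in used_types if t not in BASIC_TYPES]
        xref[name] = refs
        unresolved = [t for t in refs if t not in specs]
        if unresolved:
            missing[name] = unresolved
    if missing:
        raise LookupError(f"unresolved spec references: {missing}")
    return xref


specs = {
    "WORD_GUESS_GAME": ["STRING"],
    "GAME_SESSION": ["WORD_GUESS_GAME", "INTEGER"],  # hypothetical second spec
}
print(build_cross_reference(specs))
```

Failing before any code is emitted avoids generating classes that reference types no spec defines.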

I would also like it to be capable of making at least simple "test data" to pass, like strings, numbers, lists, tuples, and so on. If it can parse and detect "basic types", then we might have the capacity to make tests that actually provide some "sample data" based on the BDD spec made by the BA, which also presents the chance to take manual testing off of the QA people on the other side. Even if the BA/Programmer/QA are all the same person!

clan...@earthlink.net

Jun 22, 2021, 3:22:18 PM6/22/21

to Eiffel Users

If you are considering feature requests, then I would suggest using a bracket-free Gherkin feature file to create a test class pretty much like Cucumber's step definitions.

Don't worry about bad long stringy names; the good thing is that they raise the chance of being unique.

The result is something that can be run directly in AutoTest as a manual test.

You're not trying to make a complete file, just something you can copy and paste from. You would try to avoid clobbering people's work.

So:

- Gherkin Feature name -> test class name

- Scenario name -> individual test name (in the AutoTest sense)

- The test has no require and no ensure; it has do ... clause1, clause2, clause3, ... end

- Each clause becomes an Eiffel feature with the keyword stripped; it has the long ugly name and lives in a feature {NONE} section. It has some initial "I'm not done" exception.

- In scenario outline mode, the feature can be parameterized; you may have already determined how to work this.

- For the scenario outline, the do ... has some kind of iterator ... end calling the clauses.

- You would translate the scenario data into something simple, like an array of TUPLE with the scenario name to make it identifiable.
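A minimal Python sketch of the mapping listed above, emitting an Eiffel test class skeleton as text. The scenario wording is made up for illustration, the `_TEST_SET` suffix and the `assert ("not_implemented", False)` stub are assumptions, and the sketch ignores scenario outlines and clause names that would not be valid Eiffel identifiers (e.g. ones starting with a digit).

```python
import re
from typing import List, Tuple

STEP_KEYWORDS = ("Given", "When", "Then", "And", "But")


def to_identifier(text: str) -> str:
    """Turn free text into a lower-case, underscore-separated name."""
    return re.sub(r"[^a-z0-9]+", "_", text.lower()).strip("_")


def strip_keyword(step: str) -> str:
    """Drop a leading Gherkin step keyword, if present."""
    first, _, rest = step.strip().partition(" ")
    return rest if first in STEP_KEYWORDS else step.strip()


def generate_test_class(feature: str,
                        scenarios: List[Tuple[str, List[str]]]) -> str:
    """Feature -> test class, scenario -> test, clause -> feature {NONE}."""
    class_name = to_identifier(feature).upper() + "_TEST_SET"
    lines = ["class", f"\t{class_name}", "",
             "inherit", "\tEQA_TEST_SET", "",
             "feature -- Tests", ""]
    clauses: List[str] = []
    for scenario_name, steps in scenarios:
        lines += [f"\ttest_{to_identifier(scenario_name)}", "\t\tdo"]
        for step in steps:
            clause = to_identifier(strip_keyword(step))
            lines.append(f"\t\t\t{clause}")
            if clause not in clauses:
                clauses.append(clause)  # shared clauses are emitted once
        lines += ["\t\tend", ""]
    lines += ["feature {NONE} -- Clauses", ""]
    for clause in clauses:
        # Stub that fails until someone writes the real step code.
        lines += [f"\t{clause}", "\t\tdo",
                  '\t\t\tassert ("not_implemented", False)',
                  "\t\tend", ""]
    lines.append("end")
    return "\n".join(lines)


print(generate_test_class(
    "Guess the word",
    [("Maker starts a game",
      ["Given the maker has a word",
       "When the maker starts a game",
       "Then the game is started"])]))
```

Because clause names are derived from the full step wording, two scenarios that share a step end up calling the same private feature.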

Motivation for these suggestions:

I played with AutoTest a little more, and it calls tests in a random order.

Also, it treats returns from exceptions differently. There's pass (green), fail (red), and unresolved (yellow).

If you fail a require or ensure clause on a test, you get a yellow; if you fail a require on a called class, you get a yellow.

If you assert a falsehood or cause an exception in the test, you get a red.

If you fail the ensure in a called class, you get a red.

If you cause an exception in the called class, you get a red.

It is not the end of the world for people to decide that yellow is the same as red, but it adds complication.

One thing I know for sure is that people hate automatically generated code, and will throw it away almost without thinking.

I think I can see the motivation for how you are doing the generator, you want two classes of the given name so that one is auto-generated and one can be coded by hand.

I had assumed that you were using the bracketed items for generating a first implementation for a target class; that was why I was suspicious of the design/implementation intermix.

It might be that there is a way to make this work. The Cucumber method seems to be using Java's nested class approach to build up a target class, using the step definition file as the scratch pad.

I do think you should use the @-sign tags for marking instead of brackets, then in the generated code, these would turn into notes (along with given, when, etc) so people can tell how something was generated.

Are you intending to make a separate application, using a Lexer and Parser? This could probably be made to work using the console tool to copy and paste from.

Carl
