overlord has large mount of logs

144 views

Skip to first unread message

Leon

Aug 21, 2016, 9:24:33 PM8/21/16

to Druid User

Hi everyone,

We have choose druid recently, it is very impressive, the required disk size is dramatically less than similar products.

But now, we meet some issue after running a small cluster.

we have three hosts:

one for a historical and a middleManager

one for a overlord and a coordinate and a tranquility

one for a broker and a middleManager

it runs well in first hours, but after that, the overlord output large mount of logs like:

2016-08-22T01:03:08,902 INFO [Curator-LeaderSelector-0] io.druid.indexing.overlord.TaskLockbox - Task[index_realtime_NetInterfaceStatus_2016-08-20T12:00:00.000Z_0_0] already present in TaskLock[index_realtime_NetInterfaceStatus]2016-08-22T01:03:08,902 INFO [Curator-LeaderSelector-0] io.druid.indexing.overlord.TaskLockbox - Reacquired lock on interval[2016-08-20T12:00:00.000Z/2016-08-20T13:00:00.000Z] version[2016-08-20T12:36:19.493Z] for task: index_realtime_NetInterfaceStatus_2016-08-20T12:00:00.000Z_0_0

these logs output several time per millisecond.

I found the recent exception or error output is:

2016-08-22T01:03:06,388 INFO [Curator-LeaderSelector-0] io.druid.indexing.overlord.TaskMaster - Bowing out!2016-08-22T01:03:06,388 ERROR [Curator-LeaderSelector-0] io.druid.indexing.overlord.TaskMaster - Failed to lead: {class=io.druid.indexing.overlord.TaskMaster, exceptionType=class java.lang.reflect.InvocationTargetException, exceptionMessage=null}java.lang.reflect.InvocationTargetExceptionat sun.reflect.GeneratedMethodAccessor34.invoke(Unknown Source) ~[?:?]at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.7.0_91]at java.lang.reflect.Method.invoke(Method.java:606) ~[?:1.7.0_91]at com.metamx.common.lifecycle.Lifecycle$AnnotationBasedHandler.start(Lifecycle.java:350) ~[java-util-0.27.9.jar:?]at com.metamx.common.lifecycle.Lifecycle.start(Lifecycle.java:259) ~[java-util-0.27.9.jar:?]at io.druid.indexing.overlord.TaskMaster$1.takeLeadership(TaskMaster.java:141) [druid-indexing-service-0.9.1.1.jar:0.9.1.1]at org.apache.curator.framework.recipes.leader.LeaderSelector$WrappedListener.takeLeadership(LeaderSelector.java:534) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.recipes.leader.LeaderSelector.doWork(LeaderSelector.java:399) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.recipes.leader.LeaderSelector.doWorkLoop(LeaderSelector.java:441) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.recipes.leader.LeaderSelector.access$100(LeaderSelector.java:64) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.recipes.leader.LeaderSelector$2.call(LeaderSelector.java:245) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.recipes.leader.LeaderSelector$2.call(LeaderSelector.java:239) [curator-recipes-2.10.0.jar:?]at java.util.concurrent.FutureTask.run(FutureTask.java:262) [?:1.7.0_91]at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471) [?:1.7.0_91]at java.util.concurrent.FutureTask.run(FutureTask.java:262) [?:1.7.0_91]at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145) [?:1.7.0_91]at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615) [?:1.7.0_91]at java.lang.Thread.run(Thread.java:745) [?:1.7.0_91]Caused by: java.lang.IllegalStateException: SupervisorManager already startedat com.google.common.base.Preconditions.checkState(Preconditions.java:176) ~[guava-16.0.1.jar:?]at io.druid.indexing.overlord.supervisor.SupervisorManager.start(SupervisorManager.java:93) ~[druid-indexing-service-0.9.1.1.jar:0.9.1.1]... 18 more

and:

2016-08-22T01:03:06,367 ERROR [Curator-LeaderSelector-0] io.druid.indexing.overlord.TaskMaster - TaskMaster set a new Lifecycle without the old one being cleared! Race condition: {class=io.druid.indexing.overlo

rd.TaskMaster}

my druid version is 0.9.1.1, meta data using mysql

anyone has any ideas?

Thanks very much!

Fangjin Yang

Aug 25, 2016, 5:25:29 PM8/25/16

to Druid User

Hi Leon, can you describe you cluster a little bit, most notable, where is Zookeeper running?

Gian Merlino

Aug 26, 2016, 2:46:30 AM8/26/16

to druid...@googlegroups.com

Hmm, it looks like your Overlord is probably looping through leadership cycles really fast. Do you notice any other exceptions in the Overlord log? Perhaps it gains leadership, fails, and then continues in an infinite loop always failing for the same reason.

Gian

To view this discussion on the web visit https://groups.google.com/d/msgid/druid-user/8d086668-8205-44c4-9d82-20fbf78ad26f%40googlegroups.com.--

You received this message because you are subscribed to the Google Groups "Druid User" group.

To unsubscribe from this group and stop receiving emails from it, send an email to druid-user+unsubscribe@googlegroups.com.

To post to this group, send email to druid...@googlegroups.com.

LiangQing

Aug 29, 2016, 4:55:24 AM8/29/16

to druid...@googlegroups.com

Hi Fangjin,

Thanks for your reply,

The zookeepers is running with the same three nodes of druid cluster, each node running one zookeeper.

I have suspect that maybe our test clusters is too small, so we get three more powerful machines, each one has 32 cores and 32GB memory

And we think maybe imply is easy to deploy,

so in the new cluster, i have installed:

host 1: imply master.conf

host 2: imply data.conf

host 3: imply query.conf

all config is default conf in imply release directory, except:

- zookeepers running in host 1, host 2, host 3 (we add zk config in supervise/data.conf and supervise/query.conf) by add line '!p10 zk bin/run-zk conf'

- add data nodes to host 1 by adding: "historical bin/run-druid historical conf" and "middleManager bin/run-druid middleManager conf"

- change the meta data store to our mysql

- change the capacity of middleManagers to 30 by "druid.worker.capacity=30"

- every host running a tranquility node by adding: "!p95 tranquility-server bin/tranquility server -configFile conf/tranquility/monitor.json", monitor.json is our own data source schema

master.conf:

:verify bin/verify-java!p10 zk bin/run-zk confcoordinator bin/run-druid coordinator conf!p80 overlord bin/run-druid overlord confhistorical bin/run-druid historical confmiddleManager bin/run-druid middleManager conf!p95 tranquility-server bin/tranquility server -configFile conf/tranquility/monitor.json

data.conf

:verify bin/verify-java

!p10 zk bin/run-zk conf

historical bin/run-druid historical conf

middleManager bin/run-druid middleManager conf

# Uncomment to use Tranquility Server

!p95 tranquility-server bin/tranquility server -configFile conf/tranquility/monitor.json

# Uncomment to use Tranquility Kafka

#!p95 tranquility-kafka bin/tranquility kafka -configFile conf/tranquility/kafka.json

query.conf

:verify bin/verify-java:verify bin/verify-node!p10 zk bin/run-zk confbroker bin/run-druid broker confpivot bin/run-pivot conf!p95 tranquility-server bin/tranquility server -configFile conf/tranquility/monitor.json

after config and deploy the cluster, the cluster running very well for two hours, but after two hours, the data can not insert, every request to tranquility will response:

{"result":{"received":1,"sent":0}}

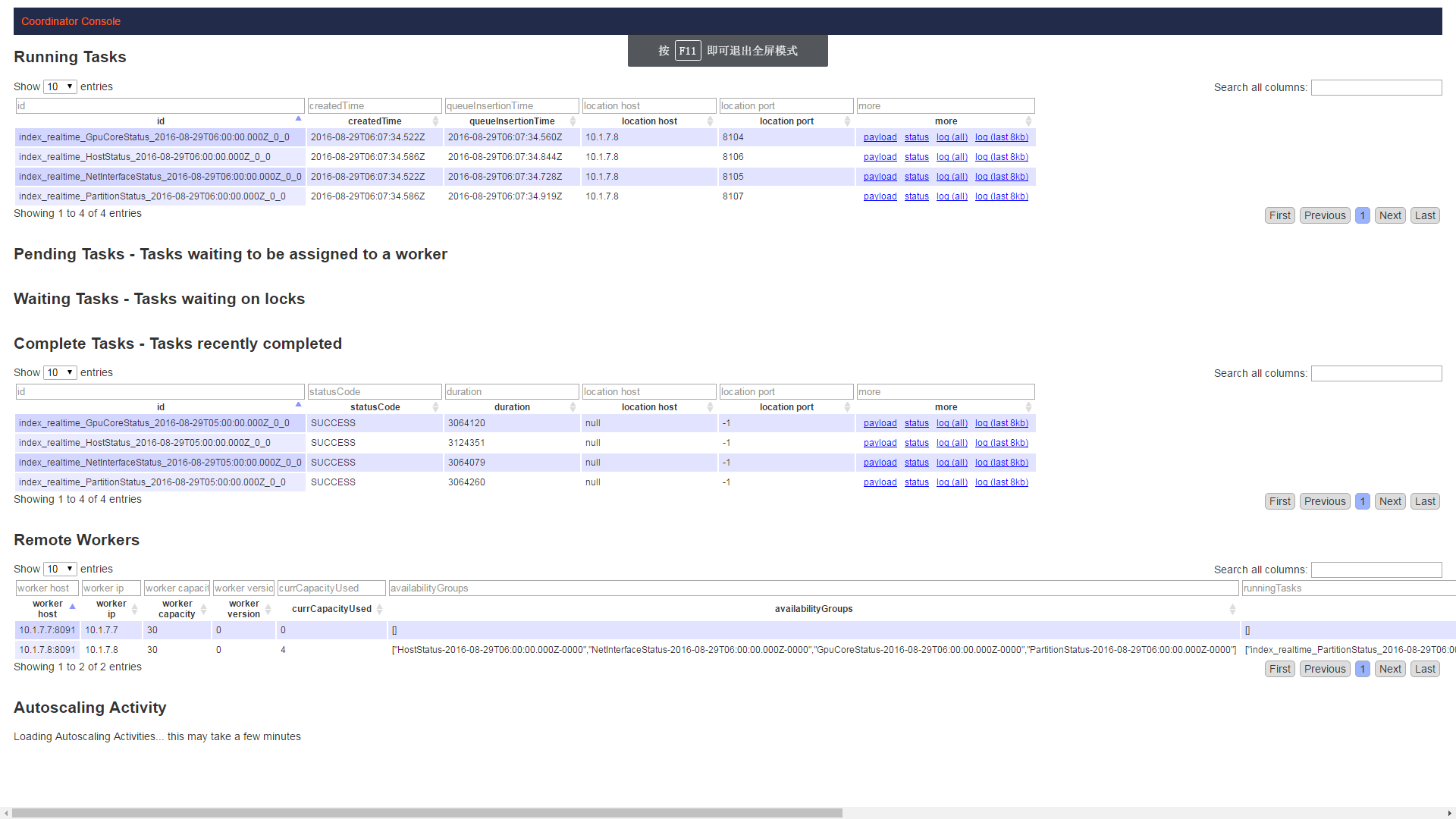

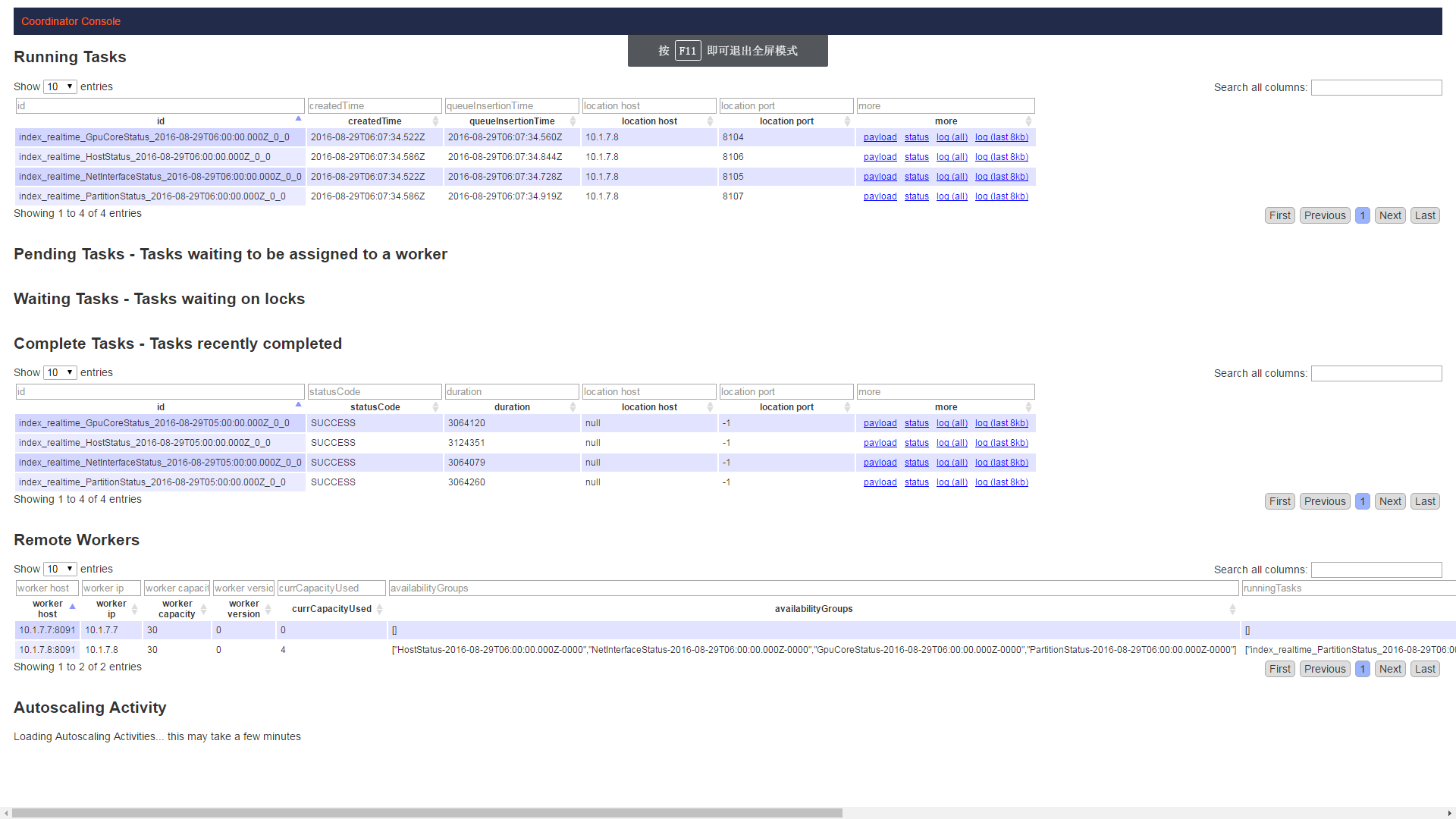

the overloard console:

There are a lot of errors in the historical.log of host 1:

2016-08-29T07:25:12,302 ERROR [ZkCoordinator-0] io.druid.server.coordination.ZkCoordinator - Failed to load segment for dataSource: {class=io.druid.server.coordination.ZkCoordinator, exceptionType=class io.druid.segment.loading.SegmentLoadingException, exceptionMessage=Exception loading segment[PartitionStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:26.133Z], segment=DataSegment{size=4817673, shardSpec=LinearShardSpec{partitionNum=0}, metrics=[count, total_sum, total_max, total_min, free_sum, free_max, free_min, used_sum, used_max, used_min, avail_sum, avail_max, avail_min, files_sum, files_max, files_min, freeFiles_sum, freeFiles_max, freeFiles_min, diskReads_sum, diskReads_max, diskReads_min, diskWrites_sum, diskWrites_max, diskWrites_min, diskReadBytes_sum, diskReadBytes_max, diskReadBytes_min, diskWriteBytes_sum, diskWriteBytes_max, diskWriteBytes_min, usedPercent_sum, usedPercent_max, usedPercent_min], dimensions=[id, ip], version='2016-08-29T05:25:26.133Z', loadSpec={type=local, path=/data/imply/druid/segments/PartitionStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:26.133Z/0/index.zip}, interval=2016-08-29T05:00:00.000Z/2016-08-29T06:00:00.000Z, dataSource='PartitionStatus', binaryVersion='9'}}io.druid.segment.loading.SegmentLoadingException: Exception loading segment[PartitionStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:26.133Z]at io.druid.server.coordination.ZkCoordinator.loadSegment(ZkCoordinator.java:309) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ZkCoordinator.addSegment(ZkCoordinator.java:350) [druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.SegmentChangeRequestLoad.go(SegmentChangeRequestLoad.java:44) [druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ZkCoordinator$1.childEvent(ZkCoordinator.java:152) [druid-server-0.9.1.1.jar:0.9.1.1]at org.apache.curator.framework.recipes.cache.PathChildrenCache$5.apply(PathChildrenCache.java:522) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.recipes.cache.PathChildrenCache$5.apply(PathChildrenCache.java:516) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.listen.ListenerContainer$1.run(ListenerContainer.java:93) [curator-framework-2.10.0.jar:?]at com.google.common.util.concurrent.MoreExecutors$SameThreadExecutorService.execute(MoreExecutors.java:297) [guava-16.0.1.jar:?]at org.apache.curator.framework.listen.ListenerContainer.forEach(ListenerContainer.java:85) [curator-framework-2.10.0.jar:?]at org.apache.curator.framework.recipes.cache.PathChildrenCache.callListeners(PathChildrenCache.java:514) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.recipes.cache.EventOperation.invoke(EventOperation.java:35) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.recipes.cache.PathChildrenCache$9.run(PathChildrenCache.java:772) [curator-recipes-2.10.0.jar:?]at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_91]at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_91]at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_91]at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_91]at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [?:1.8.0_91]at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [?:1.8.0_91]at java.lang.Thread.run(Thread.java:745) [?:1.8.0_91]Caused by: java.lang.IllegalArgumentException: Instantiation of [simple type, class io.druid.segment.loading.LocalLoadSpec] value failed: [/data/imply/druid/segments/PartitionStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:26.133Z/0/index.zip] does not existat com.fasterxml.jackson.databind.ObjectMapper._convert(ObjectMapper.java:2774) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.ObjectMapper.convertValue(ObjectMapper.java:2700) ~[jackson-databind-2.4.6.jar:2.4.6]at io.druid.segment.loading.SegmentLoaderLocalCacheManager.getSegmentFiles(SegmentLoaderLocalCacheManager.java:142) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.segment.loading.SegmentLoaderLocalCacheManager.getSegment(SegmentLoaderLocalCacheManager.java:95) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ServerManager.loadSegment(ServerManager.java:152) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ZkCoordinator.loadSegment(ZkCoordinator.java:305) ~[druid-server-0.9.1.1.jar:0.9.1.1]... 18 moreCaused by: com.fasterxml.jackson.databind.JsonMappingException: Instantiation of [simple type, class io.druid.segment.loading.LocalLoadSpec] value failed: [/data/imply/druid/segments/PartitionStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:26.133Z/0/index.zip] does not existat com.fasterxml.jackson.databind.deser.std.StdValueInstantiator.wrapException(StdValueInstantiator.java:405) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.std.StdValueInstantiator.createFromObjectWith(StdValueInstantiator.java:234) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.impl.PropertyBasedCreator.build(PropertyBasedCreator.java:167) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.BeanDeserializer._deserializeUsingPropertyBased(BeanDeserializer.java:398) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.BeanDeserializerBase.deserializeFromObjectUsingNonDefault(BeanDeserializerBase.java:1064) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.BeanDeserializer.deserializeFromObject(BeanDeserializer.java:264) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.BeanDeserializer._deserializeOther(BeanDeserializer.java:156) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.BeanDeserializer.deserialize(BeanDeserializer.java:126) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.jsontype.impl.AsPropertyTypeDeserializer._deserializeTypedForId(AsPropertyTypeDeserializer.java:113) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.jsontype.impl.AsPropertyTypeDeserializer.deserializeTypedFromObject(AsPropertyTypeDeserializer.java:84) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.AbstractDeserializer.deserializeWithType(AbstractDeserializer.java:132) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.impl.TypeWrappedDeserializer.deserialize(TypeWrappedDeserializer.java:41) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.ObjectMapper._convert(ObjectMapper.java:2769) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.ObjectMapper.convertValue(ObjectMapper.java:2700) ~[jackson-databind-2.4.6.jar:2.4.6]at io.druid.segment.loading.SegmentLoaderLocalCacheManager.getSegmentFiles(SegmentLoaderLocalCacheManager.java:142) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.segment.loading.SegmentLoaderLocalCacheManager.getSegment(SegmentLoaderLocalCacheManager.java:95) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ServerManager.loadSegment(ServerManager.java:152) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ZkCoordinator.loadSegment(ZkCoordinator.java:305) ~[druid-server-0.9.1.1.jar:0.9.1.1]... 18 moreCaused by: java.lang.IllegalArgumentException: [/data/imply/druid/segments/PartitionStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:26.133Z/0/index.zip] does not existat com.google.common.base.Preconditions.checkArgument(Preconditions.java:148) ~[guava-16.0.1.jar:?]at io.druid.segment.loading.LocalLoadSpec.<init>(LocalLoadSpec.java:51) ~[druid-server-0.9.1.1.jar:0.9.1.1]at sun.reflect.GeneratedConstructorAccessor36.newInstance(Unknown Source) ~[?:?]at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[?:1.8.0_91]at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[?:1.8.0_91]at com.fasterxml.jackson.databind.introspect.AnnotatedConstructor.call(AnnotatedConstructor.java:125) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.std.StdValueInstantiator.createFromObjectWith(StdValueInstantiator.java:230) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.impl.PropertyBasedCreator.build(PropertyBasedCreator.java:167) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.BeanDeserializer._deserializeUsingPropertyBased(BeanDeserializer.java:398) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.BeanDeserializerBase.deserializeFromObjectUsingNonDefault(BeanDeserializerBase.java:1064) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.BeanDeserializer.deserializeFromObject(BeanDeserializer.java:264) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.BeanDeserializer._deserializeOther(BeanDeserializer.java:156) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.BeanDeserializer.deserialize(BeanDeserializer.java:126) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.jsontype.impl.AsPropertyTypeDeserializer._deserializeTypedForId(AsPropertyTypeDeserializer.java:113) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.jsontype.impl.AsPropertyTypeDeserializer.deserializeTypedFromObject(AsPropertyTypeDeserializer.java:84) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.AbstractDeserializer.deserializeWithType(AbstractDeserializer.java:132) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.deser.impl.TypeWrappedDeserializer.deserialize(TypeWrappedDeserializer.java:41) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.ObjectMapper._convert(ObjectMapper.java:2769) ~[jackson-databind-2.4.6.jar:2.4.6]at com.fasterxml.jackson.databind.ObjectMapper.convertValue(ObjectMapper.java:2700) ~[jackson-databind-2.4.6.jar:2.4.6]at io.druid.segment.loading.SegmentLoaderLocalCacheManager.getSegmentFiles(SegmentLoaderLocalCacheManager.java:142) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.segment.loading.SegmentLoaderLocalCacheManager.getSegment(SegmentLoaderLocalCacheManager.java:95) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ServerManager.loadSegment(ServerManager.java:152) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ZkCoordinator.loadSegment(ZkCoordinator.java:305) ~[druid-server-0.9.1.1.jar:0.9.1.1]... 18 morey":"component-failure","description":"Failed to load segment for dataSource","data":{"class":"io.druid.server.coordination.ZkCoordinator","exceptionType":"io.druid.segment.loading.SegmentLoadingException","exceptionMessage":"Exception loading segment[PartitionStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:26.133Z]","exceptionStackTrace":"io.druid.segment.loading.SegmentLoadingException: Exception loading segment[PartitionStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:26.133Z]\n\tat io.druid.server.coordination.ZkCoordinator.loadSegment(ZkCoordinator.java:309)\n\tat io.druid.server.coordination.ZkCoordinator.addSegment(ZkCoordinator.java:350)\n\tat io.druid.server.coordination.SegmentChangeRequestLoad.go(SegmentChangeRequestLoad.java:44)\n\tat io.druid.server.coordination.ZkCoordinator$1.childEvent(ZkCoordinator.java:152)\n\tat org.apache.curator.framework.recipes.cache.PathChildrenCache$5.apply(PathChildrenCache.java:522)\n\tat org.apache.curator.framework.recipes.cache.PathChildrenCache$5.apply(PathChildrenCache.java:516)\n\tat org.apache.curator.framework.listen.ListenerContainer$1.run(ListenerContainer.java:93)\n\tat com.google.common.util.concurrent.MoreExecutors$SameThreadExecutorService.execute(MoreExecutors.java:297)\n\tat org.apache.curator.framework.listen.ListenerContainer.forEach(ListenerContainer.java:85)\n\tat org.apache.curator.framework.recipes.cache.PathChildrenCache.callListeners(PathChildrenCache.java:514)\n\tat org.apache.curator.framework.recipes.cache.EventOperation.invoke(EventOperation.java:35)\n\tat org.apache.curator.framework.recipes.cache.PathChildrenCache$9.run(PathChildrenCache.java:772)\n\tat java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)\n\tat java.util.concurrent.FutureTask.run(FutureTask.java:266)\n\tat java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)\n\tat java.util.concurrent.FutureTask.run(FutureTask.java:266)\n\tat java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)\n\tat java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)\n\tat java.lang.Thread.run(Thread.java:745)\nCaused by: java.lang.IllegalArgumentException: Instantiation of [simple type, class io.druid.segment.loading.LocalLoadSpec] value failed: [/data/imply/druid/segments/PartitionStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:26.133Z/0/index.zip] does not exist\n\tat com.fasterxml.jackson.databind.ObjectMapper._convert(ObjectMapper.java:2774)\n\tat com.fasterxml.jackson.databind.ObjectMapper.convertValue(ObjectMapper.java:2700)\n\tat io.druid.segment.loading.SegmentLoaderLocalCacheManager.getSegmentFiles(SegmentLoaderLocalCacheManager.java:142)\n\tat io.druid.segment.loading.SegmentLoaderLocalCacheManager.getSegment(SegmentLoaderLocalCacheManager.java:95)\n\tat io.druid.server.coordination.ServerManager.loadSegment(ServerManager.java:152)\n\tat io.druid.server.coordination.ZkCoordinator.loadSegment(ZkCoordinator.java:305)\n\t... 18 more\nCaused by: com.fasterxml.jackson.databind.JsonMappingException: Instantiation of [simple type, class io.druid.segment.loading.LocalLoadSpec] value failed: [/data/imply/druid/segments/PartitionStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:26.133Z/0/index.zip] does not exist\n\tat com.fasterxml.jackson.databind.deser.std.StdValueInstantiator.wrapException(StdValueInstantiator.java:405)\n\tat com.fasterxml.jackson.databind.deser.std.StdValueInstantiator.createFromObjectWith(StdValueInstantiator.java:234)\n\tat com.fasterxml.jackson.databind.deser.impl.PropertyBasedCreator.build(PropertyBasedCreator.java:167)\n\tat com.fasterxml.jackson.databind.deser.BeanDeserializer._deserializeUsingPropertyBased(BeanDeserializer.java:398)\n\tat com.fasterxml.jackson.databind.deser.BeanDeserializerBase.deserializeFromObjectUsingNonDefault(BeanDeserializerBase.java:1064)\n\tat com.fasterxml.jackson.databind.deser.BeanDeserializer.deserializeFromObject(BeanDeserializer.java:264)\n\tat com.fasterxml.jackson.databind.deser.BeanDeserializer._deserializeOther(BeanDeserializer.java:156)\n\tat com.fasterxml.jackson.databind.deser.BeanDeserializer.deserialize(BeanDeserializer.java:126)\n\tat com.fasterxml.jackson.databind.jsontype.impl.AsPropertyTypeDeserializer._deserializeTypedForId(AsPropertyTypeDeserializer.java:113)\n\tat com.fasterxml.jackson.databind.jsontype.impl.AsPropertyTypeDeserializer.deserializeTypedFromObject(AsPropertyTypeDeserializer.java:84)\n\tat com.fasterxml.jackson.databind.deser.AbstractDeserializer.deserializeWithType(AbstractDeserializer.java:132)\n\tat com.fasterxml.jackson.databind.deser.impl.TypeWrappedDeserializer.deserialize(TypeWrappedDeserializer.java:41)\n\tat com.fasterxml.jackson.databind.ObjectMapper._convert(ObjectMapper.java:2769)\n\t... 23 more\nCaused by: java.lang.IllegalArgumentException: [/data/imply/druid/segments/PartitionStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:26.133Z/0/index.zip] does not exist\n\tat com.google.common.base.Preconditions.checkArgument(Preconditions.java:148)\n\tat io.druid.segment.loading.LocalLoadSpec.<init>(LocalLoadSpec.java:51)\n\tat sun.reflect.GeneratedConstructorAccessor36.newInstance(Unknown Source)\n\tat sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)\n\tat java.lang.reflect.Constructor.newInstance(Constructor.java:423)\n\tat com.fasterxml.jackson.databind.introspect.AnnotatedConstructor.call(AnnotatedConstructor.java:125)\n\tat com.fasterxml.jackson.databind.deser.std.StdValueInstantiator.createFromObjectWith(StdValueInstantiator.java:230)\n\t... 34 more\n","segment":{"dataSource":"PartitionStatus","interval":"2016-08-29T05:00:00.000Z/2016-08-29T06:00:00.000Z","version":"2016-08-29T05:25:26.133Z","loadSpec":{"type":"local","path":"/data/imply/druid/segments/PartitionStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:26.133Z/0/index.zip"},"dimensions":"id,ip","metrics":"count,total_sum,total_max,total_min,free_sum,free_max,free_min,used_sum,used_max,used_min,avail_sum,avail_max,avail_min,files_sum,files_max,files_min,freeFiles_sum,freeFiles_max,freeFiles_min,diskReads_sum,diskReads_max,diskReads_min,diskWrites_sum,diskWrites_max,diskWrites_min,diskReadBytes_sum,diskReadBytes_max,diskReadBytes_min,diskWriteBytes_sum,diskWriteBytes_max,diskWriteBytes_min,usedPercent_sum,usedPercent_max,usedPercent_min","shardSpec":{"type":"linear","partitionNum":0},"binaryVersion":9,"size":4817673,"identifier":"PartitionStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:26.133Z"}}}]2016-08-29T07:25:12,305 INFO [ZkCoordinator-0] io.druid.server.coordination.ZkCoordinator - zNode[/druid/loadQueue/10.1.7.7:8083/PartitionStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:26.133Z] was removed2016-08-29T07:25:12,324 INFO [ZkCoordinator-Exec--0] io.druid.server.coordination.ServerManager - Told to delete a queryable for a dataSource[PartitionStatus] that doesn't exist.2016-08-29T07:25:12,324 WARN [ZkCoordinator-Exec--0] io.druid.server.coordination.ZkCoordinator - Unable to delete segmentInfoCacheFile[/data/imply/druid/segment-cache/info_dir/PartitionStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:26.133Z]

and like this:

2016-08-29T07:23:12,299 ERROR [ZkCoordinator-0] io.druid.server.coordination.ZkCoordinator - Failed to load segment for dataSource: {class=io.druid.server.coordination.ZkCoordinator, exceptionType=class io.druid.segment.loading.SegmentLoadingException, exceptionMessage=Exception loading segment[GpuCoreStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:23.417Z], segment=DataSegment{size=22832772, shardSpec=LinearShardSpec{partitionNum=0}, metrics=[count, fbMemoryUsageUsed_sum, fbMemoryUsageUsed_max, fbMemoryUsageUsed_min, pciBridgeChipFanSpeed_sum, pciBridgeChipFanSpeed_max, pciBridgeChipFanSpeed_min, fbMemoryUsageTotal_sum, fbMemoryUsageTotal_max, fbMemoryUsageTotal_min, fbMemoryUsageFree_sum, fbMemoryUsageFree_max, fbMemoryUsageFree_min, temperatureGpuCurrentTemp_sum, temperatureGpuCurrentTemp_max, temperatureGpuCurrentTemp_min], dimensions=[id, bar1MemoryUsageComputeMode, pciBus, driverModelVbiosVersion, driverModelMinorNumber, driverModelGpuUuid, pciDeviceId, pciDevice, pciBusId, productName, productBrand, persistenceMode, ip, pciBridgeChipPerformanceState, pciSubSystemId, pciDomain], version='2016-08-29T05:25:23.417Z', loadSpec={type=local, path=/data/imply/druid/segments/GpuCoreStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:23.417Z/0/index.zip}, interval=2016-08-29T05:00:00.000Z/2016-08-29T06:00:00.000Z, dataSource='GpuCoreStatus', binaryVersion='9'}}io.druid.segment.loading.SegmentLoadingException: Exception loading segment[GpuCoreStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:23.417Z]at io.druid.server.coordination.ZkCoordinator.loadSegment(ZkCoordinator.java:309) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ZkCoordinator.addSegment(ZkCoordinator.java:350) [druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.SegmentChangeRequestLoad.go(SegmentChangeRequestLoad.java:44) [druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ZkCoordinator$1.childEvent(ZkCoordinator.java:152) [druid-server-0.9.1.1.jar:0.9.1.1]at org.apache.curator.framework.recipes.cache.PathChildrenCache$5.apply(PathChildrenCache.java:522) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.recipes.cache.PathChildrenCache$5.apply(PathChildrenCache.java:516) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.listen.ListenerContainer$1.run(ListenerContainer.java:93) [curator-framework-2.10.0.jar:?]at com.google.common.util.concurrent.MoreExecutors$SameThreadExecutorService.execute(MoreExecutors.java:297) [guava-16.0.1.jar:?]at org.apache.curator.framework.listen.ListenerContainer.forEach(ListenerContainer.java:85) [curator-framework-2.10.0.jar:?]at org.apache.curator.framework.recipes.cache.PathChildrenCache.callListeners(PathChildrenCache.java:514) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.recipes.cache.EventOperation.invoke(EventOperation.java:35) [curator-recipes-2.10.0.jar:?]at org.apache.curator.framework.recipes.cache.PathChildrenCache$9.run(PathChildrenCache.java:772) [curator-recipes-2.10.0.jar:?]at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_91]at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_91]at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_91]at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_91]at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [?:1.8.0_91]at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [?:1.8.0_91]at java.lang.Thread.run(Thread.java:745) [?:1.8.0_91]Caused by: io.druid.segment.loading.SegmentLoadingException: /data/imply/druid/segment-cache/GpuCoreStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:23.417Z/0/index.drd (No such file or directory)at io.druid.segment.loading.MMappedQueryableIndexFactory.factorize(MMappedQueryableIndexFactory.java:52) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.segment.loading.SegmentLoaderLocalCacheManager.getSegment(SegmentLoaderLocalCacheManager.java:96) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ServerManager.loadSegment(ServerManager.java:152) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ZkCoordinator.loadSegment(ZkCoordinator.java:305) ~[druid-server-0.9.1.1.jar:0.9.1.1]... 18 moreCaused by: java.io.FileNotFoundException: /data/imply/druid/segment-cache/GpuCoreStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:23.417Z/0/index.drd (No such file or directory)at java.io.FileInputStream.open0(Native Method) ~[?:1.8.0_91]at java.io.FileInputStream.open(FileInputStream.java:195) ~[?:1.8.0_91]at java.io.FileInputStream.<init>(FileInputStream.java:138) ~[?:1.8.0_91]at io.druid.segment.SegmentUtils.getVersionFromDir(SegmentUtils.java:43) ~[druid-api-0.9.1.1.jar:0.9.1.1]at io.druid.segment.IndexIO.loadIndex(IndexIO.java:211) ~[druid-processing-0.9.1.1.jar:0.9.1.1]at io.druid.segment.loading.MMappedQueryableIndexFactory.factorize(MMappedQueryableIndexFactory.java:49) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.segment.loading.SegmentLoaderLocalCacheManager.getSegment(SegmentLoaderLocalCacheManager.java:96) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ServerManager.loadSegment(ServerManager.java:152) ~[druid-server-0.9.1.1.jar:0.9.1.1]at io.druid.server.coordination.ZkCoordinator.loadSegment(ZkCoordinator.java:305) ~[druid-server-0.9.1.1.jar:0.9.1.1]... 18 more2016-08-29T07:23:12,299 INFO [ZkCoordinator-0] com.metamx.emitter.core.LoggingEmitter - Event [{"feed":"alerts","timestamp":"2016-08-29T07:23:12.299Z","service":"druid/historical","host":"10.1.7.7:8083","severity":"component-failure","description":"Failed to load segment for dataSource","data":{"class":"io.druid.server.coordination.ZkCoordinator","exceptionType":"io.druid.segment.loading.SegmentLoadingException","exceptionMessage":"Exception loading segment[GpuCoreStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:23.417Z]","exceptionStackTrace":"io.druid.segment.loading.SegmentLoadingException: Exception loading segment[GpuCoreStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:23.417Z]\n\tat io.druid.server.coordination.ZkCoordinator.loadSegment(ZkCoordinator.java:309)\n\tat io.druid.server.coordination.ZkCoordinator.addSegment(ZkCoordinator.java:350)\n\tat io.druid.server.coordination.SegmentChangeRequestLoad.go(SegmentChangeRequestLoad.java:44)\n\tat io.druid.server.coordination.ZkCoordinator$1.childEvent(ZkCoordinator.java:152)\n\tat org.apache.curator.framework.recipes.cache.PathChildrenCache$5.apply(PathChildrenCache.java:522)\n\tat org.apache.curator.framework.recipes.cache.PathChildrenCache$5.apply(PathChildrenCache.java:516)\n\tat org.apache.curator.framework.listen.ListenerContainer$1.run(ListenerContainer.java:93)\n\tat com.google.common.util.concurrent.MoreExecutors$SameThreadExecutorService.execute(MoreExecutors.java:297)\n\tat org.apache.curator.framework.listen.ListenerContainer.forEach(ListenerContainer.java:85)\n\tat org.apache.curator.framework.recipes.cache.PathChildrenCache.callListeners(PathChildrenCache.java:514)\n\tat org.apache.curator.framework.recipes.cache.EventOperation.invoke(EventOperation.java:35)\n\tat org.apache.curator.framework.recipes.cache.PathChildrenCache$9.run(PathChildrenCache.java:772)\n\tat java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)\n\tat java.util.concurrent.FutureTask.run(FutureTask.java:266)\n\tat java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)\n\tat java.util.concurrent.FutureTask.run(FutureTask.java:266)\n\tat java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)\n\tat java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)\n\tat java.lang.Thread.run(Thread.java:745)\nCaused by: io.druid.segment.loading.SegmentLoadingException: /data/imply/druid/segment-cache/GpuCoreStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:23.417Z/0/index.drd (No such file or directory)\n\tat io.druid.segment.loading.MMappedQueryableIndexFactory.factorize(MMappedQueryableIndexFactory.java:52)\n\tat io.druid.segment.loading.SegmentLoaderLocalCacheManager.getSegment(SegmentLoaderLocalCacheManager.java:96)\n\tat io.druid.server.coordination.ServerManager.loadSegment(ServerManager.java:152)\n\tat io.druid.server.coordination.ZkCoordinator.loadSegment(ZkCoordinator.java:305)\n\t... 18 more\nCaused by: java.io.FileNotFoundException: /data/imply/druid/segment-cache/GpuCoreStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:23.417Z/0/index.drd (No such file or directory)\n\tat java.io.FileInputStream.open0(Native Method)\n\tat java.io.FileInputStream.open(FileInputStream.java:195)\n\tat java.io.FileInputStream.<init>(FileInputStream.java:138)\n\tat io.druid.segment.SegmentUtils.getVersionFromDir(SegmentUtils.java:43)\n\tat io.druid.segment.IndexIO.loadIndex(IndexIO.java:211)\n\tat io.druid.segment.loading.MMappedQueryableIndexFactory.factorize(MMappedQueryableIndexFactory.java:49)\n\t... 21 more\n","segment":{"dataSource":"GpuCoreStatus","interval":"2016-08-29T05:00:00.000Z/2016-08-29T06:00:00.000Z","version":"2016-08-29T05:25:23.417Z","loadSpec":{"type":"local","path":"/data/imply/druid/segments/GpuCoreStatus/2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z/2016-08-29T05:25:23.417Z/0/index.zip"},"dimensions":"id,bar1MemoryUsageComputeMode,pciBus,driverModelVbiosVersion,driverModelMinorNumber,driverModelGpuUuid,pciDeviceId,pciDevice,pciBusId,productName,productBrand,persistenceMode,ip,pciBridgeChipPerformanceState,pciSubSystemId,pciDomain","metrics":"count,fbMemoryUsageUsed_sum,fbMemoryUsageUsed_max,fbMemoryUsageUsed_min,pciBridgeChipFanSpeed_sum,pciBridgeChipFanSpeed_max,pciBridgeChipFanSpeed_min,fbMemoryUsageTotal_sum,fbMemoryUsageTotal_max,fbMemoryUsageTotal_min,fbMemoryUsageFree_sum,fbMemoryUsageFree_max,fbMemoryUsageFree_min,temperatureGpuCurrentTemp_sum,temperatureGpuCurrentTemp_max,temperatureGpuCurrentTemp_min","shardSpec":{"type":"linear","partitionNum":0},"binaryVersion":9,"size":22832772,"identifier":"GpuCoreStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:23.417Z"}}}]2016-08-29T07:23:12,299 INFO [ZkCoordinator-0] io.druid.server.coordination.ZkCoordinator - zNode[/druid/loadQueue/10.1.7.7:8083/GpuCoreStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:23.417Z] was removed2016-08-29T07:23:12,301 INFO [ZkCoordinator-Exec--0] io.druid.server.coordination.ServerManager - Told to delete a queryable for a dataSource[GpuCoreStatus] that doesn't exist.2016-08-29T07:23:12,301 WARN [ZkCoordinator-Exec--0] io.druid.server.coordination.ZkCoordinator - Unable to delete segmentInfoCacheFile[/data/imply/druid/segment-cache/info_dir/GpuCoreStatus_2016-08-29T05:00:00.000Z_2016-08-29T06:00:00.000Z_2016-08-29T05:25:23.417Z]

do not have any other errors or exceptions in host2 and host3

--

You received this message because you are subscribed to a topic in the Google Groups "Druid User" group.

To unsubscribe from this topic, visit https://groups.google.com/d/topic/druid-user/HaUnctF2mZM/unsubscribe.

To unsubscribe from this group and all its topics, send an email to druid-user+unsubscribe@googlegroups.com.

Fangjin Yang

Aug 29, 2016, 7:28:16 PM8/29/16

to Druid User

Hi Leon, you seem to have indexed a lot of data for a local setup, and then switched to a distributed setup. This won't work.

Check out https://imply.io/docs/latest/cluster for more info on how to set up a distributed cluster.

LiangQing

Aug 29, 2016, 10:30:36 PM8/29/16

to druid...@googlegroups.com

Hi Fangjin,

Thanks for your quick response!

What do you meaning local setup? do i must enable s3 or HDFS extension? we don't have s3 or HDFS environment, we only want store they in data node's local storage, is there any way to support these?

We setup the cluster only once, the data are indexed once, do not have a 'switching' action.

To view this discussion on the web visit https://groups.google.com/d/msgid/druid-user/a94d0061-b4c1-42d7-b979-714d9ef2661f%40googlegroups.com.

Gian Merlino

Aug 29, 2016, 10:50:00 PM8/29/16

to druid...@googlegroups.com

If you use multiple machines, Druid nodes need a shared deep storage like S3, HDFS, or NFS. Local filesystem won't work unless it's a shared network mount.

Gian

--

You received this message because you are subscribed to the Google Groups "Druid User" group.

To unsubscribe from this group and stop receiving emails from it, send an email to druid-user+unsubscribe@googlegroups.com.

To post to this group, send email to druid...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/druid-user/CAJ2h%2Bmhj0GExAtdpgC3LoNydsa6EsNocht56y0__pGxwef1qUw%40mail.gmail.com.

LiangQing

Aug 29, 2016, 11:12:04 PM8/29/16

to druid...@googlegroups.com

OK, got it, thanks Gian

To view this discussion on the web visit https://groups.google.com/d/msgid/druid-user/CACZNdYDQ8DVhwmHZ-u-6t-4SpQe4dmrZCQC85uqoLUXf0Q1tDA%40mail.gmail.com.

Reply all

Reply to author

Forward

0 new messages