Fw: Help required setting up CDAP in distributed mode using HDP

Nilkanth Patel

I am trying to set up CDAP in distributed mode with HDP 2.2.4.2. The steps I have followed are:

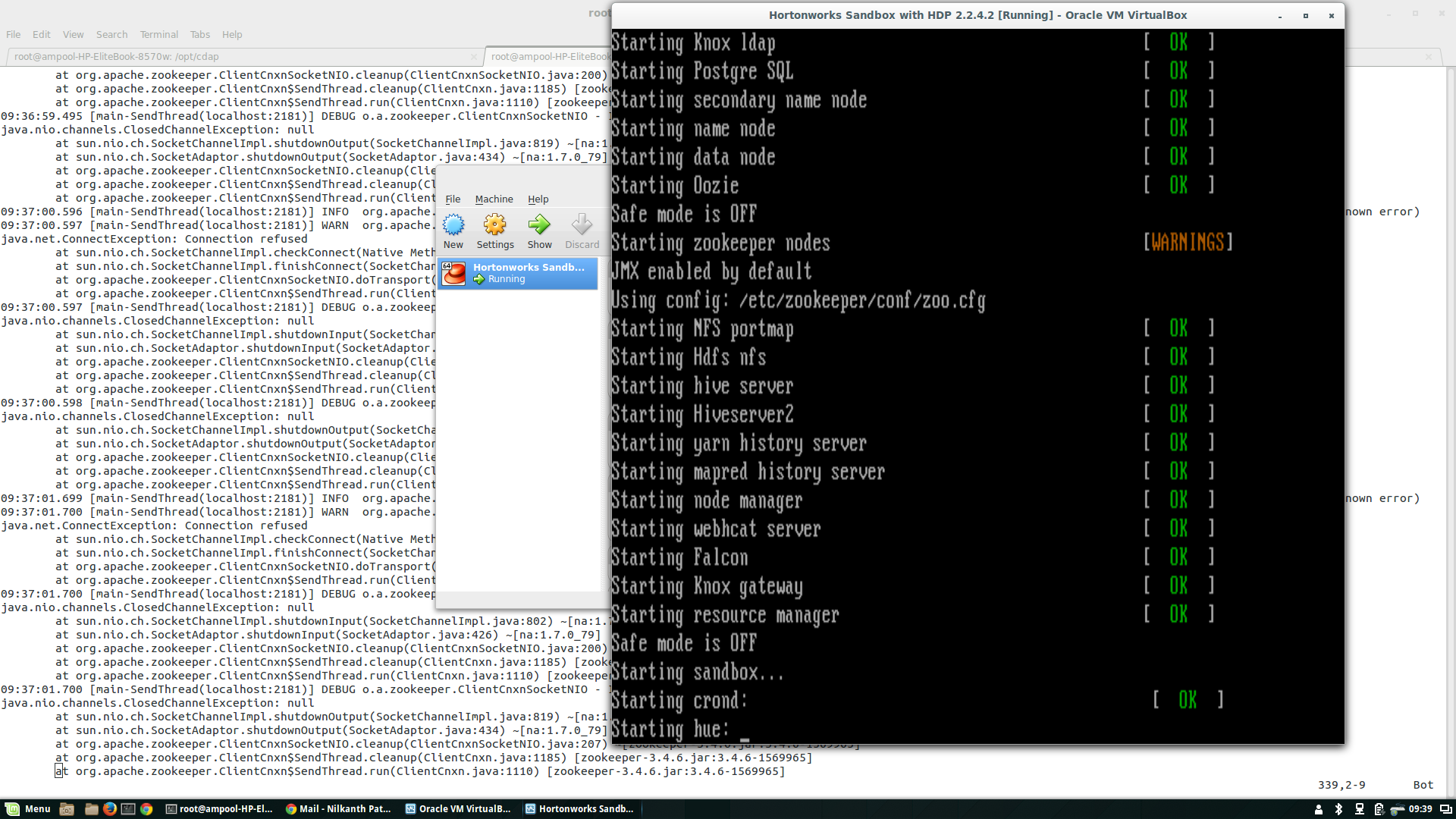

1. Installed Sandbox_HDP_2.2.4.2_VirtualBox.ova into VirtualBox. Setup completed successfully. The IP address of this machine is 192.168.1.110.

2. Followed http://docs.cask.co/cdap/current/en/admin-manual/installation/installation.html and installed CDAP locally.

The documentation says that, for Hortonworks Data Platform, some settings need to be configured in cdap-env.sh, but I could not find cdap-env.sh in my setup.
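One way to confirm that no file named cdap-env.sh exists is to search the directories mentioned in this post. The following is only a minimal sketch: the helper name `find_cdap_env` is hypothetical, and the search roots in the usage note (/etc/cdap and /opt/cdap) are assumptions based on the paths that appear elsewhere in this message.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print every file named cdap-env.sh under the given roots.
find_cdap_env() {
  local root
  for root in "$@"; do
    # Skip roots that do not exist so find does not report errors for them.
    [ -d "$root" ] || continue
    find "$root" -type f -name 'cdap-env.sh' 2>/dev/null
  done
}
```

For example, `find_cdap_env /etc/cdap /opt/cdap` prints nothing if the file is absent from both trees.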

My /etc/cdap/conf/cdap-site.xml is as follows:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

~ Copyright © 2015 Cask Data, Inc.

~

~ Licensed under the Apache License, Version 2.0 (the "License"); you may not

~ use this file except in compliance with the License. You may obtain a copy of

~ the License at

~

~ http://www.apache.org/licenses/LICENSE-2.0

~

~ Unless required by applicable law or agreed to in writing, software

~ distributed under the License is distributed on an "AS IS" BASIS, WITHOUT

~ WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the

~ License for the specific language governing permissions and limitations under

~ the License.

-->

<configuration>

<property>

<name>root.namespace</name>

<value>cdap</value>

<description>Specifies the root namespace</description>

</property>

<!-- Substitute the zookeeper quorum for components here -->

<property>

<name>zookeeper.quorum</name>

<value>192.168.1.110:2181/${root.namespace}</value>

<description>Specifies the zookeeper host:port</description>

</property>

<property>

<name>hdfs.namespace</name>

<value>/${root.namespace}</value>

<description>Namespace for HDFS files</description>

</property>

<property>

<name>hdfs.user</name>

<value>yarn</value>

<description>User name for accessing HDFS</description>

</property>

<!--

Router configuration

-->

<!-- Substitue the IP to which Router service should bind to and listen on -->

<property>

<name>router.bind.address</name>

<value>localhost</value>

<description>Specifies the inet address on which the Router service will listen</description>

</property>

<!--

App Fabric configuration

-->

<!-- Substitute the IP to which App-Fabric service should bind to and listen on -->

<property>

<name>app.bind.address</name>

<value>localhost</value>

<description>Specifies the inet address on which the app fabric service will listen</description>

</property>

<!--

Data Fabric configuration

-->

<!-- Substitute the IP to which Data-Fabric tx service should bind to and listen on -->

<property>

<name>data.tx.bind.address</name>

<value>localhost</value>

<description>Specifies the inet address on which the transaction service will listen</description>

</property>

<!--

Kafka Configuration

-->

<property>

<name>kafka.log.dir</name>

<value>/opt/cdap/kafka/logs</value>

<description>Directory to store Kafka logs</description>

</property>

<!-- Must be <= the number of kafka.seed.brokers configured above. For HA this should be at least 2. -->

<property>

<name>kafka.default.replication.factor</name>

<value>1</value>

<description>Kafka replication factor</description>

</property>

<!--

Watchdog Configuration

-->

<!-- Substitute the IP to which metrics-query service should bind to and listen on -->

<property>

<name>metrics.query.bind.address</name>

<value>localhost</value>

<description>Specifies the inet address on which the metrics-query service will listen</description>

</property>

<!--

Web-App Configuration

-->

<property>

<name>dashboard.bind.port</name>

<value>9999</value>

<description>Specifies the port on which dashboard listens</description>

</property>

<!-- Substitute the IP of the Router service to which the UI should connect -->

<property>

<name>router.server.address</name>

<value>localhost</value>

<description>Specifies the destination IP where Router service is running</description>

</property>

<property>

<name>router.server.port</name>

<value>10000</value>

<description>Specifies the destination Port where Router service is listening</description>

</property>

<property>

<name>app.program.jvm.opts</name>

<value>-XX:MaxPermSize=128M ${twill.jvm.gc.opts} -Dhdp.version=2.2.4.2</value>

<description>Java options for all program containers</description>

</property>

</configuration>

I am not sure what the various bind addresses should be in my case. Kindly confirm.
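To review the address settings in one place, the name/value pairs can be pulled out of the file. The sketch below is an illustration only: the function name `list_bind_addresses` is hypothetical, it assumes each `<name>` and `<value>` element sits on its own line as in the file above, and it does not decide which values are right for a given network layout.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "name=value" for every property whose name ends in
# bind.address or server.address. Assumes one <name>/<value> element per line.
list_bind_addresses() {
  local site_xml="${1:-/etc/cdap/conf/cdap-site.xml}"
  awk '
    /<name>/ {
      name = $0
      sub(/.*<name>/, "", name); sub(/<\/name>.*/, "", name)
    }
    /<value>/ && (name ~ /(bind|server)\.address$/) {
      value = $0
      sub(/.*<value>/, "", value); sub(/<\/value>.*/, "", value)
      print name "=" value
      name = ""   # do not reuse this name for a later <value> line
    }
  ' "$site_xml"
}
```

Run against the file above, this would list router.bind.address, app.bind.address, data.tx.bind.address, metrics.query.bind.address and router.server.address, all currently set to localhost.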

3. Starting services

root@ampool-HP-EliteBook-8570w:/opt/cdap# for i in `ls /etc/init.d/ | grep cdap` ; do sudo service $i start ; done

Wed Aug 19 09:26:48 IST 2015 Starting Java auth-server service on ampool-HP-EliteBook-8570w

Wed Aug 19 09:26:49 IST 2015 Starting Java kafka-server service on ampool-HP-EliteBook-8570w

Wed Aug 19 09:26:50 IST 2015 Starting Java master service on ampool-HP-EliteBook-8570w

Wed Aug 19 09:26:50 IST 2015 Starting Java router service on ampool-HP-EliteBook-8570w

Wed Aug 19 09:26:50 IST 2015 Starting ui service on ampool-HP-EliteBook-8570w

4. Status of cdap-services

root@ampool-HP-EliteBook-8570w:/opt/cdap# for i in `ls /etc/init.d/ | grep cdap` ; do sudo service $i status ; done

checking status

* auth-server is running

checking status

* kafka-server is running

checking status

* master is running

checking status

* router is running

checking status

* ui is running
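The start and status loops above parse the output of `ls`. A glob does the same job without that parsing step. This is only a sketch; the function name `cdap_services` is hypothetical, and it assumes the init scripts all have "cdap" in their file names, as the `grep cdap` above implies.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list init scripts whose name contains "cdap".
cdap_services() {
  local init_dir="${1:-/etc/init.d}" script
  for script in "$init_dir"/*cdap*; do
    # When the glob matches nothing it stays literal; skip that case.
    [ -e "$script" ] || continue
    basename "$script"
  done
}
```

It could then be used as `for s in $(cdap_services); do sudo service "$s" status; done`.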

5. auth-server-cdap-ampool-HP-EliteBook-8570w.log has the following exception:

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [ch.qos.logback.classic.util.ContextSelectorStaticBinder]

09:26:49.535 [main] DEBUG org.apache.hadoop.util.Shell - Failed to detect a valid hadoop home directory

java.io.IOException: HADOOP_HOME or hadoop.home.dir are not set.

at org.apache.hadoop.util.Shell.checkHadoopHome(Shell.java:225) [hadoop-common-2.2.0.jar:na]

at org.apache.hadoop.util.Shell.<clinit>(Shell.java:250) [hadoop-common-2.2.0.jar:na]

at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:76) [hadoop-common-2.2.0.jar:na]

at org.apache.hadoop.yarn.conf.YarnConfiguration.<clinit>(YarnConfiguration.java:345) [hadoop-yarn-api-2.2.0.jar:na]

at co.cask.cdap.common.guice.ConfigModule.configure(ConfigModule.java:64) [co.cask.cdap.cdap-common-3.1.1.jar:na]

at com.google.inject.AbstractModule.configure(AbstractModule.java:59) [com.google.inject.guice-3.0.jar:na]

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:223) [com.google.inject.guice-3.0.jar:na]

at com.google.inject.spi.Elements.getElements(Elements.java:101) [com.google.inject.guice-3.0.jar:na]

at com.google.inject.internal.InjectorShell$Builder.build(InjectorShell.java:133) [com.google.inject.guice-3.0.jar:na]

at com.google.inject.internal.InternalInjectorCreator.build(InternalInjectorCreator.java:103) [com.google.inject.guice-3.0.jar:na]

at com.google.inject.Guice.createInjector(Guice.java:95) [com.google.inject.guice-3.0.jar:na]

at com.google.inject.Guice.createInjector(Guice.java:72) [com.google.inject.guice-3.0.jar:na]

at com.google.inject.Guice.createInjector(Guice.java:62) [com.google.inject.guice-3.0.jar:na]

at co.cask.cdap.security.runtime.AuthenticationServerMain.init(AuthenticationServerMain.java:50) [co.cask.cdap.cdap-security-3.1.1.jar:na]

at co.cask.cdap.common.runtime.DaemonMain.doMain(DaemonMain.java:36) [co.cask.cdap.cdap-common-3.1.1.jar:na]

at co.cask.cdap.security.runtime.AuthenticationServerMain.main(AuthenticationServerMain.java:97) [co.cask.cdap.cdap-security-3.1.1.jar:na]

09:26:49.545 [main] DEBUG org.apache.hadoop.util.Shell - setsid exited with exit code 0
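The trace above is logged at DEBUG level and complains that HADOOP_HOME is not set, so a quick look at the environment of the user that starts the services may help narrow things down. A minimal sketch follows; the function name `check_hadoop_home` is hypothetical, and the test for an executable `bin/hadoop` is an assumption about how a Hadoop home is usually laid out, not something stated in the CDAP documentation.

```bash
#!/usr/bin/env bash
# Hypothetical check: report whether a Hadoop home is set and looks plausible.
check_hadoop_home() {
  # Use the first argument if given, otherwise the HADOOP_HOME variable.
  local home="${1:-${HADOOP_HOME:-}}"
  if [ -z "$home" ]; then
    echo "HADOOP_HOME is not set"
    return 1
  fi
  if [ ! -x "$home/bin/hadoop" ]; then
    echo "no executable bin/hadoop under $home"
    return 1
  fi
  echo "HADOOP_HOME looks usable: $home"
}
```

Calling `check_hadoop_home` with no argument inspects the current environment; passing a directory checks that directory instead.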

Contents of /opt/cdap/security/bin/common-env.sh (my changes are marked in bold red colour):

#!/usr/bin/env bash

#

# Copyright © 2014 Cask Data, Inc.

#

# Licensed under the Apache License, Version 2.0 (the "License"); you may not

# use this file except in compliance with the License. You may obtain a copy of

# the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS, WITHOUT

# WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the

# License for the specific language governing permissions and limitations under

# the License.

# Set environment variables here.

# The java implementation to use. Java 1.6 required.

export JAVA_HOME=/opt/cdap/jdk1.7.0_79

# The maximum amount of heap to use, in MB. Default is 1000.

# export HEAPSIZE=1000

# Extra Java runtime options.

# Below are what we set by default. May only work with SUN JVM.

# For more on why as well as other possible settings,

# see http://wiki.apache.org/hadoop/PerformanceTuning

export OPTS="-XX:+UseConcMarkSweepGC"

# Uncomment below to enable java garbage collection logging in the .out file.

# export GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps"

# Uncomment below (along with above GC logging) to put GC information in its own logfile

# export USE_GC_LOGFILE=true

# Where log files are stored. $CDAP_HOME/logs by default.

export LOG_DIR=/var/log/cdap

# A string representing this instance of hbase. $USER by default.

export IDENT_STRING=$USER

# The scheduling priority for daemon processes. See 'man nice'.

# export NICENESS=10

# The directory where pid files are stored. /tmp by default.

export PID_DIR=/var/cdap/run

# The directory serving as the user directory for master

export LOCAL_DIR=/var/tmp/cdap

# Specifies the JAVA_HEAPMAX

export JAVA_HEAPMAX=${JAVA_HEAPMAX:--Xmx128m}

# The options below can be set in the sourced component-specific conf/[component]-env.sh scripts

# Main class to be invoked.

#MAIN_CLASS=

# Arguments for main class.

#MAIN_CLASS_ARGS=""

# Adds Hadoop and HBase libs to the classpath on startup.

# If the "hbase" command is on the PATH, this will be done automatically.

# Or uncomment the line below to point to the HBase installation directly.

HBASE_HOME=/opt/cdap/hbase-0.98.13-hadoop2

# Extra CLASSPATH

# EXTRA_CLASSPATH=""

6. kafka-server seems to have started properly; no exception was found in the log. Part of kafka-server-cdap-ampool-HP-EliteBook-8570w.log:

2015-08-19 09:44:09,892 INFO [EmbeddedKafkaServer STARTING] controller.KafkaController: [Controller 2130706433]: Controller startup complete

2015-08-19 09:44:09,910 INFO [EmbeddedKafkaServer STARTING] server.KafkaServer: [Kafka Server 2130706433], Started

09:44:09.910 [main] INFO c.c.cdap.kafka.run.KafkaServerMain - Embedded kafka server started successfully.

2015-08-19 09:44:09,912 INFO [ZkClient-EventThread-22-192.168.1.110:2181/cdap/kafka] server.ZookeeperLeaderElector$LeaderChangeListener: New leader is 2130706433


Changes to /opt/cdap/kafka/bin/common-env.sh: added the following configuration.

export JAVA_HOME=/opt/cdap/jdk1.7.0_79

7. Some of the exceptions found in master-cdap-ampool-HP-EliteBook-8570w.log:

09:44:09.107 [main] DEBUG org.apache.hadoop.util.Shell - Failed to detect a valid hadoop home directory

java.io.IOException: HADOOP_HOME or hadoop.home.dir are not set.

at org.apache.hadoop.util.Shell.checkHadoopHome(Shell.java:225) [hadoop-common-2.2.0.jar:na]

at org.apache.hadoop.util.Shell.<clinit>(Shell.java:250) [hadoop-common-2.2.0.jar:na]

at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:76) [hadoop-common-2.2.0.jar:na]

at org.apache.hadoop.yarn.conf.YarnConfiguration.<clinit>(YarnConfiguration.java:345) [hadoop-yarn-api-2.2.0.jar:na]

at co.cask.cdap.common.guice.ConfigModule.configure(ConfigModule.java:64) [co.cask.cdap.cdap-common-3.1.1.jar:na]

at com.google.inject.AbstractModule.configure(AbstractModule.java:59) [com.google.inject.guice-3.0.jar:na]

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:223) [com.google.inject.guice-3.0.jar:na]

at com.google.inject.spi.Elements.getElements(Elements.java:101) [com.google.inject.guice-3.0.jar:na]

at com.google.inject.internal.InjectorShell$Builder.build(InjectorShell.java:133) [com.google.inject.guice-3.0.jar:na]

09:44:10.753 [main] INFO c.c.c.d.d.InMemoryDatasetFramework - Adding Default module basicKVTable to system namespace

09:44:10.768 [main] INFO c.c.c.d.r.m.TokenSecureStoreUpdater - Setting token renewal time to: 86100000 ms

09:44:10.770 [main] DEBUG c.c.c.d.r.main.MasterServiceMain - Failed to cleanup temp directory /var/tmp/cdap/data/tmp

java.io.IOException: Not a directory: /var/tmp/cdap/data/tmp

at co.cask.cdap.common.utils.DirUtils.deleteDirectoryContents(DirUtils.java:61) ~[co.cask.cdap.cdap-common-3.1.1.jar:na]

at co.cask.cdap.data.runtime.main.MasterServiceMain.cleanupTempDir(MasterServiceMain.java:418) [co.cask.cdap.cdap-master-3.1.1.jar:na]

at co.cask.cdap.data.runtime.main.MasterServiceMain.init(MasterServiceMain.java:165) [co.cask.cdap.cdap-master-3.1.1.jar:na]

at co.cask.cdap.common.runtime.DaemonMain.doMain(DaemonMain.java:36) [co.cask.cdap.cdap-common-3.1.1.jar:na]

at co.cask.cdap.data.runtime.main.MasterServiceMain.main(MasterServiceMain.java:144) [co.cask.cdap.cdap-master-3.1.1.jar:na]

09:44:10.771 [main] INFO c.cask.cdap.common.io.URLConnections - Turning off default caching in URLConnection

09:44:10.898 [main] DEBUG org.apache.zookeeper.ClientCnxn - zookeeper.disableAutoWatchReset is false

09:44:10.914 [main-SendThread(localhost:2181)] INFO org.apache.zookeeper.ClientCnxn - Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

09:44:10.918 [main-SendThread(localhost:2181)] WARN org.apache.zookeeper.ClientCnxn - Session 0x0 for server null, unexpected error, closing socket connection and attempting reconnect

java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method) ~[na:1.7.0_79]

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739) ~[na:1.7.0_79]

at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:361) ~[zookeeper-3.4.6.jar:3.4.6-1569965]

at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1081) ~[zookeeper-3.4.6.jar:3.4.6-1569965]

09:44:10.919 [main-SendThread(localhost:2181)] DEBUG o.a.zookeeper.ClientCnxnSocketNIO - Ignoring exception during shutdown input

java.nio.channels.ClosedChannelException: null

Changes to /opt/cdap/master/bin/common-env.sh: added the following configuration.

export JAVA_HOME=/opt/cdap/jdk1.7.0_79
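The master log above shows the ZooKeeper client connecting to localhost:2181, while zookeeper.quorum in cdap-site.xml is 192.168.1.110:2181/${root.namespace}. A quick reachability probe of both addresses can show which one actually answers. The sketch below is an assumption-laden illustration: both function names are hypothetical, the probe relies on bash's /dev/tcp feature and the coreutils `timeout` command, and 2181 is used as the fallback port only because it is ZooKeeper's usual client port.

```bash
#!/usr/bin/env bash
# Hypothetical helper: split a quorum entry such as "192.168.1.110:2181/cdap"
# into "host port".
parse_zk_entry() {
  local entry="${1%%/*}"      # drop the optional chroot path after the first slash
  local host="${entry%%:*}"   # text before the first colon
  local port="${entry##*:}"   # text after the last colon
  if [ "$port" = "$entry" ]; then
    port=2181                 # no colon present: fall back to the usual port
  fi
  echo "$host $port"
}

# Hypothetical probe: succeed if a TCP connection opens within 3 seconds.
zk_reachable() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}
```

For example, `zk_reachable $(parse_zk_entry 192.168.1.110:2181/cdap) && echo reachable`, repeated with `localhost:2181`, compares the two endpoints.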

8. Exceptions found in router-cdap-ampool-HP-EliteBook-8570w.log

java.io.IOException: HADOOP_HOME or hadoop.home.dir are not set.

at org.apache.hadoop.util.Shell.checkHadoopHome(Shell.java:225) [hadoop-common-2.2.0.jar:na]

at org.apache.hadoop.util.Shell.<clinit>(Shell.java:250) [hadoop-common-2.2.0.jar:na]

at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:76) [hadoop-common-2.2.0.jar:na]

at org.apache.hadoop.yarn.conf.YarnConfiguration.<clinit>(YarnConfiguration.java:345) [hadoop-yarn-api-2.2.0.jar:na]

at co.cask.cdap.common.guice.ConfigModule.configure(ConfigModule.java:64) [co.cask.cdap.cdap-common-3.1.1.jar:na]

at com.google.inject.AbstractModule.configure(AbstractModule.java:59) [com.google.inject.guice-3.0.jar:na]

co.cask.cdap.common.HandlerException: No endpoint strategy found for request : /ping

at co.cask.cdap.gateway.router.handlers.HttpRequestHandler.getDiscoverable(HttpRequestHandler.java:197) ~[co.cask.cdap.cdap-gateway-3.1.1.jar:na]

at co.cask.cdap.gateway.router.handlers.HttpRequestHandler.messageReceived(HttpRequestHandler.java:106) ~[co.cask.cdap.cdap-gateway-3.1.1.jar:na]

at co.cask.cdap.gateway.router.handlers.HttpStatusRequestHandler.messageReceived(HttpStatusRequestHandler.java:65) ~[co.cask.cdap.cdap-gateway-3.1.1.jar:na]

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:296) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.handler.codec.frame.FrameDecoder.unfoldAndFireMessageReceived(FrameDecoder.java:459) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.handler.codec.replay.ReplayingDecoder.callDecode(ReplayingDecoder.java:536) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.handler.codec.replay.ReplayingDecoder.messageReceived(ReplayingDecoder.java:435) ~[io.netty.netty-3.6.6.Final.jar:na]

at co.cask.cdap.gateway.router.NettyRouter$1.handleUpstream(NettyRouter.java:177) ~[co.cask.cdap.cdap-gateway-3.1.1.jar:na]

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:268) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:255) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.channel.socket.nio.NioWorker.read(NioWorker.java:88) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.process(AbstractNioWorker.java:109) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.channel.socket.nio.AbstractNioSelector.run(AbstractNioSelector.java:312) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.run(AbstractNioWorker.java:90) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.channel.socket.nio.NioWorker.run(NioWorker.java:178) ~[io.netty.netty-3.6.6.Final.jar:na]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145) [na:1.7.0_79]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615) [na:1.7.0_79]

at java.lang.Thread.run(Thread.java:745) [na:1.7.0_79]

09:44:16.528 [New I/O worker #13] DEBUG c.c.c.g.router.RouterServiceLookup - Looking up service name appfabric

09:44:16.829 [New I/O worker #13] DEBUG c.c.c.g.router.RouterServiceLookup - Discoverable endpoint appfabric not found

09:44:16.829 [New I/O worker #13] ERROR c.c.c.g.router.RouterServiceLookup - No discoverable endpoints found for service CacheKey{service=appfabric, host=localhost:10000, firstPathPart=/v3}

09:44:16.831 [New I/O worker #13] ERROR c.c.c.g.r.h.HttpRequestHandler - Exception raised in Request Handler [id: 0x0d2ed568, /127.0.0.1:58068 => /127.0.0.1:10000]

co.cask.cdap.common.HandlerException: No endpoint strategy found for request : /v3/namespaces

at co.cask.cdap.gateway.router.handlers.HttpRequestHandler.getDiscoverable(HttpRequestHandler.java:197) ~[co.cask.cdap.cdap-gateway-3.1.1.jar:na]

at co.cask.cdap.gateway.router.handlers.HttpRequestHandler.messageReceived(HttpRequestHandler.java:106) ~[co.cask.cdap.cdap-gateway-3.1.1.jar:na]

at co.cask.cdap.gateway.router.handlers.HttpStatusRequestHandler.messageReceived(HttpStatusRequestHandler.java:65) ~[co.cask.cdap.cdap-gateway-3.1.1.jar:na]

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:296) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.handler.codec.frame.FrameDecoder.unfoldAndFireMessageReceived(FrameDecoder.java:459) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.handler.codec.replay.ReplayingDecoder.callDecode(ReplayingDecoder.java:536) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.handler.codec.replay.ReplayingDecoder.messageReceived(ReplayingDecoder.java:435) ~[io.netty.netty-3.6.6.Final.jar:na]

at co.cask.cdap.gateway.router.NettyRouter$1.handleUpstream(NettyRouter.java:177) ~[co.cask.cdap.cdap-gateway-3.1.1.jar:na]

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:268) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:255) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.channel.socket.nio.NioWorker.read(NioWorker.java:88) ~[io.netty.netty-3.6.6.Final.jar:na]

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.process(AbstractNioWorker.java:109) ~[io.netty.netty-3.6.6.Final.jar:na]

Changes to /opt/cdap/gateway/bin/common-env.sh: added the following configuration.

export JAVA_HOME=/opt/cdap/jdk1.7.0_79

9. ui-cdap-ampool-HP-EliteBook-8570w.log does not log any exception.

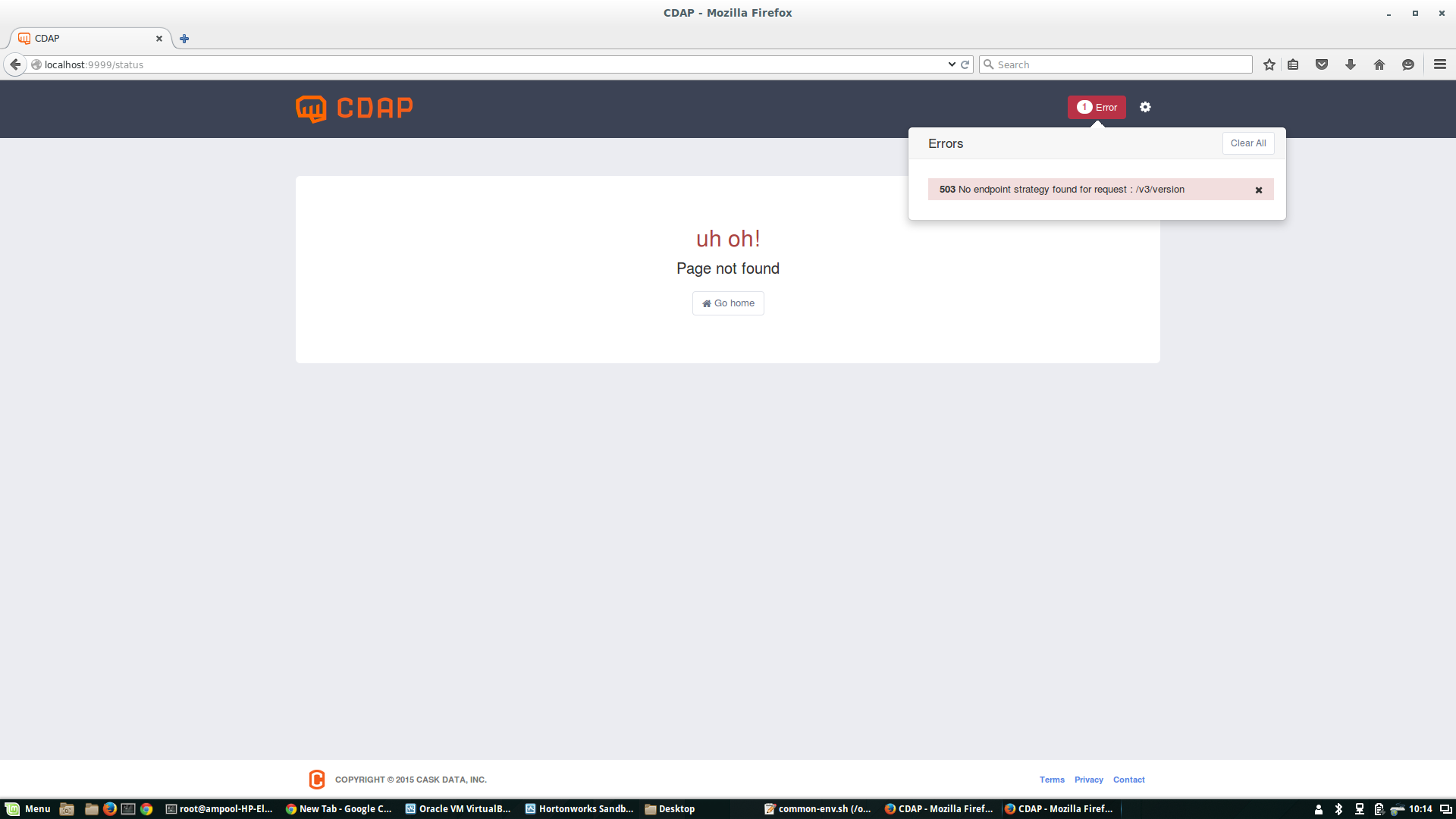

10, health check of the CDAP UI, http://localhost:9999/status gives me following screenshot

11. health check of the CDAP Router , http://localhost:10000/status

OK

12. health check of the CDAP Authentication Server, http://localhost:10009/status

Unable to connect

13. health check of all the services running in YARN, http://localhost:10000/v3/system/services

No endpoint strategy found for request : /v3/system/services
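The health checks in steps 10 to 13 can be repeated from a terminal after each configuration change. This is a rough sketch, assuming `curl` is installed; the function names `health_urls` and `probe` are hypothetical, and the ports and paths are simply the ones used in the steps above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the four status URLs used above for a given host.
health_urls() {
  local host="${1:-localhost}"
  # printf reuses the format string for each host/path pair.
  printf 'http://%s:%s\n' \
    "$host" 9999/status \
    "$host" 10000/status \
    "$host" 10009/status \
    "$host" 10000/v3/system/services
}

# Hypothetical helper: print the HTTP status code for one URL,
# or "unreachable" when curl cannot complete the request.
probe() {
  local code
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1") || code="unreachable"
  echo "$1 -> $code"
}
```

A loop such as `health_urls | while read -r url; do probe "$url"; done` prints one line per endpoint.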

14. Classpath for cdap master service

root@ampool-HP-EliteBook-8570w:/opt/cdap/security# /opt/cdap/master/bin/svc-master classpath

/opt/cdap/hbase-compat-0.98/lib/*:/opt/cdap/master/lib/*:/opt/cdap/hbase-0.98.13-hadoop2/bin/../conf:/opt/cdap/jdk1.7.0_79/lib/tools.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/..:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/activation-1.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/aopalliance-1.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/asm-3.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/avro-1.7.4.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-beanutils-1.7.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-beanutils-core-1.8.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-cli-1.2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-codec-1.7.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-collections-3.2.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-compress-1.4.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-configuration-1.6.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-daemon-1.0.13.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-digester-1.8.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-el-1.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-httpclient-3.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-io-2.4.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-lang-2.6.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-logging-1.1.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-math-2.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/commons-net-3.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/findbugs-annotations-1.3.9-1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/gmbal-api-only-3.0.0-b023.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/grizzly-framework-2.1.2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/grizzly-http-2.1.2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/grizzly-http-server-2.1.2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/grizzly-http-servlet-2.1.2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/grizzly-rcm-2.1.2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/guava-12.0.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/guice-3.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/guice-servlet-3.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-annotations-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-auth-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-client-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-common-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-hdfs-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-mapreduce-client-app-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-mapreduce-client-common-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-mapreduce-client-core-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-mapreduce-client-jobclient-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-mapreduce-client-shuffle-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-yarn-api-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-yarn-client-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-yarn-common-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-yarn-server-common-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hadoop-yarn-server-nodemanager-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hamcrest-core-1.3.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-annotations-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-checkstyle-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-client-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-common-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-common-0.98.13-hadoop2-tests.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-examples-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-hadoop2-compat-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-hadoop-compat-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-it-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-it-0.98.13-hadoop2-tests.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-prefix-tree-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-protocol-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-rest-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-server-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-server-0.98.13-hadoop2-tests.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-shell-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-testing-util-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/hbase-thrift-0.98.13-hadoop2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/high-scale-lib-1.1.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/htrace-core-2.04.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/httpclient-4.1.3.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/httpcore-4.1.3.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jackson-core-asl-1.8.8.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jackson-jaxrs-1.8.8.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jackson-mapper-asl-1.8.8.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jackson-xc-1.8.8.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jamon-runtime-2.3.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jasper-compiler-5.5.23.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jasper-runtime-5.5.23.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/javax.inject-1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/javax.servlet-3.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/javax.servlet-api-3.0.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jaxb-api-2.2.2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jaxb-impl-2.2.3-1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jcodings-1.0.8.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jersey-client-1.8.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jersey-core-1.8.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jersey-grizzly2-1.9.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jersey-guice-1.9.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jersey-json-1.8.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jersey-server-1.8.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jersey-test-framework-core-1.9.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jersey-test-framework-grizzly2-1.9.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jets3t-0.6.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jettison-1.3.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jetty-6.1.26.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jetty-sslengine-6.1.26.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jetty-util-6.1.26.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/joni-2.1.2.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jruby-complete-1.6.8.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jsch-0.1.42.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jsp-2.1-6.1.14.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jsp-api-2.1-6.1.14.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/jsr305-1.3.9.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/junit-4.11.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/libthrift-0.9.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/log4j-1.2.17.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/management-api-3.0.0-b012.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/metrics-core-2.2.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/netty-3.6.6.Final.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/paranamer-2.3.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/protobuf-java-2.5.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/servlet-api-2.5-6.1.14.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/slf4j-api-1.6.4.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/slf4j-log4j12-1.6.4.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/snappy-java-1.0.4.1.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/xmlenc-0.52.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/xz-1.0.jar:/opt/cdap/hbase-0.98.13-hadoop2/bin/../lib/zookeeper-3.4.6.jar:/usr/hdp/2.2.4.2-2/hadoop/conf:/usr/hdp/2.2.4.2-2/hadoop/lib/*:/usr/hdp/2.2.4.2-2/hadoop/.//*:/usr/hdp/2.2.4.2-2/hadoop-hdfs/./:/usr/hdp/2.2.4.2-2/hadoop-hdfs/lib/*:/usr/hdp/2.2.4.2-2/hadoop-hdfs/.//*:/usr/hdp/2.2.4.2-2/hadoop-yarn/lib/*:/usr/hdp/2.2.4.2-2/hadoop-yarn/.//*:/usr/hdp/2.2.4.2-2/hadoop-mapreduce/lib/*:/usr/hdp/2.2.4.2-2/hadoop-mapreduce/.//*:/etc/cdap/conf/:/opt/cdap/master/conf/:/etc/hbase/conf/
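The classpath above lists Hadoop 2.2.0 jars from the HBase directory ahead of the /usr/hdp/2.2.4.2-2 entries, and the stack traces in steps 5, 7 and 8 reference hadoop-common-2.2.0.jar. To see at a glance which explicitly named Hadoop jars are on a classpath, the string can be split on colons and filtered. This is only a sketch: the function name `hadoop_jars` is hypothetical, wildcard entries such as `lib/*` are not expanded, and whether the ordering is related to the errors above is not established here.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read a classpath on stdin and print the distinct
# hadoop-*.jar file names that are listed explicitly (wildcards are ignored).
hadoop_jars() {
  tr ':' '\n' \
    | grep -E '/hadoop-[a-z-]+-[0-9][^/]*\.jar$' \
    | sed 's|.*/||' \
    | sort -u
}
```

For example, `/opt/cdap/master/bin/svc-master classpath | hadoop_jars` would print one jar name per line.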

Derek Wood

--

You received this message because you are subscribed to the Google Groups "CDAP User" group.
