Qian Yang

Aug 21, 2017, 8:59:13 AM

to Caffe Users

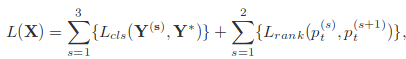

I am trying to implement RA-CNN and have run into problems while creating a new layer that computes the pairwise ranking loss.

Here are some basic formulas.

where $p_t^{(s)}$ denotes the prediction probability on the correct category label $t$, and $s$ denotes the scale index.
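For reference, here is a reconstruction of the loss these definitions refer to, following the RA-CNN formulation and matching the code in this post. The exact notation ($Y^{(s)}$ for the prediction at scale $s$, $Y^{*}$ for the ground-truth label) is an assumption:

$$
L(X) = \sum_{s=1}^{3} L_{cls}\big(Y^{(s)}, Y^{*}\big) + \sum_{s=1}^{2} L_{rank}\big(p_t^{(s)}, p_t^{(s+1)}\big)
$$

$$
L_{rank}\big(p_t^{(s)}, p_t^{(s+1)}\big) = \max\{0,\; p_t^{(s)} - p_t^{(s+1)} + \text{margin}\}
$$

The second sum is the pairwise ranking term. Wherever a hinge is active, its derivative is $+1$ with respect to $p_t^{(s)}$ and $-1$ with respect to $p_t^{(s+1)}$, and zero otherwise.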

In short, the whole network consists of three sub-networks, each of which outputs 10 class probabilities. The following code computes only the second term of the total loss.

    namespace caffe {

    template <typename Dtype>
    void RankLossLayer<Dtype>::Forward_cpu(const vector<Blob<Dtype>*>& bottom,
        const vector<Blob<Dtype>*>& top)
    {
        const Dtype* pred = bottom[0]->cpu_data();
        const Dtype* label = bottom[1]->cpu_data();
        int num = bottom[0]->num();      // number of samples
        int count = bottom[0]->count();  // length of data
        int dim = count / num;           // dim of classes
        Dtype margin = this->layer_param_.rank_loss_param().margin();
        Dtype loss = Dtype(0.0);
        for (int i = 0; i < num; i++)
        {
            int scale1_index = i * dim + (int)label[i];
            int scale2_index = i * dim + dim / 3 + (int)label[i];
            int scale3_index = i * dim + dim / 3 * 2 + (int)label[i];
            Dtype rankLoss12 = std::max(Dtype(0), pred[scale1_index] - pred[scale2_index] + margin);
            Dtype rankLoss23 = std::max(Dtype(0), pred[scale2_index] - pred[scale3_index] + margin);
            loss = rankLoss12 + rankLoss23;
        }
        top[0]->mutable_cpu_data()[0] = loss;
    }

    template <typename Dtype>
    void RankLossLayer<Dtype>::Backward_cpu(const vector<Blob<Dtype>*>& top,
        const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom)
    {
        const Dtype loss_weight = top[0]->cpu_diff()[0];
        const Dtype* pred = bottom[0]->cpu_data();
        const Dtype* label = bottom[1]->cpu_data();
        Dtype* bottom_diff = bottom[0]->mutable_cpu_diff();
        int num = bottom[0]->num();
        int count = bottom[0]->count();
        int dim = count / num;  // dim of classes
        Dtype margin = this->layer_param_.rank_loss_param().margin();
        memset(bottom_diff, Dtype(0), count * sizeof(Dtype));
        for (int i = 0; i < num; i++)
        {
            int scale1_index = i * dim + (int)label[i];
            int scale2_index = i * dim + dim / 3 + (int)label[i];
            int scale3_index = i * dim + dim / 3 * 2 + (int)label[i];
            if (pred[scale1_index] - pred[scale2_index] + margin > 0)
            {
                bottom_diff[scale1_index] += loss_weight;
                if (pred[scale2_index] - pred[scale3_index] + margin < 0)
                {
                    bottom_diff[scale2_index] += loss_weight;
                }
                else
                {
                    bottom_diff[scale3_index] -= loss_weight;
                }
            }
            else
            {
                if (pred[scale2_index] - pred[scale3_index] + margin > 0)
                {
                    bottom_diff[scale2_index] += loss_weight;
                    bottom_diff[scale3_index] -= loss_weight;
                }
            }
        }
    }

Here is my test code.

    void TestBackward()
    {
        LayerParameter layer_param;
        RankLossParameter* rank_loss_param = layer_param.mutable_rank_loss_param();
        rank_loss_param->set_margin(1);
        RankLossLayer<Dtype> layer(layer_param);
        GradientChecker<Dtype> checker(1e-4, 1e-2);  //, 1701, 0, 0.01);
        checker.CheckGradientExhaustive(&layer, this->blob_bottom_vec_, this->blob_top_vec_);
    }

However, when I run make runtest, the gradient check fails. Can anyone point out where I made a mistake? Any suggestions are welcome.
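Two things in the code above look like possible causes, offered as a sketch rather than a confirmed diagnosis. First, `Forward_cpu` assigns `loss = ...` inside the loop, so only the last sample contributes to the loss, while `Backward_cpu` accumulates a gradient for every sample. Second, the backward branches do not give each active hinge its matching `+loss_weight` / `-loss_weight` pair. The standalone C++ below uses plain `std::vector` instead of Caffe blobs, and the names `RankLossForward`, `RankLossBackward`, and `MaxGradientError` are hypothetical helpers, not Caffe API. It treats the two hinge terms independently and compares the analytic gradient against central finite differences.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical standalone helpers (not Caffe API). `pred` holds one row of
// dim = 3 * classes values per sample: scale-1, scale-2, scale-3 outputs.

// Sum over the batch of both hinge terms for the true class of each sample.
double RankLossForward(const std::vector<double>& pred,
                       const std::vector<int>& label,
                       int dim, double margin) {
  const int classes = dim / 3;
  const int num = static_cast<int>(label.size());
  double loss = 0.0;
  for (int i = 0; i < num; ++i) {
    const int s1 = i * dim + label[i];
    const int s2 = s1 + classes;
    const int s3 = s2 + classes;
    // Accumulate with += so every sample contributes, not only the last one.
    loss += std::max(0.0, pred[s1] - pred[s2] + margin);
    loss += std::max(0.0, pred[s2] - pred[s3] + margin);
  }
  return loss;
}

// Subgradient of RankLossForward with respect to pred, scaled by loss_weight.
std::vector<double> RankLossBackward(const std::vector<double>& pred,
                                     const std::vector<int>& label,
                                     int dim, double margin,
                                     double loss_weight) {
  const int classes = dim / 3;
  const int num = static_cast<int>(label.size());
  std::vector<double> diff(pred.size(), 0.0);
  for (int i = 0; i < num; ++i) {
    const int s1 = i * dim + label[i];
    const int s2 = s1 + classes;
    const int s3 = s2 + classes;
    // Each active hinge raises its first argument and lowers its second.
    if (pred[s1] - pred[s2] + margin > 0) {
      diff[s1] += loss_weight;
      diff[s2] -= loss_weight;
    }
    if (pred[s2] - pred[s3] + margin > 0) {
      diff[s2] += loss_weight;
      diff[s3] -= loss_weight;
    }
  }
  return diff;
}

// Largest gap between the analytic gradient and central finite differences.
double MaxGradientError(std::vector<double> pred,
                        const std::vector<int>& label,
                        int dim, double margin, double step) {
  const std::vector<double> analytic =
      RankLossBackward(pred, label, dim, margin, 1.0);
  double worst = 0.0;
  for (std::size_t k = 0; k < pred.size(); ++k) {
    const double saved = pred[k];
    pred[k] = saved + step;
    const double plus = RankLossForward(pred, label, dim, margin);
    pred[k] = saved - step;
    const double minus = RankLossForward(pred, label, dim, margin);
    pred[k] = saved;  // restore before perturbing the next element
    const double numeric = (plus - minus) / (2.0 * step);
    worst = std::max(worst, std::fabs(numeric - analytic[k]));
  }
  return worst;
}
```

Calling `MaxGradientError` on a small batch whose hinge arguments are not close to zero should return a value near machine precision. The hinge is not differentiable where its argument is exactly zero, so finite differences can disagree with the analytic gradient near those points. In the Caffe test it may also help to pass `0` as the fourth argument (`check_bottom`) of `CheckGradientExhaustive`, so that only the prediction blob is checked and not the label blob. That assumes the test fixture puts predictions in bottom 0 and labels in bottom 1.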
