Submariner for local redundant clusters

john...@gmail.com

Feb 9, 2021, 10:49:01 PM

to submariner-users

Hello,

I am working on an edge use case with redundant local clusters on-prem over a private network. I have been able to execute the Kind Sandbox walkthrough and obtain successful results from subctl verify.

As a next step toward something more closely resembling my use case, I am attempting to connect 2 single-node clusters using Submariner. Thus far, I have tried a few different minimal cases from my development machine: minikube, (2) microk8s, and (2) VMs using kubeadm from scratch. All give the same result: a seemingly successful Submariner deployment, but no connectivity between clusters after joining. Perhaps the minikube and microk8s cases were problematic because of their pre-packaged nature, which is why I eventually moved to kubeadm and 2 VMs, so I would like to focus on this case.

My setup is as follows:

2 VMs (VirtualBox) each using bridged adapter to LAN (wired).

Clusters created via kubeadm using different pod & service CIDRs:

cluster A: (broker)

host IP 192.168.1.71

pod-cidr 10.71.0.0/16

svc-cidr 10.72.0.0/16

cluster B:

host IP 192.168.1.66

pod-cidr 10.66.0.0/16

svc-cidr 10.67.0.0/16
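Editor's sketch: without Globalnet, Submariner needs the pod and service CIDRs of all joined clusters to be non-overlapping, so it can be worth checking the four ranges above before joining. The following is a minimal POSIX shell sketch of such a check. The helper names (ip_to_int, cidr_start, cidr_end, cidrs_overlap) are illustrative and not part of subctl.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# First address of a CIDR block, as an integer.
cidr_start() {
  ip=${1%/*}
  prefix=${1#*/}
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  echo $(( base & mask ))
}

# Last address of a CIDR block, as an integer.
cidr_end() {
  prefix=${1#*/}
  start=$(cidr_start "$1")
  echo $(( start + (1 << (32 - prefix)) - 1 ))
}

# Succeeds (exit status 0) when the two CIDR blocks share any address.
cidrs_overlap() {
  s1=$(cidr_start "$1"); e1=$(cidr_end "$1")
  s2=$(cidr_start "$2"); e2=$(cidr_end "$2")
  [ "$s1" -le "$e2" ] && [ "$s2" -le "$e1" ]
}

# Compare every pair of the pod/service CIDRs listed in this thread.
set -- 10.71.0.0/16 10.72.0.0/16 10.66.0.0/16 10.67.0.0/16
found=0
while [ $# -gt 1 ]; do
  a=$1
  shift
  for b in "$@"; do
    if cidrs_overlap "$a" "$b"; then
      echo "overlap: $a and $b"
      found=1
    fi
  done
done
if [ "$found" -eq 0 ]; then
  echo "no overlapping CIDRs found"
fi
```

For the ranges in this setup the check reports no overlap, so the CIDR plan itself does not look like the cause of the connectivity loss.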

My results have been the same in each of my attempts:

I am able to successfully deploy Submariner broker to cluster A (subctl).

I am able to successfully join the broker cluster (subctl join).

Joining cluster B results in Submariner successfully deployed/running on cluster B, but once either the gateway pod or the routeagent pod comes online, communication between the clusters ceases. In fact, I can no longer ping between the VMs until I remove the Submariner deployment.

Any ideas where I am going wrong here? Perhaps these machines must reside on different subnets?

regards,

John

Miguel Angel Ajo

Feb 10, 2021, 4:36:49 AM

to john...@gmail.com, submariner-users

Hello John!

If NAT is enabled (it is by default), the gateway will discover its public IP (your internet IP) and then announce that IP in the broker as the public IP, which other clusters will use to connect.

That doesn't work very well in several cases:

1) If two clusters with NAT enabled try to communicate with each other, they will go to the public IP of the router, which will not work.

2) If you have one public cluster and multiple on-premises clusters behind NAT.

We have an open issue to detect NAT/no-NAT in real time, https://github.com/submariner-io/submariner/issues/300, but it's still not prioritized. My idea was to use a separate port with public access where all gateways can talk to each other. When connecting to a cluster, a gateway would first try to ping the private IP; if connectivity succeeds, the private IP is used. If that doesn't work, the public IP is attempted. If that doesn't work either, print a warning and try blindly on the public IP as a fallback (maybe the detection port is closed while the IPsec or WireGuard ports are open).
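Editor's sketch: the fallback order described in the proposal (private IP first, then public IP, then a blind attempt on the public IP with a warning) can be written down as a small decision function. This is only an illustration of the idea, not Submariner's implementation. The function names probe and choose_endpoint are hypothetical, and a single ping stands in for the dedicated detection port mentioned above.

```shell
#!/bin/sh
# Hypothetical reachability probe: succeeds if the address answers one ping.
# (Assumption: a real implementation would use a dedicated detection port.)
probe() {
  ping -c 1 -W 1 "$1" >/dev/null 2>&1
}

# Print the IP that should be used to reach a remote gateway.
#   $1 = private IP announced by the remote cluster
#   $2 = public IP announced by the remote cluster
choose_endpoint() {
  if probe "$1"; then
    # Private IP is reachable: no NAT traversal needed.
    echo "$1"
  elif probe "$2"; then
    echo "$2"
  else
    # Neither probe worked; the tunnel ports may still be open.
    echo "warning: no probe succeeded, trying public IP $2 blindly" >&2
    echo "$2"
  fi
}
```

A call such as `choose_endpoint 192.168.1.66 203.0.113.7` (the second address is a documentation placeholder) would print the private address when both VMs sit on the same LAN, which is the situation in this thread.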

To very likely fix this in your deployment (hopefully it's case 1, where there's no mix), add this parameter to join:

`--disable-nat` (this flag will change to --natt=false in 0.9.0, and --disable-nat will be deprecated).

You can re-join existing clusters and the nat config will be updated if you use that flag. Alternatively you can also do:

kubectl edit Submariner submariner -n submariner-operator

and disable the nat setting on each cluster, the pods will be restarted with nat disabled.
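Editor's sketch: re-joining both clusters with the flag might look like the following. The commands are only printed by default so they can be reviewed first. The broker-info.subm file name is what subctl deploy-broker normally writes, while the cluster IDs and kubeconfig file names are placeholders for this example.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Re-join one cluster to the broker with NAT traversal disabled.
#   $1 = broker info file, $2 = cluster ID, $3 = kubeconfig for that cluster
rejoin_without_nat() {
  run subctl join "$1" --kubeconfig "$3" --clusterid "$2" --disable-nat
}

rejoin_without_nat broker-info.subm cluster-a kubeconfig-a
rejoin_without_nat broker-info.subm cluster-b kubeconfig-b
```

On 0.9.0 and later the flag would be --natt=false instead, as noted above.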

Let me know if that fixes your case, and whether you expect to hit case 2 described above.

Best regards,

Miguel Ángel

--

You received this message because you are subscribed to the Google Groups "submariner-users" group.

To unsubscribe from this group and stop receiving emails from it, send an email to submariner-use...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/submariner-users/a343d4b3-bcbc-4ce7-a42e-4ddb3d00983cn%40googlegroups.com.

Miguel Ángel Ajo @mangel_ajo

OpenShift / Kubernetes / Multi-cluster Networking team.

ex OSP / Networking DFG, OVN Squad Engineering

Sridhar Gaddam

Feb 10, 2021, 4:56:15 AM

to john...@gmail.com, Miguel Angel Ajo, submariner-users

In the KIND Sandbox environment, all the clusters are deployed on the same subnet, so separate subnets are not mandatory.

As Miguel pointed out, please check whether you specified "--disable-nat" (or --natt=false) while joining the clusters.

john...@gmail.com

Feb 10, 2021, 1:02:02 PM

to submariner-users

Thank you, this appears to have been the problem. Both clusters are now able to join (confirmed via subctl show) and I am able to run tests.

Tests start running, but I run into issues with both the connectivity and service-discovery suites. I am not running the gateway suite since I have only single-node clusters.

For connectivity, it seems a single-node cluster may not be appropriate, or at least I need to provide some annotation/label to satisfy affinity? (see attached log)

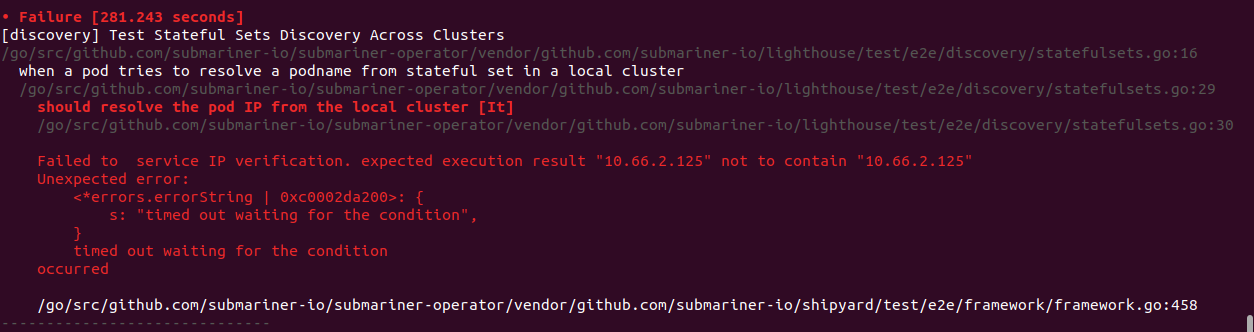

For service discovery, the test suite seems to get stuck trying to validate that an exposed service was indeed removed. (see attached screenshot of test output).

Many thanks for the help!

regards,

John

Sridhar Gaddam

Feb 10, 2021, 1:35:39 PM

to john...@gmail.com, submariner-users

Thanks for confirming, John. Please see below, inline.

Thank you- this appears to have been the problem. Both clusters are now able to join (confirmed via subctl show) and I am able to run tests.

Glad to hear that.

Tests start running, but I run into issues with both connectivity and service-discovery suites.

It looks like your clusters are deployed with Calico CNI which requires an additional step. Please follow the instructions mentioned here

I am not running gateway suite since I have only 1 node cluster.

Yes, the end-to-end tests in Submariner try to validate various use-cases by scheduling Pods on different nodes and expect at least two nodes in each of the clusters (a Gateway node and a non-Gateway node).
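Editor's sketch: since the end-to-end suites expect a gateway node plus at least one non-gateway node per cluster, a quick node count before running them can explain scheduling-related failures up front. The helper names below are illustrative. The kubectl invocation is shown only as a comment because it needs a live cluster, and the context name in it is a placeholder.

```shell
#!/bin/sh
# Count non-empty lines on stdin, e.g. the output of
#   kubectl get nodes --no-headers
count_lines() {
  grep -c . || true
}

# Succeeds when the node count is enough for the suites that need both a
# gateway node and a non-gateway node (at least two nodes).
enough_nodes_for_e2e() {
  [ "$1" -ge 2 ]
}

# Usage against a live cluster (not executed here):
#   nodes=$(kubectl --context cluster-a get nodes --no-headers | count_lines)
#   enough_nodes_for_e2e "$nodes" || echo "cluster-a has $nodes node(s)"
```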

John Finch

Feb 12, 2021, 2:23:16 PM

to Sridhar Gaddam, submariner-users

Again, thank you for the information; I'm progressing further down the path.

I did fiddle with the Calico configuration a bit, but was still having trouble getting things working (certainly due to my inexperience with Calico). I decided to redeploy using Flannel and got to the point where all connectivity tests pass, but 7 of the service discovery tests fail due to timeouts. This is probably an issue with the DNS config for the cluster, but I'm not particularly knowledgeable in this area.

Regardless, much closer to a working setup. Thanks!

regards,

John

Miguel Angel

Feb 12, 2021, 8:39:41 PM

to John Finch, vth...@redhat.com, Sridhar Gaddam, submariner-users

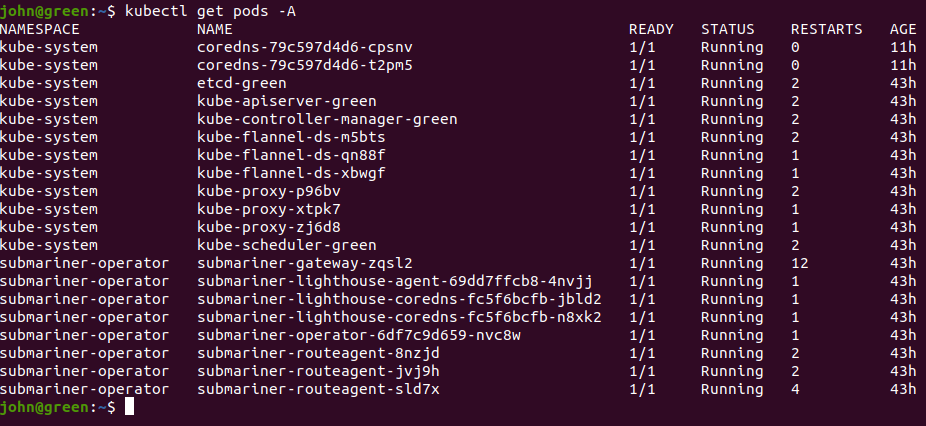

I suspect that your cluster could be running kube-dns instead of CoreDNS. Can you check by listing the pods?

kubectl get pods -A

If it’s the case please check this workaround here:

We should probably go ahead and include support for kubedns.

John Finch

Feb 13, 2021, 10:58:29 AM

to Miguel Angel, vth...@redhat.com, Sridhar Gaddam, submariner-users

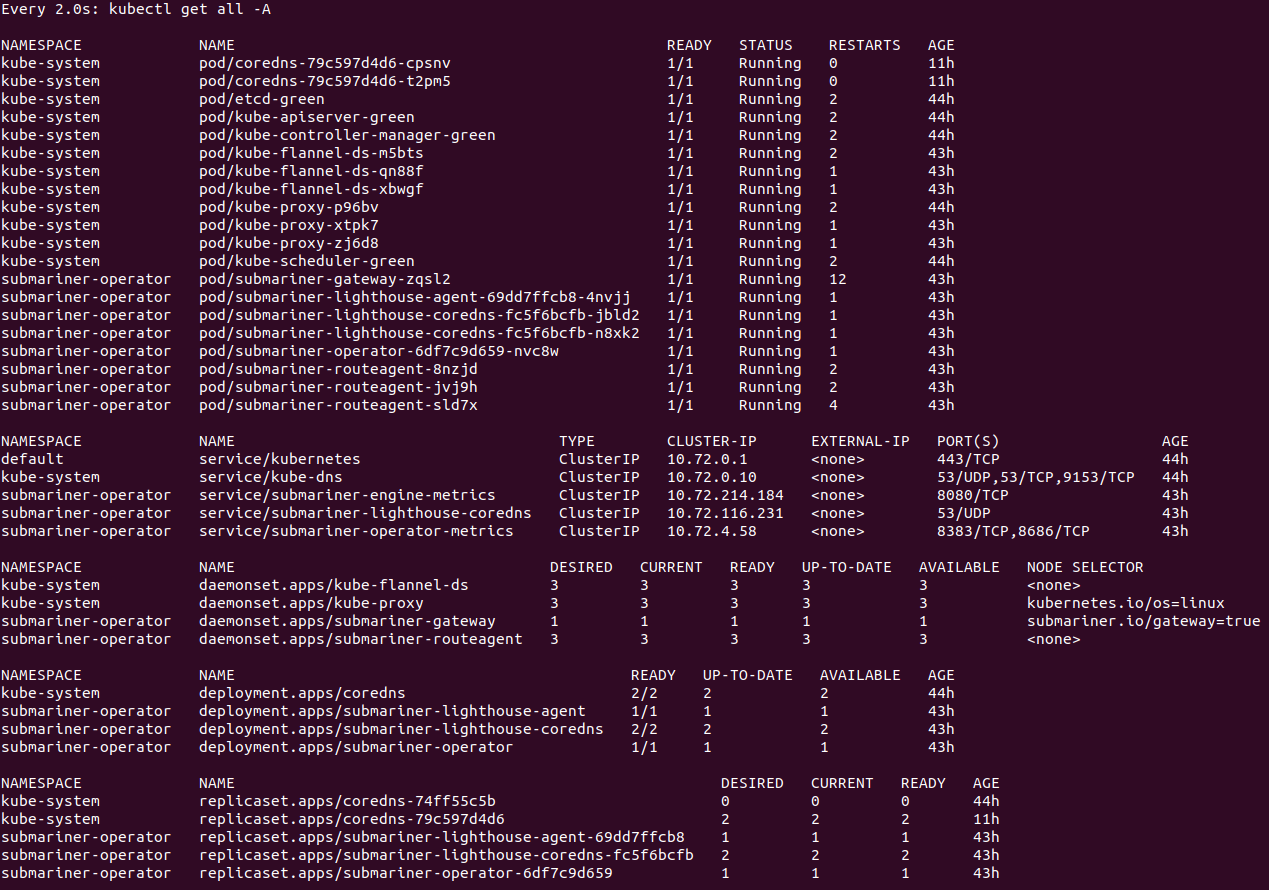

I have kube-dns showing as a service, but I think it's backed by the CoreDNS pods:

I did try the suggested kube-dns workaround, and restarted the CoreDNS pods, but ended up with the same timeout result on the same 7 service-discovery tests.

Both my clusters are deployed via kubeadm, v1.20.2, so I think I'm running CoreDNS.
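Editor's sketch: on kubeadm clusters the Service is still named kube-dns even when CoreDNS pods back it, so the container image is a more reliable signal than the Service name. The function below is a hypothetical helper that classifies an image string. The kubectl query is shown as a comment because it needs a live cluster, and it relies on the k8s-app=kube-dns label that kubeadm applies to the DNS pods.

```shell
#!/bin/sh
# Classify a DNS container image name as coredns, kube-dns, or unknown.
classify_dns_image() {
  case "$1" in
    *coredns*) echo coredns ;;
    *kube-dns*|*k8s-dns*) echo kube-dns ;;
    *) echo unknown ;;
  esac
}

# Usage against a live cluster (not executed here):
#   image=$(kubectl -n kube-system get pods -l k8s-app=kube-dns \
#     -o jsonpath='{.items[0].spec.containers[0].image}')
#   classify_dns_image "$image"
```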

regards,

John

john...@gmail.com

Feb 22, 2021, 12:59:42 PM

to submariner-users

Hi,

Following up after chipping away at this over the last week. I remain stuck with 7 service-discovery tests continuing to fail. I've confirmed I am running CoreDNS, and I have rebuilt the clusters to confirm I am using the Flannel CNI as well. I've confirmed I see the expected output from subctl show all, and can successfully run the throughput and latency tests. I've also gone through Step 1, Validate Installation, from the user guide, again observing the expected results.

Any pointers on where I can dig around to see why I am getting network timeouts on these 7 test cases? (Output from test run is attached)

Are there other logs or anything I can provide to assist in troubleshooting?

Summarizing 7 Failures:

[Fail] [discovery] Test Stateful Sets Discovery Across Clusters when a pod tries to resolve a podname from stateful set in a remote cluster [It] should resolve the pod IP from the remote cluster

/go/src/github.com/submariner-io/submariner-operator/vendor/github.com/submariner-io/shipyard/test/e2e/framework/framework.go:458

[Fail] [discovery] Test Stateful Sets Discovery Across Clusters when a pod tries to resolve a podname from stateful set in a local cluster [It] should resolve the pod IP from the local cluster

/go/src/github.com/submariner-io/submariner-operator/vendor/github.com/submariner-io/shipyard/test/e2e/framework/framework.go:458

[Fail] [discovery] Test Headless Service Discovery Across Clusters when a pod tries to resolve a headless service in a remote cluster [It] should resolve the backing pod IPs from the remote cluster

/go/src/github.com/submariner-io/submariner-operator/vendor/github.com/submariner-io/shipyard/test/e2e/framework/framework.go:458

[Fail] [discovery] Test Headless Service Discovery Across Clusters when a pod tries to resolve a headless service which is exported locally and in a remote cluster [It] should resolve the backing pod IPs from both clusters

/go/src/github.com/submariner-io/submariner-operator/vendor/github.com/submariner-io/shipyard/test/e2e/framework/framework.go:458

[Fail] [discovery] Test Service Discovery Across Clusters when a pod tries to resolve a service in a remote cluster [It] should be able to discover the remote service successfully

/go/src/github.com/submariner-io/submariner-operator/vendor/github.com/submariner-io/shipyard/test/e2e/framework/framework.go:458

[Fail] [discovery] Test Service Discovery Across Clusters when a pod tries to resolve a service which is present locally and in a remote cluster [It] should resolve the local service

/go/src/github.com/submariner-io/submariner-operator/vendor/github.com/submariner-io/shipyard/test/e2e/framework/framework.go:458

[Fail] [discovery] Test Service Discovery Across Clusters when service export is created before the service [It] should resolve the service

/go/src/github.com/submariner-io/submariner-operator/vendor/github.com/submariner-io/shipyard/test/e2e/framework/framework.go:458

Ran 23 of 34 Specs in 952.546 seconds

FAIL! -- 16 Passed | 7 Failed | 0 Pending | 11 Skipped

Miguel Angel

Feb 23, 2021, 4:55:26 AM

to vth...@redhat.com, Aswin Suryanarayanan, submariner-users

Vishal, Aswin, do you have any idea what could be wrong with John's deployment? ^

John: Vishal & Aswin are our service discovery experts :)

Aswin Suryanarayanan

Feb 23, 2021, 6:58:54 AM

to finche...@gmail.com, Vishal Thapar, submariner-users, Miguel Angel

Hi John,

It seems like the tests are failing in the last step, where they verify the delete flow (i.e. delete the ServiceExport and verify that the dig command fails). So it seems like the ServiceImport that Lighthouse creates is not getting cleaned up/updated.

Can you have a look at the logs of lighthouse-agent running in the submariner-operator namespace?
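Editor's sketch: the agent logs in klog format, where each line starts with a severity letter (I, W, E) followed by the month and day, so error lines can be pulled out with a simple filter. The deployment name in the usage comment is inferred from the pod name that appears later in this thread, and klog_errors is an illustrative helper name.

```shell
#!/bin/sh
# Keep only error-level klog lines: "E" followed by MMDD and a space.
klog_errors() {
  grep -E '^E[0-9]{4} ' || true
}

# Usage against a live cluster (not executed here):
#   kubectl -n submariner-operator logs deployment/submariner-lighthouse-agent \
#     | klog_errors
```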

Thanks

Aswin

Aswin Suryanarayanan

Feb 23, 2021, 8:14:52 AM

to John Finch, Vishal Thapar, submariner-users, Miguel Angel

Hi John,

Logs look fine. Can you try the manual verification of service discovery as in [1], with 'dig' instead of 'curl'? If it works, delete the ServiceExport and see whether dig then fails from a remote cluster. If it does not fail, check whether the ServiceImport (in the submariner-operator ns) is getting deleted from all clusters. Also, while using 'dig', take a look at the TTL value, just to confirm it is not a high value.
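Editor's sketch: the TTL check can be scripted by asking dig for the answer section only and reading the second column. The helper name answer_ttls is illustrative. The commands in the comments assume the exported service is nginx in namespace test (as in the clusterset name used in this thread) and that the short resource name serviceimports resolves on the cluster. Neither was run as part of this sketch.

```shell
#!/bin/sh
# Print "TTL address" for each A record read from stdin.
# Input is `dig +noall +answer` output: NAME TTL CLASS TYPE RDATA
answer_ttls() {
  awk '$3 == "IN" && $4 == "A" { print $2, $5 }'
}

# Usage (not executed here):
#   dig +noall +answer nginx.test.svc.clusterset.local | answer_ttls
#   kubectl -n test delete serviceexports.multicluster.x-k8s.io nginx
#   kubectl -n submariner-operator get serviceimports
#   dig +noall +answer nginx.test.svc.clusterset.local | answer_ttls
```

An empty result from the last command would indicate that the record was withdrawn after the ServiceExport was deleted.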

Thanks,

Aswin

On Tue, Feb 23, 2021 at 6:10 PM John Finch <finche...@gmail.com> wrote:

Hi Aswin,

Here is the lighthouse-agent log after a run of the test suite:

john@green:~/.local/bin$ kubectl logs pod/submariner-lighthouse-agent-6bdff54f75-t798l --namespace submariner-operator

+ trap 'exit 1' SIGTERM SIGINT

+ LIGHTHOUSE_VERBOSITY=1

+ '[' '' == true ']'

+ DEBUG=-v=1

+ exec lighthouse-agent -v=1 -alsologtostderr

I0222 16:55:16.591705 1 main.go:34] AgentSpec: {green submariner-operator false}

W0222 16:55:16.592048 1 client_config.go:543] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

I0222 16:55:16.592378 1 main.go:51] Starting submariner-lighthouse-agent {green submariner-operator false}

I0222 16:55:38.304413 1 agent.go:167] Starting Agent controller

I0222 16:55:38.918260 1 agent.go:193] Agent controller started

E0223 01:28:59.605893 1 queue.go:83] Endpoints -> EndpointSlice: Failed to process object with key "nginx-test/nginx-ss": error creating &unstructured.Unstructured{Object:map[string]interface {}{"addressType":"IPv4", "apiVersion":"discovery.k8s.io/v1beta1", "endpoints":[]interface {}{map[string]interface {}{"addresses":[]interface {}{"10.71.1.65"}, "conditions":map[string]interface {}{"ready":true}, "hostname":"web-1", "topology":map[string]interface {}{"kubernetes.io/hostname":"gwork"}}}, "kind":"EndpointSlice", "metadata":map[string]interface {}{"labels":map[string]interface {}{"endpointslice.kubernetes.io/managed-by":"lighthouse-agent.submariner.io", "lighthouse.submariner.io/sourceCluster":"green", "lighthouse.submariner.io/sourceName":"nginx-ss", "lighthouse.submariner.io/sourceNamespace":"nginx-test", "multicluster.kubernetes.io/service-name":"nginx-ss-nginx-test-green"}, "name":"nginx-ss-green", "namespace":"nginx-test", "ownerReferences":[]interface {}{map[string]interface {}{"apiVersion":"lighthouse.submariner.io.v2alpha1", "controller":false, "kind":"ServiceImport", "name":"nginx-ss-nginx-test-green", "uid":"2d2339af-382c-4db1-8a0e-c6a2055e8064"}}}, "ports":[]interface {}{map[string]interface {}{"name":"web", "port":80, "protocol":"TCP"}}}}: endpointslices.discovery.k8s.io "nginx-ss-green" is forbidden: unable to create new content in namespace nginx-test because it is being terminated

E0223 01:28:59.617115 1 queue.go:83] Endpoints -> EndpointSlice: Failed to process object with key "nginx-test/nginx-ss": error creating &unstructured.Unstructured{Object:map[string]interface {}{"addressType":"IPv4", "apiVersion":"discovery.k8s.io/v1beta1", "endpoints":interface {}(nil), "kind":"EndpointSlice", "metadata":map[string]interface {}{"labels":map[string]interface {}{"endpointslice.kubernetes.io/managed-by":"lighthouse-agent.submariner.io", "lighthouse.submariner.io/sourceCluster":"green", "lighthouse.submariner.io/sourceName":"nginx-ss", "lighthouse.submariner.io/sourceNamespace":"nginx-test", "multicluster.kubernetes.io/service-name":"nginx-ss-nginx-test-green"}, "name":"nginx-ss-green", "namespace":"nginx-test", "ownerReferences":[]interface {}{map[string]interface {}{"apiVersion":"lighthouse.submariner.io.v2alpha1", "controller":false, "kind":"ServiceImport", "name":"nginx-ss-nginx-test-green", "uid":"2d2339af-382c-4db1-8a0e-c6a2055e8064"}}}, "ports":interface {}(nil)}}: endpointslices.discovery.k8s.io "nginx-ss-green" is forbidden: unable to create new content in namespace nginx-test because it is being terminated

john@green:~/.local/bin$

Miguel Angel

Feb 23, 2021, 11:25:33 AM

to John Finch, Aswin Suryanarayanan, Vishal Thapar, submariner-users

It should stop resolving (ignoring the TTL part) as soon as you delete the service export or the service. The failure of that to happen is what the E2E failures are indicating.

Which version of k8s are you using?

Can you share the steps/details to reproduce in the form of an issue in the lighthouse repo?

Can you also post there the logs for the lighthouse-agent on all clusters around the time you create, then delete, the service or service export manually?

@Aswin Suryanarayanan Is there anything else we could need? ^

On Tue, Feb 23, 2021 at 5:19 PM John Finch <finche...@gmail.com> wrote:

Hi Aswin,

Thanks. I performed the manual verification as suggested, but am not certain I deleted the service export in the correct way, so I would like to confirm.

After exporting the service, the remote cluster is able to resolve it correctly (cluster exporting service = 10.72.x.x, remote cluster is 10.67.x.x):

bash-5.0# dig nginx.test.svc.clusterset.local

; <<>> DiG 9.16.6 <<>> nginx.test.svc.clusterset.local

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 44242

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 16a67284d43d8a77 (echoed)

;; QUESTION SECTION:

;nginx.test.svc.clusterset.local. IN A

;; ANSWER SECTION:

nginx.test.svc.clusterset.local. 5 IN A 10.72.158.54

;; Query time: 0 msec

;; SERVER: 10.67.0.10#53(10.67.0.10)

;; WHEN: Tue Feb 23 14:59:12 UTC 2021

;; MSG SIZE rcvd: 119

Then I attempted to delete the ServiceExport resources from the cluster exporting the service using kubectl delete serviceexports.multicluster.x-k8s.io --all. This seemed to have no effect though, as I was still able to resolve the service from the remote cluster. This remained true after waiting more than 5 minutes in case TTL was my problem.

So then I simply deleted the entire test namespace from the cluster exporting the service. Once deleted, I retried the dig query from the remote cluster and I do observe the service is no longer resolvable from the remote cluster:

bash-5.0# dig nginx.test.svc.clusterset.local

; <<>> DiG 9.16.6 <<>> nginx.test.svc.clusterset.local

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 64806

;; flags: qr aa rd; QUERY: 1, ANSWER: 0, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 2d076e73e6d6a967 (echoed)

;; QUESTION SECTION:

;nginx.test.svc.clusterset.local. IN A

;; Query time: 8 msec

;; SERVER: 10.67.0.10#53(10.67.0.10)

;; WHEN: Tue Feb 23 16:03:36 UTC 2021

;; MSG SIZE rcvd: 72
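Editor's sketch: the two dig runs above differ in the ANSWER count (1 before, 0 after), and that check can be automated by polling until the name stops resolving or a timeout expires. The function names are illustrative, and resolve is a thin wrapper around dig +short so it can be swapped out. One thing worth ruling out when repeating the manual test: kubectl delete with --all and no -n flag only covers the current namespace, so for a service in namespace test the delete would need -n test.

```shell
#!/bin/sh
# Print the A records for a name; empty output means it does not resolve.
resolve() {
  dig +short "$1"
}

# Poll until the name stops resolving.
#   $1 = name, $2 = timeout in seconds (default 60), $3 = interval (default 5)
# Returns 0 once the name no longer resolves, 1 if the timeout is reached.
wait_until_unresolved() {
  name=$1
  timeout=${2:-60}
  interval=${3:-5}
  waited=0
  while [ -n "$(resolve "$name")" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  return 0
}

# Usage from a pod in the remote cluster (not executed here):
#   kubectl -n test delete serviceexports.multicluster.x-k8s.io --all
#   wait_until_unresolved nginx.test.svc.clusterset.local 60 5 \
#     || echo "still resolving after 60s"
```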

John Finch

Feb 23, 2021, 12:30:41 PM

to Aswin Suryanarayanan, Vishal Thapar, submariner-users, Miguel Angel

Hi Aswin,

Here is the lighthouse-agent log after a run of the test suite:

john@green:~/.local/bin$ kubectl logs pod/submariner-lighthouse-agent-6bdff54f75-t798l --namespace submariner-operator

+ trap 'exit 1' SIGTERM SIGINT

+ LIGHTHOUSE_VERBOSITY=1

+ '[' '' == true ']'

+ DEBUG=-v=1

+ exec lighthouse-agent -v=1 -alsologtostderr

I0222 16:55:16.591705 1 main.go:34] AgentSpec: {green submariner-operator false}

W0222 16:55:16.592048 1 client_config.go:543] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

I0222 16:55:16.592378 1 main.go:51] Starting submariner-lighthouse-agent {green submariner-operator false}

I0222 16:55:38.304413 1 agent.go:167] Starting Agent controller

I0222 16:55:38.918260 1 agent.go:193] Agent controller started

E0223 01:28:59.605893 1 queue.go:83] Endpoints -> EndpointSlice: Failed to process object with key "nginx-test/nginx-ss": error creating &unstructured.Unstructured{Object:map[string]interface {}{"addressType":"IPv4", "apiVersion":"discovery.k8s.io/v1beta1", "endpoints":[]interface {}{map[string]interface {}{"addresses":[]interface {}{"10.71.1.65"}, "conditions":map[string]interface {}{"ready":true}, "hostname":"web-1", "topology":map[string]interface {}{"kubernetes.io/hostname":"gwork"}}}, "kind":"EndpointSlice", "metadata":map[string]interface {}{"labels":map[string]interface {}{"endpointslice.kubernetes.io/managed-by":"lighthouse-agent.submariner.io", "lighthouse.submariner.io/sourceCluster":"green", "lighthouse.submariner.io/sourceName":"nginx-ss", "lighthouse.submariner.io/sourceNamespace":"nginx-test", "multicluster.kubernetes.io/service-name":"nginx-ss-nginx-test-green"}, "name":"nginx-ss-green", "namespace":"nginx-test", "ownerReferences":[]interface {}{map[string]interface {}{"apiVersion":"lighthouse.submariner.io.v2alpha1", "controller":false, "kind":"ServiceImport", "name":"nginx-ss-nginx-test-green", "uid":"2d2339af-382c-4db1-8a0e-c6a2055e8064"}}}, "ports":[]interface {}{map[string]interface {}{"name":"web", "port":80, "protocol":"TCP"}}}}: endpointslices.discovery.k8s.io "nginx-ss-green" is forbidden: unable to create new content in namespace nginx-test because it is being terminated

E0223 01:28:59.617115 1 queue.go:83] Endpoints -> EndpointSlice: Failed to process object with key "nginx-test/nginx-ss": error creating &unstructured.Unstructured{Object:map[string]interface {}{"addressType":"IPv4", "apiVersion":"discovery.k8s.io/v1beta1", "endpoints":interface {}(nil), "kind":"EndpointSlice", "metadata":map[string]interface {}{"labels":map[string]interface {}{"endpointslice.kubernetes.io/managed-by":"lighthouse-agent.submariner.io", "lighthouse.submariner.io/sourceCluster":"green", "lighthouse.submariner.io/sourceName":"nginx-ss", "lighthouse.submariner.io/sourceNamespace":"nginx-test", "multicluster.kubernetes.io/service-name":"nginx-ss-nginx-test-green"}, "name":"nginx-ss-green", "namespace":"nginx-test", "ownerReferences":[]interface {}{map[string]interface {}{"apiVersion":"lighthouse.submariner.io.v2alpha1", "controller":false, "kind":"ServiceImport", "name":"nginx-ss-nginx-test-green", "uid":"2d2339af-382c-4db1-8a0e-c6a2055e8064"}}}, "ports":interface {}(nil)}}: endpointslices.discovery.k8s.io "nginx-ss-green" is forbidden: unable to create new content in namespace nginx-test because it is being terminated

john@green:~/.local/bin$

On Tue, Feb 23, 2021 at 5:58 AM Aswin Suryanarayanan <asur...@redhat.com> wrote:

Aswin Suryanarayanan

Feb 23, 2021, 1:11:01 PM

to Miguel Angel, John Finch, Vishal Thapar, submariner-users

On Tue, Feb 23, 2021 at 9:56 PM 'Miguel Angel' via submariner-users <submarin...@googlegroups.com> wrote:

It should stop resolving (ignoring the TTL part) as soon as you delete the service export or the service. The failure of that to happen is what the E2E failures are indicating.

Which version of k8s are you using?

Can you share the steps/details to reproduce in the form of an issue in the lighthouse repo?

Can you also post there the logs for the lighthouse-agent on all clusters around the time you create, then delete, the service or service export manually?

@Aswin Suryanarayanan Is there anything else we could need? ^

The lighthouse-coredns logs may give some additional info too.

@John Finch Was the ServiceImport (in the submariner-operator ns) deleted/updated in the cluster where you tried the 'dig' command when you removed the ServiceExport?

Aswin Suryanarayanan

Feb 24, 2021, 9:18:48 AM

to John Finch, Miguel Angel, Vishal Thapar, submariner-users

On Wed, Feb 24, 2021 at 6:44 PM John Finch <finche...@gmail.com> wrote:

Hi all,

For some reason I am having trouble with my responses getting deleted on the mailing list, so I just wanted to respond to the questions above:

I'm using K8s v1.20.4, submariner v0.8.1

I logged the issue as requested at the lighthouse repo: https://github.com/submariner-io/lighthouse/issues/470

@Aswin Suryanarayanan, from the cluster where I invoked the 'dig' command, I did not delete/update anything with ServiceImport, after removing the ServiceExport. dig was still able to resolve the service. It was after I deleted the test namespace from the exporting cluster that the dig request at the remote cluster was finally no longer able to resolve the service.

@John Finch

Thanks for reporting the issue.

Lighthouse is supposed to delete the ServiceImport corresponding to the ServiceExport, when ServiceExport is deleted. I just wanted to confirm if that is happening.

regards,

John

John Finch

Mar 7, 2021, 6:04:57 AM

to Aswin Suryanarayanan, Miguel Angel, Vishal Thapar, submariner-users

Hi all,

I just wanted to close the loop on this issue. It has been addressed by https://github.com/submariner-io/lighthouse/pull/480, and hence the issue I was experiencing and logged as https://github.com/submariner-io/lighthouse/issues/470 is closed as well.

I was able to confirm successful E2E tests for connectivity+service-discovery using the dev build that incorporated the fix from #480.

Thanks all for your assistance.

regards,

John
