Exception during "create branch"

Vikram Roopchand

Aug 6, 2022, 3:42:58 AM

to projectnessie

Hello There,

We are trying to create a branch using the spark-sql shell.

./spark-sql --packages org.apache.iceberg:iceberg-spark-runtime-3.2_2.12:0.13.0,org.projectnessie:nessie-spark-3.2-extensions:0.41.0 --conf spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions,org.projectnessie.spark.extensions.NessieSpark32SessionExtensions --conf spark.sql.catalog.nessie.uri=http://localhost:19120/api/v1 --conf spark.sql.catalog.nessie.ref=main --conf spark.sql.catalog.nessie.authentication.type=NONE --conf spark.sql.catalog.nessie.catalog-impl=org.apache.iceberg.nessie.NessieCatalog --conf spark.sql.catalog.nessie.warehouse=hdfs://voyager:9000/DI/warehouse --conf spark.sql.catalog.nessie=org.apache.iceberg.spark.SparkCatalog

>> create branch if not exists etl in nessie;

The above results in

java.lang.NoSuchMethodError: org.apache.spark.sql.catalyst.trees.Origin.<init>(Lscala/Option;Lscala/Option;)V

at org.apache.spark.sql.catalyst.parser.extensions.NessieParserUtils$.position(NessieSqlExtensionsAstBuilder.scala:141)

at org.apache.spark.sql.catalyst.parser.extensions.NessieParserUtils$.withOrigin(NessieSqlExtensionsAstBuilder.scala:131)

at org.apache.spark.sql.catalyst.parser.extensions.NessieSqlExtensionsAstBuilder.visitSingleStatement(NessieSqlExtensionsAstBuilder.scala:100)

at org.apache.spark.sql.catalyst.parser.extensions.NessieSqlExtensionsAstBuilder.visitSingleStatement(NessieSqlExtensionsAstBuilder.scala:26)

at org.apache.spark.sql.catalyst.parser.extensions.NessieSqlExtensionsParser$SingleStatementContext.accept(NessieSqlExtensionsParser.java:126)

at org.projectnessie.shaded.org.antlr.v4.runtime.tree.AbstractParseTreeVisitor.visit(AbstractParseTreeVisitor.java:18)

at org.apache.spark.sql.catalyst.parser.extensions.NessieSparkSqlExtensionsParser.$anonfun$parsePlan$1(NessieSparkSqlExtensionsParser.scala:107)

at org.apache.spark.sql.catalyst.parser.extensions.NessieSparkSqlExtensionsParser.parse(NessieSparkSqlExtensionsParser.scala:150)

at org.apache.spark.sql.catalyst.parser.extensions.NessieSparkSqlExtensionsParser.parsePlan(NessieSparkSqlExtensionsParser.scala:106)

at org.apache.spark.sql.SparkSession.$anonfun$sql$2(SparkSession.scala:620)

at org.apache.spark.sql.catalyst.QueryPlanningTracker.measurePhase(QueryPlanningTracker.scala:111)

at org.apache.spark.sql.SparkSession.$anonfun$sql$1(SparkSession.scala:620)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:779)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:617)

at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:651)

at org.apache.spark.sql.hive.thriftserver.SparkSQLDriver.run(SparkSQLDriver.scala:67)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.processCmd(SparkSQLCLIDriver.scala:384)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.$anonfun$processLine$1(SparkSQLCLIDriver.scala:504)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.$anonfun$processLine$1$adapted(SparkSQLCLIDriver.scala:498)

at scala.collection.Iterator.foreach(Iterator.scala:943)

at scala.collection.Iterator.foreach$(Iterator.scala:943)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1431)

at scala.collection.IterableLike.foreach(IterableLike.scala:74)

at scala.collection.IterableLike.foreach$(IterableLike.scala:73)

at scala.collection.AbstractIterable.foreach(Iterable.scala:56)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.processLine(SparkSQLCLIDriver.scala:498)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver$.main(SparkSQLCLIDriver.scala:286)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.main(SparkSQLCLIDriver.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:958)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1046)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1055)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Do I need to add any additional or different dependencies?

Thanks in advance for any assistance on the above.

best regards,

Vikram

Ajantha Bhat

Aug 7, 2022, 10:44:29 PM

to Vikram Roopchand, projectnessie

Hi Vikram,

It looks like you are using an old version of Iceberg (0.13.0) with the latest version of Nessie (0.41.0).

This is Nessie's compatibility matrix.

https://github.com/projectnessie/nessie#compatibility

Can you please use Iceberg's 0.14.0 release?

Thanks,

Ajantha

Vikram Roopchand

Aug 9, 2022, 1:36:59 AM

to Ajantha Bhat, projectnessie

Dear Ajantha,

I did this, but I still get the same exception.

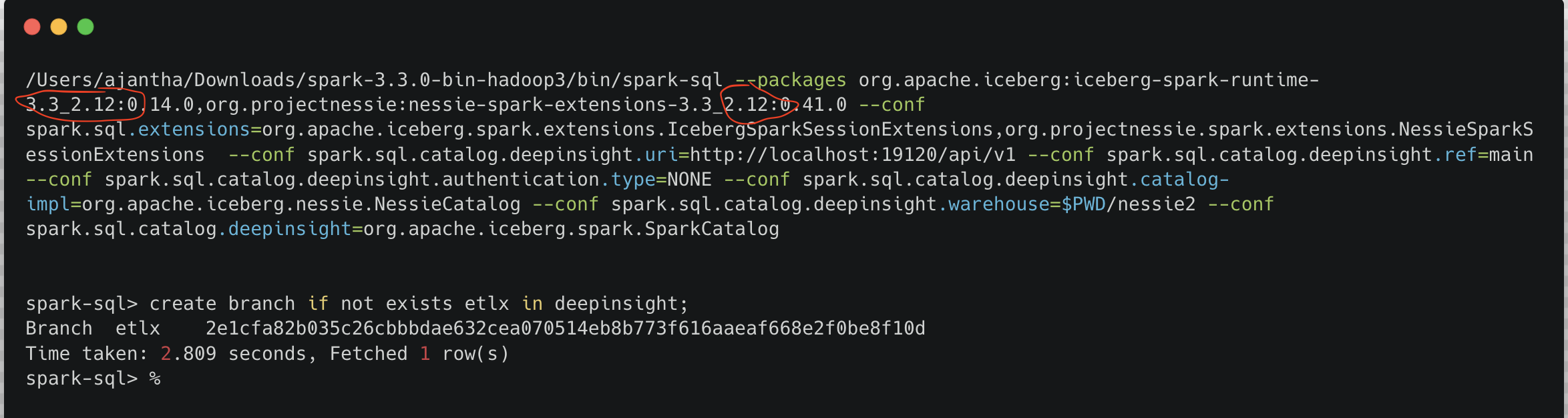

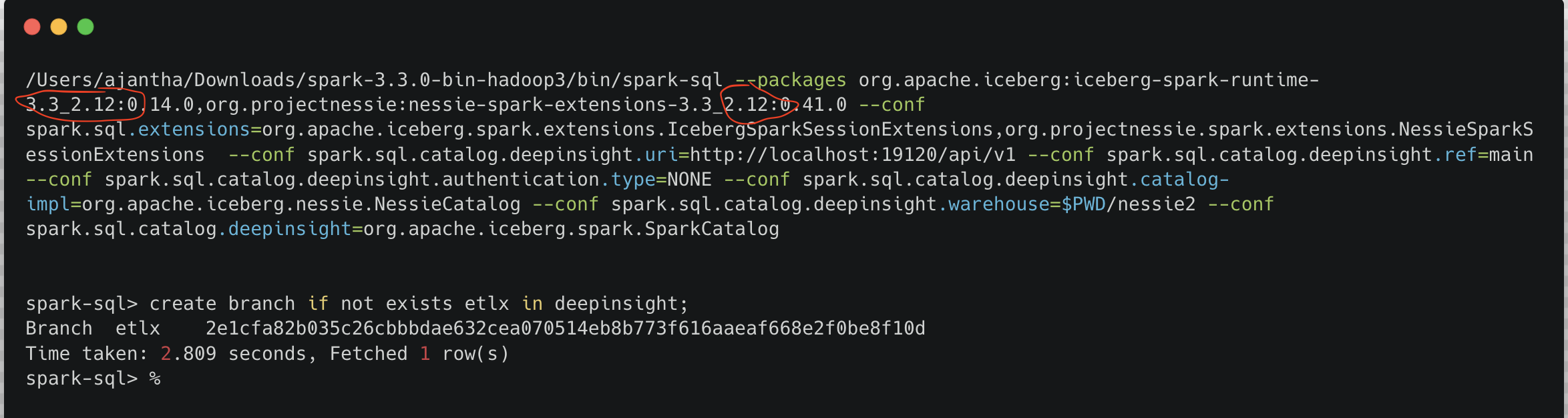

./spark-sql --packages org.apache.iceberg:iceberg-spark-runtime-3.3_2.13:0.14.0,org.projectnessie:nessie-spark-extensions-3.3_2.13:0.41.0 --conf spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions,org.projectnessie.spark.extensions.NessieSpark32SessionExtensions --conf spark.sql.catalog.deepinsight.uri=http://localhost:19120/api/v1 --conf spark.sql.catalog.deepinsight.ref=main --conf spark.sql.catalog.deepinsight.authentication.type=NONE --conf spark.sql.catalog.deepinsight.catalog-impl=org.apache.iceberg.nessie.NessieCatalog --conf spark.sql.catalog.deepinsight.warehouse=hdfs://voyager:9000/DI/warehouse --conf spark.sql.catalog.deepinsight=org.apache.iceberg.spark.SparkCatalog

I was able to create a branch via the APIs.

thanks again,

best regards,

Vikram

Ajantha Bhat

Aug 9, 2022, 4:49:13 AM

to Vikram Roopchand, projectnessie

Hi Vikram,

I checked your configuration: Spark 3.3 with Nessie 0.41.0 and Iceberg 0.14.0.

a. NessieSpark32SessionExtensions is only for Spark 3.2 (and is deprecated), so we need to use NessieSparkSessionExtensions, which works for all versions of Spark.

b. Please check which Scala version your Spark jars were built with (look inside spark-3.3.0-hadoop3/jars). I think your Spark is compiled with Scala 2.12.

I was able to reproduce your issue using Spark compiled with Scala 2.12 together with Iceberg and Nessie jars compiled with Scala 2.13.

So mixing jars compiled with different Scala versions can cause this problem.

It can be fixed by using jars that are all compiled with the same Scala version.
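One way to check which Scala version a Spark distribution was built with is to look at the scala-library jar in its jars directory. Below is a minimal sketch of that check. The function name scala_binary_version is made up for illustration, and it assumes the jar follows the usual scala-library-<version>.jar naming:

```shell
# Print the Scala binary version (for example 2.12) of a Spark distribution
# by inspecting the scala-library jar shipped in its jars directory.
# Hypothetical helper; assumes the jar is named scala-library-<version>.jar.
# Usage: scala_binary_version "$SPARK_HOME/jars"
scala_binary_version() {
  jars_dir="$1"
  for jar in "$jars_dir"/scala-library-*.jar; do
    [ -e "$jar" ] || continue          # glob did not match anything
    name=${jar##*/}                    # strip the directory part
    version=${name#scala-library-}     # strip the artifact name prefix
    version=${version%.jar}            # strip the extension
    echo "${version%.*}"               # drop the patch component
    return 0
  done
  echo "no scala-library jar found in $jars_dir" >&2
  return 1
}
```

The printed value should match the _2.12 or _2.13 suffix on the Iceberg and Nessie artifact names passed to --packages.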

I am using Spark compiled with Scala 2.12, so I modified your configuration to use the Scala 2.12 builds of Iceberg and Nessie.

With that, the create branch SQL works fine.
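The modified command is not quoted in the thread, so the following is only a reconstruction based on the two points above: the Scala 2.12 artifacts for both Iceberg and Nessie, and NessieSparkSessionExtensions in place of NessieSpark32SessionExtensions. The catalog name, URI and warehouse path are copied from the earlier command. The variable names and the launch_spark_sql wrapper are made up for illustration:

```shell
# Reconstruction under the assumptions stated above, not the exact command
# that was run in the thread.
SPARK_VERSION=3.3
SCALA_VERSION=2.12      # must match the Scala version of the Spark build
ICEBERG_VERSION=0.14.0
NESSIE_VERSION=0.41.0

# Both artifacts carry the same Spark and Scala suffix.
PACKAGES="org.apache.iceberg:iceberg-spark-runtime-${SPARK_VERSION}_${SCALA_VERSION}:${ICEBERG_VERSION}"
PACKAGES="${PACKAGES},org.projectnessie:nessie-spark-extensions-${SPARK_VERSION}_${SCALA_VERSION}:${NESSIE_VERSION}"

# NessieSparkSessionExtensions replaces the Spark 3.2 specific class.
EXTENSIONS="org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions"
EXTENSIONS="${EXTENSIONS},org.projectnessie.spark.extensions.NessieSparkSessionExtensions"

# Hypothetical wrapper; run it from the Spark bin directory.
launch_spark_sql() {
  ./spark-sql \
    --packages "$PACKAGES" \
    --conf spark.sql.extensions="$EXTENSIONS" \
    --conf spark.sql.catalog.deepinsight=org.apache.iceberg.spark.SparkCatalog \
    --conf spark.sql.catalog.deepinsight.catalog-impl=org.apache.iceberg.nessie.NessieCatalog \
    --conf spark.sql.catalog.deepinsight.uri=http://localhost:19120/api/v1 \
    --conf spark.sql.catalog.deepinsight.ref=main \
    --conf spark.sql.catalog.deepinsight.authentication.type=NONE \
    --conf spark.sql.catalog.deepinsight.warehouse=hdfs://voyager:9000/DI/warehouse \
    "$@"
}
```

With the catalog named deepinsight as above, the statement from the first message would presumably become: create branch if not exists etl in deepinsight;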

Thanks,

Ajantha

Vikram Roopchand

Aug 10, 2022, 11:29:27 PM

to Ajantha Bhat, projectnessie

Dear Ajantha,

thanks again,

best regards,

Vikram
