Definition of "P" in rays that hit the environment....?

Master Zap

Nov 29, 2019, 8:20:30 PM

to OSL Developers

So I ran into a fun problem.

Some renderers set P to 0.0 for rays that hit the environment... but to me this is wrong. Because if I want to make a shader that applies a screen image, I need to be able to do transform("screen", P) .... which is obviously impossibru for a 0,0,0 coordinate.

I think a good way to "fix" this is to simply invent some point that is along the ray from the camera to the environment... most easily, the camera position + I ?

But the specification of OSL is silent on this, so I can't put much force behind my argument.

I think that doing transform("screen", P) should be possible also in an environment shader, and for that to happen, P can't be zero.....

..right?

/Z

Olivier Paquet

Dec 1, 2019, 5:16:17 PM

to OSL Developers

On Friday, November 29, 2019 at 8:20:30 PM UTC-5, Master Zap wrote:

> So I ran into a fun problem. Some renderers set P to 0.0 for rays that hit the environment... but to me this is wrong. Because if I want to make a shader that applies a screen image, I need to be able to do transform("screen", P) .... which is obviously impossibru for a 0,0,0 coordinate.

Just checked, we do that indeed.

> I think a good way to "fix" this is to simply invent some point that is along the ray from the camera to the environment... most easily, the camera position + I ?

How will that work for secondary bounces? I don't think it makes sense. But then, neither does a screen image in that case.

If you only care about camera rays, can't you already do something like transform("screen", transform("camera", "common", point(0)) + I) ? Or for that matter, simply transform("screen", I) ?

> But the specification of OSL is silent on this, so I can't put much force behind my argument.

That should probably be fixed. But the conceptually correct value would be infinitely far away, which is useless. 0 is faster to compute :-)

> I think that doing transform("screen", P) should be possible also in an environment shader, and for that to happen, P can't be zero.....

The environment has no position. It's at infinity. So I don't think it's reasonable to transform its P.

Olivier

Master Zap

Dec 1, 2019, 5:24:22 PM

to OSL Developers

Sorry but does the spec guarantee that transform("screen", I) works?!? And what would that even mean? Transforming a view direction to a screen pixel? That makes zero sense to me....

In my mind, transform("screen", P) should always work, by spec.....

/Z

Master Zap

Dec 1, 2019, 5:25:44 PM

to OSL Developers

...and while the point "should" be "conceptually infinitely far away", this is unworkable for numerical precision reasons.

All that is required, is that

a) the point is on the ray heading towards the background and

b) it is transformable to a "screen" coordinate.

<Camera position> + I

would do that just nicely.....

/Z

Olivier Paquet

Dec 1, 2019, 5:55:10 PM

to OSL Developers

On Sunday, December 1, 2019 at 5:24:22 PM UTC-5, Master Zap wrote:

> Sorry but does the spec guarantee that transform("screen", I) works?!? And what would that even mean? Transforming a view direction to a screen pixel? That makes zero sense to me....

Why not? Every pixel on the screen corresponds to a camera ray direction in the scene. It should be possible to map the other way around (for some directions anyway). The spec probably does not say anything about it though. Might not be a good idea either.

"camera position" + I makes no sense to me except that it will achieve what you want to achieve in the near term. I see it as a "make my use case work" feature.

Either way, transform("screen", transform("camera", "common", point(0)) + I) should work ok right now. It is exactly what you're asking for as the camera position is 0 (in camera space). I think it's better to write it out than to always set P to some arbitrary value which will never be used by most shaders.
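A minimal sketch of the workaround described above, written as a standalone environment shader. The shader name `env_screen_point` and its output parameter are hypothetical, and the sketch assumes pinhole camera rays only (for secondary bounces or depth of field the reconstructed point does not lie on the actual ray).

```osl
// Hypothetical environment shader: rebuilds a point on the camera ray
// instead of relying on P, which some renderers set to 0 in this context.
shader env_screen_point(
    output point screen_pos = point(0))
{
    // The camera sits at the origin of "camera" space; express it in "common" space.
    point cam_pos = transform("camera", "common", point(0));

    // Any point along the view direction will do; I is the incident ray direction.
    point on_ray = cam_pos + I;

    // Project the reconstructed point instead of P.
    screen_pos = transform("screen", on_ray);
}
```

Writing this out in the shader keeps the assumption (all rays start at the camera position) visible to whoever reads the code.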

Olivier

Master Zap

Dec 2, 2019, 4:47:51 AM

to OSL Developers

I don't think this makes sense. If I do this, the same shader mapped on an object would return the wrong thing in a reflection, rather than back-converting the hit point P to where it is in screen space....

if instead the spec is augmented to specify that P, in an environment context, must have a value that is valid to convert to screen space, everything would work fine and consistently.....

I don't really see the harm in doing this - it simplifies things a lot...?

/Z

Olivier Paquet

Dec 2, 2019, 8:59:55 AM

to OSL Developers

On Monday, December 2, 2019 at 4:47:51 AM UTC-5, Master Zap wrote:

> I don't think this makes sense. If I do this, the same shader mapped on an object would return the wrong thing in a reflection, rather than back-converting the hit point P to where it is in screen space....

Huhhh... not sure I follow here. What do you expect this to do in a reflection? To show your screen mapped texture as if the object were transparent instead? Meaning that you'd use the same P, based on the camera ray, for all later bounces? That would be problematic in a path tracer which might not even keep that camera ray around. Even worse for a bidirectional path tracer trying to generate paths from the environment.

> if instead the spec is augmented to specify that P, in an environment context, must have a value that is valid to convert to screen space, everything would work fine and consistently.....
>
> I don't really see the harm in doing this - it simplifies things a lot...?

It simplifies your current need. But you're looking at this through a single narrow use case. The harm is that we can paint ourselves into a corner with something which makes no general sense but end up stuck with. I've seen a lot of those things over the years, often coming from similar user requests with similar justifications, so you'll understand if I'm rather wary of this approach of defining how something should work. It comes down to:

- Will P not being 0 hurt something else? Like performance, or the ability to do bidirectional tracing as mentioned above.

- Could there be another way to define the value of P which is more useful in general?

Those are not easy questions to answer but they are important. OSL will likely still be around 10-20 years from now. It would be unfortunate if at that point people end up discussing that "P is defined as this weird value because someone wanted an easy screen texture projection back in 2019" ;-)

Olivier

Master Zap

Dec 3, 2019, 8:52:18 AM

to OSL Developers

Imagine I have a reflective teapot on top of a screen-mapped plane.

When the reflection ray of the teapot hits the plane, I expect it to pick up the screen-mapped pixel projected back into screen space.

I.e. I expect transform("screen", P) at that intersection to return what I want. (Which it does, today).

Now, assume I want to use the same screen-mapped shader in my environment. Currently I can't (in the P=0 renderers) because suddenly, what was valid in the reflection, is suddenly not valid for the camera ... if it hits the object... but it works if it hits the plane ... uhm... that's quite inconsistent and weird to me.
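For readers unfamiliar with the technique, a screen-mapped shader of the kind described here might look roughly like the sketch below. The shader and parameter names are hypothetical, and it uses the "NDC" space instead of "screen" so the projected coordinates can be used directly as texture coordinates.

```osl
// Hypothetical screen-mapped shader (names are illustrative only).
shader screen_mapped(
    string filename = "",
    output color result = color(0))
{
    // Project the shaded point back through the camera.
    // "NDC" is used here instead of "screen" so the result lands in [0,1] x [0,1].
    point ndc = transform("NDC", P);

    // Look up the image at that position; t may need flipping
    // depending on the orientation of the image.
    result = texture(filename, ndc[0], ndc[1]);
}
```

On a surface, P is a real hit point and the projection is well defined. In an environment context the same code only works if the renderer hands the shader a P that lies on the ray.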

/Z

Master Zap

Jan 21, 2020, 3:31:15 PM

to OSL Developers

*Boink.*

Larry? Help :)

/Z

Master Zap

Mar 30, 2020, 5:48:17 AM

to OSL Developers

Ok I found a real world case where this is breaking things, and the trick to transform 0 to camera isn't working:

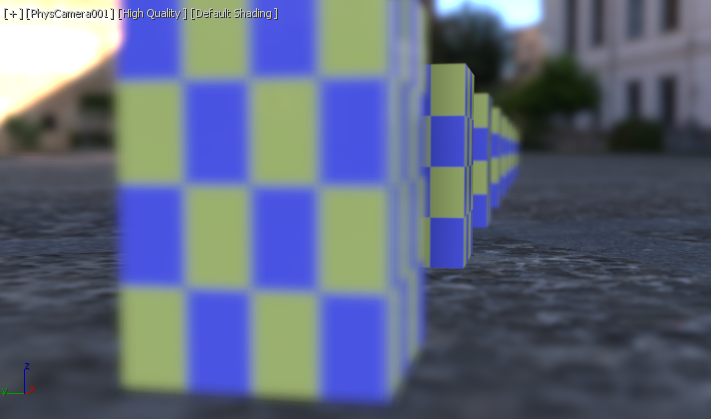

I wrote a ground projection shader for max 2021... and in my viewport backend (which does set P to a (in my mind) sensible value in environment hits) I can compute the environment ray's intersection with the virtual ground plane. So the ground these boxes are standing on doesn't exist, it's the environment, but in my shader I project it to the flat plane. My viewport code does the right thing, because it has the current P that defines the real ray:

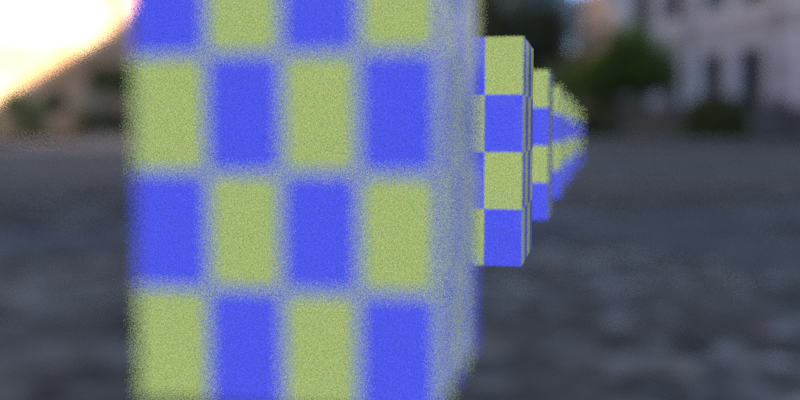

But in Arnold, where I do not have this value, and have to back-transform "0" from camera space, it turns out that it is just giving me the camera origin, not the origin of that particular DOF ray.

So I get the wrong things (as if the ground was at infinity):

I think this is broken. I think P, in an environment shader, should be decreed in the spec to be "on the ray", so I can make computations like this properly.
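To illustrate the kind of computation meant here, this is a minimal sketch of a ray/ground-plane intersection in an environment shader. It is not the code from the shipping shader; the shader name, parameters, and the Z-up "world" space are assumptions, and it relies on P lying on the ray at or near its origin, which is exactly what the spec does not currently guarantee.

```osl
// Hypothetical sketch: intersect the environment ray with a virtual ground plane.
// Assumes P lies on the ray (at or near its origin) and a Z-up "world" space.
shader env_ground_hit(
    float ground_height = 0.0,
    output int   hits_ground = 0,
    output point ground_pos  = point(0))
{
    point  Pw = transform("world", P);
    vector Dw = normalize(transform("world", I));

    // Only rays heading downward can reach the ground plane.
    if (Dw[2] < -1e-6) {
        // Solve Pw.z + t * Dw.z = ground_height for t.
        float t = (ground_height - Pw[2]) / Dw[2];
        if (t > 0) {
            hits_ground = 1;
            ground_pos  = Pw + t * Dw;
        }
    }
}
```

If P is 0, every ray appears to start at the origin and the intersection is meaningless. If P is reconstructed from the camera position, the result is right for a pinhole camera but wrong for depth-of-field rays, whose origins are spread over the lens.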

/Z

Master Zap

Mar 30, 2020, 5:52:55 AM

to OSL Developers

The shader in question is here https://github.com/ADN-DevTech/3dsMax-OSL-Shaders/blob/master/3ds%20Max%20Shipping%20Shaders/HDRIEnviron.osl

You may need to adjust it if you have the wrong up-axis.

Maybe this all really points to a lack of an O global in OSL (ray origin)?

/Z

Larry Gritz

Mar 31, 2020, 11:18:10 AM

to OSL Developers List

So, let me see if I understand correctly.

Rays that hit nothing and get some kind of background shading don't seem to have a meaningful P. At least not in some renderers? And in particular, Autodesk Arnold seems to set P=(0,0,0) which seems especially unhelpful? And so you think it would be better to make P always be some finite point along the ray (even if very far away)?

The OSL library doesn't set P, it just uses whatever the renderer hands it, so I'm not sure what we on the OSL side can directly do to fix it.

Seems to me that mainly what you need is for your own Arnold team to make sure this is the case for your embedded renderer.

Maybe, if numerics or other reasons make it unwise to try to set P to some far-away finite position along the ray, it would be better if Arnold, instead of punting and setting it to world (0,0,0), would at least set it to the ray origin? That's no worse philosophically than forcing it to world origin, it's not expensive to compute, and it would give you enough information for the trickery you are trying to employ in your shader.

I guess you want me to bless an approach and recommend that it be the case for all renderers? I mean, I guess it sounds ok, but I haven't really considered all the edge cases and what it might make more difficult for the renderer. I'd appreciate hearing from others (especially the renderer authors) about why this might be hard or ill-advised.

(SPI team, opinions?)

Etienne Sandré-Chardonnal

Mar 31, 2020, 12:01:54 PM

to osl...@googlegroups.com

Hi, these are my 2 cents on this:

Global 'I' is much more meaningful than 'P' for passing the coordinates of the environment, as it's a pure direction, and this is actually the view direction.

But currently, we can't write support for

transform("screen", I)

As the renderer can only implement "transform_points", so it can't transform pure directions (aka OSL 'vectors')

So ideally, we should be able to implement "transform_vectors" in the renderer.

Alternatively, you can still retrieve the 4x4 world-to-screen homogeneous transformation matrix, and multiply it by the 4-vector (I.x, I.y, I.z, 0), this should give you the screen space coordinates.

Since OSL doesn't offer a 4-vector type, you need to do some work by hand.

Example (not tested):

matrix m = matrix("world", "screen");

m[0][3] = 0;

m[1][3] = 0;

m[2][3] = 0;

point screenpos = m * I;

Etienne

Christopher Kulla

Mar 31, 2020, 12:09:44 PM

to osl...@googlegroups.com

I think Zap was asking for P to mean ray origin in the background context. It sounds like he did it this way for his viewport backend, but would like to be able to ask that other renderers do the same.

Our own renderer sets P to some distant value far along the ray like Larry suggested, but I don't think that is useful at all. In lens and imager shaders we have chosen to co-opt P to mean the ray origin, so there is some precedent to allow this.

So really, we should just update the docs to say P=ray origin in the background context (and update testrender if it doesn't do this).

The alternative would be to introduce a ray origin into the shader globals, but given that it would probably require derivatives - this would add a whopping 36 bytes to shader globals at a time where we are trying to shrink the globals as much as possible ...

Larry Gritz

Mar 31, 2020, 12:11:40 PM

to OSL Developers List

That's a totally valid point (no pun) about transform_points, and having a transform_vectors is very reasonable.

But I'm not sure it solves the problem. The thing is, "screen" space is a 2D coordinate system. You can project 3D points into that 2D space, but I'm not sure it's in any way meaningful to project a vector there. What would you expect transform("screen", I) to give you? I mean, we could blindly run it through the same math, but would it be geometrically meaningful?

Etienne Sandré-Chardonnal

Mar 31, 2020, 12:17:57 PM

to osl...@googlegroups.com

Sorry, you need to set the m[3][3] coefficient to zero as well, and anyway OSL does not provide matrix * vector multiplications.

The proper OSL code would be :

matrix m = matrix("world", "screen");

float x = m[0][0]*I.x + m[0][1]*I.y + m[0][2]*I.z;

float y = m[1][0]*I.x + m[1][1]*I.y + m[1][2]*I.z;

float t = m[3][0]*I.x + m[3][1]*I.y + m[3][2]*I.z;

float screen_x = x/t;

float screen_y = y/t;

This should give you the screen space coordinates, if:

- The renderer properly sets the global 'I' for environment shading, which I think is the best place to pass environment position

- The renderer properly provides the homogeneous world-to-screen 4x4 matrix

Still, giving the renderer a way to provide a "transform_vectors" function, allowing OSL code:

point screenpos = transform("screen", I);

Would be much more elegant!

Cheers,

Etienne

Etienne Sandré-Chardonnal

Mar 31, 2020, 12:21:43 PM

to osl...@googlegroups.com

Hi Larry,

Actually, if the transformation matrices are 4x4 homogeneous, and we handle points and vectors as :

- points as 4-vectors (p.x, p.y, p.z, 1)

- vectors as 4-vectors (v.x, v.y, v.z, 0)

Then, vectors are handled as points located at infinity, and it should definitely work to transform points at infinity from world space to screen space. It's fully valid.
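The "point at infinity" argument can be written out as a short derivation. The symbols below are introduced only for illustration: $M$ is the 4x4 world-to-screen matrix acting on column vectors (an assumption about convention), $\mathbf{o}$ is a ray origin, and $\mathbf{d}$ is the ray direction.

```latex
\begin{aligned}
\mathbf{p}(t) &= \mathbf{o} + t\,\mathbf{d}, \\
M \begin{pmatrix} \mathbf{p}(t) \\ 1 \end{pmatrix}
  &= \underbrace{M \begin{pmatrix} \mathbf{o} \\ 1 \end{pmatrix}}_{\mathbf{a}}
   + t\,\underbrace{M \begin{pmatrix} \mathbf{d} \\ 0 \end{pmatrix}}_{\mathbf{b}}, \\
x_{\text{screen}}(t) &= \frac{a_x + t\,b_x}{a_w + t\,b_w}
  \;\xrightarrow{\;t \to \infty\;}\; \frac{b_x}{b_w},
\qquad
y_{\text{screen}}(t) = \frac{a_y + t\,b_y}{a_w + t\,b_w}
  \;\xrightarrow{\;t \to \infty\;}\; \frac{b_y}{b_w}
\qquad (b_w \neq 0).
\end{aligned}
```

So transforming $(\mathbf{d}, 0)$ and dividing by the resulting $w$ gives the screen position that points far along the ray converge to. The limit depends only on $\mathbf{d}$ and not on $\mathbf{o}$, so two rays with the same direction but different origins map to the same screen position.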

Larry Gritz

Mar 31, 2020, 12:32:04 PM

to OSL Developers List

I think changing the spec to require P for background shaders to be ray origin is a simple solution. It sure isn't any weirder than having it be (0,0,0).

It does sort of bug me that transform("screen", P) wouldn't work, that is a bothersome inconsistency, and there may be other asymmetries, but maybe there is not a better answer. At the end of the day, P is for surfaces and there is no solid position for rays that miss anything, so maybe any answer is just a punt.

As Chris says, adding a ray origin field to the shaderglobals is mostly a non-starter because it will harm GPU performance.

Etienne Sandré-Chardonnal

Mar 31, 2020, 12:46:45 PM

to osl...@googlegroups.com

Alternatively, I think that if we define an "environment" space, which has the same transform-to-common matrix as "world" except the last column is set to zero, then I believe:

transform("environment", "screen", I)

Will provide the desired results, if 'I' is properly defined as the view vector for environment shaders. This needs to be validated, but I believe it is geometrically correct.

This is the cleanest solution, it just requires renderers to:

- Support the "environment" space, which has the same matrix as "world" except the last column is zeroed

- Set 'I' to the view vector also for environment shaders.

I will test it as soon as I have some time (I'm confined at home with children so finding time is err... complicated)

Cheers,

Etienne

Larry Gritz

Mar 31, 2020, 1:28:47 PM

to OSL Developers List

On Mar 31, 2020, at 9:46 AM, Etienne Sandré-Chardonnal <etienne...@m4x.org> wrote:

> I will test it as soon as I have some time (I'm confined at home with children so finding time is err... complicated)

Indeed, a lot of us are in the position of trying to juggle new surroundings and distractions and attempting to regain something resembling productivity. :-)

Master Zap

Mar 31, 2020, 1:49:44 PM

to OSL Developers

Larry: Yes, exactly, I want you to sanction, in the spec "this is how P should behave", because otherwise it's up to interpretation. If we want OSL to succeed and shaders to flow between renderers freely, we can't be having these inconsistencies and things being open to interpretation....

Etienne: So in my experience, relying on named transforms doesn't work. At least in "Autodesk Arnold", transforming 0,0,0 from camera to world will not give me the ray origin of each DOF ray, it just gives me the camera.

Also, it's not just about being able to transform P (but it's important), it's about being able to do raytracing in the shader code (like I do with the fake ground plane in my environment projection shader).

If P is "a point on the ray", either works correctly and as expected!

Christopher:

I'm not sure P being "ray origin" would work... for a normal, non-DOF'ed camera ray, aren't all ray origins the same? Yet I want transform("screen", P) to be a screen pixel... how would I transform the same point to different things depending on which pixel I'm in?

To me, placing P somewhere "along the ray" (and I freely admit max's choice of 100 random units from the camera is super arbitrary) is the only useful option.

/Z

Master Zap

Mar 31, 2020, 1:52:22 PM

to OSL Developers

I realize I may have forgotten to mention it in this forum (I'm holding this discussion in parallel in more than one place) but what max does - both in the software and viewport GPU backends - is to place P 100 units away from ray origin along I.

Not sure who came up with 100.0, but that's how it is :P

/Z

Olivier Paquet

Mar 31, 2020, 2:46:39 PM

to OSL Developers

On Tuesday, March 31, 2020 at 12:46:45 PM UTC-4, Etienne Sandré-Chardonnal wrote:

> Alternatively, I think that if we define an "environment" space, which has the same transform-to-common matrix as "world" except the last column is set to zero, then I believe:
>
> transform("environment", "screen", I)
>
> Will provide the desired results, if 'I' is properly defined as the view vector for environment shaders. This needs to be validated, but I believe it is geometrically correct.

Stop trying, no amount of transform magic will work. The information Zap wants is simply not present in the I vector. With DoF, you can have multiple identical I vectors which correspond to different screen coordinates.

As for the proposal, another option would be to require that "P - I" be the ray origin, like it is elsewhere, and let the renderer pick P and I. I believe this gives the same information and is slightly more coherent. It might be useful if one wants to share some piece of OSL code between environment and surface shaders.
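If a renderer adopted that convention, recovering the ray in a shader would be a two-line affair. The following is only a sketch under that assumption; the shader name and outputs are hypothetical, and nothing in the current spec guarantees the relation in a background context.

```osl
// Hypothetical helper, valid only if the renderer guarantees that
// P - I is the ray origin in the current shading context.
shader ray_from_globals(
    output point  ray_origin    = point(0),
    output vector ray_direction = vector(0))
{
    ray_origin    = P - I;          // origin under the proposed convention
    ray_direction = normalize(I);   // unit direction of the incoming ray
}
```

Because the same relation would hold for surface hits, code like this could be shared between environment and surface shaders.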

Olivier

Etienne Sandré-Chardonnal

Mar 31, 2020, 3:38:55 PM

to osl...@googlegroups.com

Well, I was not trying to solve the DoF issue, but the original question, which is defining a standard behavior of globals for environment shader invocations, and a simple way to transform it to screen space (without DoF).

For the DoF case, if you don't define either:

- A dedicated renderer callback that retrieves the "DoFed" screen position using a behind the scenes shader context

or

- Set P and I to values which go against their definition, such as the focus plane ray intersection position (which you can simply transform from world to screen space to get DoFed screen pos)

or

- Add a new global variable

I don't see any other way to solve the request. The second one will maybe do it but will break something else. The third one is like opening Pandora's box regarding adding many globals.

Olivier Paquet

Mar 31, 2020, 4:31:27 PM

to OSL Developers

Good point, DoF is a real mess. And I can't help but feel that #2 is a "do what I want for this specific shader" solution which will indeed turn out to be nonsense for some other application.

Transforming to screen with DoF makes no sense. The actual result for most points is a circle of confusion. Unless you assume behind the scenes magic which allows the renderer to actually know where a specific camera ray came from (like your #1).

The real bottom line is stop trying to write a renderer in a shader. It was a bad idea 20 years ago and it's a worse idea today.

Olivier

Larry Gritz

Mar 31, 2020, 5:17:12 PM

to OSL Developers List

On Mar 31, 2020, at 9:17 AM, Etienne Sandré-Chardonnal <etienne...@m4x.org> wrote:

> ... and anyway OSL does not provide matrix * vector multiplications.

I'm pretty sure that's what `transform(matrix, vector)` does.

Larry Gritz

Mar 31, 2020, 5:20:36 PM

to OSL Developers List

On Mar 31, 2020, at 1:31 PM, Olivier Paquet <olivier...@gmail.com> wrote:

> The real bottom line is stop trying to write a renderer in a shader. It was a bad idea 20 years ago and it's a worse idea today.

Indeed, I was also going to write that this feels very much like something that should be a renderer feature ("flattened environment background"), and in groping for a way to do it entirely as a shader inside a renderer that is unaware and never accounted for that case, we are just fumbling around between different inconsistencies.

It's worth remembering that a core principle of OSL (different from most earlier shading languages) is that view-dependent behavior inside shaders is strongly discouraged. As everybody discovers, when you give people a powerful enough programming language, they will often find a way to break those guidelines, and some of those things are pretty cool (there are some neat NPR shaders, for example, that have inherently view-dependent behavior). But whenever you do something like that, you are certainly operating outside the guard rails and might expect trouble if you try to do anything too clever.

I think our intent was that P is meaningful for surface shaders, but its value is not well defined for background shaders. `I` is still meaningful there, but not P.

But something has to be in that variable in contexts where there is no surface or volume point being shaded per se (background, lens, or imager shaders, for example), and I have no objection to suggesting that it be the ray origin. That is probably more useful than the world origin, or being uninitialized garbage.

Would any of the other renderer authors like to chime in?

I'll also bring it up at the TSC meeting.

-- lg

Paolo Berto

Mar 31, 2020, 5:25:01 PM

to osl...@googlegroups.com

On Wed, Apr 1, 2020 at 5:31 Olivier Paquet <olivier...@gmail.com> wrote:

> The real bottom line is stop trying to write a renderer in a shader. It was a bad idea 20 years ago and it's a worse idea today.

+1

Sent from a parallel universe

Etienne Sandré-Chardonnal

Mar 31, 2020, 5:30:28 PM

to osl...@googlegroups.com

Ah, good to know. And I just figured out that RendererServices::transform_points takes a TypeDesc::VECSEMANTICS vectype parameter, which I ignored...

So if a renderer implements this properly, it might work (for the non DoF case)

Master Zap

Apr 7, 2020, 5:10:14 AM

to OSL Developers

While I admire the philosophical purity of those viewpoints, I think it's overly stubborn to just deny a feature purely on the point of philosophical purity.

Every single one of my issues listed above would be solved, if we all could just agree that P, in an environment shader, is somewhere on the ray. I can live without it being "100 * I from the origin" that max seems to do... but having it on the ray makes a ton of sense.

- It properly back-transforms to screen. (How would you do a 2D background in OSL without this? Just a plain old background image?)

- It allows my "view dependent hacks", like making the Amiga Juggler work in OSL... https://www.facebook.com/groups/OSL.Shaders/?post_id=543719636411160

It literally solves all the problems I have. And since the rest of you seem to think that P should be undefined or zero, you wouldn't be using it anyway.... right?

So you do not need to care that we add to the spec that it should have a particular value? It would make everyone happy.

Saying that "we shouldn't put a value in P that is useful to someone in there, because it might break things for other people" makes no sense since the value is currently undefined (and apparently zero for most people), so nobody could have been using it anyway..... so what would it break for whom? :)

/Z

Master Zap

Apr 7, 2020, 5:11:56 AM

to OSL Developers

> Indeed, I was also going to write that this feels very much like something that should be a renderer feature ("flattened environment background"), and in groping for a way to do it entirely as a shader inside a renderer that is unaware and never accounted for that case, we are just fumbling around between different inconsistencies.

This is beside the point. My "flattened environment bottom" is just one example of a thousand where I might want to know the ray when an environment is hit.

Will you be able to anticipate my every use case? No. Should my every use case "be built into the renderer"? I don't think so. The more work I can do in shaders, that are renderer-independent, the better.

/Z

Olivier Paquet

Apr 7, 2020, 9:56:46 AM

to OSL Developers

It is exactly the point. Do you think you are able to anticipate every renderer out there? The renderer independence of those tricks is an illusion. There are already a number of rendering algorithms which require the environment to be view independent. I probably don't know half of them. With those, it is simply not possible to provide the value you seek.

I've been down that road before. The story goes roughly like this. Features are implemented which seem "nice" and "obvious wins" although outside the theoretical rendering framework. People use them for various tricks and they become entrenched. Then a new rendering algorithm comes up which clashes with those features. I can't implement it. Or I can implement it but have to keep supporting the old one, which means two modes, which means a messed up UI, more bugs, higher development costs, etc. It's how renderers end up with pages upon pages of obscure toggles which force you to choose between "fast", "good quality" and "actually works". On a lucky day, you might get to pick two of those.

You can convince a bunch of renderers to set P to some value for now. Yes, it will be easy and work. But don't fool yourself into thinking it makes those shader tricks portable and future proof.

Olivier

Changsoo Eun

Apr 7, 2020, 8:46:24 PM

to OSL Developers

well.. Zap's case.

His OSL map is the delegate for all renderers.

So, I think it will work for him. :)
