OIDC-NG - Duplicate key com.nimbusds.jose.crypto.RSASSASigner

17 views

Skip to first unread message

Domingos Gonçalves

Apr 22, 2020, 11:19:21 AM4/22/20

to OpenConext Community

Hello,

we are facing an issue on OIDC-NG saying we have a duplicate key.

We can solve the problem deleting the collection oidcng.signing_keys on MongoDB. After restarting OIDC-NG it works again.

Is that config correct for key_rollover_cron_expression? https://github.com/OpenConext/OpenConext-deploy/blob/931dadceeffff24a7d9fd2c17d5dcdafd24b60c2/environments/template/group_vars/template.yml#L365

Or it should be like these: https://github.com/OpenConext/OpenConext-oidcng/blob/48b3a69c62fd2de2985c62218226cf3309d5d98e/src/main/resources/application.yml#L14

During the tests we saw the following:

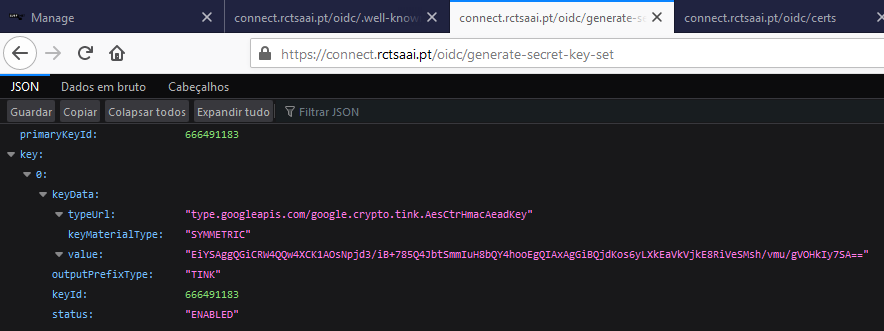

- It works fine: https://connect.rctsaai.pt/oidc/generate-secret-key-set

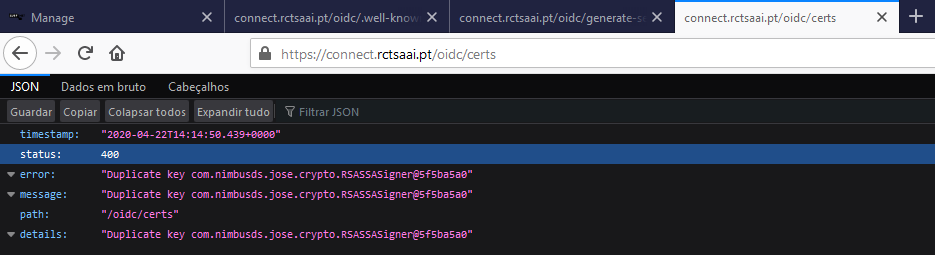

- Error saying "duplicate key": https://connect.rctsaai.pt/oidc/certs

- Log when starting OIDC-NG:

2020-04-22 15:10:55,475 INFO [main] com.github.mongobee.Mongobee:158 - Mongobee is releasing process lock.2020-04-22 15:10:55,480 INFO [main] com.github.mongobee.Mongobee:162 - Mongobee has finished his job.2020-04-22 15:10:55,607 INFO [main] o.s.s.concurrent.ThreadPoolTaskExecutor:171 - Initializing ExecutorService 'applicationTaskExecutor'2020-04-22 15:10:55,977 INFO [main] o.s.s.concurrent.ThreadPoolTaskScheduler:171 - Initializing ExecutorService 'taskScheduler'2020-04-22 15:10:55,995 INFO [main] o.s.b.a.e.web.EndpointLinksResolver:58 - Exposing 2 endpoint(s) beneath base path '/actuator'2020-04-22 15:10:56,059 INFO [main] o.apache.coyote.http11.Http11NioProtocol:173 - Starting ProtocolHandler ["http-nio-9195"]2020-04-22 15:10:56,091 INFO [main] o.s.b.w.embedded.tomcat.TomcatWebServer:204 - Tomcat started on port(s): 9195 (http) with context path ''2020-04-22 15:10:56,094 INFO [main] oidc.OidcServerApplication:61 - Started OidcServerApplication in 9.325 seconds (JVM running for 10.021)2020-04-22 15:10:56,387 INFO [main] o.s.b.a.l.ConditionEvaluationReportLoggingListener:136 -

Error starting ApplicationContext. To display the conditions report re-run your application with 'debug' enabled.2020-04-22 15:10:56,391 ERROR [main] o.springframework.boot.SpringApplication:826 - Application run failedjava.lang.IllegalStateException: Duplicate key com.nimbusds.jose.crypto.RSASSASigner@30d4b288 at java.util.stream.Collectors.lambda$throwingMerger$0(Collectors.java:133) at java.util.HashMap.merge(HashMap.java:1254) at java.util.stream.Collectors.lambda$toMap$58(Collectors.java:1320) at java.util.stream.ReduceOps$3ReducingSink.accept(ReduceOps.java:169) at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1382) at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482) at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472) at java.util.stream.ReduceOps$ReduceOp.evaluateSequential(ReduceOps.java:708) at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234) at java.util.stream.ReferencePipeline.collect(ReferencePipeline.java:566) at oidc.secure.TokenGenerator.initializeSigningKeys(TokenGenerator.java:183) at oidc.secure.TokenGenerator.onApplicationEvent(TokenGenerator.java:165) at oidc.secure.TokenGenerator.onApplicationEvent(TokenGenerator.java:86) at org.springframework.context.event.SimpleApplicationEventMulticaster.doInvokeListener(SimpleApplicationEventMulticaster.java:172) at org.springframework.context.event.SimpleApplicationEventMulticaster.invokeListener(SimpleApplicationEventMulticaster.java:165) at org.springframework.context.event.SimpleApplicationEventMulticaster.multicastEvent(SimpleApplicationEventMulticaster.java:139) at org.springframework.context.support.AbstractApplicationContext.publishEvent(AbstractApplicationContext.java:403) at org.springframework.context.support.AbstractApplicationContext.publishEvent(AbstractApplicationContext.java:360) at org.springframework.boot.context.event.EventPublishingRunListener.started(EventPublishingRunListener.java:98) at org.springframework.boot.SpringApplicationRunListeners.started(SpringApplicationRunListeners.java:71) at org.springframework.boot.SpringApplication.run(SpringApplication.java:321) at org.springframework.boot.SpringApplication.run(SpringApplication.java:1226) at org.springframework.boot.SpringApplication.run(SpringApplication.java:1215) at oidc.OidcServerApplication.main(OidcServerApplication.java:10) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.springframework.boot.loader.MainMethodRunner.run(MainMethodRunner.java:48) at org.springframework.boot.loader.Launcher.launch(Launcher.java:87) at org.springframework.boot.loader.Launcher.launch(Launcher.java:51) at org.springframework.boot.loader.JarLauncher.main(JarLauncher.java:52)2020-04-22 15:10:56,397 INFO [main] o.s.s.concurrent.ThreadPoolTaskScheduler:208 - Shutting down ExecutorService 'taskScheduler'2020-04-22 15:10:56,399 INFO [main] o.s.s.concurrent.ThreadPoolTaskExecutor:208 - Shutting down ExecutorService 'applicationTaskExecutor'- The log on MongoDB during OIDC-NG start:

2020-04-22T15:40:37.256+0100 I NETWORK [conn2933] end connection <machine-ip>:57294 (65 connections now open)

2020-04-22T15:40:37.257+0100 I NETWORK [conn2932] end connection <machine-ip>:57290 (64 connections now open)

2020-04-22T15:40:37.269+0100 I NETWORK [conn3237] end connection <machine-ip>:48898 (63 connections now open)

2020-04-22T15:40:37.270+0100 I NETWORK [conn2934] end connection <machine-ip>:57304 (62 connections now open)

2020-04-22T15:40:37.271+0100 I NETWORK [conn2931] end connection <machine-ip>:57256 (61 connections now open)

2020-04-22T15:40:37.271+0100 I NETWORK [conn2930] end connection <machine-ip>:57246 (60 connections now open)

2020-04-22T15:40:42.675+0100 I ACCESS [conn58] Successfully authenticated as principal __system on local from client <database2-ip>:35482

2020-04-22T15:40:42.684+0100 I ACCESS [conn59] Successfully authenticated as principal __system on local from client <database2-ip>:35484

2020-04-22T15:40:42.690+0100 I ACCESS [conn58] Successfully authenticated as principal __system on local from client <database2-ip>:35482

2020-04-22T15:40:42.710+0100 I ACCESS [conn58] Successfully authenticated as principal __system on local from client <database2-ip>:35482

2020-04-22T15:40:42.731+0100 I ACCESS [conn58] Successfully authenticated as principal __system on local from client <database2-ip>:35482

2020-04-22T15:40:46.736+0100 I NETWORK [listener] connection accepted from <machine-ip>:42538 #4055 (61 connections now open)

2020-04-22T15:40:46.773+0100 I NETWORK [conn4055] received client metadata from <machine-ip>:42538 conn4055: { driver: { name: "mongo-java-driver|legacy", version: "3.11.2" }, os: { type: "Linux", name: "Linux", architecture: "amd64", version: "3.10.0-1062.4.1.el7.x86_64" }, platform: "Java/Oracle Corporation/1.8.0_232-b09" }

2020-04-22T15:40:47.620+0100 I NETWORK [listener] connection accepted from <machine-ip>:42544 #4056 (62 connections now open)

2020-04-22T15:40:47.621+0100 I NETWORK [conn4056] received client metadata from <machine-ip>:42544 conn4056: { driver: { name: "mongo-java-driver|legacy", version: "3.11.2" }, os: { type: "Linux", name: "Linux", architecture: "amd64", version: "3.10.0-1062.4.1.el7.x86_64" }, platform: "Java/Oracle Corporation/1.8.0_232-b09" }

2020-04-22T15:40:47.740+0100 I ACCESS [conn4056] Successfully authenticated as principal oidcngrw on oidcng from client <machine-ip>:42544

2020-04-22T15:40:53.930+0100 I NETWORK [listener] connection accepted from <machine-ip>:42566 #4057 (63 connections now open)

2020-04-22T15:40:53.934+0100 I NETWORK [conn4057] received client metadata from <machine-ip>:42566 conn4057: { driver: { name: "mongo-java-driver|legacy", version: "3.11.2" }, os: { type: "Linux", name: "Linux", architecture: "amd64", version: "3.10.0-1062.4.1.el7.x86_64" }, platform: "Java/Oracle Corporation/1.8.0_232-b09" }

2020-04-22T15:40:53.969+0100 I NETWORK [listener] connection accepted from <machine-ip>:42570 #4058 (64 connections now open)

2020-04-22T15:40:53.971+0100 I NETWORK [conn4058] received client metadata from <machine-ip>:42570 conn4058: { driver: { name: "mongo-java-driver|legacy", version: "3.11.2" }, os: { type: "Linux", name: "Linux", architecture: "amd64", version: "3.10.0-1062.4.1.el7.x86_64" }, platform: "Java/Oracle Corporation/1.8.0_232-b09" }

2020-04-22T15:40:54.006+0100 I ACCESS [conn4058] Successfully authenticated as principal oidcngrw on oidcng from client <machine-ip>:42570

2020-04-22T15:40:56.115+0100 I NETWORK [conn4058] end connection <machine-ip>:42570 (63 connections now open)

2020-04-22T15:40:56.120+0100 I NETWORK [conn4057] end connection <machine-ip>:42566 (62 connections now open)

2020-04-22T15:40:56.132+0100 I NETWORK [conn4056] end connection <machine-ip>:42544 (61 connections now open)

2020-04-22T15:40:56.132+0100 I NETWORK [conn4055] end connection <machine-ip>:42538 (60 connections now open)The client error:

Unable to exchange code for API access token. HTTP error 400. Raw Provider API response: {"timestamp":"2020-04-22T13:32:49.367+0000","status":400,"error":"Duplicate key com.nimbusds.jose.crypto.RSASSASigner@494a8a24","message":"Duplicate key com.nimbusds.jose.crypto.RSASSASigner@494a8a24","path":"/oidc/token","details":"Duplicate key com.nimbusds.jose.crypto.RSASSASigner@494a8a24"}.Best regards,

Domingos Gonçalves

Okke Harsta

Apr 24, 2020, 7:32:58 AM4/24/20

to openc...@googlegroups.com

Hi,

The duplicate key error occurs if there are signing keys with exact the same keyId which are generated using the current date including the number of milliseconds. Do you have multiple instances of OIDC-NG running that are configured with cron:node-cron-job-responsible=true? See https://github.com/OpenConext/OpenConext-deploy/blob/master/roles/oidcng/templates/application.yml.j2#L23

If multiple OIDC-NG instances are reinitialising the keys at the same cron-configured interval then this can happen - in theory and apparently also in practice.

Regards,

Okke

On 22 Apr 2020, at 17:19, Domingos Gonçalves <domingos....@gmail.com> wrote:

Hello,we are facing an issue on OIDC-NG saying we have a duplicate key.We can solve the problem deleting the collection oidcng.signing_keys on MongoDB. After restarting OIDC-NG it works again.Is that config correct for key_rollover_cron_expression? https://github.com/OpenConext/OpenConext-deploy/blob/931dadceeffff24a7d9fd2c17d5dcdafd24b60c2/environments/template/group_vars/template.yml#L365Or it should be like these: https://github.com/OpenConext/OpenConext-oidcng/blob/48b3a69c62fd2de2985c62218226cf3309d5d98e/src/main/resources/application.yml#L14During the tests we saw the following:

- It works fine: https://connect.rctsaai.pt/oidc/generate-secret-key-set

--

OpenConext - Open For Collaboration

---

You received this message because you are subscribed to the Google Groups "OpenConext Community" group.

To unsubscribe from this group and stop receiving emails from it, send an email to openconext+...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/openconext/f34710e0-a204-4263-948a-d170ea157278%40googlegroups.com.

<generate-secret-key-set.png><duplicate-key.png>

Domingos Gonçalves

Apr 24, 2020, 10:18:28 AM4/24/20

to OpenConext Community

Yes!

We had 4 nodes with OIDC-NG running with the node-cron-job-responsible as True

So, for this kind of error we should change the schedule for all OIDC-NG instance to don't do the key rollover at the same time (0 0 0 * * *).

At first I was expecting to have all nodes running and don't be worried to enable node-cron-job-responsible as True when needed. In a logic of master and slaves with manual reconfiguration.

So, I will try to reschedule the key re-initialization on all nodes, assigning a different time for each one.

Like this:

OIDC-NG01 key-rollover-expression: 0 0 0 * * *

OIDC-NG02 key-rollover-expression: 0 0 6 * * *

OIDC-NG03 key-rollover-expression: 0 0 12 * * *

OIDC-NG04 key-rollover-expression: 0 0 18 * * *

It probably an over kill key initialization but... it can avoid the collision.

You think it will avoid that issue?

Best Regards,

Domingos Gonçalves

sexta-feira, 24 de Abril de 2020 às 12:32:58 UTC+1, Okke Harsta escreveu:

Hi,The duplicate key error occurs if there are signing keys with exact the same keyId which are generated using the current date including the number of milliseconds. Do you have multiple instances of OIDC-NG running that are configured with cron:node-cron-job-responsible=true? See https://github.com/OpenConext/OpenConext-deploy/blob/master/roles/oidcng/templates/application.yml.j2#L23If multiple OIDC-NG instances are reinitialising the keys at the same cron-configured interval then this can happen - in theory and apparently also in practice.Regards,Okke

To unsubscribe from this group and stop receiving emails from it, send an email to openc...@googlegroups.com.

Okke Harsta

Apr 24, 2020, 10:30:20 AM4/24/20

to openc...@googlegroups.com

Hi,

I think it is better to configure three of the nodes with node-cron-job-responsible=false and make one of the nodes responsible for the cron job. The cron scheduling also cleans up expired tokens and potential deadlocks can occur if multiple nodes perform this task. This is also how we have configured OIDC-NG in SURFconext production.

Regards,

Okke

To unsubscribe from this group and stop receiving emails from it, send an email to openconext+...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/openconext/75789975-e20c-443f-ac56-ae21926b74da%40googlegroups.com.

Reply all

Reply to author

Forward

0 new messages