consciousness

Nadin, Mihai

Dear and respected colleagues:

The issue does not go away:

I have no dog in this race!

Mihai Nadin

https://www.nadin.ws

https://www.anteinstitute.org

John F Sowa

Ricardo Sanz

--

All contributions to this forum are covered by an open-source license.

For information about the wiki, the license, and how to subscribe or

unsubscribe to the forum, see http://ontologforum.org/info/

---

You received this message because you are subscribed to the Google Groups "ontolog-forum" group.

To unsubscribe from this group and stop receiving emails from it, send an email to ontolog-foru...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/ontolog-forum/DM8PR01MB6904789D0AC3B60C233322DFDAC6A%40DM8PR01MB6904.prod.exchangelabs.com.

Ricardo Sanz

Head of Autonomous Systems Laboratory

Escuela Técnica Superior de Ingenieros Industriales

Center for Automation and Robotics

Jose Gutierrez Abascal 2.

28006, Madrid, SPAIN

Ricardo Sanz


Ravi Sharma


John F Sowa

Alex Shkotin


Dan Brickley


Stephen Young


Anatoly Levenchuk

John,

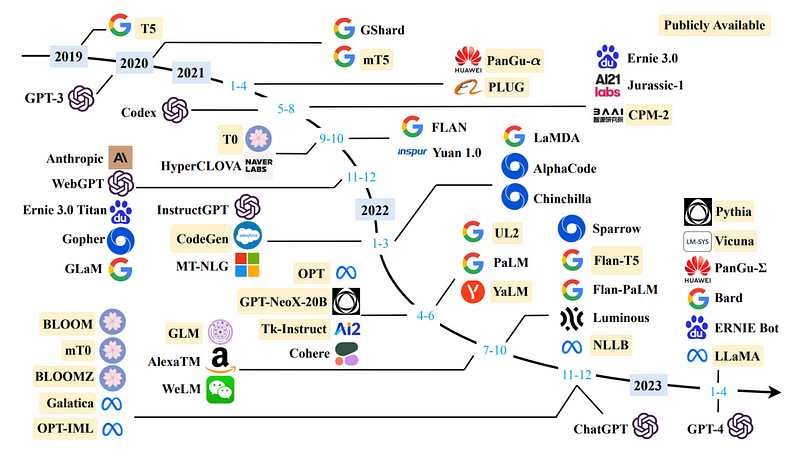

When you target LLM and ANN as its engine, you should consider that this is a very fast-moving target. E.g., consider recent work (and imagine what can be done there in a year or two in graph-of-thoughts architectures):

Boolformer: Symbolic Regression of Logic Functions with Transformers

Stéphane d'Ascoli, Samy Bengio, Josh Susskind, Emmanuel Abbé

In this work, we introduce Boolformer, the first Transformer architecture trained to perform end-to-end symbolic regression of Boolean functions. First, we show that it can predict compact formulas for complex functions which were not seen during training, when provided a clean truth table. Then, we demonstrate its ability to find approximate expressions when provided incomplete and noisy observations. We evaluate the Boolformer on a broad set of real-world binary classification datasets, demonstrating its potential as an interpretable alternative to classic machine learning methods. Finally, we apply it to the widespread task of modelling the dynamics of gene regulatory networks. Using a recent benchmark, we show that Boolformer is competitive with state-of-the-art genetic algorithms with a speedup of several orders of magnitude. Our code and models are available publicly.

https://arxiv.org/abs/2309.12207

Best regards,

Anatoly


John F Sowa

Alex Shkotin

John,

For me LLM is a different technology compared to formal ontologies. Completely different.

JFS: "But that is when everybody else will have won the big contracts to develop the mission-critical applications."

I am more interested in the use of formal ontologies in mission-critical applications.

JFS: "Now is the time to do the critical research on where the strengths and limitations are."

There are such reviews. Here's the first one I came across https://indatalabs.com/blog/large-language-model-apps

Alex


Alex Shkotin

Kingsley Idehen

Hi John,

Anatoly, Stephen, Dan, Alex, and every subscriber to these lists,

I want to emphasize two points: (1) I am extremely enthusiastic about LLMs and what they can and cannot do. (2) I am also extremely enthusiastic about the 60+ years of R & D in AI technologies and what they have and have not done. Many of the most successful AI developments are no longer called AI because they have become integral components of computer science. Examples: compilers, databases, computer graphics, and the interfaces of nearly every appliance we use today: cars, trucks, airplanes, rockets, telephones, farm equipment, construction equipment, washing machines, etc. For those things, the AI technology of the 20th century is performing mission-critical operations with a level of precision and dependability that unaided humans cannot achieve without their help.

Fundamental principle: For any tool of any kind -- hardware or software -- it's impossible to understand exactly what it can do until the tool is pushed to the limits where it breaks. At that point, an examination of the pieces shows where its strengths and weaknesses lie.

For LLMs, some of the breaking points have been published as hallucinations and humorous nonsense. But more R & D is necessary to determine where the boundaries are, how to overcome them, work around them, and supplement them with the 60+ years of other AI tools.

I think it is safe to conclude that a natural language processor and code generator can't also function as an all-answering oracle without integration with domain-specific Knowledge Bases, which is why Retrieval Augmented Generation (RAG) has become an emergent area of activity in recent times.

RAG is basically about loosely coupling LLMs and Knowledge Bases (including Knowledge Graphs), which is an area I've been experimenting with for some time now [1].
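The loose coupling described above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the tiny `KNOWLEDGE_BASE`, the word-overlap ranking, and the `retrieve` and `build_prompt` helpers are hypothetical stand-ins, not the approach used in [1]. A real system would query a Knowledge Graph or document index and then send the assembled prompt to an LLM.

```python
import re

# Hypothetical knowledge base: a few plain-text facts standing in for a
# Knowledge Graph or document store.
KNOWLEDGE_BASE = [
    "Virtuoso is a multi-model DBMS developed by OpenLink Software.",
    "SPARQL is the W3C query language for RDF data.",
    "RDF represents statements as subject-predicate-object triples.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, facts, k=2):
    """Return up to k facts, ranked by word overlap with the question."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(fact)), fact) for fact in facts]
    scored = [(score, fact) for score, fact in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [fact for _, fact in scored[:k]]


def build_prompt(question, facts):
    """Assemble a prompt that asks the model to answer from the given facts."""
    context = "\n".join(f"- {fact}" for fact in facts)
    return (
        "Answer using only the facts below.\n"
        f"Facts:\n{context}\n"
        f"Question: {question}\n"
    )


if __name__ == "__main__":
    question = "What is SPARQL?"
    print(build_prompt(question, retrieve(question, KNOWLEDGE_BASE, k=1)))
```

The point of the design is that the knowledge base stays outside the model: it can be corrected or extended without retraining, and the model is asked to phrase an answer rather than to recall one.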

Anatoly> When you target LLM and ANN as its engine, you should consider that this is a very fast-moving target. E.g., consider recent work (and imagine what can be done there in a year or two in graph-of-thoughts architectures) . . .

Yes, that's obvious. The article you cited looks interesting, and there are many others. They are certainly worth exploring. But I emphasize the question I asked: Google and OpenAI have been exploring this technology for quite a few years. What mission-critical applications have they or anybody else discovered and implemented?

So far the only truly successful applications are in MT -- machine translation of languages, natural and artificial. Can anybody point to any other applications that are mission critical for any business or government organization anywhere?

Yes, software help and support. It is now possible to build assistants (or co-pilots) that fill voids that have challenged software usage for years [2][3] i.e., conversational self-support and help as integral parts of applications.

Stephen Young> Yup. My 17yo only managed 94% in his Math exam. He got 6% wrong. Hopeless - he'll never amount to anything.

The LLMs have been successful in passing various tests at levels that match or surpass the best humans. But that's because they cheat. They have access to a huge amount of information on the WWW about a huge range of tests. But when they are asked routine questions for which the answers or the methods for generating answers cannot be found, they make truly stupid mistakes.

No mission-critical system that guides a car, an airplane, a rocket, or a farmer's plow can depend on such tools.

True, but there are many other areas of utility that require less precision -- as per my comments above.

Dan Brickley> Encouraging members of this forum to delay putting time into learning how to use LLMs is doing them no favours. All of us love to feel we can see through hype, but it’s also a brainworm that means we’ll occasionally miss out on things whose hype is grounded in substance.

Yes, I enthusiastically agree. We must always ask questions. We must study how LLMs work, what they do, and what their limitations are. If they cannot solve some puzzle, it's essential to find out why. Noticing a failure on one problem is not an excuse for giving up. It's a clue for guiding the search.

Alex> I'm researching how LLMs work. And we will really find out where they will be used after the hype in 3-5 years.

Yes. But that is when everybody else will have won the big contracts to develop the mission-critical applications.

Now is the time to do the critical research on where the strengths and limitations are. Right now, the crowd is having fun building toys that exploit the obvious strengths. The people who are doing the truly fundamental research are exploring the limitations and how to get around them.

Yes, sandboxing LLMs can mitigate the adverse effects of hallucinations. OpenAI, in particular, offers integration points that facilitate this, such as support for external function integration using callbacks, among other features.
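As a rough sketch of the sandboxing idea, the host application can treat the model's output as a *request* to call a function and execute it only if the function is on an allow-list. The JSON shape, the `dispatch` helper, and `lookup_order_status` below are hypothetical names chosen for illustration; they are not OpenAI's actual API, and a real deployment would follow the vendor's documented function-calling format.

```python
import json


def lookup_order_status(order_id):
    """Hypothetical host-side function; a real one might call a stored procedure."""
    orders = {"A100": "shipped", "A101": "pending"}
    return orders.get(order_id, "unknown")


# Only functions listed here can ever be executed on the model's behalf.
ALLOWED_FUNCTIONS = {"lookup_order_status": lookup_order_status}


def dispatch(model_output):
    """Run a model-proposed call only if it names an allow-listed function."""
    try:
        request = json.loads(model_output)
        name = request["name"]
        arguments = request["arguments"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return {"error": "malformed function call"}
    if not isinstance(arguments, dict):
        return {"error": "malformed function call"}
    func = ALLOWED_FUNCTIONS.get(name)
    if func is None:
        return {"error": f"function not allowed: {name}"}
    try:
        return {"result": func(**arguments)}
    except TypeError:
        # Wrong or missing argument names supplied by the model.
        return {"error": "bad arguments"}


if __name__ == "__main__":
    print(dispatch('{"name": "lookup_order_status", "arguments": {"order_id": "A100"}}'))
    print(dispatch('{"name": "drop_tables", "arguments": {}}'))
```

Because the answer comes from the host function rather than from the model's own recall, a hallucinated function name or argument produces an error instead of a fabricated result.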

John

Links:

[1] https://medium.com/virtuoso-blog/chatgpt-and-semantic-web-symbiosis-1fd89df1db35

-- ChatGPT and Semantic Web Symbiosis

[2] https://www.linkedin.com/pulse/leveraging-llm-based-conversational-assistants-bots-enhanced-idehen/

[3] https://netid-qa.openlinksw.com:8443/chat/?chat_id=746cbe10c60e9b2544211adf071c714e

-- Assistant Transcript & Demo

--

Regards,

Kingsley Idehen

Founder & CEO

OpenLink Software

Home Page: http://www.openlinksw.com

Community Support: https://community.openlinksw.com

Weblogs (Blogs):

Company Blog: https://medium.com/openlink-software-blog

Virtuoso Blog: https://medium.com/virtuoso-blog

Data Access Drivers Blog: https://medium.com/openlink-odbc-jdbc-ado-net-data-access-drivers

Personal Weblogs (Blogs):

Medium Blog: https://medium.com/@kidehen

Legacy Blogs: http://www.openlinksw.com/blog/~kidehen/ http://kidehen.blogspot.com

Profile Pages:

Pinterest: https://www.pinterest.com/kidehen/

Quora: https://www.quora.com/profile/Kingsley-Uyi-Idehen

Twitter: https://twitter.com/kidehen

Google+: https://plus.google.com/+KingsleyIdehen/about

LinkedIn: http://www.linkedin.com/in/kidehen

Web Identities (WebID):

Personal: http://kingsley.idehen.net/public_home/kidehen/profile.ttl#i

: http://id.myopenlink.net/DAV/home/KingsleyUyiIdehen/Public/kingsley.ttl#this

Alex Shkotin

John and all

I’ve probably already written that I regularly read Sergei Karelov’s reviews on Facebook on LLM and other AI technologies. Here is a rather long quote from his post today (0):

“The study by Max Tegmark’s group at MIT “Language models represent space and time” (1) provided evidence that large language models (LLMs) are not just machine learning systems built on huge collections of superficial statistical data. LLMs build within themselves holistic models of the data-generating process: models of the world.

The authors present evidence of the following:

• LLMs learn linear representations of space and time at different scales;

• These representations are robust to variations in prompts and are unified across different types of objects (for example, cities and landmarks).

In addition, the authors identified separate “space neurons” and “time neurons” that reliably encode spatial and temporal coordinates.

The analysis presented by the authors shows that modern LLMs are acquiring structured knowledge about fundamental dimensions such as space and time, which supports the view that LLMs are learning literal models of the world rather than just superficial statistics.

Those wishing to check the results of the study and the conclusions of the authors can do so here (2) (the open-source model is available for any verification)." <translated by Google-trans>

This is certainly not a critical study, but it is research.

Alex

1 https://arxiv.org/abs/2310.02207

2 https://github.com/wesg52/world-models


John F Sowa

John F Sowa

Stephen Young


Alex Shkotin


Alex Shkotin


alex.shkotin

This repository contains all experimental infrastructure for the paper. We expect most users to just be interested in the cleaned data CSVs containing entity names and relevant metadata. These can be found in data/entity_datasets/ (with the tokenized versions for Llama and Pythia models available in the data/prompt_datasets/ folder for each prompt type).

In the coming weeks we will release a minimal version of the code to run basic probing experiments on our datasets."

John F Sowa

Alex Shkotin


John F Sowa

Alex Shkotin

John,

English LLM is the flower on the tip of the iceberg. Multilingual LLMs are also being created. The Chinese certainly train more than just English-speaking LLMs. You can see the underwater structure of the iceberg, for example, here https://huggingface.co/datasets (1).

Academic claims against inventors are possible. But you know the inventors: it works!

It's funny that before that hype LLM meant Master of Laws:-)

Alex

(1)


John F Sowa

Stephen Young

Using our limited understanding of one black box to try to justify our assessment of another black box is not going to get us anywhere.


John F Sowa

Sent: 10/8/23 7:13 PM

To: ontolo...@googlegroups.com, Stephen Young <st...@electricmint.com>

Subject: Re: [ontolog-forum] Addendum to (Generative AI is at the top of the Hype Cycle. Is it about to crash?

Alex Shkotin

John,

My limited knowledge of LLM is as follows:

- The basis of an LLM is an ANN, which works with numbers, not words. Everything built into it depends solely on the data on which it is trained.

- The number of neurons in the first layer is now in the tens or hundreds of thousands, which is quite enough to start processing images.

- All work from input to output and inside the ANN is done with rational numbers in the range from approximately 0 to 1.

- Text is transformed into a chain of numbers by dividing it into tokens that only roughly resemble syllables.

- To convey word order to the ANN, special tokens are introduced that approximately indicate the position of each word in the text.

- The ANN itself is absolutely deterministic; to achieve non-determinism, special tricks are used.

and so on.
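The last point can be made concrete with a small sketch. It assumes that the "special tricks" refer to sampling from the network's output distribution with a temperature setting, which is one commonly used approach; the logit values are made up for illustration. The network's scores are deterministic, a softmax turns them into probabilities between 0 and 1, and randomness enters only when a token is drawn from those probabilities.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_choice(logits):
    """Deterministic: always pick the index with the highest score."""
    return max(range(len(logits)), key=lambda i: logits[i])


def sampled_choice(logits, temperature, rng):
    """Non-deterministic: draw an index according to the softmax probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
    print("greedy:", greedy_choice(logits))
    rng = random.Random()  # unseeded, so the draws can differ between runs
    print("sampled:", [sampled_choice(logits, 1.0, rng) for _ in range(10)])
```

Lower temperatures concentrate the probability on the top-scoring token, so the sampled output approaches the greedy one; higher temperatures flatten the distribution and make the output more varied.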

LLM is only one of the applications of ANN and only one of the types of models, of which there are 353,584 in https://huggingface.co/models.

Looking at how the brain works, you can certainly be inspired to ask questions about ANNs and models. For example, consider the following dialogue:

“Where is cerebellum in ANN?” - “What is it doing?” - "Works with patterns!" - “So we only work with them!”

My proposal: let’s first agree that ANN is far from being only an LLM. LLM is by far the most noisy and unexpected of ANN applications.

The question can be posed this way: we know about the Language Model, but what other models using ANN exist?

Have a look at this model https://huggingface.co/Salesforce/blip-image-captioning-large

Alex


arwesterinen

That is why the Fall Series sessions from mid-October to early-November are focused on use cases and demonstrations.

doug foxvog

> Alex,

>

> Thanks for the list of applications of LANGUAGE-based LLMs. It is indeed

> impressive. We all agree on that. But mathematics, physics, computer

> science, neuroscience, and all the branches of cognitive science have

> shown that natural languages are just one of an open-ended variety of

> left-brain ways of thinking. LLMs haven't scratched the surface of the

> methods of thinking by the right brain and the cerebellum.

> The left hemisphere of the cerebral cortex has about 8 billion neurons.

> The right hemisphere has another 8 billion neurons that are NOT dedicated

> to language. And the cerebellum has about 69 billion neurons that are

> organized in patterns that are totally different from the cerebrum. That

> implies that LLMs are only addressing 10% of what is going on in the human

> brain. There is a lot going on in that other 90%. What kinds of

> processes are happening in those regions?

front of the language areas are strips for motor control of & sensory

input from the right side of the body. The frontal lobe forward of those

strips does not deal with language. The occipital lobe at the rear of the

brain does not deal with language, either. The visual cortex in the

temporal lobe also does not deal with language. This means that most of

the 8 billion neurons in the cerebral cortex have nothing to do with

language.

-- doug foxvog

> Science makes progress by asking QUESTIONS. The biggest question is how

> can you handle the open-ended range of thinking that is not based on

> natural languages. Ignoring that question is NOT scientific. As the

> saying goes, when the only tool you have is a hammer, all the world is a

> nail. We need more tools to handle the other 90% of the brain -- or

> perhaps updated and extended variations of tools that have been developed

> in the past 60+ years of AI and computer science.

>

> I'll say more about these issues with more excerpts from the article I'm

> writing. But I appreciate your work in showing the limitations of the

> current LLMs.

>

> John

>

>

> John,

>

> English LLM is the flower on the tip of the iceberg. Multilingual LLMs are

> also being created. The Chinese certainly train more than just

> English-speaking LLMs. You can see the underwater structure of the

> iceberg, for example, here https://huggingface.co/datasets (1).

> Academic claims against inventors are possible. But you know the

> inventors: it works!

>

> It's funny that before that hype LLM meant Master of Laws:-)

>

> Alex

>

> (1)

>

John F Sowa

alex.shkotin

John,

I forgot to mention another interesting LLM trick: If the ANN issues only one token for each call, how do we get a text response from an LLM that sometimes contains a hundred words?

Answer: They access the ANN in a loop, adding the previous answer token to the next input until they hit a completion token, such as an end-of-sentence token.
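The loop described in this answer can be sketched as follows. The `toy_model` function and its lookup table are hypothetical stand-ins for a trained network, and the `<start>` and `<end>` token names are assumptions; the only point is the control flow: one token per call, appended to the input, repeated until a completion token or a length limit is reached.

```python
# Hypothetical stand-in for a trained network: maps the last token to the next.
NEXT_TOKEN = {"<start>": "the", "the": "cat", "cat": "sat", "sat": "<end>"}


def toy_model(tokens):
    """Return exactly one next token for the given input sequence."""
    return NEXT_TOKEN.get(tokens[-1], "<end>")


def generate(prompt_tokens, model, end_token="<end>", max_tokens=50):
    """Call the model in a loop, feeding each new token back in as input."""
    tokens = list(prompt_tokens)
    for _ in range(max_tokens):
        next_token = model(tokens)
        if next_token == end_token:
            break  # the model signalled completion
        tokens.append(next_token)
    return tokens


if __name__ == "__main__":
    print(generate(["<start>"], toy_model))
```

The `max_tokens` limit is a safeguard: without it, a model that never emits the completion token would keep the loop running indefinitely.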

Alex

Stefan Decker

for what it's worth - I agree with you.

Of the limited resources I command, I am spending a million euros in institute reserves to prepare the 500-person institute I am directing for what is coming.

And the topic has reached the highest levels of government in Germany.

I assume the same is true for many other countries.

But nobody can predict the future - we can only prepare or shape it.

That being said - the interplay between Knowledge Graphs and language models seems to be an interesting playing field. I can only say that we are exploring a number of the connections already.

My hope is that language models will do all the work for us in making data structured, so that it can be processed at scale. As a simple example, think of doctors' reports on cancer patients that are available only as free text, with nobody having the resources to turn them into structured data so that they can be analysed.

I hope we have found something willing and able to do this - once we figure out the challenges :-)

And yes, ontologies play a role here. But not for reasoning.

Best regards,

Stefan

Frankly, this is just getting silly.

The ability of these systems to engage with human-authored text in ways highly sensitive to their content and intent is absolutely stunning. Encouraging members of this forum to delay putting time into learning how to use LLMs is doing them no favours. All of us love to feel we can see through hype, but it’s also a brainworm that means we’ll occasionally miss out on things whose hype is grounded in substance.


Kingsley Idehen

Yes, because we really need to understand what leads to confusing behavior -- even in the hands of skilled operators.

Transcript from a strange ChatGPT session.

https://netid-qa.openlinksw.com:8443/chat/?chat_id=s-48sBd4DwHJM7yxfU56bbc5bnnAran1K4qinKWrfKvNHy


Issue:

Contradicts its own session configuration along the way.

Setup:

ChatGPT sand-boxed in a Virtuoso-hosted application, courtesy of external function callbacks. The external functions are actually DBMS-hosted SQL stored procedures :)

Kingsley Idehen

On 10/10/23 10:48 AM, Stefan Decker wrote:

> My hope is that language models are doing all the work for us in

> making data structured, so that it can be processed at scale. As a

> simple example, think of doctors reports from cancer patients being

> available only as free text. With nobody having the resources to turn

> those into structured data, so that they can be analysed.

> I hope we found something willing and able to do this - once we figure

> out the challenges :-)

> And yes, ontologies play a role here. But not for reasoning.

LLM-based natural language processors & code generators and Knowledge

Graphs.

Circa 2023, we shouldn't be hand-crafting structured data. Instead, we

should simply be reviewing and curating what's generated :)