How do you set Java options for SSH agents?

Tim Black

Sep 22, 2020, 7:21:28 PM

to Jenkins Users

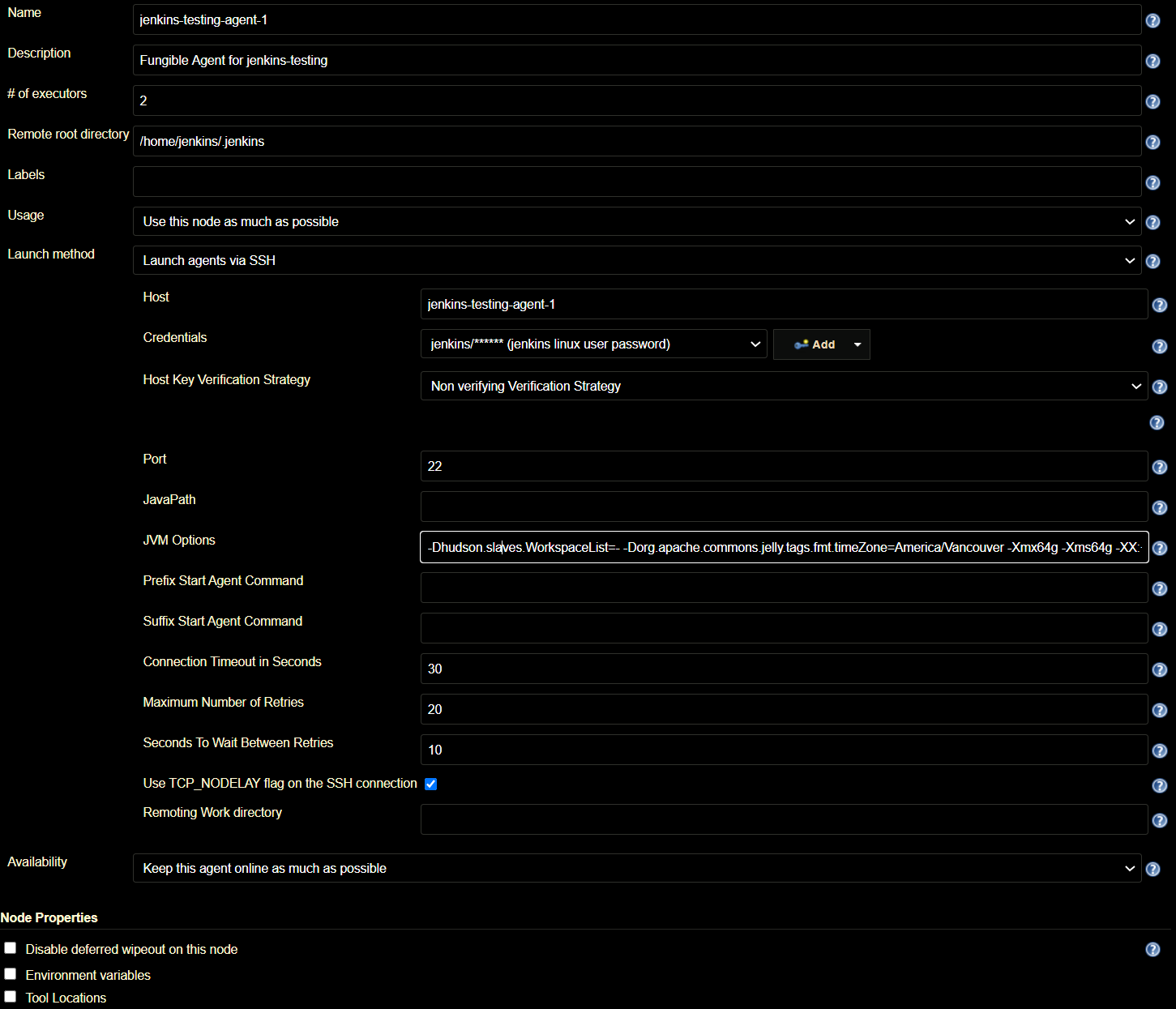

I'm using ssh-slaves-plugin to configure and launch 2 ssh agents, and I've specified several java options in these agents' config (see photo and text list below), but when these agents are launched, the agents' log still shows empty jvmOptions in the ssh launcher call. Agent Log excerpt:

SSHLauncher{host='jenkins-testing-agent-1', port=22, credentialsId='jenkins_user_on_linux_agent', jvmOptions='', javaPath='', prefixStartSlaveCmd='', suffixStartSlaveCmd='', launchTimeoutSeconds=30, maxNumRetries=20, retryWaitTime=10, sshHostKeyVerificationStrategy=hudson.plugins.sshslaves.verifiers.NonVerifyingKeyVerificationStrategy, tcpNoDelay=true, trackCredentials=true}

[09/22/20 15:56:12] [SSH] Opening SSH connection to jenkins-testing-agent-1:22.

[09/22/20 15:56:16] [SSH] WARNING: SSH Host Keys are not being verified. Man-in-the-middle attacks may be possible against this connection.

[09/22/20 15:56:16] [SSH] Authentication successful.

[09/22/20 15:56:16] [SSH] The remote user's environment is:

BASH=/usr/bin/bash

.

.

.

[SSH] java -version returned 11.0.8.

[09/22/20 15:56:16] [SSH] Starting sftp client.

[09/22/20 15:56:16] [SSH] Copying latest remoting.jar...

Source agent hash is 0146753DA5ED62106734D59722B1FA2C. Installed agent hash is 0146753DA5ED62106734D59722B1FA2C

Verified agent jar. No update is necessary.

Expanded the channel window size to 4MB

[09/22/20 15:56:16] [SSH] Starting agent process: cd "/home/jenkins/.jenkins" && java -jar remoting.jar -workDir /home/jenkins/.jenkins -jar-cache /home/jenkins/.jenkins/remoting/jarCache

Sep 22, 2020 3:56:17 PM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir

INFO: Using /home/jenkins/.jenkins/remoting as a remoting work directory

Sep 22, 2020 3:56:17 PM org.jenkinsci.remoting.engine.WorkDirManager setupLogging

INFO: Both error and output logs will be printed to /home/jenkins/.jenkins/remoting

<===[JENKINS REMOTING CAPACITY]===>channel started

Remoting version: 4.2

This is a Unix agent

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by jenkins.slaves.StandardOutputSwapper$ChannelSwapper to constructor java.io.FileDescriptor(int)

WARNING: Please consider reporting this to the maintainers of jenkins.slaves.StandardOutputSwapper$ChannelSwapper

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

Evacuated stdout

Agent successfully connected and online

This is the full text in the "JVM Options" field for jenkins-testing-agent-1 and 2:

-Dhudson.slaves.WorkspaceList=- -Dorg.apache.commons.jelly.tags.fmt.timeZone=America/Vancouver -Xmx64g -Xms64g -XX:+AlwaysPreTouch -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/jenkins/.jenkins/support -XX:+UseG1GC -XX:+UseStringDeduplication -XX:+ParallelRefProcEnabled -XX:+DisableExplicitGC -XX:+UnlockDiagnosticVMOptions -XX:+UnlockExperimentalVMOptions -verbose:gc -Xlog:gc:/home/jenkins/.jenkins/support/gc-%t.log -XX:+PrintGC -XX:+PrintGCDetails -XX:ErrorFile=/hs_err_%p.log -XX:+LogVMOutput -XX:LogFile=/home/jenkins/.jenkins/support/jvm.log

I am having intermittent catastrophic failures of these agent machines during builds and am trying to properly configure Java settings per CloudBees best practices, but I cannot seem to get off the ground here. Another problem on my agents that's probably related is that the agent-side (remoting) logs are all zero bytes.

Thanks for your help.

Ivan Fernandez Calvo

Sep 23, 2020, 1:01:46 PM

to Jenkins Users

How much memory do those agents have? You set "-Xmx64g -Xms64g" for the remoting process (not for builds), so your agent has to have more than 64GB of RAM to run any build on it: you are reserving 64GB just for the remoting process, and that RAM should be left available for your builds. The remoting agent usually does not need more than 256-512MB, which leaves the rest of the agent's memory for builds. Agents rarely need JVM options to tune memory; the default configuration is enough. The only case where I would recommend passing JVM options is to limit the memory of the agent process.

The jvmOptions field should work; it is covered by unit tests. If it does not, that is an issue. Which versions of Jenkins and the SSH Build Agents plugin do you use?

Ivan Fernandez Calvo

Sep 23, 2020, 1:34:01 PM

to Jenkins Users

The jvmOptions settings work; I just tested them on the latest version. Did you disconnect and reconnect the agent to apply the changes? If you only click Save, the agent does not disconnect and reconnect with the new settings. You have to disconnect the agent with the Disconnect action from the menu and launch the agent again with the Launch button on the status page.

naresh....@gmail.com

Sep 23, 2020, 1:36:20 PM

to Jenkins Users

I think to have those updated settings applied correctly we need to disconnect and launch those agents again instead of just bringing those offline and online, just checking to make sure that we are not missing anything there.

Tim Black

Sep 23, 2020, 3:01:39 PM

to Jenkins Users

Thanks everyone, it's working now (see below for details). kuisathaverat, these agents have 96GB total RAM. Thanks for the explanation. Our builds are very RAM intensive, and I had mistakenly assumed that the builds happen within the remoting java process. Sounds like you're saying that in this case there's no reason to give the agent JVM so much RAM. The CloudBees JVM Best Practices page indicates the default min/max heap sizes are 1/64 and 1/4 of physical RAM respectively, but both cap out at 1GB. So, before I was setting these options, my agents should have been effectively using 1GB/1GB for min/max. As for the other options I'm setting on the agents, these are the same options recommended by the page linked above (which I'm also using on master/controller). Do these not apply to agents as well as masters/controllers?
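To make the memory arithmetic concrete, here is a minimal sketch. It takes the default-heap rule exactly as quoted in this post (1/64 and 1/4 of physical RAM, both capped at 1GB) as an assumption and does not query any real JVM; the function names are hypothetical. Actual defaults vary by JVM version and can be inspected with `java -XX:+PrintFlagsFinal -version`.

```python
# Assumption: the rule below is the one quoted in this thread
# (initial = RAM/64, maximum = RAM/4, both capped at 1 GiB).
# It is not checked against any particular JVM.
GIB = 1024 ** 3
MIB = 1024 ** 2


def default_heap_bytes(physical_ram_bytes, cap_bytes=GIB):
    """Return (initial, maximum) heap sizes under the quoted rule."""
    initial = min(physical_ram_bytes // 64, cap_bytes)
    maximum = min(physical_ram_bytes // 4, cap_bytes)
    return initial, maximum


def ram_left_for_builds(physical_ram_bytes, agent_heap_bytes):
    """RAM not claimed by the remoting JVM heap (ignores non-heap overhead)."""
    return physical_ram_bytes - agent_heap_bytes


ram = 96 * GIB  # total RAM of the agents in this thread
initial, maximum = default_heap_bytes(ram)
print(initial // GIB, maximum // GIB)              # 1 1
print(ram_left_for_builds(ram, 64 * GIB) // GIB)   # 32 (with -Xms64g -Xmx64g)
print(ram_left_for_builds(ram, 512 * MIB) // GIB)  # 95 (with a 512MB heap)
```

Under these assumptions, a 64GB heap leaves roughly a third of the machine for builds, while a heap in the 256-512MB range suggested earlier leaves nearly all of it.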

Also, on the agent machine, my <JENKINS_HOME>/support/all*.logs and <JENKINS_HOME>/remoting/logs/* are still empty. Any suggestions on how to get more logging on the agents?

I didn't have GC or other logging enabled, so I'm still not sure what the catastrophic problem was. It might not be a Java problem at all, since I'm not seeing anything in syslog indicating problems with the Jenkins remoting process. These are VMware machines, and they just stop themselves, so it seems like a kernel panic or something. I have them auto-restarting now, and the problem seems intermittent.

I think the jvmOptions is working as expected now. I think I may not have rebooted the jenkins instance but had only rebooted the agents and had only restarted the jenkins service on master/controller machine. So apparently the change I made required a reboot of the master/controller. Now, signing into the agent and looking at the java process for jenkins remoting, I can see all the specified args are there:

```

jenkins@jenkins-testing-agent-1:~$ ps aux | grep java

jenkins 2733 5.1 70.4 73509096 69794284 ? Ssl 11:19 0:26 java -Dhudson.slaves.WorkspaceList=- -Dorg.apache.commons.jelly.tags.fmt.timeZone=America/Vancouver -Xmx64g -Xms64g -XX:+AlwaysPreTouch -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/jenkins/.jenkins/support -XX:+UseG1GC -XX:+UseStringDeduplication -XX:+ParallelRefProcEnabled -XX:+DisableExplicitGC -XX:+UnlockDiagnosticVMOptions -XX:+UnlockExperimentalVMOptions -verbose:gc -Xlog:gc:/home/jenkins/.jenkins/support/gc-%t.log -XX:+PrintGC -XX:+PrintGCDetails -XX:ErrorFile=/hs_err_%p.log -XX:+LogVMOutput -XX:LogFile=/home/jenkins/.jenkins/support/jvm.log -jar remoting.jar -workDir /home/jenkins/.jenkins -jar-cache /home/jenkins/.jenkins/remoting/jarCache

```
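Reading the long `ps` line by eye is error-prone, so a small helper can pull out just the JVM options. This is a minimal sketch, assuming the executable appears literally as `java` and that the options sit between it and the first `-jar`; the function name is hypothetical and the sample string is a shortened version of the command line above.

```python
import shlex


def jvm_options_from_cmdline(cmdline):
    """Return the arguments between the `java` executable and `-jar`."""
    args = shlex.split(cmdline)
    start = args.index("java") + 1    # assumes the binary is invoked as "java"
    end = args.index("-jar", start)   # options end where "-jar remoting.jar" begins
    return args[start:end]


# Shortened sample of the remoting command line shown in the ps output.
sample = (
    "java -Dhudson.slaves.WorkspaceList=- -Xmx64g -Xms64g "
    "-XX:+UseG1GC -jar remoting.jar -workDir /home/jenkins/.jenkins"
)
options = jvm_options_from_cmdline(sample)
print(options)
print("-Xmx64g" in options)
```

An empty result would correspond to the original symptom, where the agent was started with plain `java -jar remoting.jar`.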

I am also now seeing garbage collection logs in support/ as configured:

```

jenkins@jenkins-testing-agent-1:~$ ls -la .jenkins/support/

total 32

drwxr-xr-x 2 jenkins jenkins 4096 Sep 23 11:20 .

drwxrwxr-x 6 jenkins jenkins 4096 Sep 16 00:27 ..

-rw-r--r-- 1 jenkins jenkins 0 Sep 22 11:01 all_2020-09-22_18.01.37.log

-rw-r--r-- 1 jenkins jenkins 0 Sep 22 11:03 all_2020-09-22_18.03.01.log

-rw-r--r-- 1 jenkins jenkins 0 Sep 22 13:04 all_2020-09-22_20.04.15.log

-rw-r--r-- 1 jenkins jenkins 0 Sep 22 15:17 all_2020-09-22_22.17.09.log

-rw-r--r-- 1 jenkins jenkins 0 Sep 22 15:32 all_2020-09-22_22.32.14.log

-rw-r--r-- 1 jenkins jenkins 0 Sep 22 15:56 all_2020-09-22_22.56.18.log

-rw-r--r-- 1 jenkins jenkins 1078 Sep 23 11:18 all_2020-09-23_18.04.43.log

-rw-r--r-- 1 jenkins jenkins 0 Sep 23 11:20 all_2020-09-23_18.20.07.log

-rw-r--r-- 1 jenkins jenkins 194 Sep 23 11:04 gc-2020-09-23_11-04-04.log

-rw-r--r-- 1 jenkins jenkins 194 Sep 23 11:04 gc-2020-09-23_11-04-24.log

-rw-r--r-- 1 jenkins jenkins 194 Sep 23 11:19 gc-2020-09-23_11-19-32.log

-rw-r--r-- 1 jenkins jenkins 546 Sep 23 11:22 gc-2020-09-23_11-19-50.log

-rw-r--r-- 1 jenkins jenkins 4096 Sep 23 11:20 jvm.log

```

Tim Black

Sep 23, 2020, 4:10:14 PM

to Jenkins Users

More info: In my case, a reboot is definitely needed. A disconnect/reconnect does not suffice, nor does rebooting just the master/controller or the agent in sequence - the only way I see the correct jvmOptions being used is by rebooting the entire cluster at once.

I'm using Jenkins 2.222.3, ssh build agents plugin 1.31.2.

Another probably important piece of info here is that I have "ServerAliveCountMax 10" and "ServerAliveInterval 60" set in the ssh client on the Jenkins master/controller, to help keep ssh connections alive for a longer amount of time when agents are very busy performing builds and may not have the cycles to respond to the master/controller.
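As a rough worked example of what those two settings mean for an OpenSSH client: keepalive probes are sent every `ServerAliveInterval` seconds, and the client gives up after `ServerAliveCountMax` unanswered probes. The helper name below is hypothetical, and whether these client settings affect the connections opened by the SSH launcher is not established in this thread.

```python
def keepalive_tolerance_seconds(server_alive_interval, server_alive_count_max):
    """Approximate time an unresponsive peer is tolerated before disconnect."""
    return server_alive_interval * server_alive_count_max


# Values from the ssh client configuration described above.
print(keepalive_tolerance_seconds(60, 10))  # 600
```

So with these values an unresponsive agent would be tolerated for about ten minutes.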

I'm also using ansible and configuration-as-code plugin (1.43) to configure everything in the jenkins cluster. So, to make a change to the agent java_options, what I do is:

1. Modify the local jenkins.yml CasC file to include new "jvmOptions" values for my agent, e.g. my latest:

    - permanent:
        name: "jenkins-testing-agent-1"
        nodeDescription: "Fungible Agent for jenkins-testing"
        labelString: ""
        mode: "NORMAL"
        remoteFS: "/home/jenkins/.jenkins"
        launcher:
          ssh:
            credentialsId: "jenkins_user_on_linux_agent"
            host: "jenkins-testing-agent-1"
            jvmOptions: "-Dhudson.slaves.WorkspaceList=- -Dorg.apache.commons.jelly.tags.fmt.timeZone=America/Vancouver -Xmx4g -Xms1g -XX:+AlwaysPreTouch -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/jenkins/.jenkins/support -XX:+UseG1GC -XX:+UseStringDeduplication -XX:+ParallelRefProcEnabled -XX:+DisableExplicitGC -XX:+UnlockDiagnosticVMOptions -XX:+UnlockExperimentalVMOptions -verbose:gc -Xlog:gc:/home/jenkins/.jenkins/support/gc-%t.log -XX:+PrintGC -XX:+PrintGCDetails -XX:ErrorFile=/hs_err_%p.log -XX:+LogVMOutput -XX:LogFile=/home/jenkins/.jenkins/support/jvm.log"
            launchTimeoutSeconds: 30
            maxNumRetries: 20
            port: 22
            retryWaitTime: 10
            sshHostKeyVerificationStrategy: "nonVerifyingKeyVerificationStrategy"

2. send the CasC yaml file to <JENKINS_HOME>/jenkins.yml on the master/controller machine

3. run geerlingguy.jenkins role which, among other things, detects a change and restarts the jenkins service

4. on Jenkins restart, Jenkins applies the new CasC settings in jenkins.yaml, and this can be verified as correct in the GUI subsequently

5. the agents are not restarted in this process (which I assert should be fine/ok)

After my ansible playbook is complete, and all (verifiably correct) config has been applied to controller/agents, I look at the agent logs and they appear to have gone back to having the empty jvmOptions like I originally reported:

SSHLauncher{host='jenkins-testing-agent-1', port=22, credentialsId='jenkins_user_on_linux_agent', jvmOptions='', javaPath='', prefixStartSlaveCmd='', suffixStartSlaveCmd='', launchTimeoutSeconds=30, maxNumRetries=20, retryWaitTime=10, sshHostKeyVerificationStrategy=hudson.plugins.sshslaves.verifiers.NonVerifyingKeyVerificationStrategy, tcpNoDelay=true, trackCredentials=true}

At this point, if I only reboot the agent, when the master/controller reconnects to it the log still shows jvmOptions=''.

If I then reboot the master/controller, it still shows jvmOptions=''.

But if (and only if) I reboot the entire cluster, I get the correct application of my ssh agent jvmOptions:

SSHLauncher{host='jenkins-testing-agent-1', port=22, credentialsId='jenkins_user_on_linux_agent', jvmOptions='-Dhudson.slaves.WorkspaceList=- -Dorg.apache.commons.jelly.tags.fmt.timeZone=America/Vancouver -Xmx4g -Xms1g -XX:+AlwaysPreTouch -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/jenkins/.jenkins/support -XX:+UseG1GC -XX:+UseStringDeduplication -XX:+ParallelRefProcEnabled -XX:+DisableExplicitGC -XX:+UnlockDiagnosticVMOptions -XX:+UnlockExperimentalVMOptions -verbose:gc -Xlog:gc:/home/jenkins/.jenkins/support/gc-%t.log -XX:+PrintGC -XX:+PrintGCDetails -XX:ErrorFile=/hs_err_%p.log -XX:+LogVMOutput -XX:LogFile=/home/jenkins/.jenkins/support/jvm.log', javaPath='', prefixStartSlaveCmd='', suffixStartSlaveCmd='', launchTimeoutSeconds=30, maxNumRetries=20, retryWaitTime=10, sshHostKeyVerificationStrategy=hudson.plugins.sshslaves.verifiers.NonVerifyingKeyVerificationStrategy, tcpNoDelay=true, trackCredentials=true}
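To compare launcher lines like the ones above across restarts, the `jvmOptions` field can be extracted mechanically. This is a minimal sketch, assuming the field is always rendered as `jvmOptions='...'` and that the option values contain no single quotes; the function name is hypothetical and the sample lines are abbreviated from the logs in this post.

```python
import re

# Matches the single-quoted jvmOptions field of an SSHLauncher{...} log line.
JVM_OPTIONS_RE = re.compile(r"jvmOptions='([^']*)'")


def launcher_jvm_options(log_line):
    """Return the jvmOptions value from the log line, or None if absent."""
    match = JVM_OPTIONS_RE.search(log_line)
    return match.group(1) if match else None


# Abbreviated sample lines based on the agent logs in this thread.
not_applied = "SSHLauncher{host='jenkins-testing-agent-1', port=22, jvmOptions='', javaPath=''}"
applied = "SSHLauncher{host='jenkins-testing-agent-1', port=22, jvmOptions='-Xmx4g -Xms1g', javaPath=''}"

for line in (not_applied, applied):
    value = launcher_jvm_options(line)
    print("applied" if value else "empty", repr(value))
```

An empty string means the launcher was created without the configured options, which is the symptom being reported here.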

Thanks for your help in diagnosing these behaviors. kuisathaverat, let me know if any of this feels like a bug in ssh-slaves-plugin or configuration-as-code-plugin.

kuisathaverat

Sep 23, 2020, 5:37:18 PM

to jenkins...@googlegroups.com

I will configure a test environment with JCasC that sets jvmOptions to see how it behaves; then we will know whether it is an issue or not. In any case, it is weird.

--

You received this message because you are subscribed to a topic in the Google Groups "Jenkins Users" group.

To unsubscribe from this topic, visit https://groups.google.com/d/topic/jenkinsci-users/Ax_JIIpKt4c/unsubscribe.

To unsubscribe from this group and all its topics, send an email to jenkinsci-use...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/jenkinsci-users/8463b0ea-9df0-4c05-9bf4-0501296f2b9bn%40googlegroups.com.

Best regards

Iván Fernández Calvo

Ivan Fernandez Calvo

Sep 25, 2020, 6:05:59 AM

to Jenkins Users

OK, I think I know what happens; I saw it before when using Docker and JCasC. If you make changes to the JCasC configuration and restart Jenkins from the UI, the changes are not applied because JCasC is not executed on that restart. But if you stop the Jenkins instance and start it again, the changes are applied. IIRC, that is how it works.

Tim Black

Sep 25, 2020, 11:32:36 AM

to Jenkins Users

Thanks. What's the difference between "restart Jenkins from UI" and "stop the Jenkins instance and start it again"? In the latter, how are you implying that Jenkins gets stopped and restarted, through the CLI? Just trying to understand what you're saying - it sounds like you're implying CasC settings aren't applied when you restart jenkins through the GUI, but they are when you restart through the CLI..

I don't think this explanation is relevant to my use case because I never restart Jenkins through the GUI. In the workflow I outlined above, I am running an ansible playbook on my jenkins cluster, over and over, and each time there is a config change, it restarts the jenkins service through the CLI using jenkins admin credentials (an active-directory user, actually). This does not appear to have the desired effect of applying the new agent jvmOptions upon the next connection of the agent, whereas when I simply reboot the entire machines (master/controller and agents), the new jvmOptions are used in the SSHLauncher. Note that I do not have this same problem with other CasC settings, only ssh agents.

kuisathaverat

Sep 25, 2020, 11:56:43 AM

to jenkins...@googlegroups.com

Restarting Jenkins using the CLI (https://www.jenkins.io/doc/book/managing/cli/) is the same as doing it from the UI. When I said stop/start, I meant stopping and starting the Jenkins daemon/service/Docker container/whatever. The reason is that, IIRC, JCasC runs at the start time of the Jenkins process, and also IIRC, if you make changes to the JCasC config file and reload the configuration, or restart from the UI, the JCasC configuration is not recreated because the stage where it runs is not executed by those kinds of restart. Probably someone with deeper knowledge of JCasC can add more context.

It is easy to check: run a Jenkins Docker container configured with JCasC (e.g. https://github.com/kuisathaverat/jenkins-issues/tree/master/JENKINS-63703), then connect to the container, modify the JENKINS_HOME/jenkins.yaml file, and restart from the UI or CLI. The JCasC changes will not be applied. If you stop the Docker container and start it again, the changes are applied.

Tim Black

Sep 25, 2020, 2:03:25 PM

to Jenkins Users

Thanks. I believe you were saying stop/start because you're using Docker container. In your Docker example, stopping and restarting the Docker container is analogous to rebooting (power cycling, or sudo rebooting) the physical or virtual machine hosting the Jenkins service.

In this thread I'm saying that restarting the Jenkins service (which resides within a container or VM that is NOT being restarted/rebooted) IS sufficient to apply MOST CasC settings, but NOT the ssh agent jvmOptions. It's not a blocking problem, because I can get the desired effect by rebooting the master/controller and agent machines. But it's a mystery I'd like to understand better, because as I scale this cluster and roll out new configuration changes to it, I'm going to need to understand these mechanics.

Would this be an appropriate thread for jenkins-developers group? Is there another forum you could recommend to ask detailed questions about JCasC? (I'm on the gitter channel but it's quite hit and miss due to the format)

Björn Pedersen

Sep 28, 2020, 7:08:27 AM

to Jenkins Users

I think this is simply because the agent process survives the master restart (that is actually a feature) so if agent settings change, you need to disconnect and connect the agent (or otherwise restart the agent process to pick up the changes).

Tim Black

Oct 3, 2020, 7:06:39 PM

to jenkins...@googlegroups.com

Bjorn I like that explanation. If this behaviour is "as designed" then I just need to adjust my expectations.

I suspect that my Ansible playbooks are not relaunching the agent processes when changes happen. The agent options are specified through the JCasC yaml in the master/controller, and I suspect CasC does nothing to detect agent config changes and relaunch remote agent processes. Thus I have to reboot them..

I should probably add my own Ansible task to relaunch agent processes..
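One possible shape for such a task is sketched below: it only builds the Jenkins CLI invocations for the `disconnect-node` and `connect-node` commands, which a playbook or script could then run (for example with `subprocess.run`). The jar path, controller URL, credentials string, and function name are all placeholders, and the sequence has not been tested against a live controller.

```python
def relaunch_commands(agent, jenkins_url, cli_jar="jenkins-cli.jar", auth=None):
    """Build CLI commands that disconnect an agent and then reconnect it.

    cli_jar, jenkins_url and auth are placeholders for this sketch;
    auth, if given, is passed through the CLI's -auth option.
    """
    base = ["java", "-jar", cli_jar, "-s", jenkins_url]
    if auth:
        base += ["-auth", auth]
    return [
        # Disconnect so the running remoting process is dropped.
        base + ["disconnect-node", agent, "-m", "apply new launcher settings"],
        # Reconnect so the launcher starts a fresh agent process.
        base + ["connect-node", agent],
    ]


# Hypothetical controller URL; the agent name is the one used in this thread.
for command in relaunch_commands("jenkins-testing-agent-1", "http://localhost:8080/"):
    print(" ".join(command))
```

Building the argument lists separately from running them keeps the sketch easy to dry-run before wiring it into automation.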
