Speed up png.Decode


Lee Armstrong

Jun 26, 2020, 5:00:07 AM

to golang-nuts

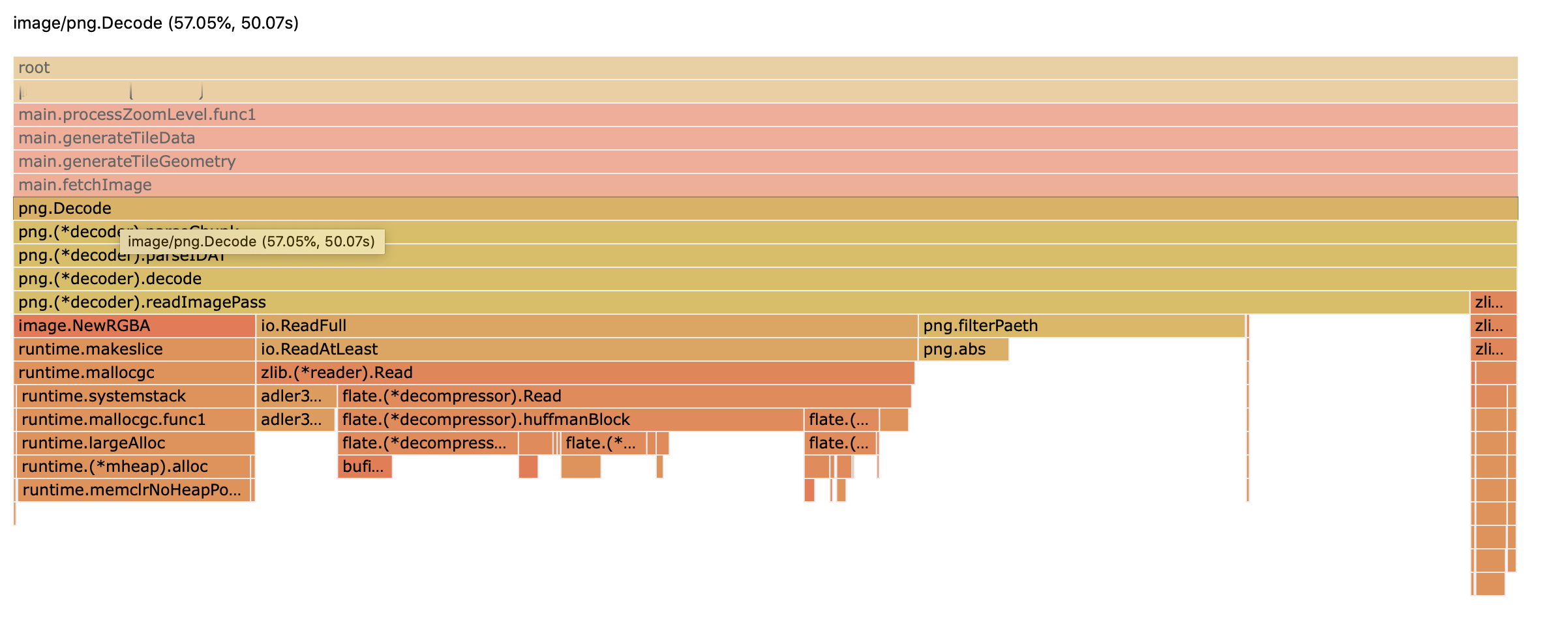

I have an application that reads PNG images from a database as []byte. png.Decode shows up as really heavy in the pprof profile I am taking. Is there a way to speed up the decode at all?

The code is as simple as the following, but it is called a lot, as I have around 16 million images to process. Am I out of luck here, or is there a win to be had somewhere?

```go
// Inside a function that returns error.
var b []byte
if err := cachedPackedStatement.QueryRow(x, y, z).Scan(&b); err != nil {
	return err
}
img, err := png.Decode(bytes.NewReader(b))
if err != nil {
	return err
}
```

howar...@gmail.com

Jun 26, 2020, 8:51:26 AM

to golang-nuts

I don't know whether the cgo call overhead for 16 million images will completely swamp the speed gains, or how much post-processing you need to do on these images, but you might try https://github.com/h2non/bimg and see if it gives you any wins. It claims to be 'typically 4x faster' than the Go image package. It is a cgo interface to libvips, which has an experimental branch that uses libspng, a performance-focused alternative to libpng.

Howard

Robert Engels

Jun 26, 2020, 8:55:40 AM

to howar...@gmail.com, golang-nuts

Just parallelize in the cloud. At a minimum, parallelize locally with multiple goroutines.


--

You received this message because you are subscribed to the Google Groups "golang-nuts" group.

To unsubscribe from this group and stop receiving emails from it, send an email to golang-nuts...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/golang-nuts/9f8d3086-75f4-47e1-b49a-4dce057258cco%40googlegroups.com.

Lee Armstrong

Jun 26, 2020, 8:59:45 AM

to golang-nuts

Thanks. I am already maxing out some servers, but I wondered whether the decoding itself could be sped up.

I will give the bimg package a go!

Rob Pike

Jun 26, 2020, 8:31:12 PM

to Lee Armstrong, golang-nuts

Without information about the particular images - the data set you're working with - it's hard to provide any accurate advice. If the images are small, maybe it's all overhead. If the images are of a particular form of PNG, maybe a fast path is missing. And so on.

To get meaningful help for problems like this, the more information you can provide, the better.

-rob


Lee Armstrong

Jun 27, 2020, 1:15:34 AM

to Rob Pike, golang-nuts

Thanks Rob,

Yes, you are right: these images are small, under 100 KB each, with an example below. As I mentioned, I have millions of them.

I am able to max out the CPUs with a worker pool, but that is when I noticed that much of the work was actually decoding the PNG images, which made me wonder whether decoders can be reused at all, and whether that would even help!

Robert Engels

Jun 27, 2020, 8:31:42 AM

to Lee Armstrong, Rob Pike, golang-nuts

Just because the bulk of the time is in the decode doesn’t mean the decode is inefficient or can be improved upon. It might be the most expensive stage in the process regardless of the implementation.


Michael Jones

Jun 27, 2020, 10:03:55 AM

to Robert Engels, Lee Armstrong, Rob Pike, golang-nuts

An easy "comfort" exercise:

save 1000 images to a directory in png form.

decode with Go and with several tools, such as ImageMagick

compare times

for format in jpeg, tiff, ...

convert all images to that format; do the test above anew

the result will be a comparison chart of Go's existing image decoders vs. those tools, across various image formats, for your images.

if it is within a few percent, you can be comfortable

if it is much slower, be uncomfortable and report a performance bug.

if it is faster, be happy.

michael


Michael T. Jones

michae...@gmail.com


David Riley

Jun 27, 2020, 10:33:17 AM

to Robert Engels, Lee Armstrong, Rob Pike, golang-nuts

On Jun 27, 2020, at 8:30 AM, Robert Engels <ren...@ix.netcom.com> wrote:


> Just because the bulk of the time is in the decode doesn’t mean the decode is inefficient or can be improved upon. It might be the most expensive stage in the process regardless of the implementation.

This is, I think, the most important point: image decoding is an expensive operation, so it makes sense that the bulk of your processing time is spent on it. The question is how Go's decoder stacks up against other decoders; if you're able to write a quick C proof of concept that decodes the same images with libpng (or libspng) and run the same benchmarks, you'll get your answer there.


Depending on how regular your images are (size-wise, especially), you may be able to get some additional mileage out of pooling your receiving buffers and reusing them once your operations are complete. There doesn't appear to be a hook for that in the current image/png package, as there is for encoding, though; you'd have to do some surgery on decoder.readImagePass to make that work.

- Dave
