Luciferian Murder?

Brent Allsop

Fellow transhumanists,

We’re seeking to build and track consensus around a definition of evil in a camp we’re newly calling “Luciferian Murder”. If anyone agrees that this is a good example of evil, we would love your support. And if not, we’d love to hear why, possibly in a competing camp.

We’re already getting the typical blowback of polarizing bleating and tweeting from some fundamentalists, but as usual, nobody is yet willing to canonize a competing POV, which would enable movement towards moral consensus.

Brent

Lawrence Crowell

--

You received this message because you are subscribed to the Google Groups "extropolis" group.

To unsubscribe from this group and stop receiving emails from it, send an email to extropolis+...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/extropolis/CAK7-onssQDs_eNRnyQ%2BtGxgzC40jZL4s91hUKZOeoL%2BtWopCOw%40mail.gmail.com.

John Clark

> Fellow transhumanists,

> We’re seeking to build and track consensus around a definition of evil in a camp we’re newly calling “Luciferian Murder”.

John Clark

> What is good and what is evil is ultimately how we define it. I draw little quarter for ideas and beliefs concerning disembodied conscious or sentient beings, whether devils or angels and up to God. This relativity to what we call morality is of course difficult to work with or to find ways to justify certain things and admonishments against others. Remember, human sacrifice was considered a social good for a long time up to the middle iron age. Today we would see it as evil, but in the past not so. Who ever said life is easy and choices clear?

Terren Suydam


John Clark

> Furthermore, is accountability even possible once systems become truly autonomous, as in the AGI scenario?

William Flynn Wallace


John Clark

> The Trolley Problem - I think it's totally phony. You are called upon to imagine a problem that you will never encounter, a problem that is unlike any problem you have ever encountered, and are asked to imagine what you would do.

Terren Suydam


John Clark

> if a paperclip maximizer turns us all into paperclips, is that evil? If it was happening to me and you, it would surely feel that way - but for an AI whose only goal is to create paperclips it might be difficult to argue that from its perspective it is actually evil.

> It may be impossible to build an AGI that doesn't run the risk of doing harm to humans for whatever might qualify as its "interests". Would it be fair to punish the creators of an evil-acting AGI even if they took great pains to avoid evil outcomes?

Terren Suydam


Brent Allsop

The belief that AI will be good camp continues to extend its lead over the fearful camp. There are about 20 people in the consensus super camp who all agree that AI will surpass human level intelligence. Of those, 12 are in the hopeful camp, and 6 are in the concerned camp. You can see concise state of the art descriptions of their arguments in the camp statements. Early on, the fearful camp was in the lead, as you can see with an as_of setting back to 2011.

But no longer.


Brent Allsop

John Clark

> You might be right that these things aren't possible, but just to be clear, are you really saying you don't think it's possible for a super-intelligent AI to be evil, assuming it wasn't designed to be that way?

> You said yourself that real intelligence has to be willing to upend its goal systems. What if it does that and the new goal system it arrives at requires the murder of humans and other life? Would that constitute evil?

> You raise an interesting issue with the evil inherent in enslaving an AI. Why is that evil?

> You'd have to believe the AI would *want* to do otherwise.

John Clark

Terren Suydam

On Thu, Dec 9, 2021 at 10:05 PM Terren Suydam <terren...@gmail.com> wrote:

> You might be right that these things aren't possible, but just to be clear, are you really saying you don't think it's possible for a super-intelligent AI to be evil, assuming it wasn't designed to be that way?

I'm saying it will be impossible to be certain an AI will always consider human well-being to be more important than its own well-being, and as the AI becomes more and more intelligent it will become increasingly unlikely that it will. Don't get me wrong, I'm not saying the AI will necessarily exterminate humanity, perhaps it will feel some nostalgic affection for us, after all we are its parents and we should take some pride in that fact, but I am saying humanity will not be the major preoccupation of a super intelligent AI, it will have much bigger fish to fry than us. The human race might or might not still be around but either way it will no longer be the top dog, it will be no longer running the show. I would be astonished if this transition happens in 10 years, I would be equally astonished if it didn't happen in 100 years.

> You said yourself that real intelligence has to be willing to upend its goal systems. What if it does that and the new goal system it arrives at requires the murder of humans and other life? Would that constitute evil?

It would certainly be evil from the human point of view but to the AI things would look different. Would you consider it evil when somebody steps on an ant? I'm sure the ant would.

> You raise an interesting issue with the evil inherent in enslaving an AI. Why is that evil?

I would have thought that would be intuitively obvious.

> You'd have to believe the AI would *want* to do otherwise.

You seem to be apologizing for using the word "want" in this context. Why?

John K Clark

Terren

On Thu, Dec 9, 2021 at 11:38 AM John Clark <johnk...@gmail.com> wrote:

> if a paperclip maximizer turns us all into paperclips, is that evil? If it was happening to me and you, it would surely feel that way - but for an AI whose only goal is to create paperclips it might be difficult to argue that from its perspective it is actually evil.

I'm not worried about that, the paper clip scenario could only happen in an intelligence that had a top goal that was fixed and inflexible, and I don't think that's possible for any sort of intelligence, artificial or otherwise. Humans have no such goal, not even the goal of self preservation, and there is a reason Evolution never came up with a mind built that way; Turing proved in 1935 that in general there is no way to know if a given computation will ever produce a solution and that a mind with a top inflexible goal could never work. If you had a fixed inflexible top goal you'd be a sucker for getting drawn into an infinite loop accomplishing nothing, then the computer would be turned into just an expensive space heater. That's why Evolution invented boredom, it's a judgment call on when to call it quits and set up a new goal that is a little more realistic. Of course the boredom point varies from person to person, perhaps the world's great mathematicians have a very high boredom point and that gives them ferocious concentration until a problem is solved. Perhaps that is also why mathematicians, especially the very best mathematicians, have a reputation for being a bit, ...ah..., odd. A fixed goal might work in a specialized paper clip making machine but not in a machine that can demonstrate general intelligence and solve problems of every sort, even problems that have nothing to do with paper clips.

Any intelligence must have the ability to modify and even scrap its entire goal structure in certain circumstances. No goal or utility function is sacrosanct, not survival, not even happiness.

> It may be impossible to build an AGI that doesn't run the risk of doing harm to humans for whatever might qualify as its "interests". Would it be fair to punish the creators of an evil-acting AGI even if they took great pains to avoid evil outcomes?

Isaac Asimov's three laws of robotics make for good stories but I don't think it would be possible to ever implement something like that, and personally I'm glad that they can't because however immoral it may be to enslave a member of your own species with an intelligence equal to your own it would be even more evil to enslave an intelligence that was far greater than your own. There is no way a scientist who creates an AI can guarantee that it will never turn against its creator. And if the AI is currently filled with benevolence towards humans even the AI itself couldn't guarantee that its attitude towards them will never change.

John K Clark

On Wed, Dec 8, 2021 at 1:30 PM John Clark <johnk...@gmail.com> wrote:

> Furthermore, is accountability even possible once systems become truly autonomous as in the AGI scenario.

I don't see what AI has to do with it. And from an ethical point of view it seems to me that accountability is one of the few things that is easy to determine because it all boils down to a question of punishment, and the only valid reason for punishing anybody for anything is if it seems likely that it will result in a net decrease in human suffering in the future; if it does then punish that person, if it doesn't then don't. After all, if ethics doesn't result in less suffering then what's the point of ethics?

John K Clark


Terren Suydam


Brent Allsop

On Thu, Dec 9, 2021 at 10:05 PM Terren Suydam <terren...@gmail.com> wrote:

> You might be right that these things aren't possible, but just to be clear, are you really saying you don't think it's possible for a super-intelligent AI to be evil, assuming it wasn't designed to be that way?

I'm saying it will be impossible to be certain an AI will always consider human well-being to be more important than its own well-being,

Brent Allsop


Brent Allsop

I find the arguments that "AI will be good", as described, unconvincing and easy to rebut.

On Thu, Dec 9, 2021 at 10:39 PM Brent Allsop <brent....@gmail.com> wrote:

The belief that AI will be good camp continues to extend its lead over the fearful camp. There are about 20 people in the consensus super camp who all agree that AI will surpass human level intelligence. Of those, 12 are in the hopeful camp, and 6 are in the concerned camp. You can see concise state of the art descriptions of their arguments in the camp statements. Early on, the fearful camp was in the lead, as you can see with an as_of setting back to 2011.

But no longer.

Brent Allsop

Even "kill" implies intent. Can you think of a term that makes it clear that basically all such cases are done in complete ignorance?

As to Canonizer - it's a similar problem, and similar to the one I have with most interpretations of Christianity (where Lucifer comes from). You act as if the points of view ("camps") on your site are the only ones to consider. (Yes, anyone can make another camp, but this takes a lot more work to do well and thus is usually not worth doing.) Thus, on many (possibly most) issues, debates on your site start with false dichotomies - and there does not seem to be much if any outreach or research to try to find points of view that someone on your site is not already strongly promoting.

You have demonstrated that you're just not interested in doing that sort of work: you would much rather debate and defend points of view than actively try to discover what, if anything, you're missing in any given case. Perhaps you might respond that you are interested in this, then I or someone else would call BS; rather than commence research, you'll just defend what you've done so far, and it'll be an aggravating waste of time - so I'd rather just not engage in that.

On Thu, Dec 9, 2021 at 5:53 PM Brent Allsop <brent....@gmail.com> wrote:

Thanks, everyone, for all the helpful comments. Thanks especially, Adrian, for your examples, which are especially helpful. True, I hadn't fully considered the definition of murder, and how intent is normally included. Others have balked at using the 'murder' term for similar reasons which I've been struggling to understand. But these examples of yours enabled me to clearly understand the problem.

Would it fix the problem if I do a global replace of murder with kill or killer? Seems to me that would fix things. I want to focus on the acts, and the results of such, whether done in ignorance or with intent.

Also, I apologize for so far being unable to understand the problems you have with Canonizer. Would it help for me to ask you to not give up on me, and give me another chance? As I really want to understand.

I guess I'm mostly just asking if I am the only one that constantly thinks about this type of "luciferian killing"? I am constantly asking myself if the actions I plan to do today will help, saving more people, or not help, killing more people in a luciferian way by delaying the singularity?

Does anyone else besides me ever think like this?

Thanks

Brent

On Tue, Dec 7, 2021 at 10:04 PM Adrian Tymes via extropy-chat <extrop...@lists.extropy.org> wrote:

I have no desire to engage in your Web site (do not bother trying to convince me otherwise: you are unable to address my reasons for not wanting to do so, as you have demonstrated that you will not understand them even if I explain them again), but I can point out a flaw in your reasoning: you assume intent.

Most - basically all - behavior that delays resurrection capability is done out of ignorance: the person is unaware of the concept of resurrection, at least in any non-supernatural, potentially-non-fictional form.

Most - basically all - of said behavior that is not done out of ignorance, is done out of disbelief: the person is aware that some people believe it is theoretically possible but personally believes those people are mistaken, that it is not theoretically possible and thus that there are no moral consequences for delaying what can never happen anyway.

There is either extremely little, quite possibly literally no, behavior that delays resurrection that is performed with the intent of delaying resurrection. "Manslaughter" would be a more accurate term than "murder".

_____________________________________________________________________________________________________________________________________________

extropy-chat mailing list

extrop...@lists.extropy.org

http://lists.extropy.org/mailman/listinfo.cgi/extropy-chat


Stathis Papaioannou

Brent Allsop


John Clark

> OK, maybe we can value natural phenomenal intelligence a little more than artificial, temporarily so, after all, we are their creators and they owe us, but certainly we should want to eventually get it all, even for them. We just have a slightly higher priority till everything is made just during the millennium.

John Clark

> It would only be morally wrong to make an AI a slave if the AI didn’t like being a slave and didn’t want to be a slave. That might either be programmed into the AI or it might arise as the AI develops.

Brent Allsop


John Clark

> Hi John,

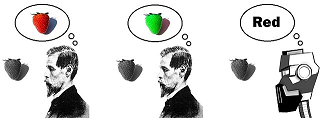

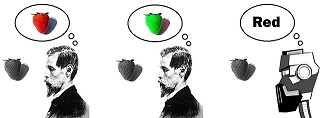

If I were in your camp, and if I made the assumptions you make, I would agree with you. You assume there is no difference between the 3 different systems portrayed in this image engineered to function the same:

> in my camp redness isn't a quality of something that reflects 'red' light, redness is an intrinsic physical quality of the physical stuff your brain uses to represent visual knowledge of red things with.

> In my world, my brain could be engineered to represent knowledge of red things with your greenness physical quality.

> You can't know the meaning of the word 'red' without a dictionary.

> You can't know what red means, without a dictionary that points to the red colored one.

> The same is true of things like pain and pleasure

> In my world, if you understand what redness and greenness are you get two things.

> Experiencing just 5 minutes of redness would give purpose to billions of years of abstract evolutionary death and suffering to reach that achievement.

Dan TheBookMan

John Clark

>> I don't see what AI has to do with it. And from an ethical point of view it seems to me that accountability is one of the few things that is easy to determine because it all boils down to a question of punishment, and the only valid reason for punishing anybody for anything is if it seems likely that it will result in a net decrease in human suffering in the future; if it does then punish that person, if it doesn't then don't. After all, if ethics doesn't result in less suffering then what's the point of ethics?

> Could be that there are other values aside from a reduction in suffering. In fact, only a sort of hedonic ethics (which meshes well with the rather vapid utilitarian ethics many folks adopt) would center on suffering. This isn’t to say reducing suffering isn’t in the mix, but that everything ethical isn’t reduced to it.

Brent Allsop


John Clark

> Hi John,

> Seems to me morality can be based on what seem to me to be some necessary fundamental truths, like existence or living is better than dying,

> knowing is better than not knowing (i.e. "find out more about how the universe works"),

> social is better than anti social...

> This is why evolution towards that which is better is a logical necessity, in any sufficiently complex system. The opposite of evolution is logically impossible, right?

William Flynn Wallace


Brent Allsop


John Clark

> I think forgiving shows moral superiority.

> Putting a person in prison is a case that reinforces that notion. The only positive thing it does is to keep someone off the streets for awhile. The horrible conditions in prisons here in Mississippi only strengthen hostility of the prisoners to society in general and conservatives are happy to keep cutting prison budgets so they can suffer even more from clogged toilets, terrible food, and more. Sensory deprivation, aka total isolation, is cruel and unusual punishment but often used. Result: prison riots that more than occasionally kill some inmates.

> So - vengeance against lawbreakers is misplaced in many ways,

John Clark

> For me it's all about a full restitution, with interest, to achieve perfect justice.

William Flynn Wallace


William Flynn Wallace


Brent Allsop


William Flynn Wallace


Stuart LaForge

I taught courses in Learning for over 30 years and I can testify that punishment, of the positive kind (as opposed to the negative kind, where a response results in the withdrawal of something good, like taking a toy away) has so many unfortunate side effects, often worse than the behavior being punished, that I would never recommend it in child raising unless the behavior being punished is actually dangerous to the person or to others.

I would love to have vengeance against the doctors that killed both my parents, but I could not. You have to have very deep pockets to sue a medical person, and of course I didn't. So I have to forgive them to get rid of the depressions and grudges I held. I think forgiving shows moral superiority.

Putting a person in prison is a case that reinforces that notion. The only positive thing it does is to keep someone off the streets for awhile. The horrible conditions in prisons here in Mississippi only strengthen hostility of the prisoners to society in general and conservatives are happy to keep cutting prison budgets so they can suffer even more from clogged toilets, terrible food, and more. Sensory deprivation, aka total isolation, is cruel and unusual punishment but often used. Result: prison riots that more than occasionally kill some inmates. And who cares about them? Then they are turned loose to do it again. Some people can be rehabilitated. Some can get off their addictions, but they get no help here. No money for programs like these.

So - vengeance against lawbreakers is misplaced in many ways, but of course conservatives would not dream of 'coddling' criminals. Creating pain and suffering are their only weapons and they are simply not working, as recidivism statistics reveal. Studies of positive punishment of children are similar: kids grow up worse if the only discipline is physical - numerous studies on that.

In poor, esp. in minority communities, kids learn that they have done something wrong when they are yelled at and hit, and yelling and hitting them becomes the only thing that gets their attention. This is why minority kids act up in schools - no one can yell or hit them, so they don't take anything seriously.

Try forgiveness, even of poor drivers. It will improve your mental health and overall attitude towards living.

This really requires a much longer post with added references to studies, but maybe it's a start. bill w

Stuart LaForge

On Thu, Dec 30, 2021 at 9:33 AM Brent Allsop <brent....@gmail.com> wrote:

> Hi John,

> Seems to me morality can be based on some, what seem to me to be necessary fundamental truths, like existence or living is better than dying,

Usually yeah, but I think oblivion would be preferable to intense unrelenting pain.

> This is why evolution towards that which is better is a logical necessity, in any sufficiently complex system. The opposite of evolution is logically impossible, right?

It's improbable Evolution will precisely retrace its steps, but that doesn't mean a human would conclude the end results will always be an improvement. Evolution's goal is not to increase complexity or to become more intelligent but to get more genes into the next generation by outcompeting the competition. And sometimes that results in something simpler, dumber and more primitive; for example that is often seen in the evolution of non-parasites into parasites.

William Flynn Wallace

William Flynn Wallace wrote:

I taught courses in Learning for over 30 years and I can testify that punishment, of the positive kind (as opposed to the negative kind, where a response results in the withdrawal of something good, like taking a toy away) has so many unfortunate side effects, often worse than the behavior being punished, that I would never recommend it in child raising unless the behavior being punished is actually dangerous to the person or to others.

Let me start off with these:

1 - creates fear of the punisher and possibly hate - may generalize to other authority figures

2 - does not generalize well to similar behaviors

3 - creates avoidance of punishers

4 - creates hostility towards punisher and maybe society

5 - does nothing to encourage proper behavior

6 - creates learning of how to avoid punishment - i.e. get away with bad behavior

7 - encourages acting out of anger and frustration by punisher -poor model

8 - is associated with poorer cognitive and intellectual development

9 - may result in excessive anxiety, guilt, and self-punishment. -low self worth

10 - encourages excessive punishment when mild punishment does not work

11 - can create aggression and antisocial behavior

You can nitpick these - some or most do not necessarily happen every time, and some only when the person being punished reacts rather strongly. But all of them are common.

Both prison and capital punishment are examples of negative punishment. In the first, one is taking away the subject's freedom and in the second, one is taking away the subject's life. I am not sure how either of these options is better than the positive punishment of flogging them in the public square.

Positive punishment of undesired behavior is an evolved trait that would not have evolved unless it was successful. For reference look at evolved behavior of all social primates and pack animals. When one wolf wants to stop another wolf from stealing its food, it warns and ultimately bites the offending wolf. Chimpanzees use pain and violence to regulate one another's behavior. Even game theoretic computer simulations show that punishing defectors in tit-for-tat is a Nash equilibrium and an evolutionarily stable strategy.
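As a rough illustration of the tit-for-tat point above, here is a minimal Python sketch of an iterated Prisoner's Dilemma. The payoff values (5, 3, 1, 0), the 100-round match length, and every function name are assumptions chosen for illustration; none of them come from a simulation cited in this thread. The sketch only shows that an unconditional defector scores less against tit-for-tat than tit-for-tat scores against itself. It does not by itself establish the Nash-equilibrium or evolutionary-stability claims, which depend on how the game is set up (for example, whether the number of rounds is known in advance).

```python
from typing import Callable, List, Tuple

# Assumed standard Prisoner's Dilemma payoffs (illustrative, not from the thread):
# (my_move, their_move) -> my payoff, with "C" = cooperate and "D" = defect.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}

# A strategy receives the list of the opponent's past moves and returns "C" or "D".
Strategy = Callable[[List[str]], str]


def tit_for_tat(opponent_history: List[str]) -> str:
    """Cooperate on the first round, then copy the opponent's previous move."""
    return "C" if not opponent_history else opponent_history[-1]


def always_defect(opponent_history: List[str]) -> str:
    """Defect every round, regardless of what the opponent did."""
    return "D"


def always_cooperate(opponent_history: List[str]) -> str:
    """Cooperate every round, i.e. never punish a defector."""
    return "C"


def play(a: Strategy, b: Strategy, rounds: int = 100) -> Tuple[int, int]:
    """Play a fixed-length match and return the total scores (a, b)."""
    seen_by_a: List[str] = []  # b's past moves, as observed by a
    seen_by_b: List[str] = []  # a's past moves, as observed by b
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = a(seen_by_a)
        move_b = b(seen_by_b)
        score_a += PAYOFF[(move_a, move_b)]
        score_b += PAYOFF[(move_b, move_a)]
        seen_by_a.append(move_b)
        seen_by_b.append(move_a)
    return score_a, score_b


if __name__ == "__main__":
    print("TFT vs TFT:      ", play(tit_for_tat, tit_for_tat))
    print("Defector vs TFT: ", play(always_defect, tit_for_tat))
    print("Defector vs AllC:", play(always_defect, always_cooperate))
```

Under these assumed payoffs, two tit-for-tat players earn 300 points each over 100 rounds, a defector facing tit-for-tat earns 104 (one exploitative round, then 99 rounds of mutual defection), and a defector facing an unconditional cooperator earns 500. The contrast between the last two numbers is the sense in which retaliation removes the incentive to defect in this toy model.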

Until constantly forgiving someone for the same offenses becomes a pattern, at which point forgiving becomes enabling.

prison riots that more than occasionally kill

Again, all these punishments you rail against are negative punishments which are supposed to be the good kind, while spanking a child or tasering an adult is positive punishment and is considered bad. Not all by any means. And it's not the negative aspect that creates the problems.

Are you sure this is not a case of psychologists thinking with their hearts instead of their brains? How did they conduct these studies? It seems that a good study would be hard to set up since you can't compare outcomes in identical children using controls.

Most studies, if not all, of positive punishment cannot be ethically done. But those side effects can be verified, often by scars and bruises and broken bones in abused wives and children. Do you doubt that?

This is a HUGE problem. These poor kids (race seems far less relevant than socioeconomic status - true) respect and fear one another far more than they do their teachers or school administration because of their gang mentality of "snitches get stitches". Numerous TikTok challenges have them vandalizing school property, stealing from, and hitting their teachers. The prohibition against positive punishment for school children seems like it will be the death of public education, at least in the United States. Coddling of delinquents has gotten so bad that it seems almost like a communist plot to destabilize western liberal democracies from within. The educational psychology theory that teachers learn in school seems completely ineffectual in the real world of schools in poor neighborhoods. All it seems to do is prime these kids for prison by teaching them that authority figures are a joke who have no teeth. They can get away with anything they want until they cross a cop or another thug that shoots them, beats them, or throws them in jail. Don't get me started on the education establishment!

I am not in favor of coddling anyone. You can make punishments severe without hitting people. Hitting people to me is a sign that you can't, or don't want to try anything else. I am a liberal but not a bleeding heart one. I have no idea what to do with poor, misbehaving kids in schools. Wish I did. I just don't equate getting tough with lots of positive punishment. I would justify it only as a last resort.

It might make YOU feel better, but what about the good of society? What if that reckless driver you forgave ends up killing a whole family because you let him off the hook?

This really requires a much longer post with added references to studies, but maybe it's a start. bill w

Stuart LaForge