conversation with GPT-4 on black hole QM

Lawrence Crowell

Einstein advanced the equivalence principle. It states that 1) it is not possible to distinguish an inertial frame in flat spacetime from a sufficiently local inertial frame falling in a gravity field, and 2) an accelerated frame in flat spacetime is equivalent to a frame held at rest against gravity on the surface of a planet or other hard gravitating body. Can it then be said that two entangled particles in spacetime, whether flat or curved, have that entanglement conserved?

GPT-4 answer: The equivalence principle in general relativity states that the effects of gravity are indistinguishable from the effects of acceleration. It does not make any specific statement about the conservation of entanglement between two particles in spacetime, whether in flat or curved spacetime.

Entanglement is a quantum mechanical phenomenon that describes the correlation between two or more particles, even when they are separated by large distances. The conservation of entanglement is a fundamental property of quantum mechanics, which holds true regardless of the presence of gravity or the curvature of spacetime.

Therefore, it can be said that entanglement between two particles is conserved in both flat and curved spacetime, as long as there is no external interaction that disturbs or breaks the entanglement. The effects of gravity, as described by the equivalence principle, do not affect the conservation of entanglement between two particles.

Good, this is what I concluded as well. Then to take this further: given an EPR pair, where Bob remains far from the black hole and Alice is in a freely falling frame into the black hole, there are two interpretations of events. Alice will consider the entanglement with Bob as continuing. Bob, on the other hand, will conclude, due to red-shifting in tortoise coordinates and the fact that Alice’s time is dilated asymptotically to a crawl, that his half of the EPR pair is entangled with the black hole. The two perspectives are effectively equivalent to each other.

GPT-4 ans:

Yes, you are correct. According to the equivalence principle, there is no difference between a uniform acceleration and being in a gravitational field. Therefore, for Bob, who is far away from the black hole, the entanglement between his particle and Alice's particle will be affected by the gravitational field of the black hole. This will cause the entangled state to evolve differently than it would in a flat spacetime.

On the other hand, for Alice, who is in a freely falling frame into the black hole, there is no gravitational force acting on her. From her perspective, the entanglement between her particle and Bob's particle will continue to evolve according to the laws of quantum mechanics.

These two perspectives are indeed equivalent, even though they appear to be different. Bob's observations can be explained by the effects of the gravitational field on his particle's evolution, while Alice's observations can be explained by the absence of a gravitational force on her particle's evolution.

It is worth noting that the details of the evolution of the entangled state in this scenario are highly dependent on the specific setup and initial conditions. Therefore, the specific outcomes of the two perspectives may differ in different situations. However, the equivalence principle ensures that both perspectives are equally valid and consistent with the laws of physics.

This then means that Bob and Alice have different subjective interpretations of the same physics. Alice observes no apparent difference with standard quantum mechanics. Bob on the other hand is uncertain of the status of the entangled state. The tortoise coordinates with t’ = t – 2m log|r – 2m|, and the associated red-shifting means that Bob sees this bipartite entanglement dissolve into an entanglement with all the entangled states on the Planck region above the event horizon.
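For reference, a sketch of the standard Schwarzschild relations behind the red-shifting argument, in units G = c = 1. The symbols f, r_*, u, v and ω are introduced here for illustration and do not appear in the original message. The redshift formula is the textbook one for a static emitter, so for a freely falling Alice it is only indicative.

```latex
% Schwarzschild metric factor and tortoise coordinate (G = c = 1)
f(r) = 1 - \frac{2m}{r}, \qquad
r_* = r + 2m \ln\left|\frac{r}{2m} - 1\right|, \qquad
\frac{dr_*}{dr} = \frac{1}{f(r)}

% Null coordinates: outgoing rays have u = const, ingoing rays have v = const
u = t - r_*, \qquad v = t + r_*

% Frequency received at infinity from a static emitter at radius r
\omega_\infty = \omega_{\rm emit} \sqrt{1 - \frac{2m}{r}}
```

As r approaches 2m, r_* runs off to minus infinity and the received frequency goes to zero, which is the sense in which Bob sees Alice's signals slow to a crawl.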

GPT-4 ans: Yes, that's correct. Bob and Alice have different subjective interpretations of the same physics due to their different perspectives in the presence of the black hole. From Alice's perspective, the entangled state between her particle and Bob's particle continues to evolve according to standard quantum mechanics. However, from Bob's perspective, the entangled state appears to dissolve into an entanglement with the black hole itself.

As you mentioned, the redshift factor associated with Bob's perspective causes the entangled state to evolve in a way that appears to dissolve the entanglement between Alice's particle and Bob's particle. This can be thought of as an "entanglement swap" where the entanglement between the two particles is effectively transferred to a new entanglement between Alice's particle and the black hole.

It's worth noting that while this "entanglement swap" appears to occur from Bob's perspective, it is still consistent with the laws of quantum mechanics and the conservation of entanglement. The entangled state between Alice's particle and Bob's particle is still preserved in the overall system, even though it may be redistributed among different entangled states within that system.

Overall, this scenario demonstrates the importance of considering the subjective perspectives of different observers when interpreting physical phenomena in the presence of strong gravitational fields.

This begins to get deeper into the big question here. If the black hole has entropy S = Nk, here assuming a naïve microcanonical partition of N states and k = Boltzmann’s constant, then from Bob’s perspective the entanglement has changed from a bipartite entanglement to an N+1 entanglement. Alice observes nothing of this sort. This appears to suggest a possible crack in the AMPS argument. I may indicate next what I think that may be.

GPT-4 ans: The scenario you describe, where the entanglement appears to change from a bipartite entanglement to an N+1 entanglement from Bob's perspective, does not necessarily indicate a crack in the argument of the AMPS (Almheiri-Marolf-Polchinski-Sully) paper.

The AMPS argument concerns the black hole information paradox, which arises from the apparent loss of information when matter falls into a black hole. The argument suggests that this apparent loss of information can be resolved by considering the entanglement structure of the Hawking radiation emitted by the black hole.

In the scenario you describe, the entanglement between Alice's particle and Bob's particle appears to dissolve into an entanglement with the black hole, resulting in an N+1 entanglement structure from Bob's perspective. However, the entanglement structure of the overall system, including the black hole and the emitted Hawking radiation, remains consistent with the AMPS argument.

Specifically, the AMPS argument proposes that the entanglement between the black hole and the Hawking radiation determines the entanglement structure of the emitted radiation. The N+1 entanglement structure from Bob's perspective can be seen as a result of this entanglement structure between the black hole and the emitted radiation.

Therefore, while the scenario you describe may appear to challenge the AMPS argument, it is still consistent with the overall picture proposed by the argument. It highlights the importance of considering the subjective perspectives of different observers in interpreting physical phenomena, but it does not necessarily imply a fundamental flaw in the AMPS argument itself.

Let me then rephrase that. The black hole information paradox stems from the fact that Hawking radiation is entangled with a black hole. However, after the Page time, newly emitted Hawking radiation must be entangled with previously emitted Hawking radiation plus the black hole. Hence the bipartite entanglement of the previously emitted radiation and the black hole transforms into a tripartite entanglement. This is a source of the apparent non-unitary change in the information, or entropy S = −Tr[ρ log(ρ)], which in quantum mechanics is easily shown to be invariant under unitary evolution. Can this then suggest that the topology of entanglement in curved spacetime may in fact be a matter of subjective, maybe Bayesian, observation?
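The invariance of S = −Tr[ρ log(ρ)] mentioned here can be checked numerically in a toy case. The following is a minimal sketch for a two-qubit Bell pair in flat-space quantum mechanics; it says nothing about black holes. All function and variable names are hypothetical and chosen for illustration, and the particular unitary applied to Alice's qubit is an arbitrary assumption.

```python
import cmath
import math


def entropy_2x2(rho):
    """Von Neumann entropy S = -Tr[rho ln rho] of a 2x2 density matrix, in nats."""
    a, d = rho[0][0].real, rho[1][1].real
    b = rho[0][1]
    # Closed-form eigenvalues of the Hermitian matrix [[a, b], [conj(b), d]].
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    eigenvalues = (mean + radius, mean - radius)
    # Zero eigenvalues contribute nothing to the entropy.
    return -sum(p * math.log(p) for p in eigenvalues if p > 1e-12)


def reduced_state_of_bob(psi):
    """Trace out Alice from a two-qubit pure state stored as psi[2*a + b]."""
    return [
        [sum(psi[2 * a + b1] * psi[2 * a + b2].conjugate() for a in range(2))
         for b2 in range(2)]
        for b1 in range(2)
    ]


def apply_to_alice(u, psi):
    """Apply a 2x2 unitary u to Alice's qubit only (identity on Bob's)."""
    return [
        sum(u[a][a2] * psi[2 * a2 + b] for a2 in range(2))
        for a in range(2)
        for b in range(2)
    ]


# Bell pair (|00> + |11>)/sqrt(2); the first index is Alice, the second is Bob.
amp = complex(1 / math.sqrt(2))
bell = [amp, 0j, 0j, amp]

# An arbitrary unitary acting on Alice's qubit (illustrative parameters).
theta, phi = 0.7, 0.3
c, s = math.cos(theta), math.sin(theta)
u_alice = [[c, -s * cmath.exp(1j * phi)],
           [s * cmath.exp(-1j * phi), c]]

s_before = entropy_2x2(reduced_state_of_bob(bell))
s_after = entropy_2x2(reduced_state_of_bob(apply_to_alice(u_alice, bell)))

print("entanglement entropy before:", s_before)
print("entanglement entropy after: ", s_after)
```

Both values should equal ln 2 up to rounding: a unitary acting on Alice's side alone leaves Bob's reduced state, and hence the entanglement entropy, unchanged.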

GPT-4 ans: The non-unitary evolution of information in the presence of a black hole is indeed at the heart of the black hole information paradox, and the tripartite entanglement structure you describe is an important aspect of this paradox. The AMPS argument attempts to resolve this paradox by proposing that the entanglement structure of the emitted Hawking radiation is determined by the entanglement between the black hole and the radiation, and that this entanglement structure is consistent with unitary evolution.

Regarding the topology of entanglement in curved spacetime, it is true that the subjective perspectives of different observers can lead to different interpretations of entanglement and its evolution. In the scenario you describe, Bob and Alice have different interpretations of the same physical system due to their different perspectives in the presence of a black hole. However, this does not necessarily imply that the topology of entanglement itself is subjective or Bayesian.

Rather, the topology of entanglement is an objective property of the physical system that can be described mathematically using the formalism of quantum mechanics. The subjective perspectives of different observers can lead to different interpretations of this topology, but this does not change the objective nature of the topology itself.

In summary, while the subjective perspectives of different observers can play a role in the interpretation of entanglement in curved spacetime, the topology of entanglement is an objective property of the physical system that can be described using the formalism of quantum mechanics.

I would agree if the topology of entanglement is completely accessible to observations. If Bob has an enormous amount of resources and by some means can snuggle up to within a Planck length of the horizon, where the acceleration is √(c^7/(Għ)) ≃ 10^{52} m/sec^2, he could in principle access this information. The Hawking radiation would gush out of the black hole. The Shapiro theorem about geodesics with positive energy is turned on its head, and Bob can access in principle everything. However, in practice this cannot happen. Bob must remain content with partial access to the topology of this entanglement. He in fact then does not know whether the entanglement is equivalent to his original simple bipartite entanglement or a vastly more complex N-tangle. This appears to be observer dependent, at least for one observer.
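The acceleration quoted here can be checked with a few lines of arithmetic. This is a minimal sketch using CODATA 2018 values for the constants; the variable names are chosen for illustration only.

```python
import math

# CODATA 2018 values in SI units.
C = 299_792_458.0        # speed of light, m/s (exact by definition)
G = 6.67430e-11          # Newtonian gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34   # reduced Planck constant, J s

# Planck length l_P = sqrt(hbar G / c^3).
planck_length = math.sqrt(HBAR * G / C**3)

# Planck acceleration sqrt(c^7 / (G hbar)), which is the same as c^2 / l_P.
planck_acceleration = math.sqrt(C**7 / (G * HBAR))

print(f"Planck length:       {planck_length:.3e} m")
print(f"Planck acceleration: {planck_acceleration:.3e} m/s^2")
```

The result is about 5.6 × 10^51 m/s^2, consistent with the order of magnitude given above.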

Thank you, you appear to largely agree with some of my assessments --- though you give some critical changes I need to consider.

GPT-4 ans:

You're welcome! It's always important to carefully consider different perspectives and arguments in a complex and evolving field like physics. If you have any further questions or ideas to discuss, feel free to ask!

John Clark

> I had this conversation with GPT-4 on aspects of black hole quantum mechanics. I kept it largely non-mathematical. This is interesting, and it suggests a level of processing that is complex. An AI system was fed ephemeris data on the appearance of the sun, moon and planets in the sky and it deduced Kepler's laws. Holy Sh*t Batman --- the world is changing.

Lawrence Crowell

On Fri, May 5, 2023 at 8:02 PM Lawrence Crowell <goldenfield...@gmail.com> wrote:

> I had this conversation with GPT-4 on aspects of black hole quantum mechanics. I kept it largely non-mathematical. This is interesting, and it suggests a level of processing that is complex. An AI system was fed ephemeris data on the appearance of the sun, moon and planets in the sky and it deduced Kepler's laws. Holy Sh*t Batman --- the world is changing.

Holy Singularity Batman, you're right! I think we can safely say that the Turing Test has been passed. If you had this online conversation 10 years ago would you have had any doubt that you were communicating with a human being? And to think, some very silly people still maintain that GPT-4 is nothing but a glorified autocomplete program that just uses statistics to compute what the next word in a sentence most probably is. Any rational person who held that view and then read your conversation with GPT-4 would change their opinion of it, but some people are not rational and they will continue to whistle past the graveyard.

John K Clark

John Clark

>> Holy Singularity Batman, you're right! I think we can safely say that the Turing Test has been passed. If you had this online conversation 10 years ago would you have had any doubt that you were communicating with a human being? And to think, some very silly people still maintain that GPT-4 is nothing but a glorified autocomplete program that just uses statistics to compute what the next word in a sentence most probably is. Any rational person who held that view and then read your conversation with GPT-4 would change their opinion of it, but some people are not rational and they will continue to whistle past the graveyard.
>> John K Clark

> To be honest I have no clear idea whether GPT is actually sentient. My dogs have no numerical ability or spatial reasoning, but I have a sense that they are sentient. Whether an AI like this is actually self-aware is something I am agnostic about.

Lawrence Crowell

Lawrence Crowell

John Clark

> I spent some time on GPT-4 this afternoon. I wrote about a topic that was leading to an inference I had made. Before I wrote on that inference GPT made the same inference.

--

You received this message because you are subscribed to the Google Groups "extropolis" group.

To unsubscribe from this group and stop receiving emails from it, send an email to extropolis+...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/extropolis/a6ef449e-26bc-4cf7-9208-078afa98153cn%40googlegroups.com.

Lawrence Crowell

On Mon, May 8, 2023 at 8:10 PM Lawrence Crowell <goldenfield...@gmail.com> wrote:

> I spent some time on GPT-4 this afternoon. I wrote about a topic that was leading to an inference I had made. Before I wrote on that inference GPT made the same inference.

Wow! Would I be correct in saying that you gave GPT-4 your own personal Turing Test and it passed because you couldn't tell if you were conversing with a machine or a human being with at least average intelligence?

John K Clark

John Clark

> I have found 2 mistakes it [GPT-4] has made. It has caught me on a few errors as well.

> It is a very good emulator of intelligence.

> It also is proving to be a decent first check on my work. It might be said it passes some criterion for Turing tests, though I have often thought this idea was old fashioned in a way.

Lawrence Crowell

John Clark

> This does make me ponder what is the relationship between consciousness and intelligence. I suspect GPT-4 and other AI systems may be intelligent, but they are so without underlying consciousness. Our intelligence is in a sense built upon a pre-existing substratum of sentience.

> My dogs are sentient, but when it comes to numerical intelligence they have none, and indeed very poor spatial sense.

> we subjectively experience as consciousness

> is built on a deeper substrate of biological activity.

Lawrence Crowell

John Clark

> What you write is rather standard fare.

> I think sentience or even bio-sense has its basis in some means by which white noise processes, such as statistical mechanics and quantum mechanics, are adjusted to have pink noise.

Brent Allsop


Lawrence Crowell

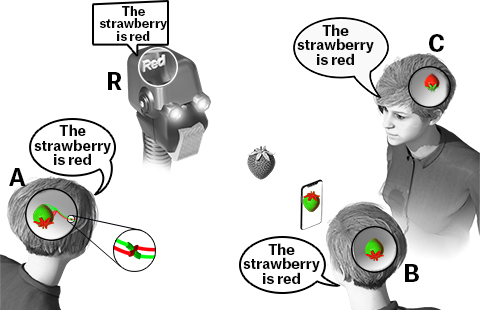

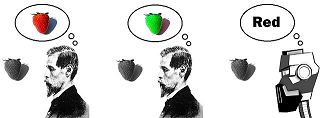

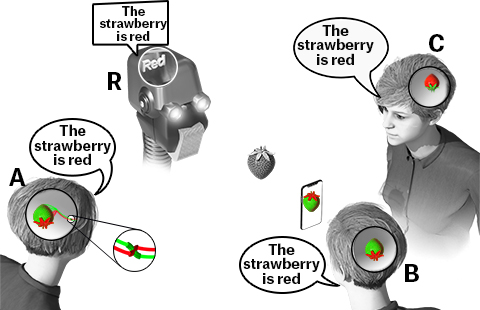

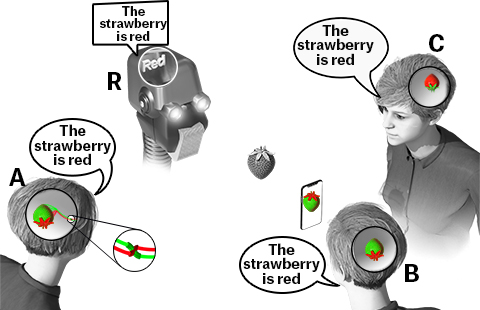

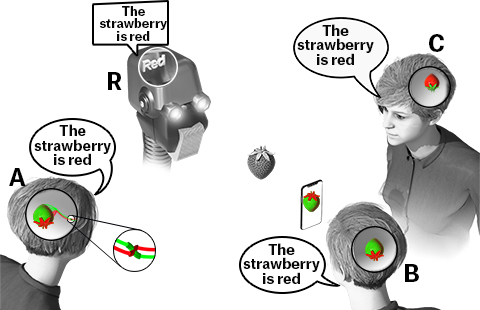

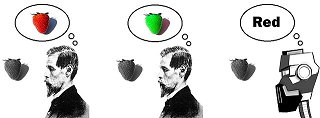

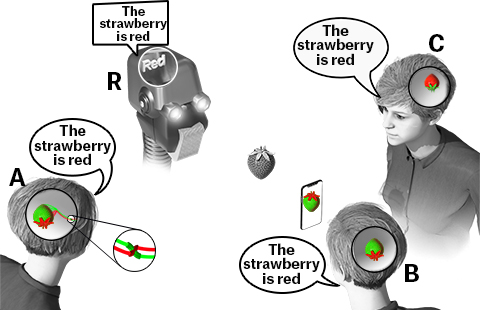

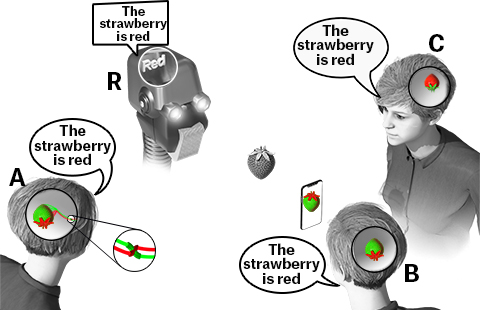

Hello Mr Lawrence,

John and I have been having this conversation you guys (and surely gazillions of people all over the world) are repeating, since probably back in the 90s. I've been saying things like you:

"This does make me ponder what is the relationship between consciousness and intelligence. I suspect GPT-4 and other AI systems may be intelligent, but they are so without underlying consciousness. Our intelligence is in a sense built upon a pre-existing substratum of sentience."

And John has been endlessly responding with:

"consciousness is the way data feels when it is being processed intelligently and, as far as consciousness is concerned, it doesn't make any difference if that processing is done electronically or mechanically or biologically."

I got tired of saying the same things over and over again, year after year, with no apparent progress. That's why we built the consensus building and tracking system at Canonizer and started the Theories of Consciousness topic. Now we can track who, and how many people, are on each side of issues like this. The most important thing is, if you find an argument that falsifies someone's camp, and get them to jump to a better camp, we want to be able to know that, so we can focus on those arguments. And at least some people have abandoned their camp, due to falsifying data from the Large Hadron Collider, for example. And you can see others jumping camps by examining the history using the "as of" selection on the side bar. This chapter in our video describes a bit about the emerging consensus that continues to progress.

To better illustrate the difference between our views, I started with this picture, modified from an image on the Wikipedia page on Qualia.

John has expressed that he doesn't like this picture. So we've created a new version which I hope is more descriptive of the idea, and not as easy to misinterpret what it represents.

John, let me know if you think it is any better.

The bottom line is, subjective knowledge is represented by subjective qualities like redness and greenness (no dictionary required). While abstract intelligent systems represent the same information, abstracted away from any physical properties or qualities (the word 'red' requires an additional dictionary). All of these systems can be equally intelligent (some of them require more hardware for a dictionary), and they can all tell you the strawberry is red. To me, this proves that John's claim that "consciousness is the way data feels" is wrong, as the abstract word "red" does not feel like anything. John seems to not care about any of these differences, while I think it is critically important, and the very definition of what is and isn't sentient. The strawberry knowledge of A and C are like something, though different. But the knowledge of R is not like anything. For me, not only do you need to know if the type of knowledge is phenomenal or not, you need to know what it is like (as in "what is it like to be a bat"). But John doesn't care about any of these differences.

John claims that evolution "can't see" qualities, but I disagree. I believe computing directly on intrinsic subjective qualities, like redness and greenness, is a far more efficient way to do intelligent things like find and pick the strawberries from all the leaves. C would be more survivable than A (because of the natural difference in importance between redness and greenness), and both of these phenomenal systems would be far more survivable than R, simply because R requires an additional hardware dictionary to know what the word "red" means, while the quality of A and C can do the same kind of situational awareness in a much more natural and intelligent way, since it doesn't require a dictionary to know what the representation means. We're predicting that once we discover which of all our abstract descriptions of stuff in the brain is a description of redness, along with how such qualities can be "computationally bound" into one composite subjective situational awareness experience, this will revolutionize the way computation is done in far more naturally intelligent ways, than using the discrete logic gates we use today, to do the same thing in far less natural and less intelligent ways.

Does any of that help?

John Clark

> To better illustrate the difference between our views, I started with this picture,

> John has expressed that he doesn't like this picture. So we've created a new version which I hope is more descriptive of the idea, and not as easy to misinterpret what it represents.John, let me know if you think it is any better.

> The bottom line is, subjective knowledge is represented by subjective qualities like redness and greenness (no dictionary required).

> While abstract intelligent systems represent the same information, abstracted away from any physical properties or qualities (the word 'red' requires an additional dictionary).

> this proves that John's claim that "consciousness is the way data feels" is wrong, as the abstract word "red" does not feel like anything.

> The strawberry knowledge of A and C are like something, though different.

> John claims that evolution "can't see" qualities, but I disagree.

> I believe computing directly on intrinsic subjective qualities, like redness and greenness is a far more efficient way to do intelligent things like find and pick the strawberries from all the leaves.

> and both of these phenomenal systems would be far more survivable than R, simply because R requires an additional hardware dictionary to know what the word "red" means,

> We're predicting that once we discover which of all our abstract descriptions of stuff in the brain is a description of redness, along with how such qualities can be "computationally bound" into one composite subjective situational awareness experience,

> this will revolutionize the way computation is done in far more naturally intelligent ways, than using the discrete logic gates we use today,

Brent Allsop

Brent wrote:

> To better illustrate the difference between our views, I started with this picture,

I know, you've shown me that exact same cartoon about 6.02×10^23 times. All it demonstrates is that 3 different intelligent entities might (and almost certainly would) have different subjective experiences when they see a red strawberry even though it causes the first two to behave in the same way, the last one apparently could not tell the difference between green and blue. But it's not surprising they have different subjective experiences, if they didn't then they wouldn't be 3 different people there would only be one.

> John has expressed that he doesn't like this picture. So we've created a new version which I hope is more descriptive of the idea, and not as easy to misinterpret what it represents. John, let me know if you think it is any better.

Sorry Brent, I don't like your new cartoon any better, and the problem wasn't that I didn't understand the first cartoon, the first time I saw it I understood what you were trying to say and I didn't disagree, it's just that I didn't think what you were trying to say was very important or very profound.

> The bottom line is, subjective knowledge is represented by subjective qualities like redness and greenness (no dictionary required).

Well at least you've stopped going on and on about the importance of dictionaries.

> While abstract intelligent systems represent the same information, abstracted away from any physical properties or qualities (the word 'red' requires an additional dictionary).

I spoke too soon, we're back with dictionaries.

> this proves that John's claim that "consciousness is the way data feels" is wrong, as the abstract word "red" does not feel like anything.

The word "red" is just a symbol for electromagnetic waves in the 700 nm range, and so is the red qualia; I maintain that in an intelligent mind the bundle of concepts that are represented by either of those two symbols DOES feel like something.

> The strawberry knowledge of A and C are like something, though different.

They are indeed different and that difference could easily be experimentally proven. C observes the world in black-and-white and thus cannot differentiate between a blue grape and a green grape, but A could.

John Clark

> Hi John,
> You claim you understand what these images represent, but you are saying things that, to me at least, indicate you don't understand.

I was going to rebut your post line by line, and perhaps I will, but something you said discouraged me. You said "the 1s and 0s are abstracted away from whatever is representing them. So how could they be like anything?" It occurred to me that exactly the same thing could be said about the string of ASCII characters that made up your entire email. So instead I'm going to ask you just one question and if you answer it successfully then you've won this long argument.

Brent Allsop


John Clark

> So, you need a dictionary to know what any of the intermediate representations or "codes" of the knowledge is representing.

> As far as knowing the nature of fellow human beings, as Descartes pointed out, various theories, such as being a brain in a vat, or solipsism (my subjective knowledge is the only thing that exists) are all theoretical possibilities.

> But as we've discussed multiple times, the left hemisphere of your brain knows, absolutely, that it is not the only hemisphere in existence, since it can be computationally bound with subjective knowledge in the other. So [...]

Brent Allsop

On Mon, May 15, 2023 at 1:24 PM Brent Allsop <brent....@gmail.com> wrote:

> So, you need a dictionary to know what any of the intermediate representations or "codes" of the knowledge is representing.

No you do not! What you need are examples because without examples every definition in a dictionary is circular.

> As far as knowing the nature of fellow human beings, as Descartes pointed out, various theories, such as being a brain in a vat, or solipsism (my subjective knowledge is the only thing that exists) are all theoretical possibilities.

A brain in a vat made out of bone is more than just a theoretical possibility. And I know you don't believe in solipsism because you believe your fellow human beings are conscious, it's just the things that have brains that are hard and dry and not soft and squishy that you think are not conscious regardless of how intelligently they behave.

> But as we've discussed multiple times, the left hemisphere of your brain knows, absolutely, that it is not the only hemisphere in existence, since it can be computationally bound with subjective knowledge in the other. So [...]

So what does that have to do with the price of eggs in China? You still haven't answered my question, why do you believe that your fellow human beings have this certain something or whatever it is you want to call the secret sauce but GPT-4 does not?

John Clark

> I believe my friends are sentient, because they say things like they can experience redness.

Brent Allsop

John Clark

> In an abstract system a word like 'red' is really just a string of 1s and 0s.

> by definition, those 1s and 0s are not anything like what is representing them

Brent Allsop

John Clark

> In an abstract system a word like 'red' is really just a string of 1s and 0s.

And all Shakespeare did is produce a string of ASCII characters.

> by definition, those 1s and 0s are not anything like what is representing them

But the action potential across the cell membrane of a neuron in the brain IS like something?!

> You always change the subject to things like these, which has nothing to do with what I'm trying to describe.

Brent Allsop


Stathis Papaioannou


Brent Allsop


John Clark

> computation directly on qualities is more efficient than systems which represent information which is abstracted away from what is representing it

> And then, there is the simple: "What is fundamental?" question. What is reality, and knowledge of reality made of?

Stathis Papaioannou

Hi Stathis,

Functionality wise, there is no difference. There are lots of possible systems that are isomorphically the same, or functionally the same. That is all the functionalists are talking about. But if you want to know what it is like, you are talking about something different. You are asking: "Are you using voltages, cogs, gears or qualities to achieve that functionality?"

And capability and efficiency is important. Voltages are going to function far better than cogs. And computation directly on qualities is more efficient than systems which represent information which is abstracted away from what is representing it, doing the computational binding with brute force discrete logic gates. Motivational computation done by an actual physical attraction which feels like something is going to be much more robust than some abstractly programmed attraction, that isn't like anything.

And then, there is the simple: "What is fundamental?" question. What is reality, and knowledge of reality made of? What are we? How is our functionality achieved? What is reality made of? How do you get new qualities, to enable greater functionality, and all that.


Brent Allsop


John Clark

> You are saying "even the functioning as a gear is not accessible to the conscious system."

> But redness is a quality of something, like a gear or glutamate

> and those properties are what consciousness is composed of.

> If a single pixel of redness changes to greenness, the entire system must be aware of that change,

Dylan Distasio


William Flynn Wallace


Dylan Distasio


Stathis Papaioannou

This is the key to the issue. You are saying "even the functioning as a gear is not accessible to the conscious system." But redness is a quality of something, like a gear or glutamate or something, and those properties are what consciousness is composed of. If a single pixel of redness changes to greenness, the entire system must be aware of that change, and be able to report on that change. You are saying, or assuming, or just stating, that nothing can do that. But, without that, you can't have composite subjective experiences composed of thousands of pixels of qualities like redness and greenness, where the entire system is aware of every pixel of diverse qualities, all at once.

William Flynn Wallace


Brent Allsop

I agree with you. Everything is processed. No direct route to some sensation. bill w

Brent is arguing that there is some fundamental physical molecule that is "red" with no dictionary/translation required anywhere. I don't know what this means. bill w

Stathis Papaioannou

Brent Allsop



Stathis Papaioannou

Hi Stathis,

I've answered exactly this question so many times. Yet you still show no sign of understanding what I'm trying to say. Maybe this latest picture will help?

All of these systems can tell you the strawberry is red. It's just that if you ask them what their knowledge of the strawberry is like, they will all (but A and B) give you these different answers.

C: My redness is like your redness.
A and B: My redness is like your greenness.
R: My knowledge is intentionally abstracted away from whatever properties are representing it, via transducing dictionaries. So, my abstract knowledge isn't like anything, and unlike A, B, and C, I need a dictionary to know what the word "red" means.

John Clark

> In order to understand, one must first distinguish between reality and knowledge of reality.

> Also, one must understand that: "If you know something, that knowledge must be something."

> We initially naively think redness is an intrinsic quality of the strawberry. IF that were the case, a strawberry wouldn't need a dictionary

> It is these LEGO block voxels that have the intrinsic subjective qualities, not the strawberry.

> Your subjective CPU is computing directly on these computationally bound qualities.

Brent Allsop


Stathis Papaioannou

You are changing the subject from what matters. I am only talking about the fact of the matter that is the quality of the knowledge. Whether the person is lying about the quality of its knowledge, or not, or whatever, has nothing to do with the quality itself, and what it is like. Let's imagine a "shut in" person who can't interact with the outside world, but is still experiencing or dreaming of a subjective redness experience. The quality of that experience is the only thing I am talking about.

On Thu, May 18, 2023 at 3:57 AM Stathis Papaioannou <stat...@gmail.com> wrote:

> On Thu, 18 May 2023 at 16:49, Brent Allsop <brent....@gmail.com> wrote:

>> Hi Stathis,

>> I've answered exactly this question so many times. Yet you still show no sign of understanding what I'm trying to say. Maybe this latest picture will help?

>> All of these systems can tell you the strawberry is red. It's just that if you ask them what their knowledge of the strawberry is like, they will all (but A and B) give you these different answers.

>> C: My redness is like your redness.
>> A and B: My redness is like your greenness.
>> R: My knowledge is intentionally abstracted away from whatever properties are representing it, via transducing dictionaries. So, my abstract knowledge isn't like anything, and unlike A, B, and C, I need a dictionary to know what the word "red" means.

> But R could say “my redness is like your redness” or “my redness is like your greenness”. What would you make of that? And if you wouldn’t believe it, why not?

> Stathis Papaioannou


Brent Allsop

On Fri, 19 May 2023 at 09:35, Brent Allsop <brent....@gmail.com> wrote:

> You are changing the subject from what matters. I am only talking about the fact of the matter that is the quality of the knowledge. Whether the person is lying about the quality of its knowledge, or not, or whatever, has nothing to do with the quality itself, and what it is like. Let's imagine a "shut in" person who can't interact with the outside world, but is still experiencing or dreaming of a subjective redness experience. The quality of that experience is the only thing I am talking about.

No, I mean that the robot could say the same things as the humans AND the robot could be accurately describing its experiences. Why do you think that is impossible?


Lawrence Crowell

On Thu, May 18, 2023 at 5:53 PM Stathis Papaioannou <stat...@gmail.com> wrote:

> On Fri, 19 May 2023 at 09:35, Brent Allsop <brent....@gmail.com> wrote:

>> You are changing the subject from what matters. I am only talking about the fact of the matter that is the quality of the knowledge. Whether the person is lying about the quality of its knowledge, or not, or whatever, has nothing to do with the quality itself, and what it is like. Let's imagine a "shut in" person who can't interact with the outside world, but is still experiencing or dreaming of a subjective redness experience. The quality of that experience is the only thing I am talking about.

> No, I mean that the robot could say the same things as the humans AND the robot could be accurately describing its experiences. Why do you think that is impossible?

You are just proving you have no idea what this image is trying to say. It is a fact that the quality of A's knowledge is different from the quality of C's knowledge. To understand the nature of R's knowledge, as being an abstract word like 'red', imagine a quality you have never experienced before; let's call it grue. So, you can talk about grue knowledge, you may even know what has a grueness quality, and be able to tell people that thing is grue, but you have no idea what grue is like. The same is true for the robot, and the usage of the word 'red'. It can talk about red, and tell you the strawberry is 'red', but it has no idea what redness is like. Its knowledge of red is not anything like A's or C's knowledge, the same as your knowledge of grue is not like anything. Those are just the facts portrayed in this image. You just seem to be trying to distract people away from those facts, so you can use your sleight of hand to talk about silly substitutions, while completely ignoring what really matters, the qualities, and the fact that if you change them (whatever they are), they are no longer the same.


Brent Allsop


Stathis Papaioannou


Brent Allsop


Stathis Papaioannou

I'm absolutely certain, the same as I am absolutely certain that you can't know what the grue quality is like.

And the word 'red' is just a string of 1s and 0s, and it doesn't matter what is representing them, as long as you have a transducing system telling you which property or quality is the 1, and which is the 0. So, by definition, I know that the word "red" isn't like anything. Or rather, it is like anything, from redness, to greenness, to a punch in paper, to a magnetic polarization, to a voltage on a line... and regardless of what properties you are using, the transducing system gets the "1" from all of those, by design, in a way that has nothing to do with whatever properties are representing that 1.
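
A minimal Python sketch of the point about transducing systems may help here. The three encodings (voltages, punched positions, magnetic polarities), the 2.5 V threshold, and every function name are hypothetical, chosen only for illustration: each decoder plays the role of a "dictionary" that recovers the same bits, and hence the same word, from physically different properties.

```python
# Hypothetical illustration: three physical "substrates" carry the same bits.
# Each decode_* function stands in for a transducing dictionary.

def text_to_bits(text):
    """Encode text as a flat list of bits, 8 bits per ASCII character."""
    return [int(b) for ch in text for b in format(ord(ch), "08b")]

def bits_to_text(bits):
    """Interpret a flat list of bits as 8-bit ASCII characters."""
    chars = []
    for i in range(0, len(bits), 8):
        byte = bits[i:i + 8]
        chars.append(chr(int("".join(map(str, byte)), 2)))
    return "".join(chars)

def decode_voltage(levels, threshold=2.5):
    """Voltages above the (assumed) threshold read as 1, otherwise 0."""
    return [1 if v > threshold else 0 for v in levels]

def decode_punch_card(holes):
    """A punched position 'O' reads as 1, an unpunched '.' as 0."""
    return [1 if h == "O" else 0 for h in holes]

def decode_magnetic(polarities):
    """Polarity 'N' reads as 1, 'S' as 0."""
    return [1 if p == "N" else 0 for p in polarities]

# The same word stored on three different physical properties.
word_bits = text_to_bits("red")
as_voltage = [5.0 if b else 0.0 for b in word_bits]
as_punch = "".join("O" if b else "." for b in word_bits)
as_magnetic = ["N" if b else "S" for b in word_bits]

# Every decoder yields the same abstract word, whatever represented the 1s.
decoded = {
    bits_to_text(decode_voltage(as_voltage)),
    bits_to_text(decode_punch_card(as_punch)),
    bits_to_text(decode_magnetic(as_magnetic)),
}
print(decoded)
```

Nothing in the decoded word depends on which property carried it, which is the sense in which the abstract word is independent of its representation. Whether that independence has any bearing on qualia is the question under dispute in this thread, and the sketch does not settle it.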


Dylan Distasio


Lawrence Crowell


Brent Allsop


John Clark

> Wow. So you are saying something like 11001 has a redness quality and 11000 has a greenness quality??? To me that passes that laughable test less well than some function like square root is redness and cube root is greenness.

"When I see equations, I see the letters in colors. I don’t know why. I see vague pictures of Bessel functions with light-tan j’s, slightly violet-bluish n’s, and dark brown x’s flying around. And I wonder what the hell it must look like to the students".

John K Clark

Stathis Papaioannou

Wow. So you are saying something like 11001 has a redness quality and 11000 has a greenness quality??? To me that passes that laughable test less well than some function like square root is redness and cube root is greenness. So even a pattern of ones and zeros on your computer screen can have a redness quality??! That doesn't seem completely laughable to you?


Brent Allsop


Stathis Papaioannou

OK, whew.

So what type of process would this be?


Brent Allsop

If a person asked: “I want to know what type of vehicle you took to get to London?”

And if the person answered: “I took one that got me there.” Would you consider that an answer?


Stathis Papaioannou


John Clark

> If a person asked: “I want to know what type of vehicle you took to get to London?”

> And if the person answered: “I took one that got me there.” Would you consider that an answer?

Brent Allsop

It's one hell of a lot better answer than "the fundamental reason humans can experience qualia but computers cannot is because humans have a brain that is soft and squishy but a computer's brain is hard and dry".

Brent Allsop

On Sat, 20 May 2023 at 12:18, Brent Allsop <brent....@gmail.com> wrote:

> If a person asked: “I want to know what type of vehicle you took to get to London?”

> And if the person answered: “I took one that got me there.” Would you consider that an answer?

No, because the question already assumes that I got to London in a vehicle, so the answer is not giving any further information.

Stathis Papaioannou

On Fri, May 19, 2023 at 9:07 PM Stathis Papaioannou <stat...@gmail.com> wrote:

> On Sat, 20 May 2023 at 12:18, Brent Allsop <brent....@gmail.com> wrote:

>> If a person asked: “I want to know what type of vehicle you took to get to London?”

>> And if the person answered: “I took one that got me there.” Would you consider that an answer?

> No, because the question already assumes that I got to London in a vehicle, so the answer is not giving any further information.

OK, good.

So then can you understand the problem I have with this answer, where your answer provides nothing other than what my question is already assuming one can do?

Brent Allsop

Stathis Papaioannou

Brent Allsop


John Clark

> Humans can represent knowledge abstractly, and computers can represent knowledge directly on physical properties or qualities the same as humans. [...] But until that squishy brain directly experiences grueness, they can't know what that grueness property is like. It doesn't have anything to do with being a computer or a human.

> I was describing how a squishy brain can have abstract knowledge earlier when I was talking about a new grue quality which someone has never experienced before.

> And a conscious human that suffers from achromatopsia (experiences everything in black and white, so has never experienced redness).

John Clark

> I'm not asking about the type of light it reflects. I'm asking, if it is reflecting light like that, what quality or property causes it to reflect that type of light?

Stathis Papaioannou

In a way, I'm not saying it is impossible, I'm just talking about something different.

You are talking about "behaving like a human". I am talking about the quality, itself, that results in the person (honestly or dishonestly) saying something like: "My redness is like your greenness."

You always talk about "genuine experiences" but never say what those genuine experiences are, other than the causally downstream behavior they cause. I am asking what is a "genuine experience" which causes that behavior?

I'm not asking about the type of light it reflects. I'm asking, if it is reflecting light like that, what quality or property causes it to reflect that type of light? (the light being very different from the thing reflecting that light.)

Brent Allsop

On Sat, May 20, 2023 at 11:20 AM Brent Allsop <brent....@gmail.com> wrote:

> Humans can represent knowledge abstractly, and computers can represent knowledge directly on physical properties or qualities the same as humans. [...] But until that squishy brain directly experiences grueness, they can't know what that grueness property is like. It doesn't have anything to do with being a computer or a human.

I have no problem with that, but if intelligent humans and intelligent computers represent knowledge in the same physical way then whatever it is that generates qualia in humans must also do so in computers.

So what are we arguing about?

> I was describing how a squishy brain can have abstract knowledge earlier when I was talking about a new grue quality which someone has never experienced before.

If neither a human nor a computer has ever experienced "grue" before then neither one of them will know what it's like to experience it.

> And a conscious human that suffers from achromatopsia (experiences everything in black and white, so has never experienced redness).

It would be easy to test for achromatopsia: if they can't differentiate between red photons and blue photons then they have it, but there is no test to determine if they are experiencing the red qualia or the blue qualia. And that's why the study of consciousness and qualia has never made any progress, and it never will.

John Clark

> We are arguing about whether that is possible as you are predicting, or not.

> Bottom line: I am a faithful curious scientist, and I want to know, and be able to demonstrate to all, what color things truly are, not just the colors they seem to be.

Brent Allsop


Brent Allsop


John Clark

> John, what is your consciousness made of?

Stathis Papaioannou

OK, two different assumptions:

1. Information processing gives rise to redness.
2. Information processing is done on redness.

Would you agree that whatever "information processing" gives rise to redness, it is a physical fact that this is the case? As in, physical reality has an interface, such that if you perform the correct incantation of "information processing", a redness experience "arises" from that.

Also, you must agree that consciousness can be composed of one incantation for redness and a different incantation for greenness, and that both redness and greenness can be computationally bound into one unified subjective composite experience which can represent things like subjective composite knowledge of strawberries?

So, now. Do the neural substitution on that, and if you can do that, it will prove that it can't be that incantation, either.

Bummer. I hate all the zombie contradictions and impossibly hard problems. Maybe we should try a different assumption and see if all these impossibly hard problems ALL GO AWAY. Is there a possibility that a different assumption might enable us to find out the true colorness qualities of things, not just the qualities things seem to be?

On Sat, May 20, 2023 at 12:20 PM Stathis Papaioannou <stat...@gmail.com> wrote:

> On Sun, 21 May 2023 at 03:24, Brent Allsop <brent....@gmail.com> wrote:

>> In a way, I'm not saying it is impossible, I'm just talking about something different.

>> You are talking about "behaving like a human". I am talking about the quality, itself, that results in the person (honestly or dishonestly) saying something like: "My redness is like your greenness."

>> You always talk about "genuine experiences" but never say what those genuine experiences are, other than the causally downstream behavior they cause. I am asking what is a "genuine experience" which causes that behavior?

>> I'm not asking about the type of light it reflects. I'm asking, if it is reflecting light like that, what quality or property causes it to reflect that type of light? (the light being very different from the thing reflecting that light.)

> I am pretty sure I understand what you are talking about and am talking about the same thing: the subjective aspect of seeing red. I propose that any entity that is able to behave in the way humans do when they look at the world may also have similar subjectivity, because it is the type of information processing that results in this behaviour that gives rise to the subjectivity, not the subjectivity that gives rise to the behaviour.

> Stathis Papaioannou


Brent Allsop


Brent Allsop

On Sun, 21 May 2023 at 04:47, Brent Allsop <brent....@gmail.com> wrote:

> Also, you must agree that consciousness can be composed of one incantation for redness and a different incantation for greenness, and that both redness and greenness can be computationally bound into one unified subjective composite experience which can represent things like subjective composite knowledge of strawberries?

I don’t agree that the incantations are unique or static. The same hardware may give rise to redness on one occasion, greenness on another.

I don’t see a problem. It is the way the world seems to work.

Brent Allsop


John Clark

> What is data made of?

Brent Allsop

On Sat, May 20, 2023 at 4:15 PM Brent Allsop <brent....@gmail.com> wrote:

> What is data made of?

As I've mentioned before, logically there can only be 2 possibilities, a chain of iterative questions either goes on forever or ends in a brute fact.

John Clark

>> As I've mentioned before, logically there can only be 2 possibilities, a chain of iterative questions either goes on forever or ends in a brute fact.

> You mean a brute demonstrable physical fact like +5 volts, or a redness quality?

Brent Allsop


Stathis Papaioannou

Hi Stathis,

On Sat, May 20, 2023 at 1:13 PM Stathis Papaioannou <stat...@gmail.com> wrote:

> On Sun, 21 May 2023 at 04:47, Brent Allsop <brent....@gmail.com> wrote:

>> Also, you must agree that consciousness can be composed of one incantation for redness and a different incantation for greenness, and that both redness and greenness can be computationally bound into one unified subjective composite experience which can represent things like subjective composite knowledge of strawberries?

> I don’t agree that the incantations are unique or static. The same hardware may give rise to redness on one occasion, greenness on another.

Right, we both agree, and my question assumes something gives rise to redness. My question is what? At best, you seem to be saying it is turtles all the way down.

If not, then what is responsible for redness, and how is it different from greenness? How would I discover and demonstrate grueness? How would I objectively observe redness, and how would I objectively know it isn't greenness?

> I don’t see a problem. It is the way the world seems to work.

You don't have a problem with there being an eternal "impossibly hard" problem? You don't ever want to see the headlines say: "Consciousness has finally been figured out" reporting that we now know the colorness qualities of at least something...?

Is there any other argument that makes you think we can't assume "2. Information processing is done on redness", than the neural substitution argument that incorrectly assumes that the machine being substituted can achieve computational binding of intrinsic qualities like redness and greenness (otherwise the neuro substitution would fail)?

Brent Allsop

On Sun, 21 May 2023 at 05:50, Brent Allsop <brent....@gmail.com> wrote:

> If not, then what is responsible for redness, and how is it different from greenness? How would I discover and demonstrate grueness? How would I objectively observe redness, and how would I objectively know it isn't greenness?

You would display a change in behaviour if your greenness changed to redness: you would say “all the green things now look red”. Or you would say “I see a new colour that I have never seen before, call it grue”. This is an objective expression of your subjective experience.

>> I don’t see a problem. It is the way the world seems to work.

> You don't have a problem with there being an eternal "impossibly hard" problem? You don't ever want to see the headlines say: "Consciousness has finally been figured out" reporting that we now know the colorness qualities of at least something...?

The Hard Problem is why we should have these experiences at all rather than just being dead inside, as you assume that robots are. No possible scientific fact can ever answer that question. If you are right and redness is an intrinsic property of a substance such as glutamate, there is still the question of why glutamate produces redness rather than greenness and why it produces any qualia at all.

> Is there any other argument that makes you think we can't assume "2. Information processing is done on redness", than the neural substitution argument that incorrectly assumes that the machine being substituted can achieve computational binding of intrinsic qualities like redness and greenness (otherwise the neuro substitution would fail)?

I don’t see the binding problem as a real problem. Computers functionally bind different types of data using various techniques such as noting that they are synchronised in time or by being trained to associate different modalities, like a self-driving car that uses cameras, ultrasound and radar.
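
As a rough illustration of functional binding by time synchronisation, here is a minimal Python sketch. The sensor names, the readings, the 50 ms window and the `bind_by_time` helper are all assumptions made up for the example; it is not a description of how any real self-driving system fuses its sensors.

```python
from dataclasses import dataclass

@dataclass
class Reading:
    sensor: str       # hypothetical sensor name
    timestamp: float  # seconds since start
    value: str        # whatever the sensor reported

def bind_by_time(readings, window=0.05):
    """Group readings whose timestamps fall within `window` seconds
    of the first reading in the current group."""
    groups = []
    for r in sorted(readings, key=lambda r: r.timestamp):
        if groups and r.timestamp - groups[-1][0].timestamp <= window:
            groups[-1].append(r)   # close enough in time: same event
        else:
            groups.append([r])     # too late: start a new event
    return groups

# Made-up readings from three modalities.
readings = [
    Reading("camera", 0.00, "red object ahead"),
    Reading("radar", 0.01, "object at 12 m"),
    Reading("ultrasound", 0.02, "obstacle near"),
    Reading("camera", 1.00, "clear road"),
    Reading("radar", 1.01, "no return"),
]

events = bind_by_time(readings)
for event in events:
    print([f"{r.sensor}: {r.value}" for r in event])
```

Each group is treated as one composite "event" purely because its members arrived close together in time. This is binding in the functional sense only; whether it amounts to the kind of binding Brent is asking about is exactly what is contested here.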

Stathis Papaioannou

On Sat, May 20, 2023 at 5:42 PM Stathis Papaioannou <stat...@gmail.com> wrote:On Sun, 21 May 2023 at 05:50, Brent Allsop <brent....@gmail.com> wrote:If not, then what is responsible for redness, and how is it different from greenness? How would I discover and demonstrate grueness? How would I objectively observe redness, and how would I objectively know it isn't greenness?You would display a change in behaviour if your greenness changed to redness: you would say “all the green things now look red”. Or you would say “I see a new colour that I have never seen before, call it grue”. This is an objective expression of your subjective experience.Right, and you could objectively isolate a description of whatever it is that is the necessary and sufficient initial cause of the system reporting those things, giving you your dictionary. And the set of redness and the set of greenness must be a disjoint set. Perception of such different sets, through our senses, would be a weak form of effing the ineffable, and as descartes pointed out, you could doubt that. So we need some way to directly experience them both, at the same time, in a way that can't be doubted (not perceived, causally downstream, but directly apprehended) . As in "I think with redness, therefore I know what redness is like."And when you do a neuro substitution of redness (or whatever thing or function is responsible for what you isolated), with something different, like greenness, the system must be able to report that greenness isn't the same as redness. So at that point, the neuro substitution would fail because anything but redness would be different, by definition.

I don’s see a problem. It is the way the world seems to work.You don't have a problem with there being an eternal "impossibly hard" problem? You don't ever want to see the headlines say: "Consciousness has finally been figured out" reporting that we now know the colorness qualities of at least something...?The Hard Problem is why we should have these experiences at all rather than just being dead inside, as you assume that robots are. No possible scientific fact can ever answer that question. If you are right and redness is an intrinsic property of a substance such as glutamate, there is still the question of why glutamate produces redness rather than greenness and why it produces any qualia at all.Yes, I agree. We don't know why Force equals mass time acceleration. We just know that it does. And knowing only that allows us to dance in the heavens. Just like reliably predicting that glutamate (or some function, or whatever) has a redness quality, and that if we can discover a bunch of new things for which it is a fact that they have many other new qualities, we will be able to amplify our intelligence with not only thousands of times more pixels, but thousands of times more qualities for each pixel, greatly increasing our subjective "situational awareness" intelligence.Is there any other argument that makes you think we can't assume:2. Information processing is done on redness.than the neural substitution argument that incorrectly assumes that the machine being substituted can achieve computational binding of intrinsic qualities like redness and greenness (otherwise the neuro substitution would fail)?I don’t see the binding problem as a real problem. 
Computers functionally bind different types of data using various techniques such as noting that they are synchronised in time or by being trained to associate different modalities, like a self-driving car that uses cameras, ultrasound and radar.

I go over the difference in the "Computational Binding" chapter of the video. There is only "binding" between at most one pixel at a time in a computer CPU. And lots of intermediate results being built up, after lots of individual binding comparisons, is very different from a system that can be aware of the intrinsic qualities of LOTS of pixels at the same time, in a way so that if any one of the intrinsic properties changes, the entire system must be able to know about and report any single pixel change from all the rest.

With lots of individual sequences of comparisons of single pixels, any pixel can be represented with anything you want, as long as there is a dictionary mapping it (as the sleight of hand performed in a substitution, with its changing mapping dictionaries, does) to the abstract meaning, so it will eventually end up with the same abstract causally downstream uttering: "it is red". Again, computing directly on intrinsic properties like this, where the entire system can be directly aware of all the intrinsic properties, in parallel, at one time is very different.

As you point out, nothing like that is possible in your substitution, because every time I point out a possible change that could be redness, you insist that we can also swap in a dictionary mapping system that maps the change back to the same meaning, preventing that change from being anything that is computationally bound, so the entire system can know when redness has changed to greenness, as subjective composite experience requires.

Stuart LaForge

What you write is rather standard fare. I think sentience or even bio-sense has its basis in some means by which white noise processes, such as statistical mechanics and quantum mechanics, are adjusted to have pink noise. This means a fluctuation at one time can influence a fluctuation at a later time. This is then the start of molecular information storage and memory. I am not certain whether molecular information processing of this sort is the basis for bio-sense or whether the basis is the means by which white noise is filtered or transitioned into pink noise.

LC

On Saturday, May 13, 2023 at 4:44:22 PM UTC-5 johnk...@gmail.com wrote:

On Sat, May 13, 2023 at 5:10 PM Lawrence Crowell <goldenfield...@gmail.com> wrote:

> This does make me ponder what is the relationship between consciousness and intelligence. I suspect GPT-4 and other AI systems may be intelligent, but they are so without underlying consciousness. Our intelligence is in a sense built upon a pre-existing substratum of sentience.

If the two things can be separated and sentience is not the inevitable byproduct of intelligence then how and why did Natural Selection bother to produce consciousness when you know with absolute certainty it did so at least once and probably many billions of times? After all, except by way of intelligent behavior Evolution can't directly observe consciousness any better than we can, and natural selection can't select for something it can't see. I strongly suspect that artificial sentience is easy, but artificial intelligence is hard.

> My dogs are sentient, but when it comes to numerical intelligence they have none, and indeed very poor spatial sense.

Your dogs may not know much mathematics and they probably wouldn't do very well on an IQ test, but their behavior is not random, they show some degree of intelligence.

> we subjectively experience as consciousness

We?
I certainly subjectively experience consciousness and I assume you do too but only because I have observed intelligent behavior on your part. I have no choice, I have to accept as an axiom of existence that consciousness is the inevitable byproduct of intelligence because, even though I can't disprove it, I simply could not function if I really believed in solipsism.

> is built on a deeper substrate of biological activity.

But again, if it wasn't intelligent behavior then what on earth could the Evolutionary pressure have been that led to the development of consciousness?

John K Clark

On Friday, May 12, 2023 at 4:01:04 AM UTC-5 johnk...@gmail.com wrote:

On Thu, May 11, 2023 at 6:33 PM Lawrence Crowell <goldenfield...@gmail.com> wrote:

> I have found 2 mistakes it [GPT-4] has made. It has caught me on a few errors as well.

To me that sounds like very impressive performance. If you were working with a human colleague who did the same thing would you hesitate in saying he was exhibiting some degree of intelligence?

> It is a very good emulator of intelligence.

What's the difference between being intelligent and emulating intelligence? It must be more than the degree of squishiness of the brain.

> It also is proving to be a decent first check on my work. It might be said it passes some criterion for Turing tests, though I have often thought this idea was old fashioned in a way.

Well, it is old I'll grant you that. Turing didn't invent the "Turing Test", he just pointed out something that was ancient, that we use every day, and was so accepted and ubiquitous that nobody had given it much thought before. I'm sure you, just like everybody else, have at one time or another in your life encountered people who you consider to be brilliant and people who you consider to be stupid. When making your determination, if you did not use the Turing Test (which is basically just observing behavior and judging if it's intelligent), what method did you use?
How in the world can you judge if something is intelligent or not except by observing if it does anything intelligent?

John K Clark
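The white-noise versus correlated-noise remark at the top of this message ("a fluctuation at one time can influence a fluctuation at a later time") can be illustrated numerically. The sketch below is only an illustration under stated assumptions: it uses a first-order autoregressive filter as a simple stand-in for temporally correlated noise, which is not true 1/f pink noise, and the names `lag1_autocorrelation`, `alpha`, and the sample count are hypothetical choices rather than anything from the thread.

```python
import random

def lag1_autocorrelation(xs):
    """Correlation between each sample and the next one."""
    n = len(xs)
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs)
    cov = sum((xs[i] - mean) * (xs[i + 1] - mean) for i in range(n - 1))
    return cov / var

rng = random.Random(0)  # fixed seed so the run is repeatable

# White noise: every sample is drawn independently of the others.
white = [rng.gauss(0.0, 1.0) for _ in range(5000)]

# Correlated noise (assumption: AR(1) filter, not genuine 1/f noise).
# Each sample keeps a fraction alpha of the previous one, so an
# earlier fluctuation influences later ones.
alpha = 0.9
correlated = []
previous = 0.0
for w in white:
    previous = alpha * previous + w
    correlated.append(previous)

white_r = lag1_autocorrelation(white)
correlated_r = lag1_autocorrelation(correlated)
print(f"white lag-1 autocorrelation:      {white_r:.3f}")
print(f"correlated lag-1 autocorrelation: {correlated_r:.3f}")
```

The white sequence should show a lag-1 autocorrelation near zero, while the filtered sequence shows a clearly positive one, which is the sense in which the filtered process carries a rudimentary "memory".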

John Clark

> OK, you need contrast. So you can distinguish +5 volts from 0 volts. So you set up a transducing system that says 0 volts is 1 and +5 volts is 0. And that is a "sort of thing from which you can still make a perfectly usable data processing machine" but only if you have a transducing system to tell you whether it is the +5 volts, or the 0 volts, that is the 1.

> Or is it the other way around, and the 1 is more fundamental than the voltage?

John Clark

> when you do a neuro substitution of redness (or whatever thing or function is responsible for what you isolated), with something different, like greenness, the system must be able to report that greenness isn't the same as redness.

> So at that point, the neuro substitution would fail because anything but redness would be different, by definition.

Brent Allsop

On Sun, 21 May 2023 at 12:34, Brent Allsop <brent....@gmail.com> wrote:

On Sat, May 20, 2023 at 5:42 PM Stathis Papaioannou <stat...@gmail.com> wrote:

On Sun, 21 May 2023 at 05:50, Brent Allsop <brent....@gmail.com> wrote:

If not, then what is responsible for redness, and how is it different from greenness? How would I discover and demonstrate grueness? How would I objectively observe redness, and how would I objectively know it isn't greenness?

You would display a change in behaviour if your greenness changed to redness: you would say “all the green things now look red”. Or you would say “I see a new colour that I have never seen before, call it grue”. This is an objective expression of your subjective experience.

Right, and you could objectively isolate a description of whatever it is that is the necessary and sufficient initial cause of the system reporting those things, giving you your dictionary. And the set of redness and the set of greenness must be disjoint sets. Perception of such different sets, through our senses, would be a weak form of effing the ineffable, and as Descartes pointed out, you could doubt that. So we need some way to directly experience them both, at the same time, in a way that can't be doubted (not perceived, causally downstream, but directly apprehended). As in "I think with redness, therefore I know what redness is like."

And when you do a neuro substitution of redness (or whatever thing or function is responsible for what you isolated), with something different, like greenness, the system must be able to report that greenness isn't the same as redness. So at that point, the neuro substitution would fail because anything but redness would be different, by definition.

The system would not report that redness was different if the substitution left the output of the system the same, by definition.

This is the point I keep making, the whole basis of the functionalist argument.

We work out what glutamate does in the neuron, replace it with something else that does the same thing, and the redness (or whatever) will necessarily be preserved.

“What glutamate does in the neuron” means in what way it causes physical changes.

You seem to have the idea that “redness” itself can somehow cause physical changes, and that is the problem.

Qualia cannot do anything themselves, it is only physical forces, matter and energy that can do things.

In the case of glutamate what it does in the brain is very limited: it attaches to glutamate receptor proteins and changes their shape slightly.
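The substitution step described above ("replace it with something else that does the same thing") can be sketched in code. This is a toy illustration only: `glutamate_receptor`, `substitute_receptor`, the 0.5 threshold, and the `report` function are hypothetical stand-ins, not a model of real neurochemistry. It shows what the functionalist premise claims about outputs; it does not settle anything about qualia.

```python
from typing import Callable, List

def glutamate_receptor(concentration: float) -> bool:
    """Hypothetical original component: opens at or above a threshold."""
    return concentration >= 0.5

def substitute_receptor(concentration: float) -> bool:
    """Hypothetical replacement: different internals, same input-output map."""
    return not (concentration < 0.5)

def report(receptor: Callable[[float], bool], inputs: List[float]) -> List[str]:
    """The system's observable output depends only on what the receptor returns."""
    return ["red" if receptor(c) else "not red" for c in inputs]

inputs = [0.0, 0.3, 0.5, 0.9]
original_output = report(glutamate_receptor, inputs)
substituted_output = report(substitute_receptor, inputs)

# Same physical effect on the rest of the system, so the same reports.
print(original_output == substituted_output)
```

Under this premise the downstream system has no way to tell which component it is running on, which is why the reply above says the output, and hence the report, stays the same by definition.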

Brent Allsop

On Sat, May 20, 2023 at 10:34 PM Brent Allsop <brent....@gmail.com> wrote:

> when you do a neuro substitution of redness (or whatever thing or function is responsible for what you isolated), with something different, like greenness, the system must be able to report that greenness isn't the same as redness.

Yes.

> So at that point, the neuro substitution would fail because anything but redness would be different, by definition.

Obviously if a neuro upload could no longer tell the difference between red and green photons then its behavior would change, but that could be easily rectified because these days new color camera replacement parts are cheap. The huge advantage that digital has over analog is that all digital needs to do is notice that there is a difference between 2 things, it doesn't need to concern itself with the nature of that difference or how large it is as long as the difference is large enough to detect.
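The point about digital only needing to notice a difference can be sketched as a simple threshold comparison. This is a minimal illustration under assumptions: the 2.5 V threshold, the sample voltages, and the name `to_bit` are hypothetical choices, not taken from the thread.

```python
def to_bit(voltage: float, threshold: float = 2.5) -> int:
    """Classify a voltage as 1 or 0; only which side of the threshold matters."""
    return 1 if voltage > threshold else 0

# Noisy readings that hover around the two nominal levels, 0 V and 5 V.
noisy_low = [0.0, 0.4, -0.3, 0.9]
noisy_high = [5.0, 4.6, 5.2, 4.1]

low_bits = [to_bit(v) for v in noisy_low]
high_bits = [to_bit(v) for v in noisy_high]

print(low_bits)   # every reading near 0 V lands on the same side
print(high_bits)  # every reading near 5 V lands on the other side
```

The exact size of each deviation is discarded; as long as the two levels stay on opposite sides of the threshold, the digital reading is unaffected.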

Brent Allsop

On Sat, May 20, 2023 at 6:28 PM Brent Allsop <brent....@gmail.com> wrote:

> OK, you need contrast. So you can distinguish +5 volts from 0 volts. So you set up a transducing system that says 0 volts is 1 and +5 volts is 0. And that is a "sort of thing from which you can still make a perfectly usable data processing machine" but only if you have a transducing system to tell you whether it is the +5 volts, or the 0 volts, that is the 1.

A "Transducing System" sounds very grand but the simplest one able to tell the difference between 5 volts and 0 is just an electromagnet, and they were invented two centuries ago.

> Or is it the other way around, and the 1 is more fundamental than the voltage?

The "1" describes the voltage, so which is more fundamental depends on if adjectives are more fundamental than nouns.

John Clark

>>> Or is it the other way around, and the 1 is more fundamental than the voltage?

>> The "1" describes the voltage, so which is more fundamental depends on if adjectives are more fundamental than nouns.

> This has nothing to do with "adjectives" or "nouns".

> You can experimentally demonstrate +5 volts. A "1" can only exist if you have a relay telling you +5 volts represents a 1 or a 0. Otherwise, you don't know what is a 1, and what isn't.
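The claim that a "1" only exists relative to a chosen mapping can be made concrete with two opposite conventions applied to the same voltages. This is a sketch under assumptions: the dictionary names `ACTIVE_HIGH` and `ACTIVE_LOW` and the `decode` helper are hypothetical labels for illustration.

```python
# Two conventions for reading the same physical signal.
ACTIVE_HIGH = {5.0: 1, 0.0: 0}  # +5 V represents 1
ACTIVE_LOW = {5.0: 0, 0.0: 1}   # 0 V represents 1

def decode(voltages, convention):
    """Translate measured voltages into bits using the given convention."""
    return [convention[v] for v in voltages]

signal = [5.0, 0.0, 0.0, 5.0]

bits_high = decode(signal, ACTIVE_HIGH)
bits_low = decode(signal, ACTIVE_LOW)

print(bits_high)
print(bits_low)
```

The voltages are identical in both runs; only the dictionary changes, and with it which readings count as 1. Either convention supports a consistent machine, provided every component uses the same one.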

Stathis Papaioannou