Parallel distributed hp solution transfer with FE_nothing

Kaushik Das

Bruno Turcksin

Kaushik Das

if (cell->is_locally_owned() && cell->active_fe_index() != 0)
  {
    if (counter > ((dim == 2) ? 4 : 8))
      cell->set_coarsen_flag();
    else
      cell->set_refine_flag();
  }

--

The deal.II project is located at http://www.dealii.org/

For mailing list/forum options, see https://groups.google.com/d/forum/dealii?hl=en

---

You received this message because you are subscribed to a topic in the Google Groups "deal.II User Group" group.

To unsubscribe from this topic, visit https://groups.google.com/d/topic/dealii/ssEva6C2PU8/unsubscribe.

To unsubscribe from this group and all its topics, send an email to dealii+un...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/dealii/609b0457-ba90-4aa2-a7d1-5b798d5349ebn%40googlegroups.com.

Marc Fehling

Marc

Kaushik Das

/*
 * -----------
 * | 0 | 0 |
 * -----------
 * | 1 | 1 |    0 - FEQ, 1 - FE_Nothing
 * -----------
 */

/* Set refine flags:
 * -----------
 * | R | R |    FEQ
 * -----------
 * |   |   |    FE_Nothing
 * -----------
 */

An error occurred in line <1167> of file </build/deal.ii-vFp8uU/deal.ii-9.2.0/include/deal.II/lac/vector.h> in function

Number& dealii::Vector<Number>::operator()(dealii::Vector<Number>::size_type) [with Number = double; dealii::Vector<Number>::size_type = unsigned int]

The violated condition was:

static_cast<typename ::dealii::internal::argument_type<void( typename std::common_type<decltype(i), decltype(size())>::type)>:: type>(i) < static_cast<typename ::dealii::internal::argument_type<void( typename std::common_type<decltype(i), decltype(size())>::type)>:: type>(size())

Additional information:

Index 0 is not in the half-open range [0,0). In the current case, this half-open range is in fact empty, suggesting that you are accessing an element of an empty collection such as a vector that has not been set to the correct size.

-----------

#0 /lib/x86_64-linux-gnu/libdeal.ii.g.so.9.2.0: dealii::Vector<double>::operator()(unsigned int)

#1 /lib/x86_64-linux-gnu/libdeal.ii.g.so.9.2.0:

#2 /lib/x86_64-linux-gnu/libdeal.ii.g.so.9.2.0: dealii::parallel::distributed::SolutionTransfer<2, dealii::PETScWrappers::MPI::Vector, dealii::hp::DoFHandler<2, 2> >::pack_callback(dealii::TriaIterator<dealii::CellAccessor<2, 2> > const&, dealii::Triangulation<2, 2>::CellStatus)

#3 /lib/x86_64-linux-gnu/libdeal.ii.g.so.9.2.0: dealii::parallel::distributed::SolutionTransfer<2, dealii::PETScWrappers::MPI::Vector, dealii::hp::DoFHandler<2, 2> >::register_data_attach()::{lambda(dealii::TriaIterator<dealii::CellAccessor<2, 2> > const&, dealii::Triangulation<2, 2>::CellStatus)#1}::operator()(dealii::TriaIterator<dealii::CellAccessor<2, 2> > const&, dealii::Triangulation<2, 2>::CellStatus) const

#4 /lib/x86_64-linux-gnu/libdeal.ii.g.so.9.2.0: std::_Function_handler<std::vector<char, std::allocator<char> > (dealii::TriaIterator<dealii::CellAccessor<2, 2> > const&, dealii::Triangulation<2, 2>::CellStatus), dealii::parallel::distributed::SolutionTransfer<2, dealii::PETScWrappers::MPI::Vector, dealii::hp::DoFHandler<2, 2> >::register_data_attach()::{lambda(dealii::TriaIterator<dealii::CellAccessor<2, 2> > const&, dealii::Triangulation<2, 2>::CellStatus)#1}>::_M_invoke(std::_Any_data const&, dealii::TriaIterator<dealii::CellAccessor<2, 2> > const&, dealii::Triangulation<2, 2>::CellStatus&&)

#5 /lib/x86_64-linux-gnu/libdeal.ii.g.so.9.2.0: std::function<std::vector<char, std::allocator<char> > (dealii::TriaIterator<dealii::CellAccessor<2, 2> > const&, dealii::Triangulation<2, 2>::CellStatus)>::operator()(dealii::TriaIterator<dealii::CellAccessor<2, 2> > const&, dealii::Triangulation<2, 2>::CellStatus) const

#6 /lib/x86_64-linux-gnu/libdeal.ii.g.so.9.2.0: std::_Function_handler<std::vector<char, std::allocator<char> > (dealii::TriaIterator<dealii::CellAccessor<2, 2> >, dealii::Triangulation<2, 2>::CellStatus), std::function<std::vector<char, std::allocator<char> > (dealii::TriaIterator<dealii::CellAccessor<2, 2> > const&, dealii::Triangulation<2, 2>::CellStatus)> >::_M_invoke(std::_Any_data const&, dealii::TriaIterator<dealii::CellAccessor<2, 2> >&&, dealii::Triangulation<2, 2>::CellStatus&&)

#7 /lib/x86_64-linux-gnu/libdeal.ii.g.so.9.2.0: std::function<std::vector<char, std::allocator<char> > (dealii::TriaIterator<dealii::CellAccessor<2, 2> >, dealii::Triangulation<2, 2>::CellStatus)>::operator()(dealii::TriaIterator<dealii::CellAccessor<2, 2> >, dealii::Triangulation<2, 2>::CellStatus) const

#8 /lib/x86_64-linux-gnu/libdeal.ii.g.so.9.2.0: dealii::parallel::distributed::Triangulation<2, 2>::DataTransfer::pack_data(std::vector<std::tuple<p4est_quadrant*, dealii::Triangulation<2, 2>::CellStatus, dealii::TriaIterator<dealii::CellAccessor<2, 2> > >, std::allocator<std::tuple<p4est_quadrant*, dealii::Triangulation<2, 2>::CellStatus, dealii::TriaIterator<dealii::CellAccessor<2, 2> > > > > const&, std::vector<std::function<std::vector<char, std::allocator<char> > (dealii::TriaIterator<dealii::CellAccessor<2, 2> >, dealii::Triangulation<2, 2>::CellStatus)>, std::allocator<std::function<std::vector<char, std::allocator<char> > (dealii::TriaIterator<dealii::CellAccessor<2, 2> >, dealii::Triangulation<2, 2>::CellStatus)> > > const&, std::vector<std::function<std::vector<char, std::allocator<char> > (dealii::TriaIterator<dealii::CellAccessor<2, 2> >, dealii::Triangulation<2, 2>::CellStatus)>, std::allocator<std::function<std::vector<char, std::allocator<char> > (dealii::TriaIterator<dealii::CellAccessor<2, 2> >, dealii::Triangulation<2, 2>::CellStatus)> > > const&)

#9 /lib/x86_64-linux-gnu/libdeal.ii.g.so.9.2.0: dealii::parallel::distributed::Triangulation<2, 2>::execute_coarsening_and_refinement()

#10 /home/kdas/FE_NothingTest/build/test_distributed: main

Marc Fehling

- On how many processes did you run your program?

- Did you use PETSc or Trilinos?

- Could you try to build deal.II on the latest master branch? There is a chance that your issue has been solved upstream. Chances are high that fix #8860 and the changes made to `get_interpolated_dof_values()` will solve your problem.

Marc Fehling

Kaushik Das

Kaushik Das

Kaushik Das

Marc Fehling

Kaushik Das

Marc Fehling

Wolfgang Bangerth

>

> 2) I did not know you were trying to interpolate a FE_Nothing element into a
> FE_Q element. This should not be possible, as you cannot interpolate
> information from simply 'nothing', and some assertion should be triggered
> while trying to do so. The other way round should be possible, i.e.,
> interpolation from FE_Q to FE_Nothing, since you will simply 'forget' what has
> been on the old cell.

been FE_Zero: A finite element space that contains only a single function --

the zero function. Because it only contains one function, it requires no

degrees of freedom.

So interpolation from FE_Nothing to FE_Q is well defined if you take this

point of view, and projection from any finite element space to FE_Nothing is also.
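
Written out as formulas, the FE_Zero reading looks roughly like this. The symbols V_0 (the zero space), V_h (any other finite element space), I_h (interpolation) and \Pi_0 (projection) are notation introduced here for illustration; they do not appear in the thread or in the library.

```latex
V_0 = \{0\}, \qquad \dim V_0 = 0,
\qquad
I_h : V_0 \to V_h, \quad I_h(0) = 0,
\qquad
\Pi_0 : V_h \to V_0, \quad \Pi_0 u_h = 0 \quad \text{for all } u_h \in V_h .
```

Both maps are well defined because V_0 has exactly one element: interpolating out of it can only produce the zero function, and projecting into it can only land on the zero function.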

Best

Wolfgang

--

------------------------------------------------------------------------

Wolfgang Bangerth email: bang...@colostate.edu

www: http://www.math.colostate.edu/~bangerth/

Marc Fehling

Wolfgang Bangerth

> The problem here is that the solution is not continuous across the face of a

> FE_Q and a FE_Nothing element. If a FE_Nothing is turned into a FE_Q element,

> the solution is suddenly expected to be continuous, and we have no rule in

> deal.II yet how to continue in the situation. In my opinion, we should throw

> an assertion in this case.

documented, but see here now:

https://github.com/dealii/dealii/pull/11430

Does that also address what you wanted to do in your patch?

Best

W.

Marc Fehling

Wolfgang Bangerth

>

> In case a FE_Nothing has been configured to dominate, the solution should be

> continuous on the interface if I understood correctly, i.e., zero on the face.

> I will write a few tests to see if this is actually automatically the case in

> user applications. If so, this check for domination will help other users to

> avoid this pitfall :)

>

Cheers

Kaushik Das

Marc Fehling

Hi Kaushik,

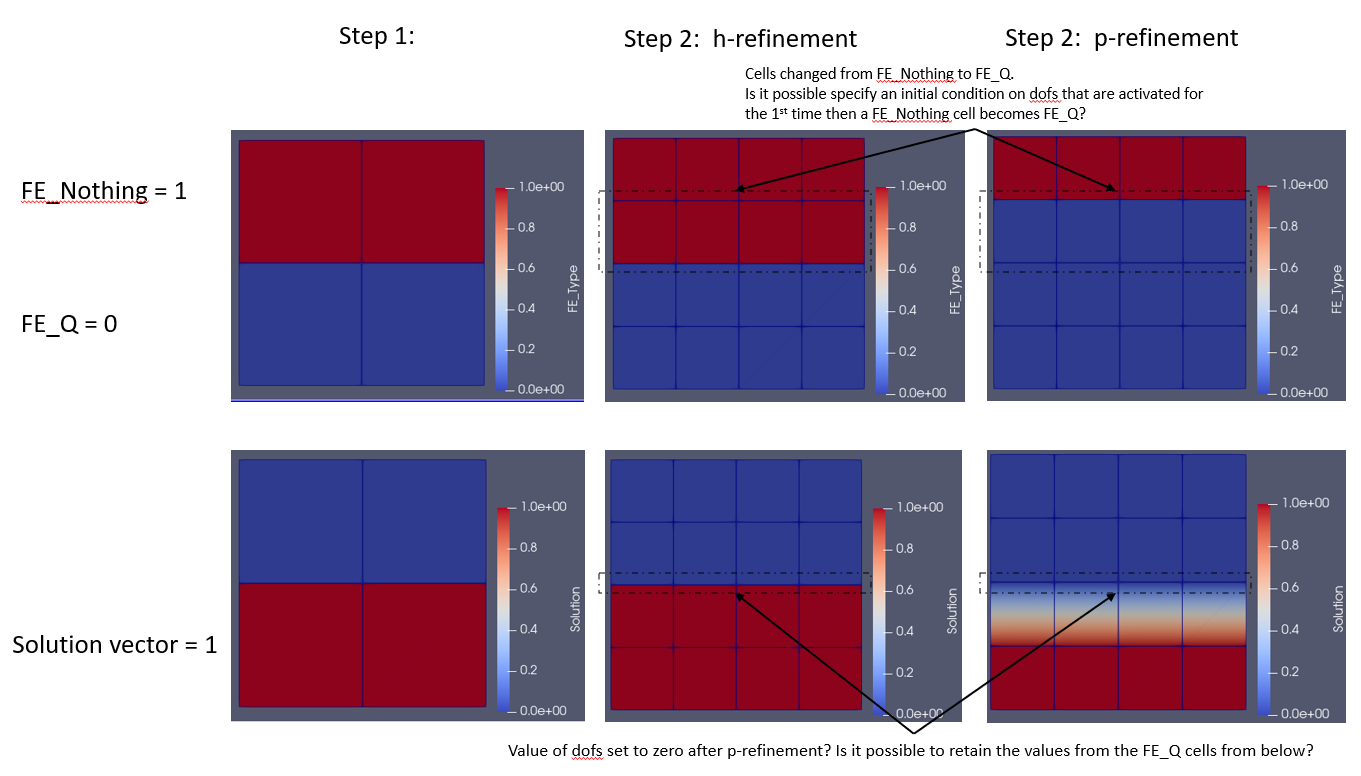

Yes, this is possible by changing a cell from FE_Nothing to FE_Q using p-refinement.

You can do this with the method described in #11132: Imitate what p::d::SolutionTransfer is doing with the more versatile tool p::d::CellDataTransfer and consider the following:

- Prepare a data container like `std::vector<std::vector<double>>` where the outer layer represents each cell in the current mesh, and the inner layer corresponds to the dof values inside each cell.

- Prepare data for the updated grid on the old grid.

  - On already activated cells, store dof values with `cell->get_interpolated_dof_values()`.

  - On all other cells, store an empty container.

- Register your data container for coarsening and refinement, then execute it.

- Interpolate the old solution on the new mesh.

  - Initialize your new solution vector with invalid values `std::numeric_limits<double>::infinity()`.

  - On previously activated cells, extract the stored data with `cell->set_dof_values_by_interpolation()`.

  - Skip all other cells which only have an empty container.

- On non-ghosted solution vectors, call `compress(VectorOperation::min)` to get correct values on ghost cells.

This leaves you with a correctly interpolated solution on the new mesh, where all newly activated dofs have the value `infinity`.

You can now (and not earlier!!!) assign the values you have in mind

for the newly activated dofs. You may want to exchange data on ghost

cells once more with `GridTools::exchange_cell_data_to_ghosts()`.
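
The sentinel idea in the steps above can be sketched without the library. This is a plain C++ model under stated assumptions, not deal.II code: `CellData`, `unpack_with_sentinel`, `assign_new_dofs` and the `cell_dofs` index table are hypothetical names made up for illustration, and the `std::min` merge only stands in for the role `compress(VectorOperation::min)` plays when several cells (or processes) contribute to the same dof.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for the transferred per-cell container:
// one inner vector per cell, empty if the cell was not active before.
using CellData = std::vector<std::vector<double>>;

// Sentinel for dofs that have not received a transferred value.
constexpr double unset = std::numeric_limits<double>::infinity();

// Fill a new solution with the sentinel, then write the stored values.
// cell_dofs[c] lists the global dof indices of cell c on the new mesh.
// Merging with min means a finite transferred value always wins over
// the sentinel on dofs shared with a newly activated cell.
std::vector<double>
unpack_with_sentinel(const CellData                              &data,
                     const std::vector<std::vector<std::size_t>> &cell_dofs,
                     const std::size_t                            n_dofs)
{
  assert(data.size() == cell_dofs.size());
  std::vector<double> solution(n_dofs, unset);
  for (std::size_t c = 0; c < data.size(); ++c)
    {
      if (data[c].empty())
        continue; // previously inactive cell: nothing to transfer
      assert(data[c].size() == cell_dofs[c].size());
      for (std::size_t j = 0; j < data[c].size(); ++j)
        {
          double &entry = solution[cell_dofs[c][j]];
          entry         = std::min(entry, data[c][j]);
        }
    }
  return solution;
}

// Only after the transfer is complete: give newly activated dofs a value.
void assign_new_dofs(std::vector<double> &solution, const double initial_value)
{
  for (double &value : solution)
    if (std::isinf(value))
      value = initial_value;
}
```

For two neighboring cells with dofs {0, 1} and {1, 2}, where only the first cell carried data, the shared dof 1 keeps its transferred value and only dof 2 is left at the sentinel for `assign_new_dofs` to fill.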

This is a fairly new feature in the library for a very specific use

case, so there is no dedicated class for transferring solutions across

finite element activation yet. If you successfully manage to make this

work, would you be willing to turn this into a class for the deal.II

library?

Marc

Marc Fehling

Kaushik Das

Kaushik Das

std::vector<std::vector<double>> transferred_data(m_triangulation.n_active_cells());
m_cell_data_trans.unpack(transferred_data);

unsigned int cell_index = 0;
for (const auto &cell : m_dof_handler.active_cell_iterators())
  {
    // An empty container marks a cell that had no dof values to transfer.
    const std::vector<double> &cell_data = transferred_data[cell_index];
    if (!cell_data.empty())
      {
        Vector<double> local_data(GeometryInfo<2>::vertices_per_cell);
        for (unsigned int j = 0; j < GeometryInfo<2>::vertices_per_cell; ++j)
          local_data[j] = cell_data[j]; // copy entry by entry
        cell->set_dof_values_by_interpolation(local_data, m_completely_distributed_solution);
      }
    ++cell_index;
  }

// m_completely_distributed_solution.compress(VectorOperation::min); // calling compress aborts here

for (unsigned int i = 0; i < m_completely_distributed_solution.size(); ++i)
  if (m_completely_distributed_solution(i) == std::numeric_limits<double>::max())
    m_completely_distributed_solution(i) = 2; // 2 is the initial condition I want to apply to newly created dofs

// m_completely_distributed_solution.compress(VectorOperation::min); // aborts here too: "Missing compress() or calling with wrong VectorOperation argument."
// exchange_cell_data_to_ghosts ??

m_locally_relevant_solution = m_completely_distributed_solution;

void dealii::PETScWrappers::VectorBase::compress(dealii::VectorOperation::values)

The violated condition was:

last_action == ::dealii::VectorOperation::unknown || last_action == operation

Additional information:

Bruno Turcksin

I am working on the exact same problem for the same application :-)

PETSc vectors do not support compress(min). You need to use a

dealii::LinearAlgebra::distributed::Vector instead.
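
The violated condition quoted in the error message, `last_action == unknown || last_action == operation`, can be read as a bookkeeping rule: the operation passed to compress() has to match the kind of writes made since the previous compress(). The toy class below models only that rule. `TrackedVector`, `Operation` and `compress_allowed` are hypothetical names, none of this is deal.II or PETSc API, and it says nothing about which operations a given backend actually implements.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy model only: these names are made up for illustration.
enum class Operation { unknown, insert, add, min };

class TrackedVector
{
public:
  explicit TrackedVector(const std::size_t n) : values(n, 0.0) {}

  // Element-wise write; remembers that the pending writes are "insert".
  void set(const std::size_t i, const double v)
  {
    assert(i < values.size());
    values[i]   = v;
    last_action = Operation::insert;
  }

  // The condition quoted in the error message.
  bool compress_allowed(const Operation operation) const
  {
    return last_action == Operation::unknown || last_action == operation;
  }

  // Returns false instead of aborting when the condition is violated.
  bool compress(const Operation operation)
  {
    if (!compress_allowed(operation))
      return false;
    last_action = Operation::unknown; // pending writes are now flushed
    return true;
  }

private:
  std::vector<double> values;
  Operation           last_action = Operation::unknown;
};
```

In this model, writing entries with `set()` and then calling `compress(Operation::min)` is rejected, while `compress(Operation::insert)` is accepted, which matches the abort described above.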

Best,

Bruno

Kaushik Das

Bruno Turcksin

Oh wow, this is a small world :D Unfortunately, the PETSc solvers require a
PETSc vector, but I think it should be straightforward to add
compress(min) to the PETSc vector. So that's a possibility if copying
the solution takes too much time.

Best,

Bruno

Wolfgang Bangerth

Kaushik

Marc and others have already answered the technical details, so just one

overall comment:

> Let me explain what I am trying to do and why.

> I want to solve a transient heat transfer problem of the additive

> manufacturing (AM) process. In AM processes, metal powder is deposited in

> layers, and then a laser source scans each layer and melts and bonds the

> powder to the layer underneath it. To simulate this layer by layer process, I

> want to start with a grid that covers all the layers, but initially, only the

> bottom-most layer is active and all other layers are inactive, and then as

> time progresses, I want to activate one layer at a time. I read on this

> mailing list that cell "birth" or "activation" can be done by changing a cell

> from FE_Nothing to FE_Q using p-refinement. I was trying to keep all cells of

> the grid initially to FE_Nothing except the bottom-most layer. And then

> convert one layer at a time to FE_Q. My questions are:

> 1. Does this make sense?

has ever tried this, and that some of the steps don't work without further

modification -- but whatever doesn't work should be treated as either a

missing feature, or a bug. The general approach is sound.

When I try to build a code with a workflow that is not quite standard, I
(like you) frequently run into things that don't quite work. My usual approach
is then to write a small and self-contained test case that illustrates the
issue without the overhead of the actual application. Most of the time, one
can show the issue with fewer than 100 lines of code. This then goes into a
GitHub issue so that one can write a fix for this particular problem without
having to understand the overall code architecture, the application, etc. I
would encourage you to follow this kind of workflow!

Best

Kaushik Das

- cell->set_dof_values(local_data, m_completely_distributed_solution);

- m_completely_distributed_solution.compress(VectorOperation::insert);

- m_locally_relevant_solution = m_completely_distributed_solution;

Wolfgang Bangerth

> Thanks to all of you, I can write a small test that seems to be doing what I

> want following Marc's suggestion. But I have a few questions (the test code is

> attached)

>

> Does set_dof_values set entries in m_completely_distributed_solution that are

> not owned by the current rank?

locally owned, which are ghosted, and which are stored elsewhere. Writing into

entries does not change this decision.

>

> * m_completely_distributed_solution.compress(VectorOperation::insert);

> After set_dof_values, if I call compress(VectorOperation::insert) on that

> PETSc vector, now, does that mean that I now have entries in that distributed

> vector that are not owned by the current rank?

> * m_locally_relevant_solution = m_completely_distributed_solution;

> Will this assignment of a distributed vector to a locally relevant vector

> correctly set the ghost values?

> If this test code is correct, it will be great to add this as a test to

> deal.II. My current work will be dependent on this part working correctly, and

> it will be nice to have a test that can alert us if something changes in the

> future.