BBR performance and optimization in cellular wireless network with high delay jitter



Eric xu

Jul 4, 2021, 8:53:52 AM

to BBR Development

Hello, dear friends. I wonder whether there is any optimization for BBR's low bandwidth utilization in wireless networks with high delay jitter. In my opinion, high delay jitter will cause BBR to underestimate the BDP, and thus limit the send rate even if the pipe is not full. Right?

So, is there any optimization to improve bandwidth utilization in wireless networks with high delay jitter, including 5G networks? Thx a lot.
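Eric's concern can be made concrete with some arithmetic. BBR models the path as BDP = max_bw × min_rtt, and BBRv1 caps data in flight at roughly cwnd_gain × BDP with cwnd_gain = 2. The sketch below assumes a simple jitter model in which ACKs may be held back for up to `ack_hold_s` beyond the base RTT, so the sender needs bw × (min_rtt + ack_hold) in flight to keep the link busy. The 20 Mbit/s and 40 ms figures, the hold times, and the function names are hypothetical.

```python
# Hypothetical numbers; real BBR estimates come from delivery-rate samples.
def bbr_bdp_bytes(max_bw_bps: float, min_rtt_s: float) -> float:
    """BDP as BBR models it: windowed-max bandwidth times windowed-min RTT."""
    return max_bw_bps / 8 * min_rtt_s


def inflight_needed_bytes(bw_bps: float, min_rtt_s: float, ack_hold_s: float) -> float:
    """Data needed in flight to keep the link busy if ACKs can be held back
    for up to ack_hold_s beyond the base RTT (assumed jitter model)."""
    return bw_bps / 8 * (min_rtt_s + ack_hold_s)


CWND_GAIN = 2.0  # BBRv1 steady-state cwnd gain

bw, rtt = 20e6, 0.040  # 20 Mbit/s, 40 ms
bdp = bbr_bdp_bytes(bw, rtt)
cwnd = CWND_GAIN * bdp
for hold in (0.010, 0.030, 0.050):
    need = inflight_needed_bytes(bw, rtt, hold)
    status = "ok" if cwnd >= need else "cwnd-limited"
    print(f"ACK hold {hold * 1000:.0f} ms: need {need:.0f} B, cwnd {cwnd:.0f} B -> {status}")
```

With these numbers the 10 ms and 30 ms holds fit under the cwnd, while the 50 ms hold does not. Under this model the shortfall appears once the hold time exceeds (cwnd_gain − 1) × min_rtt, which is the kind of gap that extra cwnd headroom for ACK aggregation is meant to cover.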

Neal Cardwell

Jul 4, 2021, 9:59:57 AM

to Eric xu, BBR Development

Yes, BBR includes mechanisms to deal with this kind of jitter. Please see the March 2019 thread on this topic:

https://groups.google.com/g/bbr-dev/c/kBZaq98xCC4

Our experience is that BBR's throughput on high-jitter links (cellular, wifi, DOCSIS, datacenter ethernet w/ GRO/LRO) is quite good at this point (usually matches CUBIC), and there are others who have documented this as well, e.g.:

best,

neal
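Linux's tcp_bbr.c, for example, adds an estimate of ACK aggregation ("extra_acked") to the cwnd; we assume this is among the jitter mechanisms referred to above. The following is a simplified sketch of that idea, not the kernel implementation: within an "ACK epoch" it compares bytes actually ACKed with the bytes the bandwidth estimate predicts, and keeps a windowed maximum of the excess. The class and function names, the 5-round window, and the example timings are assumptions for illustration.

```python
from collections import deque


class AckAggregationEstimator:
    """Simplified "extra_acked" estimator (hypothetical class; not kernel code).

    An ACK epoch restarts whenever ACKs are not ahead of what the bandwidth
    estimate predicts. Bytes ACKed beyond the prediction within an epoch mean
    ACKs were held back and then released in a burst.
    """

    def __init__(self, window_rounds: int = 5):  # window length is an assumption
        self.epoch_start = 0.0  # time (s) the current ACK epoch began
        self.epoch_acked = 0.0  # bytes ACKed since the epoch began
        self.round_max = 0.0    # largest excess seen in the current round
        self.history = deque(maxlen=window_rounds)  # per-round maxima

    def on_ack(self, now: float, acked: float, bw_bytes_per_s: float) -> float:
        expected = bw_bytes_per_s * (now - self.epoch_start)
        if self.epoch_acked <= expected:
            # ACKs are not ahead of the model: restart the epoch here.
            self.epoch_start, self.epoch_acked, expected = now, 0.0, 0.0
        self.epoch_acked += acked
        excess = self.epoch_acked - expected
        self.round_max = max(self.round_max, excess)
        return excess

    def on_round_end(self) -> None:
        self.history.append(self.round_max)
        self.round_max = 0.0

    def extra_acked(self) -> float:
        return max([self.round_max, *self.history])


def cwnd_bytes(bdp: float, extra_acked: float, cwnd_gain: float = 2.0) -> float:
    """cwnd = cwnd_gain * BDP plus headroom for aggregated ACKs (simplified)."""
    return cwnd_gain * bdp + extra_acked


bw = 20e6 / 8                    # 20 Mbit/s in bytes per second
est = AckAggregationEstimator()
est.on_ack(0.000, 2500, bw)      # one ACK, then 20 ms of silence...
for _ in range(10):
    est.on_ack(0.020, 5000, bw)  # ...then 50 kB of ACKs in one burst
est.on_round_end()
print(est.extra_acked())         # 50000.0, i.e. about bw * 20 ms
print(cwnd_bytes(100_000, est.extra_acked()))  # 250000.0
```

The burst after a 20 ms gap yields an excess of about bw × 20 ms, which is then added on top of 2 × BDP so the sender can keep transmitting while the next batch of ACKs is held back.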


--

You received this message because you are subscribed to the Google Groups "BBR Development" group.

To unsubscribe from this group and stop receiving emails from it, send an email to bbr-dev+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/bc675976-45f5-4ef6-b337-e693e4d21c6en%40googlegroups.com.

Eric xu

Jul 5, 2021, 3:27:08 AM

to BBR Development

Hi, Neal, thanks for your answer. I still have two questions to discuss with you after having read the two links you mentioned above.

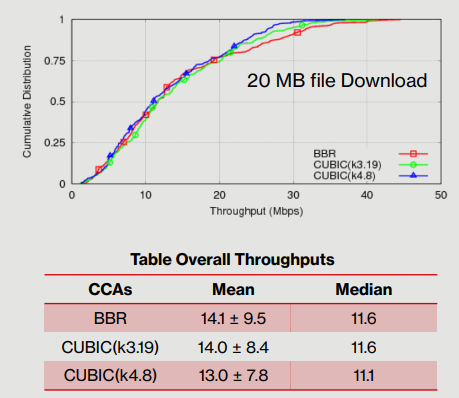

1. As mentioned in the second link, BBR's throughput on high-jitter links (especially cellular) usually matches CUBIC, as demonstrated by the following figure.

However, what we can infer from the figure is that the mean throughput (~14 Mbps) is far below the available bandwidth, which is greater than 20 Mbps as shown in the cumulative distribution figure. Since CUBIC backs off frequently in an LTE network with a high error rate, yet BBR does not achieve much more throughput than CUBIC, the only reason I can imagine is that BBR is strongly affected by high delay jitter even though the cwnd provision is applied.

Is my understanding right? And is there any optimization to further improve throughput in scenarios with high bandwidth and delay jitter?

2. Is there any method to enhance BBR's ability to adapt to high bandwidth and delay jitter? In my opinion, in a high bandwidth-jitter scenario, the window-based bandwidth adaptation method is too slow to converge to the actual bottleneck bandwidth: when the actual bandwidth increases, BBR needs at least 8 RTTs to catch it, and when it decreases, BBR needs up to 10 RTTs to expire the previous bandwidth maximum. Either way it is too slow, causing severe bufferbloat. In high delay-jitter scenarios, BBR also causes bandwidth underutilization. So, what are your latest optimizations to improve performance in networks with such high bandwidth and delay jitter?

Thx a lot,

Eric
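The 8-RTT and 10-RTT figures in question 2 follow from two BBRv1 parameters: the max-bandwidth filter spans 10 round trips, and the ProbeBW pacing_gain cycle has 8 phases of about one min_rtt each, only one of which (gain 1.25) probes above the current estimate. The sketch below models both under simplifying assumptions: one bandwidth sample per round, and each probe phase raising the estimate by at most 25%. The kernel uses a more compact running-max filter and BBRv2 changes the probing schedule, so treat the numbers as rough; the helper names are hypothetical.

```python
from collections import deque

# Simplified BBRv1 constants (assumed for this sketch).
BW_FILTER_ROUNDS = 10  # max-bandwidth filter window, in round trips
PROBE_BW_GAINS = [1.25, 0.75, 1, 1, 1, 1, 1, 1]  # ProbeBW pacing_gain cycle


class WindowedMax:
    """Max over the last `window` per-round samples (one sample per round)."""

    def __init__(self, window: int):
        self.samples = deque(maxlen=window)

    def update(self, sample: float) -> float:
        self.samples.append(sample)
        return max(self.samples)


def rounds_to_forget(old_bw: float, new_bw: float, window: int = BW_FILTER_ROUNDS) -> int:
    """Rounds until the estimate falls to new_bw after a drop from old_bw."""
    f = WindowedMax(window)
    for _ in range(window):
        f.update(old_bw)
    rounds = 0
    while True:
        rounds += 1
        if f.update(new_bw) <= new_bw:
            return rounds


def probes_to_reach(est_bw: float, actual_bw: float, gain: float = PROBE_BW_GAINS[0]) -> int:
    """Probe phases needed if each one can raise the estimate by at most `gain`x."""
    probes = 0
    while est_bw < actual_bw:
        est_bw = min(actual_bw, est_bw * gain)
        probes += 1
    return probes


print(rounds_to_forget(20.0, 10.0))  # 10 rounds to forget the old maximum
n = probes_to_reach(10.0, 20.0)
print(n, n * len(PROBE_BW_GAINS))    # 4 probe phases, roughly 32 rounds
```

Under these assumptions a drop is forgotten after 10 rounds, while a jump larger than 25% takes several probe cycles rather than a single 8-round cycle to track.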

Neal Cardwell

Jul 5, 2021, 12:24:20 PM

to Eric xu, BBR Development


Your statement "the available bw that is greater than 20Mbps" seems to imply that your theory is that even in a cellular network there is a single, fixed amount of bandwidth that is available, which is greater than 20Mbps here, and both CUBIC and BBR are falling short in utilizing that bandwidth.

But the amount of bandwidth available to a single TCP flow over a cellular network is inherently highly variable, and is a function of link-layer fluctuations (signal strength, interference, link-layer retransmissions,...), dynamic bandwidth allocation among users, etc.

The fact that the average and median throughput for CUBIC and BBR are below the maximum does not imply that there is under-utilization of bandwidth. IMHO a more likely interpretation is that:

(a) the actual available bandwidth is highly variable, due to the factors mentioned above, and is most often in the 10-15Mbit/sec range.

(b) both CUBIC and BBR are generally reasonably efficient at discovering and using the available bandwidth, with BBR slightly more efficient at utilizing higher bandwidths.


We have posted about ongoing work in other threads on the bbr-dev list, and at the IETF; you can find links here:

We have continued work beyond the version of BBR studied above; and we have posted a version of BBRv2 alpha online here:

This is an open area of research, and I'm sure there is always room to improve things. :-)

We look forward to hearing about your future work, if you are interested in this area.

best,

neal
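Neal's point that a mean or median below the maximum does not by itself imply under-utilization can be checked with a toy calculation. The per-second bandwidth trace below is made up for illustration (it is not data from the figure in this thread), and the "achieved" series assumes an idealized sender that uses exactly what is available each second.

```python
from statistics import mean, median

# Hypothetical per-second available bandwidth (Mbit/s) on a cellular link;
# made-up numbers, not measurements from the figure in this thread.
available = [12, 10, 14, 11, 24, 13, 9, 15, 22, 12]

# Idealized sender that uses exactly what is available each second.
achieved = list(available)

utilization = sum(achieved) / sum(available)
print(f"mean={mean(achieved):.1f} median={median(achieved):.1f} "
      f"max={max(achieved)} utilization={utilization:.0%}")
# mean=14.2 median=12.5 max=24 utilization=100%
```

Even with perfect tracking, the mean and median sit well below the peak, because the peak is a brief excursion of a highly variable capacity rather than a fixed amount that was left unused.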


Dave Taht

Jul 5, 2021, 1:20:14 PM

to Eric xu, Make-Wifi-fast, BBR Development

Eric, I thought I'd forward this to one of the bufferbloat.net lists. Feel free to join. It would also be helpful to duplicate your test scripts here.

I agree with you, BBR is way too slow here. We see it bloat massively in wifi also. I would like BBR to be far more reactive than it is, and also for it to respond to RFC3168 ECN marks.

You said:

"In my opinion, in a high bandwidth-jitter scenario, the window-based bandwidth adaptation method is too slow to converge to the actual bottleneck bandwidth: when the actual bandwidth increases, BBR needs at least 8 RTTs to catch it, and when it decreases, BBR needs up to 10 RTTs to expire the previous bandwidth maximum. Either way it is too slow, causing severe bufferbloat."

You might find the "flent" tool very useful for getting a more microscopic view as to what is going on.

A test we would use to get a better picture of what is really going on would be

flent -H netperf_test_server -x --socket-stats --step-size=.05 -t the_test_conditions --te=cc_algo=bbr --te=upload_streams=1 tcp_nup

This samples a ton of TCP-related stats and lets you plot them with flent-gui; there are 110 other tests in the suite.

A more complicated invocation can get qdisc stats from the router involved, or iterate over CCs, or run at longer intervals. In general I have found running tests for 5 minutes or longer reveal interesting behaviors.

flent -H $S --socket-stats -x --step-size=.05 -t 1-flow-fq_starlink_noecn-${M}-cc=${CC} --te=cpu_stats_hosts=$R --te=netstat_hosts=$R -4 --te=qdisc_stats_hosts=$RS --te=qdisc_stats_interfaces=$RV --te=upload_streams=1 --te=cc_algo=$CC tcp_1up

pic below


Dave Täht CTO, TekLibre, LLC
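When iterating over congestion control algorithms as Dave suggests, it can help to generate the flent command lines programmatically. The sketch below is a hypothetical helper, `flent_tcp_nup_cmd`, that reuses the options from the first invocation above (`-H`, `-x`, `--socket-stats`, `--step-size`, `-t`, `--te=cc_algo`, `--te=upload_streams`, `tcp_nup`) and adds flent's `-l` test-length option, set to 300 s to match the suggestion to run for 5 minutes or longer. It only prints the commands; the server name and title are the placeholders from the message.

```python
import shlex


def flent_tcp_nup_cmd(server: str, cc: str, title: str,
                      step_size: float = 0.05, length_s: int = 300,
                      upload_streams: int = 1) -> list:
    """Build a flent tcp_nup command line (hypothetical helper).

    All options except -l mirror the invocation in the message above;
    -l sets the test length in seconds.
    """
    return [
        "flent", "-H", server, "-x", "--socket-stats",
        f"--step-size={step_size}", "-l", str(length_s),
        "-t", f"{title}-cc={cc}",
        f"--te=cc_algo={cc}", f"--te=upload_streams={upload_streams}",
        "tcp_nup",
    ]


# Dry run: print one command per congestion control algorithm.
for cc in ("bbr", "cubic"):
    print(shlex.join(flent_tcp_nup_cmd("netperf_test_server", cc, "the_test_conditions")))
```

Returning an argument list rather than a string means the same value can be passed to `subprocess.run(cmd, check=True)` to actually run the tests, without shell-quoting issues.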

Bob McMahon

Jul 6, 2021, 4:34:51 PM

to Dave Taht, Eric xu, Make-Wifi-fast, BBR Development

Curious about the idea of wifi providing the opposite of ECN, i.e., "my rate selection algorithm just went from one stream to two streams, so TCP, speed up your feedback-loop decisions with a bias toward faster"?

Bob


_______________________________________________

Make-wifi-fast mailing list

Make-wi...@lists.bufferbloat.net

https://lists.bufferbloat.net/listinfo/make-wifi-fast


Neal Cardwell

Jul 6, 2021, 4:39:52 PM

to Bob McMahon, Dave Taht, Eric xu, Make-Wifi-fast, BBR Development


That could make sense. That sounds a lot like the Accel-Brake Control work:

ABC: A Simple Explicit Congestion Controller for Wireless Networks

Authors:

Prateesh Goyal, MIT CSAIL; Anup Agarwal, CMU; Ravi Netravali, UCLA; Mohammad Alizadeh and Hari Balakrishnan, MIT CSAIL

Abstract:

We propose Accel-Brake Control (ABC), a simple and deployable explicit congestion control protocol for network paths with time-varying wireless links. ABC routers mark each packet with an “accelerate” or “brake”, which causes senders to slightly increase or decrease their congestion windows. Routers use this feedback to quickly guide senders towards a desired target rate. ABC requires no changes to header formats or user devices, but achieves better performance than XCP. ABC is also incrementally deployable; it operates correctly when the bottleneck is a non-ABC router, and can coexist with non-ABC traffic sharing the same bottleneck link. We evaluate ABC using a Wi-Fi implementation and trace-driven emulation of cellular links. ABC achieves 30-40% higher throughput than Cubic+Codel for similar delays, and 2.2× lower delays than BBR on a Wi-Fi path. On cellular network paths, ABC achieves 50% higher throughput than Cubic+Codel.


neal
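The abstract says ABC routers mark each packet "accelerate" or "brake" and senders slightly increase or decrease their windows. The sketch below fills in that loop under stated assumptions: the sender adds one packet to cwnd per accelerate and removes one per brake, and the router marks a fraction f = min(1, target / (2 × current)) as accelerate, since in this model a fraction f scales the window by 2f per RTT. Both rules are our assumptions based on the abstract, the function names are hypothetical, and how ABC routers compute the target rate (from link capacity and queuing delay) is not modeled.

```python
def accel_fraction(target_rate: float, current_rate: float) -> float:
    """Fraction of packets marked "accelerate" (assumed marking rule).

    With +1 packet per accelerate and -1 per brake, a fraction f scales the
    window by 2f per RTT, so f = target / (2 * current), capped at 1.
    """
    if current_rate <= 0:
        return 1.0
    return min(1.0, 0.5 * target_rate / current_rate)


def on_feedback(cwnd: int, mark: str) -> int:
    """Sender reaction: grow by one packet on accelerate, shrink by one on brake."""
    return cwnd + 1 if mark == "accelerate" else max(1, cwnd - 1)


def one_rtt(cwnd: int, target: float) -> int:
    """Apply one RTT of feedback; rates are expressed in packets per RTT."""
    n_accel = round(accel_fraction(target, cwnd) * cwnd)
    marks = ["accelerate"] * n_accel + ["brake"] * (cwnd - n_accel)
    for mark in marks:
        cwnd = on_feedback(cwnd, mark)
    return cwnd


print(one_rtt(100, 60), one_rtt(100, 150), one_rtt(100, 300))  # 60 150 200
```

In one RTT the window lands on the target when it is within a factor of two, and at most doubles when the target is further above, which illustrates how explicit per-packet feedback can track a changing wireless rate faster than probing does.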


Bob McMahon

Jul 6, 2021, 7:49:50 PM

to Neal Cardwell, Dave Taht, Eric xu, Make-Wifi-fast, BBR Development

Thanks for this. Looks interesting. Does ABC (love the acronym) try to optimize throughput, latency or some combination, e.g. network power (throughput/delay)?

Things like roams, seen energy over the last n milliseconds, and "I've lost n TXOP arbitrations over time y" could also have influence on the control feedback loop to TCP. Not sure how TCP gets any of these stats.

None of this is end/end though. It's likely just the last/first hop which I think is probably still useful to TCP designers - not sure.

Bob


Neal Cardwell

Jul 8, 2021, 2:45:44 PM

to Bob McMahon, Dave Taht, Eric xu, Make-Wifi-fast, BBR Development


ABC tries to optimize both throughput and delay, though the paper does not mention power specifically.


The paper explains their scheme to convey the accelerate/brake signals from bottlenecks using the ECN bits, and then have the TCP receiver echo them to the TCP sender using the historic "NS" bit.

neal

Fernando Xavier

Jul 15, 2021, 9:20:12 AM

to BBR Development

Hi guys

If anyone is interested, I've contacted the author to get ABC code, and got this:

Hi Fernando,

Repo based on the 2017 HotNets paper: https://github.com/prateshg/ABC

I haven't released the code for the NSDI20 version yet but the basic algorithm is the same in the old version.

Thanks

Prateesh
