Question on Strange BBR Behavior

Sam Kumar

Hello bbr-dev,

I’m measuring the performance of BBR TCP when used to transfer data between different cloud regions, and I noticed some strange performance characteristics that I want to ask about in this forum.

Usually, BBR flows are very fast, several Gbit/s, and significantly outperform loss-based congestion control like CUBIC. However, between certain pairs of cloud regions, a BBR flow routinely gets “stuck” in a state where it sends out data very slowly, at only about 100 Kbit/s. Once BBR is in this state, it can remain in this state for hours before recovering and making progress as normal.

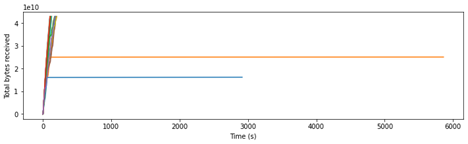

Here is an example of 25 trials of transferring 40 GiB from Google Cloud’s europe-north1 region (Finland) to AWS’ ap-southeast-1 region (Singapore):

Each trial is represented as a separate curve, and each curve shows how the TCP connection made progress. As you can see, two of the flows failed to complete, instead getting stuck in a bad state. After waiting for a long time, I stopped those trials early—that is why they never reach 40 GiB transferred.

I did some cursory analysis with ss -tin, and it seems that BBR remains stuck in this state because, when the pacing rate increases beyond about 1 Mbit/s, the delivery rate decreases. Based on my understanding of BBR, backing off and remaining at low bandwidth seems like correct operation of the BBR algorithm.

I’m unsure whether this is an issue with the network or an issue with BBR. I’m wondering if any of the folks in this forum can provide some guidance?

Thanks,

Sam Kumar

By the way, I ran BBR by doing the following:

1. I am running Ubuntu 20.04. On Google Cloud, this uses Linux 5.13.0-1024-gcp. On AWS, this uses Linux 5.11.0-1022-aws.

2.

I set changed sysctl

parameters as follows:

net.core.rmem_max = 2147483647

net.core.wmem_max = 2147483647

net.ipv4.tcp_rmem = 4096 87380 2147483647

net.ipv4.tcp_wmem = 4096 65536 2147483647

net.ipv4.tcp_mem = 8388608 8388608 8388608

net.ipv4.tcp_keepalive_time = 240

net.ipv4.tcp_keepalive_intvl = 65

net.ipv4.tcp_keepalive_probes = 5

3. I ran a simple C program that transfers data over TCP. It repeatedly calls write on the file descriptor to send out data on one VM and read to receive data on the other VM. It issues TCP_INFO system calls periodically from another thread to measure TCP progress and state.

4.

Here is the output of ss -tin on the sending node for a transfer that has stalled.

As you can see, there is data in the send buffer to send out.

ESTAB

0 1328486296 10.0.0.4:57001 18.228.192.119:57001

bbr wscale:14,14 rto:620

rtt:310.914/0.067 mss:1448 pmtu:1500 rcvmss:536 advmss:1448 cwnd:10

ssthresh:10298 bytes_sent:12933690937 bytes_retrans:840863736

bytes_acked:11556415602 segs_out:8932114 segs_in:110155 data_segs_out:8932112

bbr:(bw:96664bps,mrtt:310.86,pacing_gain:1,cwnd_gain:2) send 372579bps

lastsnd:232 lastrcv:297088 lastack:232 pacing_rate 95696bps delivery_rate

74520bps delivered:8106021 app_limited busy:297088ms

rwnd_limited:122508ms(41.2%) unacked:370451 retrans:1/580707 lost:245551

sacked:124899 dsack_dups:167 reordering:300 reord_seen:6 rcv_space:14480

rcv_ssthresh:65535 notsent:792074696 minrtt:310

Neal Cardwell

--

You received this message because you are subscribed to the Google Groups "BBR Development" group.

To unsubscribe from this group and stop receiving emails from it, send an email to bbr-dev+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/3ac326df-5c28-4257-94bc-360e3dae604fn%40googlegroups.com.