Distance from radius

Chadwick Boulay

Feb 14, 2015, 12:39:55 AM

to psm...@googlegroups.com

Hi Thomas and others.

I saw (but didn't understand) the binary search algorithm for calculating distance in psmove_fusion.cpp and I saw the psmove_tracker_distance_from_radius method in psmove_tracker.c

I thought there must be a simpler, faster, and maybe more accurate way based on trigonometry. Thomas, did you ever investigate this?

First, some constants.

Does anyone know the exact diameter of the sphere, as measured with a caliper? I used a very crude technique and got 45 mm, and I saw the official tech specs for the entire controller had the diameter as 46 mm.

The source code has the blue-dot FOV as 75 degrees. Is that horizontal, vertical, diagonal? What is the source of that number?

Do we know the pincushion distortion?

If we take the blue dot fov as 75 diagonal, and the sphere diameter as 46 mm, then the distance, in mm, can be calculated as:

d = 23 / sind( r_pix * 75/800 ); where r_pix is the radius of the sphere in pixels.

This method can be adapted to any camera as long as we know the FOV and the frame width.

In words, the logic is something like this:

The camera has an angular resolution of 800 pixels (diag) per 75 degrees (diag).

Therefore, an object that occupies r_pix pixels will occupy r_pix * 75/800 degrees of visual angle.

The sine of this angle is equal to the ratio of the radius (measured 23?) to the distance (d = from camera focal point to centre of sphere).

Solve for d.
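The steps above can be sketched in a few lines of Python. This is only an illustration of the formula as stated, not code from the PS Move API: the function name `distance_from_radius_mm` is made up here, the 75 degree FOV is assumed to be diagonal, and the 800 pixel diagonal is consistent with a 640x480 frame, which is an assumption.

```python
import math

# Assumptions taken from the post, not verified values:
SPHERE_RADIUS_MM = 23.0  # half of the 46 mm spec diameter (22.5 if 45 mm is right)
FOV_DEG = 75.0           # blue-dot FOV from the source code, assumed diagonal
FRAME_DIAG_PX = 800.0    # diagonal in pixels, e.g. hypot(640, 480) == 800


def distance_from_radius_mm(r_pix,
                            sphere_radius_mm=SPHERE_RADIUS_MM,
                            fov_deg=FOV_DEG,
                            frame_diag_px=FRAME_DIAG_PX):
    """Distance from the focal point to the sphere centre, in mm.

    Uses the linear approximation of "degrees per pixel" described above,
    so it ignores lens distortion and any offset of the focal point.
    """
    if r_pix <= 0:
        raise ValueError("r_pix must be positive")
    # Visual angle subtended by the sphere radius, in degrees.
    angle_deg = r_pix * fov_deg / frame_diag_px
    # sin(angle) = radius / distance, so distance = radius / sin(angle).
    return sphere_radius_mm / math.sin(math.radians(angle_deg))


if __name__ == "__main__":
    for r in (10, 20, 40, 80):
        print(r, round(distance_from_radius_mm(r), 1))
```

Swapping in a different FOV or frame diagonal adapts the same function to another camera, under the same approximations.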

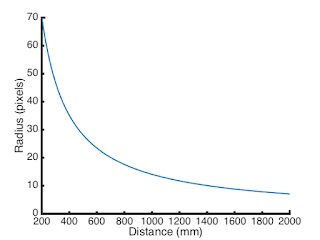

I tried this for different values of r_pix and got a curve similar in shape to the one on page 41 of Thomas' thesis.

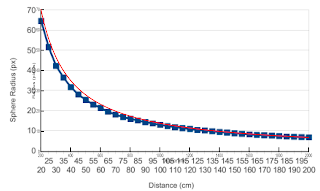

Here is my equation (in red) overlaid on top of Thomas' data.

The fit is pretty good, but not great. There are some problems. At least three that I know of:

1. I don't know the focal point of the camera. If it is somewhere behind the camera then this will add an artificial offset to my distances, shifting the red curve to the right, as it is here.

2. I have not considered lens distortion. Does the radius in pixels get calculated after undistorting the image? It should, but then we need to know its distortion.

3. My estimate for sphere radius may be off.

Comments?

Alexander Nitsch

Feb 14, 2015, 4:21:55 AM

to psm...@googlegroups.com

> I saw (but didn't understand) the binary search algorithm for calculating

> distance in psmove_fusion.cpp and I saw the psmove_tracker_distance_from_radius

> method in psmove_tracker.c

> I thought there must be a simpler, faster, and maybe more accurate way

> based on trigonometry. Thomas, did you ever investigate this?

I was wondering the same thing when I saw this back then. See this

thread from the archive

https://groups.google.com/forum/#!topic/psmove/O1LyBG6wkfc

which also contains Thomas' answer.

> Does anyone know the exact diameter of the sphere, as measured with a

> caliper? I used a very crude technique and got 45 mm, and I saw the

> official tech specs for the entire controller had the diameter as 46 mm.

from the radius as you suggest. Going through my notes from that time, I

can see that I measured a pretty accurate 45 mm as diameter.

> The source code has the blue-dot FOV as 75 degrees. Is that horizontal,

> vertical, diagonal? What is the source of that number?

> Do we know the pincushion distortion?

(using dvs "Camera Calibration Tools"). From my notes:

- red dot
  - intrinsic parameters matrix

        760.929138    0             271.914948
        0             760.732544    242.089966
        0             0             1

  - radial distortion factors: k_1 = -0.111819, k_2 = 0.042795
  - tangential distortion factors: p_1 = -0.000072, p_2 = -0.003156

- blue dot
  - intrinsic parameters matrix

        539.850525    0             284.061035
        0             540.187439    248.344650
        0             0             1

  - radial distortion factors: k_1 = -0.116824, k_2 = 0.115965
  - tangential distortion factors: p_1 = 0.003105, p_2 = -0.001743

Note, however, that these are just the values for my particular PS Eye

camera. They might differ a bit for others, of course.
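As a rough illustration of how intrinsics like these relate to the FOV question, here is a minimal Python sketch using the standard pinhole model. It assumes a 640x480 frame (not stated in this post), treats the principal point as if it were at the image centre when computing the FOV, ignores distortion, and only handles a sphere on the optical axis. The function names are invented for this example.

```python
import math

# Blue-dot focal lengths in pixels, from the calibration values above
# (specific to one camera).
FX, FY = 539.850525, 540.187439
WIDTH_PX, HEIGHT_PX = 640, 480  # assumed frame size


def fov_deg(extent_px, focal_px):
    """Full field of view across extent_px for a pinhole camera,
    assuming the principal point is centred."""
    return math.degrees(2.0 * math.atan(extent_px / (2.0 * focal_px)))


def distance_on_axis_mm(r_pix, sphere_radius_mm=22.5, focal_px=FX):
    """Distance to the centre of a sphere on the optical axis.

    The silhouette cone has half-angle a with tan(a) = r_pix / f,
    and sin(a) = radius / distance.
    """
    half_angle = math.atan(r_pix / focal_px)
    return sphere_radius_mm / math.sin(half_angle)


h_fov = fov_deg(WIDTH_PX, FX)
v_fov = fov_deg(HEIGHT_PX, FY)
d_fov = fov_deg(math.hypot(WIDTH_PX, HEIGHT_PX), (FX + FY) / 2.0)

if __name__ == "__main__":
    print("horizontal, vertical, diagonal FOV (deg):", h_fov, v_fov, d_fov)
    print("distance for r_pix = 20 (mm):", distance_on_axis_mm(20))
```

Under these assumptions the diagonal figure comes out at roughly 73 degrees, which is in the neighbourhood of the 75 degrees in the source code, though that alone does not settle which axis the 75 refers to.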

Alex

Chadwick Boulay

Feb 16, 2015, 12:28:09 AM

to psm...@googlegroups.com

Thanks for pointing me to that post. It didn't come up in my original search. Also thank you for your camera calibration results. I hope I know how to use them some day.

Anyway, if it hasn't become obvious yet, I'm a scientist (well, postdoc) and I'm trying to use the PSMove to collect behavioural data that I will then attempt to correlate with intracranial neural data. I would like the behavioural data to be as accurate as possible. We would use a much more expensive system (e.g., OptiTrack) except that we are not allowed to mount it on the operating room walls and the OR is too crowded to have tripods everywhere. I tried the Leap and it was not robust enough. Other consumer-grade optical trackers are not fast enough. The PSMove seems like the best fit. So I'm motivated to find the solution that will yield the best data.

For Thomas' solution, my understanding is that his reasons for not using the analytic solution are:

- The calibration data are difficult to obtain well

- Undistorting each frame slowed things down too much

But, the parametric distance calibration method probably only calibrates along the principal axis, correct? And the distance_from_radius function only considers the radius, not the x or y position. This leads me to believe that there is no correction of any sort for lens distortion. At increasing eccentricities the lens distortion will affect the parametric distance estimation just as much as it would the analytic solution, would it not?

As for the computational penalty of undistorting the image, are there any savings in undistorting only the ROI?
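One way to picture the cheaper end of that idea is to undistort only a handful of pixel coordinates (say, points on the sphere's contour) instead of the whole frame. The Python sketch below assumes Alex's blue-dot coefficients follow the common radial plus tangential (Brown-Conrady, OpenCV-style) convention, which the calibration tool may or may not use, and inverts the model by fixed-point iteration. The function names are hypothetical and none of this is taken from psmove_tracker.c.

```python
# Blue-dot values quoted earlier in the thread (one particular camera).
FX, FY, CX, CY = 539.850525, 540.187439, 284.061035, 248.344650
K1, K2, P1, P2 = -0.116824, 0.115965, 0.003105, -0.001743


def distort_normalized(x, y):
    """Forward model: ideal normalized coords -> distorted normalized coords."""
    r2 = x * x + y * y
    radial = 1.0 + K1 * r2 + K2 * r2 * r2
    xd = x * radial + 2.0 * P1 * x * y + P2 * (r2 + 2.0 * x * x)
    yd = y * radial + P1 * (r2 + 2.0 * y * y) + 2.0 * P2 * x * y
    return xd, yd


def undistort_pixel(u, v, iterations=10):
    """Map one observed (distorted) pixel to its undistorted pixel position."""
    xd = (u - CX) / FX
    yd = (v - CY) / FY
    x, y = xd, yd  # initial guess: no distortion
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + K1 * r2 + K2 * r2 * r2
        dx = 2.0 * P1 * x * y + P2 * (r2 + 2.0 * x * x)
        dy = P1 * (r2 + 2.0 * y * y) + 2.0 * P2 * x * y
        # Solve the forward model for (x, y) using the previous estimate.
        x = (xd - dx) / radial
        y = (yd - dy) / radial
    return x * FX + CX, y * FY + CY


def undistort_points(points):
    """Undistort a list of (u, v) pixel coordinates, e.g. contour points."""
    return [undistort_pixel(u, v) for (u, v) in points]


if __name__ == "__main__":
    print(undistort_points([(600.0, 50.0), (320.0, 240.0)]))
```

The cost here scales with the number of points rather than the number of pixels, so a radius fitted to undistorted contour points would avoid remapping the frame at all. Whether that is accurate enough near the image edges would still need checking against real data.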

And I'm still wondering about what's going on in psmove_fusion.
