Develop: More Controlled Motion

ma...@makr.zone

- Expose Axes to the GUI (this would also help virtually every OpenPnP newbie get this right!)

- Introduce multiple Axis subclasses: RawAxis, TransformedAxis and its many subclasses.

- AxisTransforms are no longer "children" of one single Axis but separate Axes that are based on other Axes and apply a transformation.

- Multi-Axis Transforms are possible, i.e. a transformed Axis can be based on more than one input Axis.

- Make Squareness Compensation a multi AxisTransform based on X and Y.

- Of course make the shared Z transforms like CamTransform available again.

- Some Transforms have multiple outputs (such as the CamTransform), so an OutputAxis could be based on the transforming Axis and expose the other result(s) such as the negated CamTransform.

- As a further example: If Renee's Revolver Head makes it into reality, it would be relatively easy to add the needed revolver transformation.

- Reverse the current Axis Mapping, i.e....

- ... Choose the X and Y axis on the Head with simple drop downs, and the Z and C axes on each HeadMountable.

- ... Leave an Axis empty to not map it.

- Handle axis transforms on the HeadMountable, before calling the driver (like it is done with runout compensation for instance).

- Remove all Axis handling including squareness compensation from the GCodeDriver.

- Whether this could all be migrated using an elaborate @Commit handler, I don't know yet.
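To make the multi-axis idea concrete, here is a minimal Python sketch of Squareness Compensation as a transform based on two input axes. The class names, the dict-based coordinates and the sign convention (transformed X = raw X + factor × raw Y) are assumptions for illustration, not OpenPnP's actual classes.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical sketch: names and sign convention are illustrative only.


@dataclass
class RawAxis:
    name: str  # controller axis, e.g. "x" or "y"


@dataclass
class NonSquarenessTransform:
    """Multi-input transform: the transformed X depends on raw X *and* raw Y."""
    x_axis: RawAxis
    y_axis: RawAxis
    factor: float  # assumed: X shift per unit of Y travel

    def to_transformed(self, raw: Dict[str, float]) -> Dict[str, float]:
        # Forward direction: raw controller coordinates -> machine coordinates.
        x, y = raw[self.x_axis.name], raw[self.y_axis.name]
        return {self.x_axis.name: x + self.factor * y, self.y_axis.name: y}

    def to_raw(self, transformed: Dict[str, float]) -> Dict[str, float]:
        # Reverse direction: machine coordinates -> raw controller coordinates.
        x, y = transformed[self.x_axis.name], transformed[self.y_axis.name]
        return {self.x_axis.name: x - self.factor * y, self.y_axis.name: y}


squareness = NonSquarenessTransform(RawAxis("x"), RawAxis("y"), factor=0.001)
raw = squareness.to_raw({"x": 100.0, "y": 200.0})
print(raw)
```

Because the raw X depends on Y, a missing (NaN) Y would poison X as well, so a transform like this always needs complete input coordinates.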

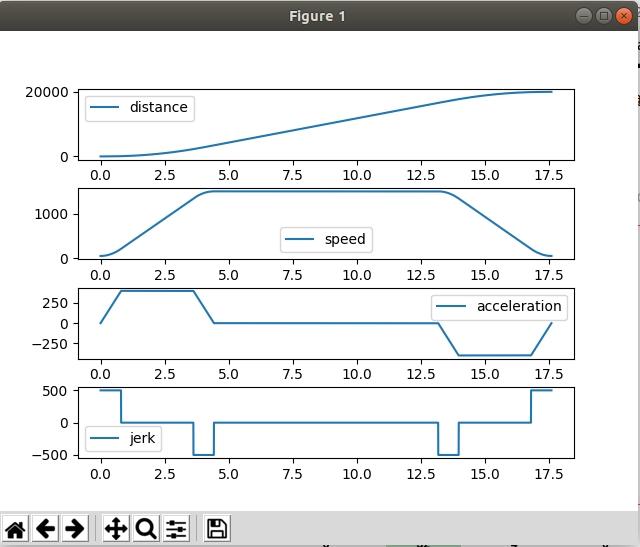

- Now that we have the RawAxis in our hands, add properties for nominal feedrate, acceleration and jerk.

- Also Backlash Compensation can be configured on the axis so it is available for all drivers.

- GCode driver can then not only scale feedrate, but also acceleration (and jerk, for controllers that have it).

- Note that if we want 50% speed, we need to scale acceleration to 25% (squared) and jerk to 12.5% (cubed).

- I would also break out the waitForMovesToComplete() (M400 GCode) from the moveTo() and call it from the necessary places. Now the driver/controller knows when to wait for a move to complete and where it can smooth the ride.

- Add a GCodeDriver subclass to go even further:

- I can simulate jerk control by sending a series of path segments with stepped accelerations. This is not 100% smooth but much better. Watch the video of standard Smoothieware Constant Acceleration vs. Simulated Jerk Control via G-code segments with 20ms time steps. The moves take the same time, i.e. same average acceleration, so it's a fair comparison:

https://makr.zone/wp-content/uploads/2020/04/SimulatedJerkControl.mp4

- I can do more than what an S-curve controller could do: e.g. asymmetric acceleration/deceleration = fast start but smooth stop, when I know I need a vibration-free standstill.

- Also I don't want a true S-curve (too slow for long moves), I want more of the "Integral symbol"-Curve, i.e. with a straight maximum acceleration segment in the middle (that is what I simulated in the video).

- Many more ideas, not spilling everything here, hehe.
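One way to compute such stepped segments, sketched in Python under stated assumptions: the target is the "Integral symbol" profile (jerk-limited rise to a maximum acceleration, a straight constant-acceleration middle, jerk-limited fall), and each time slice of at most 20 ms becomes one constant-acceleration segment whose exit velocity matches the smooth curve. Function names and units (mm/s, mm/s², mm/s³) are illustrative; turning the tuples into controller-specific G-code is not shown.

```python
import math
from typing import List, Tuple


def integral_velocity(t: float, dv: float, a_max: float, jerk: float) -> float:
    """Velocity at time t of an 'integral symbol' ramp from 0 to dv:
    jerk-limited rise, constant acceleration, jerk-limited fall."""
    a = min(a_max, math.sqrt(dv * jerk))  # peak acceleration actually reached
    t_ramp = a / jerk                     # duration of each jerk ramp
    t_end = dv / a + a / jerk             # total duration of the ramp
    if t <= 0.0:
        return 0.0
    if t < t_ramp:
        return 0.5 * jerk * t * t
    if t < t_end - t_ramp:
        return 0.5 * a * t_ramp + a * (t - t_ramp)
    if t < t_end:
        return dv - 0.5 * jerk * (t_end - t) ** 2
    return dv


def stepped_segments(dv: float, a_max: float, jerk: float,
                     dt: float = 0.020) -> List[Tuple[float, float, float]]:
    """Split the ramp into equal time slices of at most dt seconds, each driven
    with one constant acceleration. Returns (acceleration, exit velocity,
    distance) per slice; velocities match the smooth profile at slice ends."""
    a = min(a_max, math.sqrt(dv * jerk))
    t_end = dv / a + a / jerk
    n = max(1, math.ceil(t_end / dt - 1e-9))
    step = t_end / n
    segments = []
    for k in range(n):
        v0 = integral_velocity(k * step, dv, a_max, jerk)
        v1 = integral_velocity((k + 1) * step, dv, a_max, jerk)
        segments.append(((v1 - v0) / step, v1, 0.5 * (v0 + v1) * step))
    return segments


for accel, v_exit, dist in stepped_segments(500.0, 5000.0, 100000.0):
    print(f"a={accel:7.1f} mm/s²  v={v_exit:6.1f} mm/s  s={dist:6.3f} mm")
```

Because each slice uses the average acceleration of the smooth curve over that slice, the stepped version hits the same velocities at the slice boundaries and, for this symmetric ramp, covers the same total distance; only the acceleration inside each slice is flattened.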

Marek T.

But

1. Don't you think your vacuum is too weak, or your nozzle too small, for the part you show? I think my machine is faster and sharper than yours, but I've never seen parts slip as badly as in your video.

2. Sending M204 together with G0 (in moveTo) instead of F really fixes a lot of problems. Pure speed adjustment is not very useful for PnP.
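Combining the scaling rule from the original post with the M204 suggestion: if a move is replayed in slow motion at a speed factor s, time is stretched by 1/s, so velocity scales with s, acceleration with s² and jerk with s³. The Python sketch below assumes a Smoothieware-style M204 S<acceleration> command (mm/s²) and feedrates in mm/min; the function names are hypothetical, and other controllers may use different M204 parameters.

```python
from typing import List, Tuple


def scaled_limits(speed: float, feedrate: float, acceleration: float,
                  jerk: float) -> Tuple[float, float, float]:
    """Scale nominal limits by a speed factor in (0, 1]:
    velocity ~ s, acceleration ~ s**2, jerk ~ s**3."""
    if not 0.0 < speed <= 1.0:
        raise ValueError("speed factor must be in (0, 1]")
    return feedrate * speed, acceleration * speed ** 2, jerk * speed ** 3


def move_commands(x: float, y: float, speed: float,
                  feedrate: float, acceleration: float) -> List[str]:
    """Send the scaled acceleration together with the move, not only a scaled F."""
    f, a, _ = scaled_limits(speed, feedrate, acceleration, jerk=0.0)
    return [f"M204 S{a:.0f}", f"G0 X{x:.4f} Y{y:.4f} F{f:.0f}"]


print(move_commands(10.0, 20.0, speed=0.5, feedrate=8000.0, acceleration=2000.0))
# ['M204 S500', 'G0 X10.0000 Y20.0000 F4000']
```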

Jarosław Karwik

Jason von Nieda

--

You received this message because you are subscribed to the Google Groups "OpenPnP" group.

To unsubscribe from this group and stop receiving emails from it, send an email to openpnp+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/openpnp/708392a2-0e3b-43af-9313-d6e8f64aa0d8%40googlegroups.com.

Keith Hargrove

I went from LinuxCNC to Smoothie. I am using the same drivers for both; I just made a DB25 breakout for the Smoothie clone (step, dir etc.). My bot is old (I made it over 10 years ago); it is big and heavy with smallish steppers, but it gets moving very fast. Just looking at the camera, I have noticed a lot of bounce. I do not remember getting any of that before, but this system also sat for 10 years, so I was thinking the belts might have gotten weak. Before, I could run at 100% speed just putting parts from a strip onto a PCB and from the PCB back into the strip. Now, with just raw G-code done by hand, I can't get anywhere near that accuracy. I was thinking it was just due to the aging of the bot, but now I think the Smoothie might be a lot of the problem. I know I had to slow my Z to almost half to keep the stepper from skipping once in a while. I never had that with LinuxCNC. Overall I have been disappointed with Smoothie. I went to Smoothie just to do OpenPnP, as it seems to be what it is built around. If I get everything working, I may put back a real CNC motion controller. All my bots have encoders (not wired yet), but I am used to mills and such, where closed loop is the norm.

I was wondering if you could add an encoder to a motor or on an idler pulley. Then you could compare and see if it matches (I think it will).

Marek T.

However, Jarosław's controller project sounds even better.

ma...@makr.zone

ma...@makr.zone


Marek T.

Balázs buglyó

That's also my plan (in some free time): to flash my spare MKS 1.3 LPC1768 board with Marlin to see how it compares to the Smoothie firmware.



Marek T.

Please tell me, do you use thermistor inputs to read vacuum sensors with Marlin?

(Mark, only this question and we shut up about Marlin here).

Balázs buglyó


Marek T.

Let's meet later in some new separated thread when you're after some tests and decision how to go. I'll also do some tests here.

ma...@makr.zone

Now, since we will (currently) *always* want the initial acceleration and jerk values to be 0,

We set P_i = P_0 = P_1 = P_2 (initial velocity), and P_t = P_3 = P_4 = P_5 (target velocity),

which, after simplification, resolves to ...
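For readers who want to check such a reduction independently: with a quintic Bézier velocity curve over normalized time s in [0, 1], setting P_0 = P_1 = P_2 = P_i and P_3 = P_4 = P_5 = P_t collapses the Bernstein form to P_i + (P_t - P_i)(10s³ - 15s⁴ + 6s⁵). The Python check below compares both forms numerically; it is a sketch written for this note, not Marlin's code.

```python
from math import comb
from typing import Sequence


def bezier_velocity(s: float, p: Sequence[float]) -> float:
    """Quintic Bézier curve (Bernstein form) with control points p[0..5], s in [0, 1]."""
    return sum(comb(5, i) * (1 - s) ** (5 - i) * s ** i * p[i] for i in range(6))


def simplified_velocity(s: float, v_i: float, v_t: float) -> float:
    """The same curve with P0 = P1 = P2 = v_i and P3 = P4 = P5 = v_t, as a polynomial."""
    return v_i + (v_t - v_i) * (10 * s ** 3 - 15 * s ** 4 + 6 * s ** 5)


v_i, v_t = 20.0, 150.0
for k in range(11):
    s = k / 10
    assert abs(bezier_velocity(s, [v_i] * 3 + [v_t] * 3)
               - simplified_velocity(s, v_i, v_t)) < 1e-9
```

Differentiating the bracket gives 30s²(1 - s)² and 60s(1 - s)(1 - 2s), both zero at s = 0 and s = 1, so acceleration and jerk start and end at zero. The peak acceleration, at s = 0.5, is 30/16 = 1.875 times the average acceleration (P_t - P_i)/T.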

- This will not work with my simulation. Marlin will need to be rebuilt with S Curves disabled.

- They also just substitute the constant acceleration trapezoid ramp with a standard-shaped S-Curve with the same average acceleration as the ramp, completely oblivious of how large the velocity change is. This will result in extremely soft acceleration on large moves.

- Might be fine for 3D printing micro-moves but no good for PnP. We need an "Integral symbol" shape!
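A rough Python comparison of the two shapes at the same peak acceleration, under simplifying assumptions (a single velocity change dv, no cruise phase, illustrative limits): a quintic S-curve v_i + dv(10s³ - 15s⁴ + 6s⁵) peaks at 1.875 times its average acceleration, so it needs 1.875·dv/a_max, while the "Integral symbol" ramp needs dv/a_max + a_max/jerk. The function names and numbers are hypothetical.

```python
import math


def s_curve_ramp_time(dv: float, a_max: float) -> float:
    """Quintic S-curve ramp whose *peak* acceleration is a_max
    (its peak is 1.875x the average acceleration dv / T)."""
    return 1.875 * dv / a_max


def integral_ramp_time(dv: float, a_max: float, jerk: float) -> float:
    """Jerk-limited rise to a_max, straight constant-acceleration middle,
    jerk-limited fall. Small dv never reaches a_max and degrades to a pure S."""
    a = min(a_max, math.sqrt(dv * jerk))
    return dv / a + a / jerk


for dv in (50.0, 500.0, 2000.0):  # velocity change in mm/s
    s_curve = s_curve_ramp_time(dv, a_max=5000.0)
    integral = integral_ramp_time(dv, a_max=5000.0, jerk=1e6)
    print(f"dv={dv:6.0f} mm/s  S-curve {s_curve * 1000:6.1f} ms  integral {integral * 1000:6.1f} ms")
```

The gap grows with dv: for large moves the integral shape approaches the constant-acceleration time, while the S-curve stays 1.875 times slower.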

ma...@makr.zone

Hi Mark, I'm fully on board with all of this. I think it's the right direction to improve the current, extremely limited motion system.

ma...@makr.zone

dc42

Jason von Nieda


ma...@makr.zone

Hi dc42

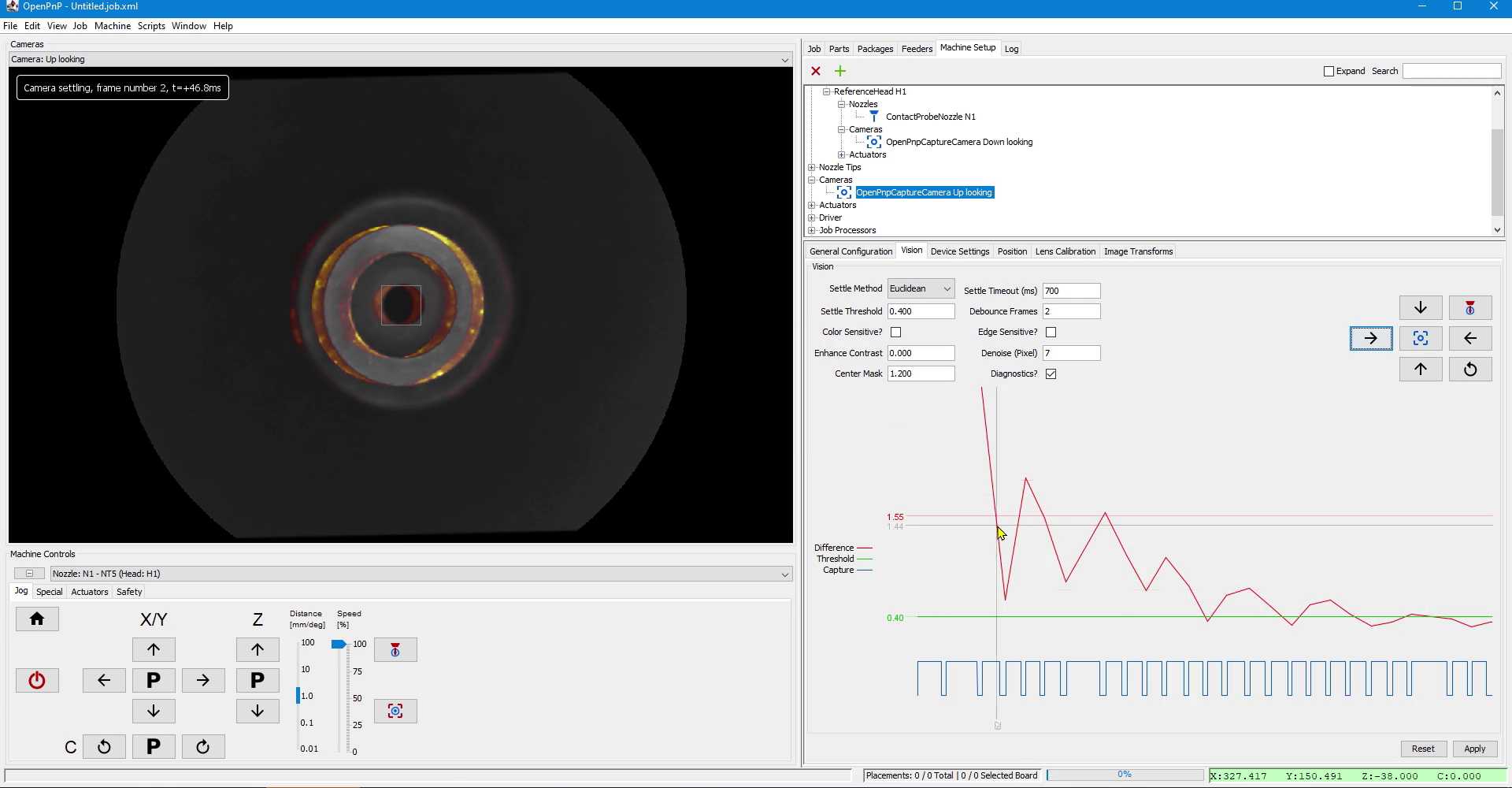

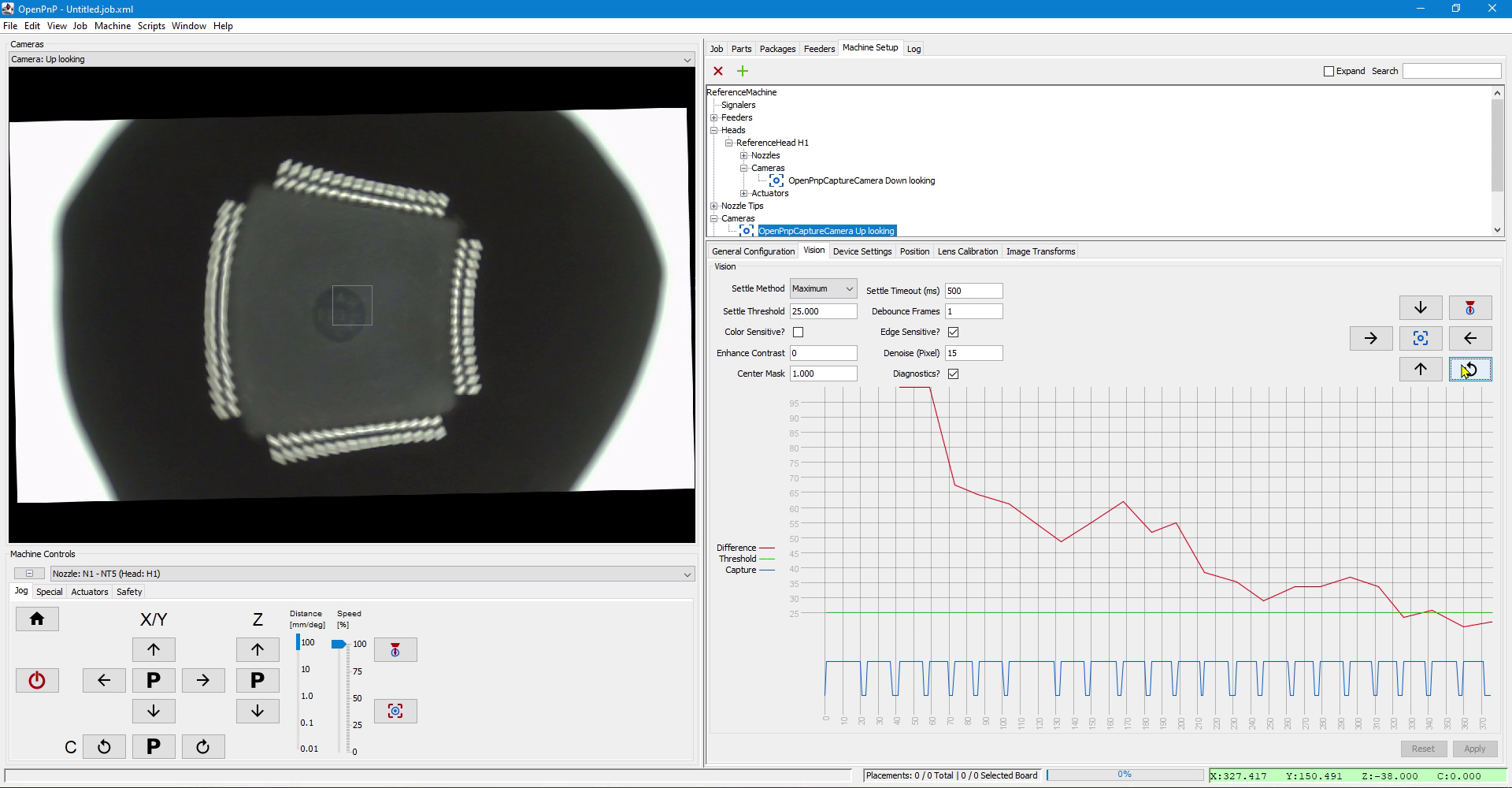

Yep, the Eigenfrequency.

Already thought about how to measure this per Axis (you can already glimpse the sinusoid in the Camera Settle graphs) and then try and suppress it. But I thought it goes both ways (symmetrically), i.e. to also increase jerk/acceleration when (or slightly before) the pendulum wants to swing back.

I thought I could integrate the product with the sin() and cos() of the Eigenfrequency over the past/future moments of jerk/acceleration, get amplitude and phase and feed it back negatively.
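The sin()/cos() integration can be sketched in a few lines of Python. Assuming an undamped oscillation at the eigenfrequency f (ω = 2πf), the swing left over after an acceleration profile a(t) ends is proportional to the combined magnitude of ∫a(t)·cos(ωt)dt and ∫a(t)·sin(ωt)dt, and their ratio gives the phase. The sampling scheme, names and numbers are assumptions for illustration.

```python
import math
from typing import Sequence, Tuple


def excitation(accel: Sequence[float], dt: float, freq_hz: float) -> Tuple[float, float]:
    """Project an acceleration profile (one sample per dt seconds) onto sin/cos
    at the eigenfrequency. Returns (amplitude, phase in radians)."""
    w = 2.0 * math.pi * freq_hz
    c = s = 0.0
    for k, a in enumerate(accel):
        t = (k + 0.5) * dt  # midpoint of sample interval k
        c += a * math.cos(w * t) * dt
        s += a * math.sin(w * t) * dt
    return math.hypot(c, s), math.atan2(s, c)


dt = 0.0001                   # 0.1 ms sampling
full_period = [1000.0] * 400  # 40 ms of 1000 mm/s², one period at 25 Hz
half_period = [1000.0] * 200  # 20 ms, half a period
print(excitation(full_period, dt, 25.0)[0])  # close to zero: no residual swing
print(excitation(half_period, dt, 25.0)[0])  # about 1000 * 2 / (2*pi*25) = 12.73
```

A constant-acceleration phase lasting exactly one eigenperiod excites no residual swing, while half a period leaves the largest one; the amplitude and phase are what would be fed back negatively.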

Found this helpful to understand the principles without too many formulas :-)

https://youtu.be/spUNpyF58BY

_Mark


dc42


RENEE Berty

ma...@makr.zone

Jason von Nieda


ma...@makr.zone

> Have at it Mark. I trust you'll get it right :)

Thanks, Jason

I've already had my run-ins with the tests, and your new Gcode Server is a great addition because it tests the relevant driver ;-) The tests correctly caught some unfinished conversion and demonstrated that it works!

> if, as you find things that are changing significantly, ...

This is half answer, half note to self. If you see something that is irking you, just tell me.

Outside of the obvious Axes rework, up until now, there are these things that are changing in semantics (rather than just hidden Axis implementation):

- I want all HeadMountable moveTo() calls to go through the head; currently the moveToSafeZ() variants went directly to the driver. Now moveToSafeZ() is just a wrapper for moveTo(). The following will make clear why:

- BIGGEST CHANGE: the headOffset translation (back and forth) must be handled by the HeadMountable rather than by the driver. I believe that's the correct place to encapsulate this. This will allow for future variable offsets, like Renee's revolver head.

- Axis mapping and transform will be coordinated by the Head (using functionality on the HeadMountable), and it will call all the drivers that have one or more axes mapped in the operation.

- The order of calling drivers is always guaranteed to match the one in Machine Configuration. The user has arrow buttons to reorder them.

- No more NaNs to the driver. They must be substituted by the HeadMountables before head offset translation and axis transformation, as this is essential for multi-axis transforms (like Non-Squareness Compensation) to work.

- The drivers will work purely with raw coordinates.

- Visual Homing is now in the Head and available for all drivers. You could even Visual Home a machine that has the X axis on a different driver than the Y axis.

- It works with transformed axes (including Non-Squareness Compensation), therefore the fiducial coordinates will finally match. For existing machine.xml files, the fiducial coordinate is migrated to "unapply" Squareness Compensation, so captured coordinates on existing machines will not be broken.

- Visual Homing now aborts the home() when Vision fails (I had some near-miss experiences when I tested Camera Settle and it was misconfigured).

I saw some existing test cases that I believe will fail due to 2.

I will need to adjust these and try to add some new testing there.
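The order of operations in the list above can be sketched as follows. This is a hypothetical Python illustration, not OpenPnP's Java code: coordinates are dicts keyed by axis name, the head offset is assumed to be subtracted on the way to the head, and to_raw stands in for whatever (possibly multi-axis) transforms are configured.

```python
import math
from typing import Callable, Dict, List, Tuple

Coords = Dict[str, float]


def plan_move(target: Coords, current: Coords, head_offset: Coords,
              to_raw: Callable[[Coords], Coords],
              axis_driver: Dict[str, str],
              driver_order: List[str]) -> List[Tuple[str, Coords]]:
    # 1. No NaNs to the driver: fill unspecified coordinates from the current location.
    filled = {k: current[k] if math.isnan(v) else v for k, v in target.items()}
    # 2. The HeadMountable translates by its head offset (sign convention assumed).
    head = {k: v - head_offset.get(k, 0.0) for k, v in filled.items()}
    # 3. Axis transforms, which may combine several axes, yield raw coordinates.
    raw = to_raw(head)
    # 4. Group raw coordinates per driver, in the configured driver order,
    #    skipping drivers that have no axis in this move.
    plan = []
    for driver in driver_order:
        coords = {axis: v for axis, v in raw.items() if axis_driver[axis] == driver}
        if coords:
            plan.append((driver, coords))
    return plan


def squareness(h: Coords) -> Coords:
    # Hypothetical non-squareness transform: X corrected by 0.01 per unit of Y.
    return {**h, "x": h["x"] - 0.01 * h["y"]}


plan = plan_move(
    target={"x": 10.0, "y": math.nan, "z": -5.0, "c": math.nan},
    current={"x": 0.0, "y": 20.0, "z": 0.0, "c": 90.0},
    head_offset={"x": 1.0, "y": 2.0},
    to_raw=squareness,
    axis_driver={"x": "main", "y": "main", "z": "main", "c": "second"},
    driver_order=["main", "second"],
)
print(plan)
```

Step 1 has to come before step 3: with a NaN Y, the squareness transform would turn X into NaN as well.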

_Mark


Jarosław Karwik


Jason von Nieda


Jason von Nieda


Jarosław Karwik


ma...@makr.zone

Hi Jarosław

> quite many interesting features were in GCode driver only.

This will change now. Most features will be outside the driver and be available for all drivers/machines.

> I mean that instead of sending several G-codes for all small operations - you would send something like - "pick/place component on certain Z and return result"? This was the way my previous Chinese machine was working on protocol level.

OpenPnP has this kind of abstraction, starting from the Job Processor steps. They are really extremely basic and versatile. The "Reference" classes in OpenPnP are only one possible implementation. But if you wanted to replace those, you would need to replace large chunks of code with your own. The important message is that it is possible within OpenPnP (unlike any other framework I know).

_Mark


ma...@makr.zone

ma...@makr.zone

- Axis Mapping (obviously)

- Axis Transforms

- Visual Homing

- Non-Squareness Compensation

ma...@makr.zone

Hi Duncan

thanks a lot!

It seems to work.

Some impressions of your machine after fully automatic migration:

Axis mapping using drop downs:

Actuator Mapping:

Simpler management through mapping. Only what is mapped can have Gcode:

_Mark


Jason von Nieda


Duncan Ellison


ma...@makr.zone

Hi Duncan,

Hi Everybody,

your feedback prompted some deeper scrutiny and I actually changed some things again. Thanks for that. :-)

> Will be interesting to see if this breaks anything.

The only things now known to break are these:

- Same Axis mapped to multiple controllers.

- Features of one Actuator distributed across multiple controllers, e.g. boolean-actuate valve on controller 1, read back the vacuum level on controller 2.

- Use of OffsetTransform, as announced earlier (not really broken but needs manual work after migrate).

If 2. is a problem, I'd rather create a new sub-class DelegatedActuator that calls on a second actuator for select functions.

Naturally Script access to machine objects might need to be adapted. Some APIs have changed and if you're doing something with them, you need to adapt your scripts:

- All the already mentioned capabilities and properties that moved out of the driver to the various machine objects, e.g. Axis Mapping, Axis Transform, Non-Squareness, Visual Homing, Backlash offsets.

- All driver coordinates are now raw controller coordinates, i.e. all transformations are done outside of drivers, in HeadMountables and Head.

- Most notably, the Head offset is now handled on the HeadMountable, i.e. no longer subtracted in the driver, so be careful if you used driver.moveTo() directly. But in this case your scripts will fail anyway due to the changed signature.

- All driver coordinates are now handled using a new AxesLocation rather than Location, so the mapped axis is always specified along with the coordinate. The multi-dimensional implementation is now done using simple for() loops rather than four times duplicated code. This is also ready for future improvements where we might want to move more than one axis of the same Axis.Type at the same time, e.g. multiple rotation axes of a multi-nozzle head on the trip to alignment (smart motion blending). Or even ganged picks and ganged alignments in the far future ;-).

- ReferenceDriver.home() is now done with the Machine instead of the Head, as a machine with multiple Heads should not home the same controller multiple times.

- Instead, Visual Homing is now done on the Head (if enabled).

- The sign of Non-Squareness Compensation has flipped (it is now a transformation like all the others, going from raw controller axes forward).
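A minimal Python sketch of the AxesLocation idea: each coordinate travels with its axis, so operations become simple loops and two rotation axes of the same type can move together. The class, its methods and the axis-to-letter mapping are hypothetical and do not reproduce OpenPnP's AxesLocation API.

```python
from typing import Dict, List


class AxesLocation:
    """Hypothetical: coordinates keyed by axis name instead of fixed X/Y/Z/C fields."""

    def __init__(self, coords: Dict[str, float]):
        self.coords = dict(coords)

    def moved_axes(self, previous: "AxesLocation", tolerance: float = 1e-6) -> List[str]:
        # One loop instead of four copies of "if x changed ... if y changed ...".
        return [axis for axis, v in self.coords.items()
                if axis not in previous.coords
                or abs(v - previous.coords[axis]) > tolerance]

    def gcode_words(self, axes: List[str], letters: Dict[str, str]) -> str:
        # Map each axis to the controller letter configured for it.
        return " ".join(f"{letters[axis]}{self.coords[axis]:.4f}" for axis in axes)


previous = AxesLocation({"x": 0.0, "y": 0.0, "c1": 0.0, "c2": 0.0})
target = AxesLocation({"x": 10.0, "y": 0.0, "c1": 90.0, "c2": -45.0})
moved = target.moved_axes(previous)
print("G0 " + target.gcode_words(moved, {"x": "X", "y": "Y", "c1": "A", "c2": "B"}))
# G0 X10.0000 A90.0000 B-45.0000
```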

> Maybe as well to warn everyone to take a backup of machine.xml first?

Always do that when upgrading OpenPnP!! Do not expect an extra warning.

_Mark


ma...@makr.zone

Hi Mike,

I'm referring to your PM but posting this on the list for others to see.

Some impressions from the result of your machine's migration.

As you see, it has created the four-nozzle dual rack-and-pinion Z configuration:

Btw, the MappedAxis can do any axis scaling, axis offset, but also combinations, like full axis reversal for instance, in an easy to understand way by just relating two points on the axis to each other in "input" --> "output" manner.
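The two-point relation can be sketched like this (hypothetical Python, not the MappedAxis implementation): two input --> output pairs define a linear map, which covers scaling, offset and reversal at once, and it can be inverted as long as the two output points differ.

```python
from typing import Callable, Tuple


def two_point_mapping(in1: float, out1: float, in2: float, out2: float
                      ) -> Tuple[Callable[[float], float], Callable[[float], float]]:
    """Return (forward, reverse) for the line through (in1, out1) and (in2, out2)."""
    if in1 == in2 or out1 == out2:
        raise ValueError("the two points must differ in both input and output")
    scale = (out2 - out1) / (in2 - in1)

    def forward(x: float) -> float:
        return out1 + (x - in1) * scale

    def reverse(y: float) -> float:
        return in1 + (y - out1) / scale

    return forward, reverse


# Full axis reversal: 0 --> 0 and 1 --> -1.
flip, _ = two_point_mapping(0.0, 0.0, 1.0, -1.0)
# Scale by 2 plus an offset of 10: 0 --> 10 and 100 --> 210.
fwd, rev = two_point_mapping(0.0, 10.0, 100.0, 210.0)
print(flip(5.0), fwd(50.0), rev(110.0))  # -5.0 110.0 50.0
```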

Migrated Visual Homing:

Axis mapping with simple drop-downs:

Actuators mapped to second driver:

Looks good!

Thanks again for the machine.xml

@Everybody, the call for your OpenPnP 2.0 machine.xml file still stands!

_Mark

On 13.05.2020 at 17:24, M. Mencinger wrote:

> I refer to OpenPnP:
> What I now need is your machine.xml, especially if you have special mappings, special transforms, shared axes, sub-drivers, moveable actuators etc. pp.
>
> I have smoothie 6axis mapping - OpenPnP 2.0 see attached
> Please let me know how it works out.
>
> Thanks
>
> --
> Mike

bert shivaan


Marek T.

ma...@makr.zone

Sorry Bert, only 2.0. The XML reader in OpenPnP can't be switched into "tolerant" mode, unfortunately, so migrating this is a hassle.

But you can send me a partially stripped-down version that opens in 2.0. It's mostly the nozzle tip section that changes; you could just delete that for this test, plus handle any remaining errors when trying to open it. Perhaps you have a different computer to do this on, so your original setup is safe.

_Mark


bert shivaan


ma...@makr.zone

Hi Bert

I think I got enough examples for now, some in PM, thanks. All the important Transforms, Non-Squareness and Multi-Driver setups are covered.

If somebody has a ScaleTransform, please send it :-)

_Mark


ma...@makr.zone

Hi Jason

I wanted to add some tests for the new axis & motion stuff (future and present). But foraging into this has turned into a veritable side show.

See the video:

https://makr.zone/SimulatedImperfectMachine.mp4

As you can see, I can simulate many more aspects of the Machine. And btw. this is all with the new Axes implementation, the basis of which is the auto-migrated default machine.xml. The new framework for this is in a new sub-class of ReferenceMachine, so we can test axes across multiple drivers. To enable it, one needs to change the machine class in the .xml.

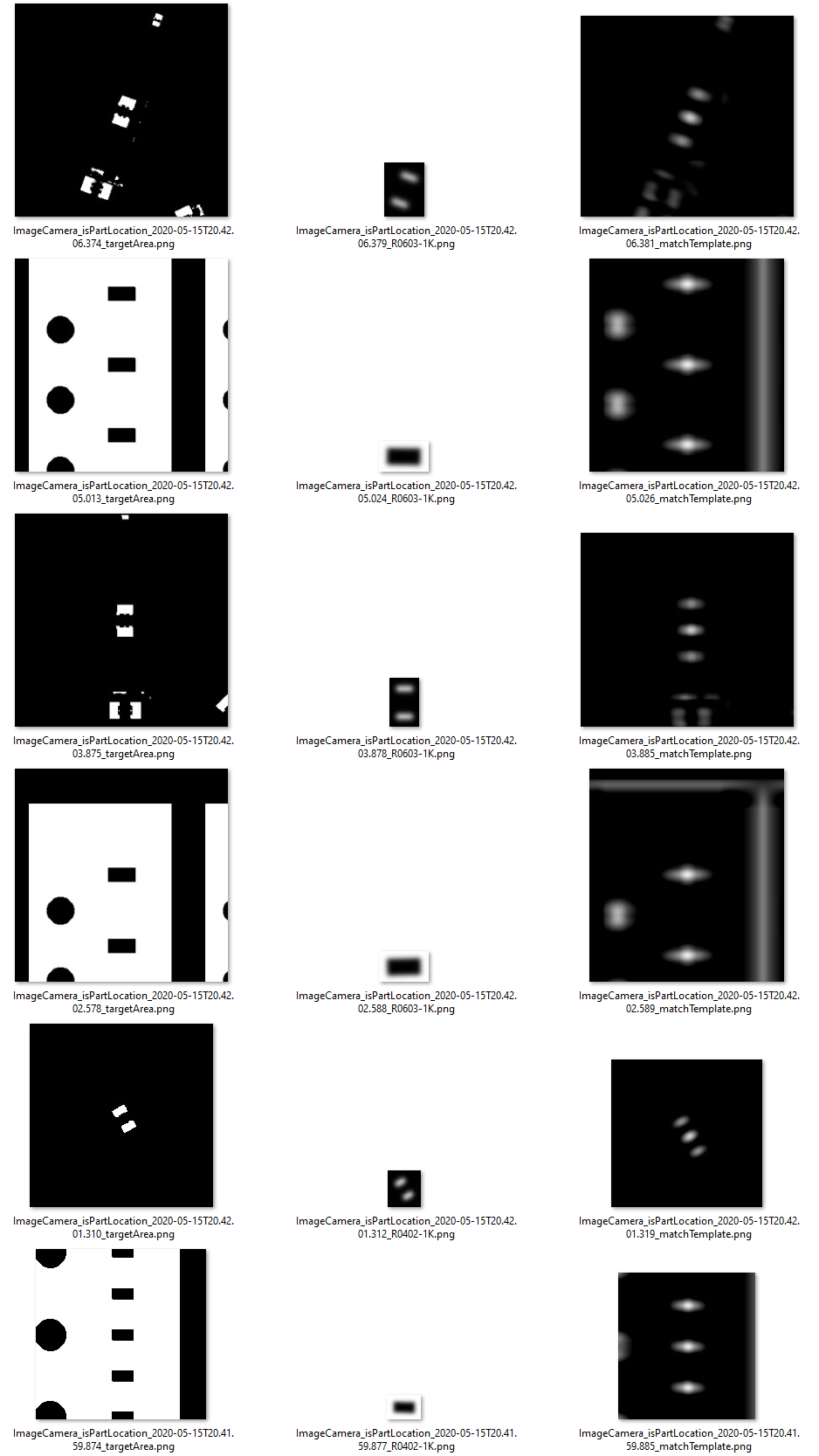

What is missing is the "human-less" success monitoring. I'd like to add little Vision operations that look at the pick and place location and match the footprint: rendered black as a template for the black strip pockets on Pick, and rendered like in Alignment as a so-so template for solder lands on Place. They would be triggered by the Vacuum Valve actuate() in the Null driver. This could test both the location and rotation.

If this works, I guess I could then create a TestUnit. But then we need to talk about test duration or how to run tests at different "scrutiny" levels.

Jason von Nieda


Mike M.

ma...@makr.zone

Jason,

is there a way to determine the current placement in the Job from outside?

More specifically, I need the PartAlignmentOffset for the current part on the nozzle, if it was aligned, as an offset for the Place check, obviously. So far I've found a way for most things, but this bugger really eludes me.

Thanks for your help!

If you're interested: the system already works, as currently all the alignments are perfect in the SimulatedUpCamera, but I'd like to change that in order to really test Alignment in the simulated imperfect machine.

Just to show you how the PnP location check works, this is a series of alternating Pick and Place triplets of 1) what it sees at the location (PCB HSV-masked to just see the solder lands), 2) the blurred part template, 3) the match map.

It's also a proof-of-concept of the "solder land to bottom vision match" we talked about. If you blur one of the two, it will find the natural sweet spot, even if the two don't really match in individual pad/land size. This is nicely visible in the R0603 here:

_Mark

Jason von Nieda


ma...@makr.zone

Hi Jason

thanks. If I'm not mistaken, this data only lives transiently on the stack of the JobProcessor thread. No way to access it.

I propose eventually moving the alignment offsets to the Nozzle, where org.openpnp.spi.Nozzle.getPart() already is. The two belong together IMHO. This will also allow for ad-hoc alignment and accurate placement outside the JobProcessor. A "Place part at camera location" function could be useful during early machine setup and testing (at least with a Z probe or ContactProbeNozzle).
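A hypothetical Python sketch of that ownership: the nozzle keeps the part and its alignment offset together, so a place check (or a manual "Place part at camera location") can read the offset without reaching into the JobProcessor. Class and method names are illustrative and do not mirror org.openpnp.spi.Nozzle.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AlignmentOffset:
    dx: float         # mm
    dy: float         # mm
    drotation: float  # degrees


@dataclass
class Nozzle:
    name: str
    part: Optional[str] = None
    alignment: Optional[AlignmentOffset] = None

    def pick(self, part_id: str) -> None:
        self.part, self.alignment = part_id, None  # a fresh part is not aligned yet

    def align(self, offset: AlignmentOffset) -> None:
        if self.part is None:
            raise RuntimeError(f"{self.name}: nothing on the nozzle to align")
        self.alignment = offset

    def place(self) -> None:
        self.part, self.alignment = None, None  # offset is discarded with the part


nozzle = Nozzle("N1")
nozzle.pick("R0603")
nozzle.align(AlignmentOffset(0.05, -0.02, 1.5))
print(nozzle.alignment)  # readable from outside the job, e.g. by a place check
nozzle.place()
```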

_Mark


Jason von Nieda


ma...@makr.zone

Hi everybody (TinyG users?)

who uses MOVE_TO_COMPLETE_REGEX?

If the following link is still valid, then it is used for TinyG and it captures a response coming from the G0, G1 command, right?

<![CDATA[.*stat:3.*]]>

https://github.com/openpnp/openpnp/wiki/TinyG

Can anybody explain/provide an example log?

I'm asking because I'd like to separate the wait for a move to complete from the moveTo() command (as laid out in the original post of this topic). This is also important for multi (sub-) driver machines where axes are mixed across drivers. The wait for completion should only happen after all the controllers have been commanded to do their moves. Otherwise the moves will be done in sequence (slow and completely uncoordinated!). If this <![CDATA[.*stat:3.*]]> report is sent back without a prompt (i.e. directly as a result of the move itself), this might become a bad source of race conditions.

@tonyluken, is this the "unsolicited response" you meant?

What is wanted is a command that you can send to the controller at any time, that either reliably waits for completion before returning (like M400 on Smoothieware) or that sends a status report that can be used in a loop to wait (like the position report M114.1 on Smoothieware).
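The proposed split can be sketched as below: every controller is commanded first, then each one is waited on, so the moves run concurrently. The driver class and its move_to()/wait_for_completion() methods are hypothetical Python stand-ins for moveTo() and waitForMovesToComplete(); a real wait would use a blocking command such as M400 or a status-report loop.

```python
from typing import Dict, List, Tuple


class SketchDriver:
    """Hypothetical stand-in for a controller driver; records the call order."""

    def __init__(self, name: str, log: List[str]):
        self.name, self.log = name, log

    def move_to(self, coords: Dict[str, float]) -> None:
        # Queue the move on the controller and return immediately.
        self.log.append(f"{self.name}: move {coords}")

    def wait_for_completion(self) -> None:
        # A real driver would send e.g. M400 or poll a status report here.
        self.log.append(f"{self.name}: wait")


def coordinated_move(plan: List[Tuple[SketchDriver, Dict[str, float]]]) -> None:
    # First command every controller, so the moves run concurrently ...
    for driver, coords in plan:
        driver.move_to(coords)
    # ... and only then wait for each of them to finish.
    for driver, _ in plan:
        driver.wait_for_completion()


log: List[str] = []
coordinated_move([(SketchDriver("A", log), {"x": 10.0}),
                  (SketchDriver("B", log), {"c": 90.0})])
print(log)
# ["A: move {'x': 10.0}", "B: move {'c': 90.0}", 'A: wait', 'B: wait']
```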

I've tried to find answers myself, but gave up when I read this:

https://github.com/openpnp/openpnp/wiki/TinyG#quirks

... if this is all still true, how can people even use TinyG with OpenPnP at all?

Thanks.

_Mark

Tony Luken

2020-05-17 16:35:18.182 GcodeDriver DEBUG: sendCommand(G1 X112.4944 Y44.9927 F8000, 12000)...

2020-05-17 16:35:18.182 GcodeDriver TRACE: [serial://COM5] >> G1 X112.4944 Y44.9927 F8000

2020-05-17 16:35:18.202 GcodeDriver TRACE: [serial://COM5] << tinyg [mm] ok>

2020-05-17 16:35:18.202 GcodeDriver DEBUG: sendCommand(serial://COM5 G1 X112.4944 Y44.9927 F8000, 12000) => [tinyg [mm] ok>]

2020-05-17 16:35:18.202 GcodeDriver TRACE: [serial://COM5] << qr:31, qi:1, qo:0

2020-05-17 16:35:18.202 GcodeDriver DEBUG: sendCommand(G1 X112.0944 Y44.5927 F8000, 12000)...

2020-05-17 16:35:18.203 GcodeDriver TRACE: [serial://COM5] >> G1 X112.0944 Y44.5927 F8000

2020-05-17 16:35:18.218 GcodeDriver TRACE: [serial://COM5] << tinyg [mm] ok>

2020-05-17 16:35:18.218 GcodeDriver DEBUG: sendCommand(serial://COM5 G1 X112.0944 Y44.5927 F8000, 12000) => [qr:31, qi:1, qo:0, tinyg [mm] ok>]

2020-05-17 16:35:18.218 GcodeDriver TRACE: [serial://COM5] << qr:30, qi:1, qo:0

2020-05-17 16:35:18.218 GcodeDriver DEBUG: sendCommand(null, 250)...

2020-05-17 16:35:18.469 GcodeDriver DEBUG: sendCommand(serial://COM5 null, 250) => [qr:30, qi:1, qo:0]

2020-05-17 16:35:18.469 GcodeDriver DEBUG: sendCommand(null, 250)...

2020-05-17 16:35:18.720 GcodeDriver DEBUG: sendCommand(serial://COM5 null, 250) => []

2020-05-17 16:35:18.720 GcodeDriver DEBUG: sendCommand(null, 250)...

2020-05-17 16:35:18.954 GcodeDriver TRACE: [serial://COM5] << qr:31, qi:0, qo:1

2020-05-17 16:35:18.971 GcodeDriver DEBUG: sendCommand(serial://COM5 null, 250) => [qr:31, qi:0, qo:1]

2020-05-17 16:35:18.971 GcodeDriver DEBUG: sendCommand(null, 250)...

2020-05-17 16:35:19.103 GcodeDriver TRACE: [serial://COM5] << posx:112.094,posy:44.593,posz:0.000,posa:0.000,feed:8000.00,vel:0.00,unit:1,coor:1,dist:0,frmo:1,stat:3

2020-05-17 16:35:19.103 GcodeDriver TRACE: Position report: posx:112.094,posy:44.593,posz:0.000,posa:0.000,feed:8000.00,vel:0.00,unit:1,coor:1,dist:0,frmo:1,stat:3

2020-05-17 16:35:19.103 GcodeDriver TRACE: [serial://COM5] << qr:32, qi:0, qo:1

2020-05-17 16:35:19.222 GcodeDriver DEBUG: sendCommand(serial://COM5 null, 250) => [posx:112.094,posy:44.593,posz:0.000,posa:0.000,feed:8000.00,vel:0.00,unit:1,coor:1,dist:0,frmo:1,stat:3, qr:32, qi:0, qo:1]

2020-05-17 16:35:19.222 ReferenceCamera DEBUG: moveTo((111.997952, 44.592722, 0.000000, 0.000000 mm), 1.0)

2020-05-18 12:31:49.103 GcodeDriver DEBUG: sendCommand(G1 X103.4826 Y24.8941 F8000, 12000)...

2020-05-18 12:31:49.103 GcodeDriver TRACE: [serial://COM5] >> G1 X103.4826 Y24.8941 F8000

2020-05-18 12:31:49.122 GcodeDriver TRACE: [serial://COM5] << tinyg [mm] ok>

2020-05-18 12:31:49.123 GcodeDriver TRACE: [serial://COM5] << qr:31, qi:1, qo:0

2020-05-18 12:31:49.123 GcodeDriver DEBUG: sendCommand(serial://COM5 G1 X103.4826 Y24.8941 F8000, 12000) => [tinyg [mm] ok>]

2020-05-18 12:31:49.123 GcodeDriver DEBUG: sendCommand(G1 X103.0826 Y24.4941 F8000, 12000)...

2020-05-18 12:31:49.123 GcodeDriver TRACE: [serial://COM5] >> G1 X103.0826 Y24.4941 F8000

2020-05-18 12:31:49.139 GcodeDriver TRACE: [serial://COM5] << tinyg [mm] ok>

2020-05-18 12:31:49.139 GcodeDriver TRACE: [serial://COM5] << qr:30, qi:1, qo:0

2020-05-18 12:31:49.139 GcodeDriver DEBUG: sendCommand(serial://COM5 G1 X103.0826 Y24.4941 F8000, 12000) => [qr:31, qi:1, qo:0, tinyg [mm] ok>]

2020-05-18 12:31:49.139 GcodeDriver DEBUG: sendCommand(null, 250)...

2020-05-18 12:31:49.323 GcodeDriver TRACE: [serial://COM5] << posx:5.871,posy:1.412,posz:0.000,posa:0.000,feed:8000.00,vel:5314.83,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:49.324 GcodeDriver TRACE: Position report: posx:5.871,posy:1.412,posz:0.000,posa:0.000,feed:8000.00,vel:5314.83,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:49.390 GcodeDriver DEBUG: sendCommand(serial://COM5 null, 250) => [qr:30, qi:1, qo:0, posx:5.871,posy:1.412,posz:0.000,posa:0.000,feed:8000.00,vel:5314.83,unit:1,coor:1,dist:0,frmo:1,stat:5]

2020-05-18 12:31:49.390 GcodeDriver DEBUG: sendCommand(null, 250)...

2020-05-18 12:31:49.520 GcodeDriver TRACE: [serial://COM5] << posx:29.447,posy:7.084,posz:0.000,posa:0.000,feed:8000.00,vel:8000.00,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:49.521 GcodeDriver TRACE: Position report: posx:29.447,posy:7.084,posz:0.000,posa:0.000,feed:8000.00,vel:8000.00,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:49.640 GcodeDriver DEBUG: sendCommand(serial://COM5 null, 250) => [posx:29.447,posy:7.084,posz:0.000,posa:0.000,feed:8000.00,vel:8000.00,unit:1,coor:1,dist:0,frmo:1,stat:5]

2020-05-18 12:31:49.640 GcodeDriver DEBUG: sendCommand(null, 250)...

2020-05-18 12:31:49.717 GcodeDriver TRACE: [serial://COM5] << posx:54.649,posy:13.147,posz:0.000,posa:0.000,feed:8000.00,vel:8000.00,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:49.717 GcodeDriver TRACE: Position report: posx:54.649,posy:13.147,posz:0.000,posa:0.000,feed:8000.00,vel:8000.00,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:49.891 GcodeDriver DEBUG: sendCommand(serial://COM5 null, 250) => [posx:54.649,posy:13.147,posz:0.000,posa:0.000,feed:8000.00,vel:8000.00,unit:1,coor:1,dist:0,frmo:1,stat:5]

2020-05-18 12:31:49.891 GcodeDriver DEBUG: sendCommand(null, 250)...

2020-05-18 12:31:49.910 GcodeDriver TRACE: [serial://COM5] << posx:79.851,posy:19.209,posz:0.000,posa:0.000,feed:8000.00,vel:8000.00,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:49.910 GcodeDriver TRACE: Position report: posx:79.851,posy:19.209,posz:0.000,posa:0.000,feed:8000.00,vel:8000.00,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:50.106 GcodeDriver TRACE: [serial://COM5] << posx:100.847,posy:24.260,posz:0.000,posa:0.000,feed:8000.00,vel:3553.30,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:50.106 GcodeDriver TRACE: Position report: posx:100.847,posy:24.260,posz:0.000,posa:0.000,feed:8000.00,vel:3553.30,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:50.142 GcodeDriver DEBUG: sendCommand(serial://COM5 null, 250) => [posx:79.851,posy:19.209,posz:0.000,posa:0.000,feed:8000.00,vel:8000.00,unit:1,coor:1,dist:0,frmo:1,stat:5, posx:100.847,posy:24.260,posz:0.000,posa:0.000,feed:8000.00,vel:3553.30,unit:1,coor:1,dist:0,frmo:1,stat:5]

2020-05-18 12:31:50.142 GcodeDriver DEBUG: sendCommand(null, 250)...

2020-05-18 12:31:50.250 GcodeDriver TRACE: [serial://COM5] << qr:31, qi:0, qo:1

2020-05-18 12:31:50.304 GcodeDriver TRACE: [serial://COM5] << posx:103.428,posy:24.839,posz:0.000,posa:0.000,feed:8000.00,vel:301.57,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:50.304 GcodeDriver TRACE: Position report: posx:103.428,posy:24.839,posz:0.000,posa:0.000,feed:8000.00,vel:301.57,unit:1,coor:1,dist:0,frmo:1,stat:5

2020-05-18 12:31:50.392 GcodeDriver DEBUG: sendCommand(serial://COM5 null, 250) => [qr:31, qi:0, qo:1, posx:103.428,posy:24.839,posz:0.000,posa:0.000,feed:8000.00,vel:301.57,unit:1,coor:1,dist:0,frmo:1,stat:5]

2020-05-18 12:31:50.392 GcodeDriver DEBUG: sendCommand(null, 250)...

2020-05-18 12:31:50.398 GcodeDriver TRACE: [serial://COM5] << posx:103.083,posy:24.494,posz:0.000,posa:0.000,feed:8000.00,vel:0.00,unit:1,coor:1,dist:0,frmo:1,stat:3

2020-05-18 12:31:50.399 GcodeDriver TRACE: Position report: posx:103.083,posy:24.494,posz:0.000,posa:0.000,feed:8000.00,vel:0.00,unit:1,coor:1,dist:0,frmo:1,stat:3

2020-05-18 12:31:50.399 GcodeDriver TRACE: [serial://COM5] << qr:32, qi:0, qo:1

2020-05-18 12:31:50.643 GcodeDriver DEBUG: sendCommand(serial://COM5 null, 250) => [posx:103.083,posy:24.494,posz:0.000,posa:0.000,feed:8000.00,vel:0.00,unit:1,coor:1,dist:0,frmo:1,stat:3, qr:32, qi:0, qo:1]

2020-05-18 12:31:50.643 ReferenceCamera DEBUG: moveTo((103.029642, 24.494094, 0.000000, 0.000000 mm), 1.0)

Doppelgrau

2020-05-18 20:06:32.518 ReferenceNozzle DEBUG: N1.moveTo((281.751000, -67.225000, -15.000000, 0.000000 mm), 1.0)

2020-05-18 20:06:32.518 GcodeDriver DEBUG: sendCommand(G0 X279.7310 F32000, 10000)...

2020-05-18 20:06:32.518 GcodeDriver TRACE: [serial://ttyUSB0] >> G0 X279.7310 F32000

2020-05-18 20:06:32.528 GcodeDriver TRACE: [serial://ttyUSB0] << G0 X279.7310 F32000

2020-05-18 20:06:32.532 GcodeDriver TRACE: [serial://ttyUSB0] << tinyg [mm] ok>

2020-05-18 20:06:32.533 GcodeDriver DEBUG: sendCommand(serial://ttyUSB0 G0 X279.7310 F32000, 10000) => [G0 X279.7310 F32000, tinyg [mm] ok>]

2020-05-18 20:06:32.533 GcodeDriver DEBUG: sendCommand(G1 X279.8810 F1600, 10000)...

2020-05-18 20:06:32.533 GcodeDriver TRACE: [serial://ttyUSB0] >> G1 X279.8810 F1600

2020-05-18 20:06:32.538 GcodeDriver TRACE: [serial://ttyUSB0] << G1 X279.8810 F1600

2020-05-18 20:06:32.542 GcodeDriver TRACE: [serial://ttyUSB0] << tinyg [mm] ok>

2020-05-18 20:06:32.543 GcodeDriver DEBUG: sendCommand(serial://ttyUSB0 G1 X279.8810 F1600, 10000) => [G1 X279.8810 F1600, tinyg [mm] ok>]

2020-05-18 20:06:32.544 GcodeDriver DEBUG: sendCommand(null, 250)...

2020-05-18 20:06:32.723 GcodeDriver TRACE: [serial://ttyUSB0] << posx:279.881,posy:11.525,posz:-15.000,posa:0.000,feed:1600.00,vel:0.00,unit:1,coor:1,dist:0,frmo:1,stat:3

2020-05-18 20:06:32.798 GcodeDriver DEBUG: sendCommand(serial://ttyUSB0 null, 250) => [posx:279.881,posy:11.525,posz:-15.000,posa:0.000,feed:1600.00,vel:0.00,unit:1,coor:1,dist:0,frmo:1,stat:3]

I keep toying with the idea of another controller, but the original Smoothieboard 5X seems to be very hard to get, and other boards seem a bit of a gamble (e.g. an SKR 1.3 with the 2209 drivers, or something like that).

ma...@makr.zone

Thanks Tony!

What happens if you send the same move command again, i.e. with unchanged coordinates? Will there be a second report?

Or do you know any other command that may prompt a reliable status or completion report?

_Mark

--

You received this message because you are subscribed to the Google Groups "OpenPnP" group.

To unsubscribe from this group and stop receiving emails from it, send an email to openpnp+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/openpnp/f5f0b2e1-c21a-4fb9-bfdd-db55ff53ee6f%40googlegroups.com.

ma...@makr.zone

Hi Jason,

Another issue: the Camera Rotation Jogging you presented here...

https://www.youtube.com/watch?v=0TvqQBkTGP8

...didn't work any more in the NullDriver.

It turns out the reason is that the NullDriver now also uses proper Axis Mapping. For others reading this: the current, GcodeDriver-only Axis Mapping is described here:

https://github.com/openpnp/openpnp/wiki/GcodeDriver%3A-Axis-Mapping#mapping-axes-to-headmountables

The Wiki example maps the Z and C axes only to the Nozzles. That's what I would expect, what I have on my machine, and what I see in the machine.xml files other people recently sent me. Furthermore, multi-nozzle machines have multiple C axes, and one wouldn't know which C axis to map the camera to, so I guess not mapping the camera is still the correct thing to do, right?

But the code here...

... always talks to the Camera, so with the unmapped axis, getLocation() will always return the constant rotation from the head offsets.

The Jog will not work either; the rotation is ignored.

I haven't tried it, but this must also never have worked on the GcodeDriver if the C axis wasn't mapped to the camera.

So this is a bug, and we should rotate the selected tool instead, correct?

Plus, we could hide the rotation handle when no C axis is mapped to the selected tool.
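A minimal Python sketch of the behavior just described, assuming a simplified model in which a head-mountable either has a C axis mapped or falls back to the constant rotation from its head offsets. `Axis`, `HeadMountable`, `jog_c` and `rotation_handle_visible` are hypothetical names for illustration, not OpenPnP's actual classes; the last function shows the proposed rule for hiding the rotation handle.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Axis:
    """Hypothetical stand-in for a controller axis holding a coordinate."""
    name: str
    position: float = 0.0


@dataclass
class HeadMountable:
    """Hypothetical nozzle or camera; c_axis is None when C is not mapped."""
    name: str
    head_offset_c: float = 0.0
    c_axis: Optional[Axis] = None

    def get_c(self) -> float:
        # Unmapped C: the rotation is just the constant from the head offsets.
        if self.c_axis is None:
            return self.head_offset_c
        return self.c_axis.position

    def jog_c(self, delta: float) -> None:
        # Unmapped C: the jog has nowhere to go and is silently dropped.
        if self.c_axis is None:
            return
        self.c_axis.position += delta


def rotation_handle_visible(selected_tool: HeadMountable) -> bool:
    """Proposed rule: only offer the rotation handle if the tool has a C axis."""
    return selected_tool.c_axis is not None


nozzle = HeadMountable("N1", c_axis=Axis("C1"))
camera = HeadMountable("Top")  # C not mapped, as in the Wiki example

camera.jog_c(45.0)
nozzle.jog_c(45.0)
print(camera.get_c(), nozzle.get_c())  # 0.0 45.0
print(rotation_handle_visible(camera), rotation_handle_visible(nozzle))  # False True
```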

As a reference: the reticles/cross hairs are also drawn with C taken from the selected tool:

As a bonus this will then also work for the bottom camera that is currently excluded here:

If you agree, I can fix this in my PR-to-be and also conveniently test it in the NullDriver. This will be a big one anyway :-)

_Mark

Jason von Nieda


ma...@makr.zone

Hi Jason,

Yes, there is this discrepancy. It is a bit too conveniently hidden in the NullDriver with its head offset of 0, i.e. you are effectively looking through the nozzle. But until we have stereoscopic down-looking cameras and 3D reconstruction of the image underneath, plus perfect cam-to-tip alignment, zero runout etc., we need to live with offsets. And offsets mean switching between tools, right?

And what about the second, the third, the nth nozzle? We need a way to select... says the guy with the one-nozzle machine :-) But even with one nozzle there are those areas that the camera can't reach... and it is very useful to be able to use the nozzle tip for capture (and not to waste that area).

I don't think there is an easy way to completely overturn the current system. But there is room for improvement in how the selected tool is handled, so you'd hardly ever have to think about it. It should be selected automatically whenever a Position button is pressed: not only the obvious ones, but also the Feeders Panel's pick location buttons etc.

When Camera-Jogging is used, even with just a click, it should likewise select the camera.

OpenPnP would need to remember the last selected non-camera tool and restore it when toggling between camera and tool (especially when doing that in the Machine Controls).

The Camera View/Machine Controls could then also delegate the Camera's unmapped C axis coordinate to the last selected tool while bypassing the runout compensation (that's now a feature of the new transform system, greetings to @doppelgrau). So we can use rotation when the camera is selected, while keeping the X/Y calibrated for the camera.
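The selection behavior proposed above (remember the last non-camera tool, restore it when toggling back, and delegate the camera's unmapped C to it without runout compensation) could be modeled roughly like this. This is a hypothetical Python sketch: `Tool`, `ToolSelection` and the `compensate_runout` flag are illustrative names, not OpenPnP classes, and the flag is only recorded here, not acted upon.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Tool:
    """Hypothetical head-mountable: a nozzle or (is_camera=True) a camera."""
    name: str
    is_camera: bool = False
    c: float = 0.0
    # Record of (delta, compensate_runout) calls, to make the sketch inspectable.
    rotations: List[Tuple[float, bool]] = field(default_factory=list)

    def rotate(self, delta: float, compensate_runout: bool = True) -> None:
        self.c += delta
        self.rotations.append((delta, compensate_runout))


class ToolSelection:
    """Hypothetical UI state holder for the selected tool."""

    def __init__(self, initial: Tool):
        self.selected = initial
        self.last_non_camera: Optional[Tool] = None if initial.is_camera else initial

    def select(self, tool: Tool) -> None:
        # Would be called implicitly by Position buttons and camera jogging.
        self.selected = tool
        if not tool.is_camera:
            self.last_non_camera = tool

    def toggle(self, camera: Tool) -> None:
        # From a nozzle go to the camera; from the camera restore the last nozzle.
        if self.selected.is_camera and self.last_non_camera is not None:
            self.selected = self.last_non_camera
        else:
            self.select(camera)

    def rotate(self, delta: float) -> None:
        if not self.selected.is_camera:
            self.selected.rotate(delta)
        elif self.last_non_camera is not None:
            # The camera has no C axis: delegate to the last nozzle, without
            # runout compensation, so X/Y stay calibrated for the camera.
            self.last_non_camera.rotate(delta, compensate_runout=False)


n1 = Tool("N1")
top = Tool("Top", is_camera=True)
selection = ToolSelection(n1)
selection.toggle(top)   # camera selected
selection.rotate(90.0)  # turns N1, runout compensation bypassed
selection.toggle(top)   # back to N1
print(selection.selected.name, n1.c, n1.rotations)  # N1 90.0 [(90.0, False)]
```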

The runout offset that is visible when you rotate while the nozzle is the selected tool has been vexing users, myself included, for a long time... that would finally be gone.

_Mark


Jason von Nieda


Duncan Ellison


Jason von Nieda


Tony Luken

Alexander Goldstone

On Saturday, May 9, 2020 at 8:50:39 PM UTC+1, ma...@makr.zone wrote:

Hi everybody,

IMPORTANT CALL FOR YOUR ASSISTANCE:

As described in the original post of this thread, I'm working on a new GUI-based global Axes and Drivers mapping solution in OpenPnP 2.0.

In short:

You can now easily define the Axes and Axis Transforms in the GUI and map them to the Head Mountables with simple drop-downs (see the image). No more machine.xml hacking.

Furthermore, you can add as many Drivers as you like and mix and match different types. All Axes and Actuators can be mapped to the drivers (again in the GUI), and OpenPnP will then automatically talk to the right driver(s).

Most of the GcodeDriver-specific goodies were extracted from the driver and are now available to all drivers (some of it is still a work in progress):

- Axis Mapping (obviously)

- Axis Transforms

- Visual Homing

- Non-Squareness Compensation
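To illustrate the "talk to the right driver(s)" idea: once every axis is mapped to a driver, a multi-axis move can be split into one partial move per driver. This is a hypothetical Python sketch; the axis and driver names are made up, and a real implementation would also need to coordinate timing between controllers, which is not shown.

```python
from collections import defaultdict
from typing import Dict


def split_move_by_driver(
    target: Dict[str, float], axis_to_driver: Dict[str, str]
) -> Dict[str, Dict[str, float]]:
    """Group target coordinates (axis name -> position) by the driver each axis is mapped to.

    Axes without a driver mapping are skipped, i.e. they are not sent anywhere.
    """
    per_driver: Dict[str, Dict[str, float]] = defaultdict(dict)
    for axis, position in target.items():
        driver = axis_to_driver.get(axis)
        if driver is None:
            continue
        per_driver[driver][axis] = position
    return dict(per_driver)


# Hypothetical machine: X/Y/Z1/C1 on a main controller, Z2/C2 on a second one.
mapping = {"X": "main", "Y": "main", "Z1": "main", "C1": "main",
           "Z2": "aux", "C2": "aux"}
print(split_move_by_driver({"X": 10.0, "Y": 20.0, "C2": 90.0}, mapping))
# {'main': {'X': 10.0, 'Y': 20.0}, 'aux': {'C2': 90.0}}
```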

The idea is to automatically migrate your machine.xml to the new solution.

What I need now is your machine.xml, especially if you have special mappings, special transforms, shared axes, sub-drivers, movable actuators etc.

ma...@makr.zone

Thanks, Alex.

I guess there is a mistake in that config. It had a sub-driver with a second set of Z and Rotation axes mapped, which are never used (there is no second nozzle).

Note: the migration still works perfectly and this machine will work; it now just shows you the superfluous stuff more prominently:

_Mark


ma...@makr.zone

> This would mean that Camera.getLocation() would not reflect the rotation. Which would mean that capturing camera coordinates would need to go through the camera view instead of just on the camera, unless I am missing something? I'm not a fan of this solution. I think it would be better for the camera to just maintain a "virtual" rotation coordinate.

OK, I understand now.

Can we agree that there are two completely independent questions?

1. Whether the camera has virtual coordinates.

2. Whether we should have a selected tool in the UI.

About 1.:

I'll create a VirtualAxis that is mapped to the down-looking cameras' C and serves as a coordinate store.

Users could also assign a virtual Z. It is sometimes convenient to switch back and forth between camera and nozzle tip, such as when setting up a feeder (height). I always hate it when the camera forgets the Z.

To be clear, a Z probe measurement would still be favored on capture. We could perhaps add a modifier key (holding down Shift) for when you don't want the probe measurement.
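A rough Python sketch of the two ideas above: a virtual axis that merely stores a coordinate (no driver behind it), and a capture rule that prefers a Z probe measurement unless Shift is held. `VirtualAxis` is the name proposed in the text, but this class is only an illustration; `capture_z`, the `probe` callable and the `shift_held` flag are hypothetical.

```python
from typing import Callable, Optional


class VirtualAxis:
    """Coordinate store only: moving it never sends anything to a controller."""

    def __init__(self, name: str, position: float = 0.0):
        self.name = name
        self.position = position

    def move_to(self, position: float) -> None:
        self.position = position


def capture_z(
    virtual_z: VirtualAxis,
    probe: Optional[Callable[[], float]] = None,
    shift_held: bool = False,
) -> float:
    """Return the Z to store when capturing a camera location.

    A probe measurement is favored when a probe is available, unless the
    user holds Shift, in which case the camera's stored virtual Z is used.
    """
    if probe is not None and not shift_held:
        return probe()
    return virtual_z.position


z = VirtualAxis("Z-virtual")
z.move_to(-12.5)  # remembered when switching from the nozzle tip to the camera
print(capture_z(z))                                        # -12.5 (no probe)
print(capture_z(z, probe=lambda: -12.8))                   # -12.8 (probe wins)
print(capture_z(z, probe=lambda: -12.8, shift_held=True))  # -12.5 (Shift held)
```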

The question: should I map a virtual Z per default on migrate?

Btw. I have made it easy to set the NullDriver up for 3D operation (currently everything is at Z=0) so Z handling can be tested. It sets all feeders' Z, the BoardLocations' Z and the bottom cameras' Z.

About 2.:

> Only during machine setup, IMHO. My goal is for the runtime UI to not have a selected tool. So, certainly when you are setting up a nozzle changer, there is an implied selected / current tool, but my end goal is that all of the "operator" functions will just use the right tool automatically.

I'm not sure I understand. Sometimes I feel you underestimate your own brilliant work. :-)

You don't need any of that control GUI during normal Job operation, neither virtual nor real. Machine Controls can already be hidden along with the selected tool combo-box, and we could even add an option to hide them automatically when you hit Run on the Job. Your system is already brilliant; no need for a Verschlimmbesserung (an "improvement" that makes things worse).

But as soon as the job is stuck and you need to troubleshoot something, you need to be back in full control mode, and fast. I wouldn't want a dumbed-down GUI when I need to fix a bad feeder pick location etc. I can't imagine a single realistic interrupted-Job/troubleshooting use case that I could fix with just the camera view and virtual axes. It would be infuriating to have the real problem at hand and then also have to wrestle free of some Noobs Are King GUI. Sorry, I'm quite emotional about these questions, as I'm already a bleeding victim of that *** mega trend.

About the unreachable area: I would lose so much space! For one use case, see here:

https://youtu.be/dGde59Iv6eY?t=250

It would also make life hard for most Liteplacer users, as they are advised here to set up their changer in the unreachable area:

>Attach the holder to your work table. The down looking camera doesn’t need to see the nozzles, a good place is above the hole location, on the left.

https://www.liteplacer.com/assembling-the-nozzle-holder/

Not on my machine, though.

_Mark

Jason von Nieda


Alexander Goldstone


ma...@makr.zone

> (Clearly I have to do more testing on the machine and less in the simulator)

Well, one of my goals is to make the simulator much more realistic and testing as results-oriented as possible. This should now all behave much more like a real machine, i.e. the same type of discrepancies between drivers should no longer be possible.

Ideally, instead of using the NullDriver, this would one day also work with a regular GcodeDriver talking to a GcodeServer. But this needs a true Gcode interpreter for a full job test. Once there, people could even test their real-life machine.xml setup with arbitrary, reasonably standardized Gcode commands and the comms temporarily redirected to the GcodeServer. The next step would be a machine scan using the down-looking camera to get the user's machine table into the ImageCamera. Imagine how cool ;-) It shouldn't be too difficult to implement if kept simple.

I'll keep the motion planner building blocks universal, so they can be plugged in as soon as such a Gcode interpreter is available. I'm unfamiliar with advanced regexes, so maybe this could actually be quite easy.
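The tokenizing step of such an interpreter is a natural fit for regular expressions. Below is a minimal Python sketch, assuming simple G-code without expressions or parameters: it strips `;` and `( )` comments, splits a line into letter/number words, and tracks the target of G0/G1 moves in absolute mode. Modal motion (a bare `X10` after a `G1`) is ignored for brevity; `parse_words` and `apply_line` are hypothetical helpers, not the GcodeServer's actual implementation.

```python
import re
from typing import Dict, List, Tuple

# One G-code "word": a letter followed by a signed decimal number.
WORD = re.compile(r"([A-Z])\s*([-+]?(?:\d+\.?\d*|\.\d+))")
# Parenthesized comments and everything after a semicolon.
COMMENT = re.compile(r"\([^)]*\)|;.*$")


def parse_words(line: str) -> List[Tuple[str, float]]:
    """Split one G-code line into (letter, value) pairs."""
    code = COMMENT.sub("", line.upper())
    return [(letter, float(num)) for letter, num in WORD.findall(code)]


def apply_line(position: Dict[str, float], line: str) -> Dict[str, float]:
    """Return the new position after a G0/G1 line (absolute mode only)."""
    words = parse_words(line)
    is_move = any(letter == "G" and value in (0.0, 1.0) for letter, value in words)
    new_position = dict(position)
    if not is_move:
        return new_position
    for letter, value in words:
        if letter in new_position:  # N and F words are not axes and are skipped
            new_position[letter] = value
    return new_position


print(parse_words("G1 X103.4826 Y24.8941 F8000"))
# [('G', 1.0), ('X', 103.4826), ('Y', 24.8941), ('F', 8000.0)]
pos = {"X": 0.0, "Y": 0.0, "Z": 0.0, "A": 0.0}
for line in ["N1 G0 X110 Y100", "N2 G0 Y110 ; comment", "M400"]:
    pos = apply_line(pos, line)
print(pos)  # {'X': 110.0, 'Y': 110.0, 'Z': 0.0, 'A': 0.0}
```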

_Mark

ma...@makr.zone

> Good to know it's compatible with the changes, though.

Yes, although the real-world tests still have to be done. Some bugs are to be expected, and I hope people will help me test it. I will do my best to make it work for those who do.

_Mark


Marek T.

I'm not sure whether this is a matter for the simulator functionality you are creating, but have you solved, or thought about, how to test picks/placements with different vacuum values (proper/expected as well as improper/faulty)? That is, to see how the machine behaves when, at some point, the vacuum test value is not proper.

ma...@makr.zone

Hi Marek

No, vacuum sensing simulation is not included and not planned this time. The motion part is already more than enough work.

But the basic framework is there, so this could be added later.

_Mark

Jason von Nieda


Marek T.

ma...@makr.zone

Hi Tony

I suspect the following:

If you send two, three or any number of G0/G1 commands quickly, only one such status report will be issued. And if some of the commands are somewhat delayed (due to other stuff happening on the OpenPnP side), it becomes nondeterministic how many reports are to be expected. It then becomes impossible to determine safely when the sequence of commands has completed.

Please try to find a solution. Otherwise TinyG will not be usable with the advanced GCode driver mode.
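The suspected race can be illustrated with a toy timing model. This Python sketch assumes, hypothetically, a controller that executes queued moves back to back and emits a single "stopped" report whenever its motion queue runs empty; it is not TinyG's actual planner. The same three moves then yield one, two or three reports depending only on when the commands happen to arrive, which is why counting reports cannot tell the host that the whole sequence has finished.

```python
from typing import Optional, Sequence


def count_stop_reports(send_times: Sequence[float], durations: Sequence[float]) -> int:
    """Count 'stopped' reports from a hypothetical queueing controller.

    send_times[i] is when move i arrives (seconds), durations[i] how long it
    takes to execute. A report is issued each time the queue drains, i.e.
    whenever a move arrives after the previous motion has already finished,
    plus once after the final move.
    """
    busy_until: Optional[float] = None  # end of current motion; None = never moved
    reports = 0
    for arrival, duration in zip(send_times, durations):
        if busy_until is not None and arrival >= busy_until:
            reports += 1  # machine stopped before this command arrived
        start = arrival if busy_until is None else max(arrival, busy_until)
        busy_until = start + duration
    if busy_until is not None:
        reports += 1  # final stop after the last move
    return reports


moves = [0.5, 0.5, 0.5]
print(count_stop_reports([0.0, 0.01, 0.02], moves))  # 1: sent quickly, all queued
print(count_stop_reports([0.0, 0.6, 1.2], moves))    # 3: host delayed every send
print(count_stop_reports([0.0, 0.01, 1.5], moves))   # 2: one delayed send
```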

Note: TinyG is open source.

https://github.com/synthetos/TinyG/blob/master/firmware/tinyg/gcode_parser.c

Perhaps it would be easier to add a proper M400 command than to try to coerce it into doing what we want with a crystal ball, tweezers and a crowbar.

https://www.reprap.org/wiki/G-code#M400:_Wait_for_current_moves_to_finish

Have you tried G4 P0?
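For contrast, an M400 as described on the RepRap wiki page linked above is answered only once, after all previously queued moves have finished. Under a simple toy timing model (moves execute back to back; hypothetical, not TinyG's actual planner), the host sends its moves, then M400, and waits for that single acknowledgement, so the number of intermediate status reports no longer matters. `m400_ack_time` is an illustrative helper only.

```python
from typing import Sequence


def m400_ack_time(send_times: Sequence[float], durations: Sequence[float],
                  m400_sent_at: float) -> float:
    """Time at which a hypothetical controller answers 'ok' to M400.

    Moves execute back to back in arrival order; M400 is acknowledged once,
    when it has arrived and all previously queued motion has finished.
    """
    busy_until = 0.0
    for arrival, duration in zip(send_times, durations):
        busy_until = max(arrival, busy_until) + duration
    return max(m400_sent_at, busy_until)


moves = [0.5, 0.5, 0.5]
# Quick or delayed sends: exactly one ack either way, at the end of motion.
print(m400_ack_time([0.0, 0.01, 0.02], moves, m400_sent_at=0.03))  # 1.5
print(m400_ack_time([0.0, 0.6, 1.2], moves, m400_sent_at=1.25))    # 1.7
```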

_Mark

Tony Luken

2020-05-23 11:13:43.702 GcodeDriver TRACE: [serial://COM5] >> N1 G0 X110 Y100
N2 G0 Y110
N3 G0 X100
N4 G0 Y100
N5 G0 X110
N6 G0 Y110
N7 G0 X100
N9 G0 Y100
N10 G0 X110
N11 G0 Y110
N12 G0 X100
N13 G0 Y100
N14 G0 X110
N15 G0 Y110
N16 G0 X100
N17 G0 Y100

2020-05-23 11:13:43.713 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,16,156]}

2020-05-23 11:13:43.713 GcodeDriver DEBUG: sendCommand(serial://COM5 N1 G0 X110 Y100
N2 G0 Y110
N3 G0 X100
N4 G0 Y100
N5 G0 X110
N6 G0 Y110
N7 G0 X100
N9 G0 Y100
N10 G0 X110
N11 G0 Y110
N12 G0 X100
N13 G0 Y100
N14 G0 X110
N15 G0 Y110
N16 G0 X100
N17 G0 Y100, 5000) => [{r:{},f:[1,0,16,156]}]

2020-05-23 11:13:43.959 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:100.000,posy:100.000,posz:0.000,posa:0.000,vel:1.26,stat:5}}

2020-05-23 11:13:43.960 GcodeDriver TRACE: Position report: {sr:{line:1,posx:100.000,posy:100.000,posz:0.000,posa:0.000,vel:1.26,stat:5}}

2020-05-23 11:13:43.960 GcodeDriver TRACE: [serial://COM5] << {qr:31,qi:1,qo:0}

2020-05-23 11:13:43.960 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,11,151]}

2020-05-23 11:13:43.961 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:100.004,posy:100.000,posz:0.000,posa:0.000,vel:30.43,stat:5}}

2020-05-23 11:13:43.961 GcodeDriver TRACE: Position report: {sr:{line:1,posx:100.004,posy:100.000,posz:0.000,posa:0.000,vel:30.43,stat:5}}

2020-05-23 11:13:43.961 GcodeDriver TRACE: [serial://COM5] << {qr:30,qi:1,qo:0}

2020-05-23 11:13:43.961 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,11,151]}

2020-05-23 11:13:43.962 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:100.026,posy:100.000,posz:0.000,posa:0.000,vel:125.09,stat:5}}

2020-05-23 11:13:43.962 GcodeDriver TRACE: Position report: {sr:{line:1,posx:100.026,posy:100.000,posz:0.000,posa:0.000,vel:125.09,stat:5}}

2020-05-23 11:13:43.962 GcodeDriver TRACE: [serial://COM5] << {qr:29,qi:1,qo:0}

2020-05-23 11:13:43.962 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,11,151]}

2020-05-23 11:13:43.963 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:100.084,posy:100.000,posz:0.000,posa:0.000,vel:302.68,stat:5}}

2020-05-23 11:13:43.963 GcodeDriver TRACE: Position report: {sr:{line:1,posx:100.084,posy:100.000,posz:0.000,posa:0.000,vel:302.68,stat:5}}

2020-05-23 11:13:43.963 GcodeDriver TRACE: [serial://COM5] << {qr:28,qi:1,qo:0}

2020-05-23 11:13:43.963 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,11,151]}

2020-05-23 11:13:43.964 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:100.199,posy:100.000,posz:0.000,posa:0.000,vel:563.05,stat:5}}

2020-05-23 11:13:43.964 GcodeDriver TRACE: Position report: {sr:{line:1,posx:100.199,posy:100.000,posz:0.000,posa:0.000,vel:563.05,stat:5}}

2020-05-23 11:13:43.964 GcodeDriver TRACE: [serial://COM5] << {qr:27,qi:1,qo:0}

2020-05-23 11:13:43.964 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,11,151]}

2020-05-23 11:13:43.965 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:100.389,posy:100.000,posz:0.000,posa:0.000,vel:892.33,stat:5}}

2020-05-23 11:13:43.965 GcodeDriver TRACE: Position report: {sr:{line:1,posx:100.389,posy:100.000,posz:0.000,posa:0.000,vel:892.33,stat:5}}

2020-05-23 11:13:43.965 GcodeDriver TRACE: [serial://COM5] << {qr:26,qi:1,qo:0}

2020-05-23 11:13:43.965 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,11,151]}

2020-05-23 11:13:43.966 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:100.667,posy:100.000,posz:0.000,posa:0.000,vel:1266.87,stat:5}}

2020-05-23 11:13:43.966 GcodeDriver TRACE: Position report: {sr:{line:1,posx:100.667,posy:100.000,posz:0.000,posa:0.000,vel:1266.87,stat:5}}

2020-05-23 11:13:43.966 GcodeDriver TRACE: [serial://COM5] << {qr:25,qi:1,qo:0}

2020-05-23 11:13:43.966 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,11,151]}

2020-05-23 11:13:43.967 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:101.040,posy:100.000,posz:0.000,posa:0.000,vel:1657.15,stat:5}}

2020-05-23 11:13:43.967 GcodeDriver TRACE: Position report: {sr:{line:1,posx:101.040,posy:100.000,posz:0.000,posa:0.000,vel:1657.15,stat:5}}

2020-05-23 11:13:43.967 GcodeDriver TRACE: [serial://COM5] << {qr:24,qi:1,qo:0}

2020-05-23 11:13:43.967 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,12,152]}

2020-05-23 11:13:43.968 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:101.506,posy:100.000,posz:0.000,posa:0.000,vel:2031.69,stat:5}}

2020-05-23 11:13:43.968 GcodeDriver TRACE: Position report: {sr:{line:1,posx:101.506,posy:100.000,posz:0.000,posa:0.000,vel:2031.69,stat:5}}

2020-05-23 11:13:43.968 GcodeDriver TRACE: [serial://COM5] << {qr:23,qi:1,qo:0}

2020-05-23 11:13:43.968 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,12,152]}

2020-05-23 11:13:43.969 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:102.057,posy:100.000,posz:0.000,posa:0.000,vel:2360.97,stat:5}}

2020-05-23 11:13:43.969 GcodeDriver TRACE: Position report: {sr:{line:1,posx:102.057,posy:100.000,posz:0.000,posa:0.000,vel:2360.97,stat:5}}

2020-05-23 11:13:43.969 GcodeDriver TRACE: [serial://COM5] << {qr:22,qi:1,qo:0}

2020-05-23 11:13:43.970 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,12,152]}

2020-05-23 11:13:43.970 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:102.678,posy:100.000,posz:0.000,posa:0.000,vel:2690.06,stat:5}}

2020-05-23 11:13:43.970 GcodeDriver TRACE: Position report: {sr:{line:1,posx:102.678,posy:100.000,posz:0.000,posa:0.000,vel:2690.06,stat:5}}

2020-05-23 11:13:43.971 GcodeDriver TRACE: [serial://COM5] << {qr:21,qi:1,qo:0}

2020-05-23 11:13:43.971 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,12,152]}

2020-05-23 11:13:43.972 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:103.580,posy:100.000,posz:0.000,posa:0.000,vel:2839.24,stat:5}}

2020-05-23 11:13:43.972 GcodeDriver TRACE: Position report: {sr:{line:1,posx:103.580,posy:100.000,posz:0.000,posa:0.000,vel:2839.24,stat:5}}

2020-05-23 11:13:43.972 GcodeDriver TRACE: [serial://COM5] << {qr:20,qi:1,qo:0}

2020-05-23 11:13:43.972 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,12,152]}

2020-05-23 11:13:43.973 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:104.286,posy:100.000,posz:0.000,posa:0.000,vel:2909.14,stat:5}}

2020-05-23 11:13:43.973 GcodeDriver TRACE: Position report: {sr:{line:1,posx:104.286,posy:100.000,posz:0.000,posa:0.000,vel:2909.14,stat:5}}

2020-05-23 11:13:43.973 GcodeDriver TRACE: [serial://COM5] << {qr:19,qi:1,qo:0}

2020-05-23 11:13:43.973 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,12,152]}

2020-05-23 11:13:43.974 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:105.000,posy:100.000,posz:0.000,posa:0.000,vel:2923.97,stat:5}}

2020-05-23 11:13:43.974 GcodeDriver TRACE: Position report: {sr:{line:1,posx:105.000,posy:100.000,posz:0.000,posa:0.000,vel:2923.97,stat:5}}

2020-05-23 11:13:43.974 GcodeDriver TRACE: [serial://COM5] << {qr:18,qi:1,qo:0}

2020-05-23 11:13:43.976 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,12,152]}

2020-05-23 11:13:43.977 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:105.714,posy:100.000,posz:0.000,posa:0.000,vel:2918.39,stat:5}}

2020-05-23 11:13:43.977 GcodeDriver TRACE: Position report: {sr:{line:1,posx:105.714,posy:100.000,posz:0.000,posa:0.000,vel:2918.39,stat:5}}

2020-05-23 11:13:43.978 GcodeDriver TRACE: [serial://COM5] << {qr:17,qi:1,qo:0}

2020-05-23 11:13:43.978 GcodeDriver TRACE: [serial://COM5] << {r:{},f:[1,0,12,152]}

2020-05-23 11:13:43.978 GcodeDriver TRACE: [serial://COM5] << {sr:{line:1,posx:106.420,posy:100.000,posz:0.000,posa:0.000,vel:2870.58,stat:5}}

2020-05-23 11:13:43.978 GcodeDriver TRACE: Position report: {sr:{line:1,posx:106.420,posy:100.000,posz:0.000,posa:0.000,vel:2870.58,stat:5}}

2020-05-23 11:13:43.979 GcodeDriver TRACE: [serial://COM5] << {qr:16,qi:1,qo:0}

2020-05-23 11:13:44.119 GcodeDriver TRACE: [serial://COM5] << {qr:17,qi:0,qo:1}

2020-05-23 11:13:44.520 GcodeDriver TRACE: [serial://COM5] << {qr:18,qi:0,qo:1}

2020-05-23 11:13:44.919 GcodeDriver TRACE: [serial://COM5] << {qr:19,qi:0,qo:1}

2020-05-23 11:13:45.319 GcodeDriver TRACE: [serial://COM5] << {qr:20,qi:0,qo:1}

2020-05-23 11:13:45.719 GcodeDriver TRACE: [serial://COM5] << {qr:21,qi:0,qo:1}

2020-05-23 11:13:46.119 GcodeDriver TRACE: [serial://COM5] << {qr:22,qi:0,qo:1}

2020-05-23 11:13:46.519 GcodeDriver TRACE: [serial://COM5] << {qr:23,qi:0,qo:1}

2020-05-23 11:13:46.918 GcodeDriver TRACE: [serial://COM5] << {qr:24,qi:0,qo:1}

2020-05-23 11:13:47.319 GcodeDriver TRACE: [serial://COM5] << {qr:25,qi:0,qo:1}

2020-05-23 11:13:47.719 GcodeDriver TRACE: [serial://COM5] << {qr:26,qi:0,qo:1}

2020-05-23 11:13:48.102 GcodeDriver TRACE: [serial://COM5] << {qr:27,qi:0,qo:1}

2020-05-23 11:13:48.503 GcodeDriver TRACE: [serial://COM5] << {qr:28,qi:0,qo:1}

2020-05-23 11:13:48.903 GcodeDriver TRACE: [serial://COM5] << {qr:29,qi:0,qo:1}

2020-05-23 11:13:49.303 GcodeDriver TRACE: [serial://COM5] << {qr:30,qi:0,qo:1}

2020-05-23 11:13:49.703 GcodeDriver TRACE: [serial://COM5] << {qr:31,qi:0,qo:1}

2020-05-23 11:13:50.124 GcodeDriver TRACE: [serial://COM5] << {sr:{line:17,posx:100.000,posy:100.000,posz:0.000,posa:0.000,vel:0.00,stat:3}}

2020-05-23 11:13:50.124 GcodeDriver TRACE: Position report: {sr:{line:17,posx:100.000,posy:100.000,posz:0.000,posa:0.000,vel:0.00,stat:3}}

2020-05-23 11:13:50.124 GcodeDriver TRACE: [serial://COM5] << {qr:32,qi:0,qo:1}

ma...@makr.zone

> Could the driver have the capability to provide an incrementing line number to each G code command and then use that to disambiguate the responses?

Yes, that sounds promising. Can the same numbers be reused cyclically once the counter overflows past 99999?
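A minimal sketch of how the driver could do this. It assumes the driver prefixes each command with a Gcode `N` word, wraps the counter after 99999 as asked above, and reads the `line:` field of TinyG status reports like those in the log. `LineNumberTracker` and its methods are hypothetical names, not OpenPnP API.

```java
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: numbers outgoing commands and resolves TinyG "line:" reports. */
class LineNumberTracker {
    private static final Pattern LINE = Pattern.compile("\\bline:(\\d+)");

    private final int maxLine;   // wrap-around limit, e.g. 99999
    private int next = 1;        // next N number to hand out
    // Commands sent but not yet retired, in sending order.
    private final Map<Integer, String> inFlight = new LinkedHashMap<>();

    LineNumberTracker(int maxLine) {
        this.maxLine = maxLine;
    }

    /** Prefixes the command with an N word; refuses to reuse a number still in flight. */
    synchronized String number(String command) {
        int n = next;
        if (inFlight.containsKey(n)) {
            throw new IllegalStateException("Line number " + n + " is still in flight");
        }
        next = (n % maxLine) + 1; // 1..maxLine, then back to 1
        inFlight.put(n, command);
        return "N" + n + " " + command;
    }

    /**
     * Reads the line number from a status report and retires every command queued
     * before it, assuming commands execute in order. Returns the command being
     * executed, or null if the report carries no known line number.
     */
    synchronized String onReport(String report) {
        Matcher m = LINE.matcher(report);
        if (!m.find()) {
            return null;
        }
        int line = Integer.parseInt(m.group(1));
        if (!inFlight.containsKey(line)) {
            return null;
        }
        Iterator<Map.Entry<Integer, String>> it = inFlight.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<Integer, String> e = it.next();
            if (e.getKey() == line) {
                return e.getValue(); // stays in flight until a later line is reported
            }
            it.remove();
        }
        return null;
    }

    /** Call once the controller is known to be idle: nothing is in flight anymore. */
    synchronized void onMotionComplete() {
        inFlight.clear();
    }

    synchronized int inFlightCount() {
        return inFlight.size();
    }
}
```

Reusing a number after the wrap-around is only safe while far fewer than `maxLine` commands are in flight, which the check in `number()` enforces. Calling `onMotionComplete()` when a report like the last one in the log (`stat:3`) arrives is an assumption about TinyG's status codes read from that log.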

> BTW - you may have already answered this somewhere and I just missed it but if you are going to stream commands to the controller without waiting for each to complete, how do you know that the controller can accept another command without overflowing its internal buffer?

I really hope that every controller has proper serial flow control that blocks while its internal command queue is full. It never even occurred to me that this could be missing. I think it is a common pattern to just blindly stream Gcode to a 3D printer, so I'm quite confident.
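For TinyG, the `qr` field in the log above could additionally be used to throttle the writer thread. A minimal sketch, assuming `qr` is the number of free planner buffers (consistent with the log: it drops while moves are queued and rises while they complete) and that one command takes one buffer. `PlannerQueueGate` and the reserve parameter are hypothetical.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical gate: the writer thread waits while TinyG's planner queue is nearly full. */
class PlannerQueueGate {
    private static final Pattern QR = Pattern.compile("\\bqr:(\\d+)");

    private final int reserve;   // free buffers to keep as a safety margin
    private int freeBuffers;     // last known number of free planner buffers

    PlannerQueueGate(int initialFree, int reserve) {
        this.freeBuffers = initialFree;
        this.reserve = reserve;
    }

    /** Reader thread: call for every line received; picks up the qr value if present. */
    synchronized void onLine(String line) {
        Matcher m = QR.matcher(line);
        if (m.find()) {
            freeBuffers = Integer.parseInt(m.group(1));
            notifyAll();         // wake the writer if it is waiting for space
        }
    }

    /**
     * Writer thread: blocks until more than `reserve` buffers are free, then
     * optimistically counts one buffer as used by the next command.
     * Returns false on timeout or interruption.
     */
    synchronized boolean awaitSpace(long timeoutMs) {
        long deadline = System.currentTimeMillis() + timeoutMs;
        try {
            while (freeBuffers <= reserve) {
                long remaining = deadline - System.currentTimeMillis();
                if (remaining <= 0) {
                    return false;
                }
                wait(remaining);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        freeBuffers--;
        return true;
    }

    synchronized int freeBuffers() {
        return freeBuffers;
    }
}
```

A `qr` report can lag behind commands that are still in transit on the serial line, so the reserve should be large enough to cover them.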

But having said that, don't overestimate the potential. I need the asynchronous streaming for bursts of fine-grained interpolated motion. There will still be frequent interlocks between those bursts. It's not that OpenPnP can send the whole job and then sit back and wait. :-)

We need interlocks in all vision operations and also some kind of interlock on vacuum valve switching and sensing. That's because the vacuum-reading Gcode is asynchronous, i.e. the command returns values immediately and in parallel to any ongoing motion. So we need to interlock on the valve switch to know on the OpenPnP side when it happened. The valve switch, in turn, is not asynchronous, i.e. the controller will by itself wait for motion to complete and then switch the valve. At least that's the behavior on Smoothie.

That last part is unfortunate, by the way, as it spoils the possibility of doing "on-the-fly" (while moving) Part-Off checking. :-(

BEGIN FUTURE IDEAS

Maybe I'll find a way to trick Smoothie and other controllers, or maybe I'll even hack it to do asynchronous switching.

I plan to add some kind of "soft wait" that allows waiting for some event without bringing the machine to a still-stand. The new writer thread on the driver would periodically issue position report commands (not needed on TinyG, which sends status reports by itself, as in the log above). The position reports would be monitored continuously, and a "soft wait" would be released as soon as some preset positional predicate (a Java Function) turns true.

The machine could still be in full motion in the background, but we could trigger on-the-fly actions on the OpenPnP side, like checking the vacuum level as soon as some Z height is reached after a pick, etc.
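A minimal sketch of such a soft wait, assuming the reader thread hands every parsed position report to a monitor. `Position`, `SoftWaitMonitor` and `softWait()` are hypothetical names. The predicate is a `java.util.function.Predicate`, and the returned `CompletableFuture` completes as soon as one report satisfies it, while the machine keeps moving.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Predicate;

/** Hypothetical position snapshot parsed from a controller position report. */
final class Position {
    final double x, y, z, a;

    Position(double x, double y, double z, double a) {
        this.x = x;
        this.y = y;
        this.z = z;
        this.a = a;
    }
}

/** Hypothetical "soft wait": released by a positional predicate while the machine keeps moving. */
class SoftWaitMonitor {
    private static final class Waiter {
        final Predicate<Position> condition;
        final CompletableFuture<Position> released = new CompletableFuture<>();

        Waiter(Predicate<Position> condition) {
            this.condition = condition;
        }
    }

    private final List<Waiter> waiters = new CopyOnWriteArrayList<>();

    /** Registers a predicate; the future completes with the first report that satisfies it. */
    CompletableFuture<Position> softWait(Predicate<Position> condition) {
        Waiter w = new Waiter(condition);
        waiters.add(w);
        return w.released;
    }

    /** Reader thread: called for every position report, without stopping the machine. */
    void onPosition(Position p) {
        for (Waiter w : waiters) { // iterates over a snapshot, so removal is safe
            if (w.condition.test(p)) {
                waiters.remove(w);
                w.released.complete(p);
            }
        }
    }
}
```

The wait has to be registered before the move is queued, otherwise the triggering report may already have passed. A vacuum check could then be chained to the returned future.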

The next idea is to add a "Monitoring" switch on the Actuator. The Actuator would no longer be read on demand, but continually monitored. The Gcode writer thread would issue the ACTUATOR_READ_COMMAND periodically and, as soon as the report comes back, match it against the REGEXes of all monitoring Actuators and store the measurements. When OpenPnP code reads the Actuator, it would just immediately return the last stored measurement. Multiple Actuators also often share the same ACTUATOR_READ_COMMAND, as one Gcode command reports multiple measurements, so a lot of round-trip delays can be saved. E.g. @doppelgrau's screenshots showed ~15ms round-trips on vacuum reads, so this can save a lot.
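A minimal sketch of the monitoring cache, assuming each monitored Actuator contributes one regex with a named group `value`. That group name, `ActuatorMonitor`, and the report format in the test are hypothetical, chosen only for illustration. One report from the shared read command updates every matching Actuator at once.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical cache of Actuator readings, filled from one shared read command. */
class ActuatorMonitor {
    private final Map<String, Pattern> regexes = new LinkedHashMap<>();
    private final Map<String, Double> lastValues = new ConcurrentHashMap<>();

    /** Registers a monitored Actuator; its regex must contain a named group "value". */
    synchronized void monitor(String actuatorName, String regex) {
        regexes.put(actuatorName, Pattern.compile(regex));
    }

    /** Writer/reader thread: called with each report of the periodic read command. */
    synchronized void onReport(String report) {
        for (Map.Entry<String, Pattern> e : regexes.entrySet()) {
            Matcher m = e.getValue().matcher(report);
            if (m.find()) {
                lastValues.put(e.getKey(), Double.parseDouble(m.group("value")));
            }
        }
    }

    /** Returns the last stored measurement immediately (no round-trip), or null if none yet. */
    Double read(String actuatorName) {
        return lastValues.get(actuatorName);
    }
}
```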

The next step would be to dynamically set Alarm ranges on Actuators. After the Pick, i.e. as soon as the soft wait on Safe Z is triggered, an Alarm range on the vacuum level could be set. The vacuum level would then be continuously monitored during the whole cycle until the Alarm range is removed again in the Place step. An Alarm status on the Actuator would be set by background monitoring and evaluated on the next JobProcessor Step. So even a temporary dip could trigger e.g. a Part-Off alarm. I guess this could be handled in a universal way, like a "Deferred Exception", i.e. the handling of these Alarms on the JobProcessor Step side could be generic. This way additional Actuators could monitor other machine parameters and raise Alarms (like the pump reservoir level or perhaps a stepper temperature, etc.).
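A minimal sketch of the deferred Alarm idea, assuming background monitoring calls `check()` for every new measurement and the JobProcessor calls `throwIfAlarmed()` at the start of each Step. `ActuatorAlarm`, `ActuatorAlarmException` and both methods are hypothetical. The Alarm latches, so a short dip between two Steps is not lost.

```java
/** Thrown at the next JobProcessor Step when a latched Alarm is found (hypothetical). */
class ActuatorAlarmException extends RuntimeException {
    ActuatorAlarmException(String message) {
        super(message);
    }
}

/** Hypothetical Alarm range on a monitored Actuator that latches the first violation. */
class ActuatorAlarm {
    private final String actuatorName;
    private double min;
    private double max;
    private boolean armed;
    private String latched; // first alarm since the last Step, or null

    ActuatorAlarm(String actuatorName) {
        this.actuatorName = actuatorName;
    }

    /** E.g. set after the Pick, once the soft wait on Safe Z has triggered. */
    synchronized void setRange(double min, double max) {
        this.min = min;
        this.max = max;
        this.armed = true;
    }

    /** E.g. removed again in the Place step. */
    synchronized void clearRange() {
        armed = false;
    }

    /** Background monitoring: called for every new measurement. */
    synchronized void check(double value) {
        if (armed && latched == null && (value < min || value > max)) {
            latched = actuatorName + " out of range: " + value;
        }
    }

    /** JobProcessor Step: rethrows a latched Alarm once, then resets it. */
    void throwIfAlarmed() {
        String message;
        synchronized (this) {
            message = latched;
            latched = null;
        }
        if (message != null) {
            throw new ActuatorAlarmException(message);
        }
    }
}
```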

TinyG's line numbers in the position reports now seem to let us know exactly where it is. I guess this even beats Smoothie's capabilities, where I will need to count the reports or even match up coordinates with the motion plan.

Thanks Tony.

_Mark

Jarosław Karwik

--

You received this message because you are subscribed to the Google Groups "OpenPnP" group.

To unsubscribe from this group and stop receiving emails from it, send an email to openpnp+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/openpnp/73707467-aab1-70e9-f493-ee5206dd8c75%40makr.zone.

bert shivaan

To view this discussion on the web visit https://groups.google.com/d/msgid/openpnp/CAC%2BEaojF-4gCTqkXexYU5G89RTkxbFmizj%3DQVkyJdBNHocN9sA%40mail.gmail.com.

Jarosław Karwik

Tony Luken

ma...@makr.zone

> Full, maximum set?

Like on Christmas? 8-)

OK, here's my list.

Integral planner

Most open source firmware I looked at handles motion as something special. Other commands are not planned the same way. This stands in the way of important capabilities.

Most importantly, being synchronous should not mean "bring the machine to a full still-stand, then do it". It should just mean "as soon as the last (movement) command is done, do it". If more movement commands are queued after the synchronous command, the machine should keep up its speed.

E.g.

G0 X100

M10 ; switch valve on

G0 X200

M11 ; switch valve off

G0 X300

should move to X100, then switch the valve on on-the-fly, then continue to X200 without decelerating, then switch the valve off on-the-fly, then move the rest of the way to X300. Acceleration and deceleration should only happen at the beginning and end of the sequence.

If I do that on the Smoothie, it will bring the machine to a full still-stand after 100mm then switch the valve, then accelerate again etc. Sloooow!
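To put a rough number on "Sloooow!", here is a back-of-the-envelope sketch. It assumes a simple trapezoidal velocity profile (constant acceleration, no jerk limit) and valve switching that takes no time. The feed rate and acceleration values are made up for illustration.

```java
/** Rough move-time estimates for a trapezoidal velocity profile (no jerk limit). */
final class MoveTime {
    /** Rest-to-rest time for distance d (mm) at max speed v (mm/s) and acceleration a (mm/s^2). */
    static double trapezoid(double d, double v, double a) {
        if (d >= v * v / a) {
            // Accelerate to v, cruise, decelerate: the ramps cover v^2/a in total time 2v/a.
            return d / v + v / a;
        }
        // Too short to reach v: triangular profile peaking at sqrt(a*d).
        return 2.0 * Math.sqrt(d / a);
    }

    public static void main(String[] args) {
        double v = 200.0;  // assumed feed rate, mm/s
        double a = 1000.0; // assumed acceleration, mm/s^2
        // Still-stand at X100 and X200 to switch the valve, as described for Smoothie.
        double stopAndGo = 3 * trapezoid(100.0, v, a);
        // Integral planner: one continuous 300 mm move, valve switched on the fly.
        double continuous = trapezoid(300.0, v, a);
        System.out.printf("stop-and-go %.2f s, continuous %.2f s%n", stopAndGo, continuous);
    }
}
```

With these assumed numbers it prints `stop-and-go 2.10 s, continuous 1.70 s`: each extra still-stand costs one extra v/a (0.2 s here), and that grows with every switched valve in a sequence.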

Still-stand command

Having said that, a proper M400 command is still an absolute MUST.

So if you want the still-stand behavior, you should be able to add a working M400 command.

Synchronous/Asynchronous operation

Ideally, switching and other "actuator" commands should be provided in an asynchronous variant (and vice versa).

E.g. a separate M10.1 variant would switch immediately, without waiting for the queue.

Unique queue acknowledgments

It seems like an obvious feature, but I haven't found it in any open source controller.

A synchronous "echo" command should report a unique string back to OpenPnP as soon as the queue reaches this command, so on the OpenPnP side we know exactly when that happens. Again, if it is inserted between motion commands, it should be done on-the-fly without stopping motion.
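On the OpenPnP side, such acknowledgments could be consumed roughly like this. The sketch assumes the controller answers the hypothetical echo command with the bare token on its own line. `QueueAcknowledger`, the `ECHO` syntax and the token format are made up for illustration.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical OpenPnP-side bookkeeping for unique queue acknowledgments. */
class QueueAcknowledger {
    /** A command to queue between motion commands, plus a future for "the queue reached it". */
    static final class Ack {
        final String command;
        final CompletableFuture<Void> reached;

        Ack(String command, CompletableFuture<Void> reached) {
            this.command = command;
            this.reached = reached;
        }
    }

    private final AtomicLong counter = new AtomicLong();
    private final Map<String, CompletableFuture<Void>> pending = new ConcurrentHashMap<>();

    /** Creates a unique token wrapped in the hypothetical echo command. */
    Ack create() {
        String token = "ACK" + counter.incrementAndGet();
        CompletableFuture<Void> reached = new CompletableFuture<>();
        pending.put(token, reached);
        return new Ack("ECHO " + token, reached); // placeholder syntax, assumed
    }

    /** Reader thread: a line that equals a pending token completes its future. */
    boolean onLine(String line) {
        CompletableFuture<Void> reached = pending.remove(line.trim());
        if (reached == null) {
            return false;
        }
        reached.complete(null);
        return true;
    }
}
```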

Uncoordinated moves

A controller should implement G0 vs. G1 properly, i.e. allow axes to move in an uncoordinated fashion if we want it. Uncoordinated moves can speed things up.
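How much an uncoordinated move gains depends on how the controller limits speed. A rough sketch, assuming a coordinated move is capped by one vector feed rate F while an uncoordinated move lets each axis run at F on its own, and ignoring acceleration. `UncoordinatedEstimate` is a hypothetical helper.

```java
/** Constant-speed estimate (acceleration ignored) of coordinated vs. uncoordinated XY moves. */
final class UncoordinatedEstimate {
    /** Coordinated: the straight-line path is limited by the vector feed rate f. */
    static double coordinated(double dx, double dy, double f) {
        return Math.hypot(dx, dy) / f;
    }

    /** Uncoordinated ("hockey stick"): each axis runs at f independently; the longer axis decides. */
    static double uncoordinated(double dx, double dy, double f) {
        return Math.max(Math.abs(dx), Math.abs(dy)) / f;
    }
}
```

Under these assumptions, both are equal for a single-axis move, and a 45° diagonal move becomes faster by a factor of √2. Real controllers with per-axis acceleration limits will give different numbers.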

Ideally, and in a special variant only, this could even work across multiple G0 commands. So if you tell it this:

G0 X100 Y200

G0.1 B180

Then X and Y move in uncoordinated "hockey stick" fashion for best speed. Plus, B would start to move before the X, Y move is complete. This would be worth a lot when you want to move other things on the machine, like feeder actuators, conveyor belts, etc. As one example, consider the separate "drag" feeder on the SmallSMT machines.

Support path blending

This is probably a tall order, but ideally you would support what LinuxCNC can do with the G64 command, i.e. "cut corners" in smoothed-out motion to speed things up:

http://www.linuxcnc.org/docs/2.6/html/gcode/gcode.html#sec:G64

That's what I can think of at the moment.

:-)

_Mark

ma...@makr.zone

Bert,

I think if you read the beginning of this thread, things will become clear. Then perhaps also re-read that last post about the FUTURE IDEAS... This will shave off significant time if done right.

Otherwise ask again.