
JoAnne Russo

Jul 18, 2018, 5:13:42 PM

to iNaturalist

I am wondering if there's any chance of making it necessary for 2 people to agree with an initial species level ID to elevate it to research grade? I would argue that an extra set of eyes verifying an ID would give greater credibility to the "research grade" level of an ID. I find mistakes made all the time with IDs.

JoAnne

paloma

Jul 18, 2018, 6:21:37 PM

to iNaturalist

This has been brought up before, although sometimes the conversations go in multiple directions, so they might be hard to sift through. But my impression was that this idea is not going anywhere. There have also been discussions about whether or not research grade has any actual importance, and I recall differences of opinion on that. You might try word searching the group's messages, but I just tried myself and didn't have much success. I'm just a user, not anyone with any power, so this is just to let you know that this topic has been discussed previously.

JoAnne Russo

Jul 18, 2018, 7:17:15 PM

to iNaturalist

thanks! I was trying to search too, figuring this topic must have been broached before, but couldn't find anything.

Scott Loarie

Jul 18, 2018, 9:21:37 PM

to inatu...@googlegroups.com

Hi JoAnne and Paloma,

We've been doing a lot of analyses of the proportion of incorrectly ID'd Research Grade obs. From the experiments we've done, it's actually pretty low, like around 2.5% for most groups we've looked at.

You could argue that this is too high (i.e. we're being too liberal with the 'Research Grade' threshold) or too low (we're being too conservative), and we've had different asks to move the threshold one way or the other, so I imagine changing it would be kind of a zero-sum game.

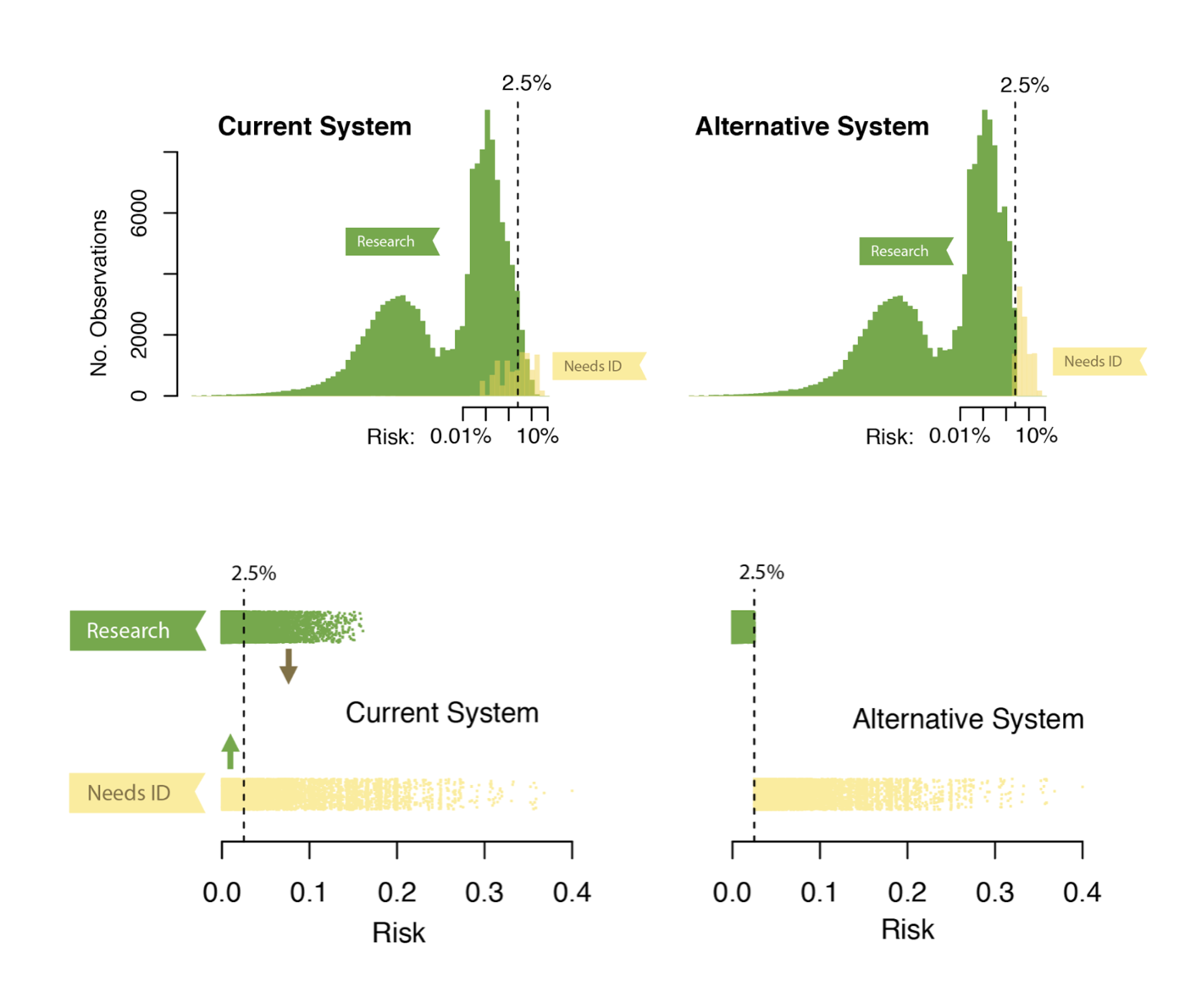

One thing we have noticed from our experiments, though, is that our current Research Grade system (which is quite simplistic) could do a better job of sorting high-risk (i.e. potentially incorrectly ID'd) and low-risk (i.e. likely correctly ID'd) observations into the Needs ID and Research Grade categories. As you can see from the figures on the left, there's some overlap between high risk and Research Grade, and between low risk and Needs ID. We've been exploring more sophisticated systems that do a better job of discriminating these (figures on the right).

We (by which I mean Grant Van Horn, who was also heavily involved in our Computer Vision model) actually just presented one approach at this conference a few weeks ago: http://cvpr2018.thecvf.com/. It's a kind of 'earned reputation' approach in which we simultaneously estimate the 'skill' of identifiers and the risk of observations.

You can read the paper, 'Lean Multiclass Crowdsourcing', here: http://openaccess.thecvf.com/content_cvpr_2018/papers/Van_Horn_Lean_Multiclass_Crowdsourcing_CVPR_2018_paper.pdf

Still more work to be done, but it's appealing to us that a more sophisticated approach like this could do a better job of sorting high-risk and low-risk obs into the Needs ID and Research Grade categories, rather than just moving the threshold in the more or less conservative direction without really improving things.
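For readers who want a feel for what estimating identifier 'skill' and observation risk together can look like, here is a toy Python sketch. It is not the model from the Lean Multiclass Crowdsourcing paper and not anything iNaturalist runs. It simply alternates between a reliability-weighted vote on each observation and re-scoring each identifier by how often they agree with the resulting labels. The function name, the starting skill of 0.8, the prior weight, and the implicit "some other taxon" option with weight 1 are all assumptions chosen for illustration.

```python
import math
from collections import defaultdict


def estimate_skill_and_risk(votes, rounds=10, prior_skill=0.8, prior_weight=2.0):
    """Toy alternating estimate of identifier skill and observation risk.

    votes: iterable of (observation, identifier, taxon) tuples (hypothetical format).
    Returns (skill, labels, risk) dictionaries.
    """
    by_obs = defaultdict(list)
    by_user = defaultdict(list)
    for obs, user, taxon in votes:
        by_obs[obs].append((user, taxon))
        by_user[user].append((obs, taxon))

    # Everyone starts with the same assumed skill.
    skill = {user: prior_skill for user in by_user}
    labels, risk = {}, {}

    for _ in range(rounds):
        # Step 1: weighted vote per observation; each vote counts for the
        # log-odds of the voter's current skill estimate.
        for obs, obs_votes in by_obs.items():
            scores = defaultdict(float)
            for user, taxon in obs_votes:
                s = min(max(skill[user], 0.01), 0.99)
                scores[taxon] += math.log(s / (1.0 - s))
            best = max(scores, key=scores.get)
            # The extra 1.0 stands for an unnamed "some other taxon" option,
            # so a single unconfirmed ID never gets zero risk.
            total = 1.0 + sum(math.exp(v) for v in scores.values())
            labels[obs] = best
            risk[obs] = 1.0 - math.exp(scores[best]) / total

        # Step 2: re-score each identifier by agreement with current labels,
        # shrunk toward the prior so a few votes do not dominate.
        for user, user_votes in by_user.items():
            agreed = sum(1 for obs, taxon in user_votes if labels[obs] == taxon)
            skill[user] = (prior_skill * prior_weight + agreed) / (
                prior_weight + len(user_votes)
            )

    return skill, labels, risk
```

In this sketch an observation with one ID from an identifier who has little track record stays high-risk, while two agreeing IDs from identifiers with good track records push the risk close to zero. That is the kind of separation a fixed agreement threshold cannot express.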

Scott

--

You received this message because you are subscribed to the Google Groups "iNaturalist" group.

To unsubscribe from this group and stop receiving emails from it, send an email to inaturalist+unsubscribe@googlegroups.com.

To post to this group, send email to inatu...@googlegroups.com.

Visit this group at https://groups.google.com/group/inaturalist.

For more options, visit https://groups.google.com/d/optout.

--------------------------------------------------

Scott R. Loarie, Ph.D.

Co-director, iNaturalist.org

California Academy of Sciences

55 Music Concourse Dr

San Francisco, CA 94118

--------------------------------------------------


paloma

Jul 18, 2018, 9:39:25 PM

to iNaturalist

Thanks for sharing that, Scott! I'm pretty sure I don't understand it all, but it's very intriguing.


Colin Purrington

Jul 19, 2018, 7:21:54 AM

to iNaturalist

Is that 2.5% error rate of RG observations stable? Would be an interesting stat to see displayed over time.

JoAnne Russo

Jul 19, 2018, 7:46:31 AM

to iNaturalist

I like the "earned reputation" approach! I tend not to trust an ID from someone I don't know, or from someone who has IDed only a few observations of a species, as much as one from someone who has IDed many.

Charlie Hohn

Jul 20, 2018, 6:18:50 AM

to inatu...@googlegroups.com

Intriguing!

I still say the research grade iNaturalist error rate is no higher than the rate of other types of data collected by “pros” without photos (monitoring data, etc.).


gcwa...@austin.rr.com

Jul 20, 2018, 4:02:27 PM

to iNaturalist

Not that I understand all the nuances of "crowdsourced multiclass annotations", but I glanced at the referenced pdf article and was impressed with the outcome of the new methodology. We are all prone to making ID errors on occasion (!!!), but one of my frustrations in the ID system is when one or more friends (often new users and/or young students?) jump to agreement on IDs that turn out to be wrong. Recently, this has happened more and more when the iNat identitron (AI) suggestions are followed blindly*. Implementing a success calculation based on user history would substantially bypass or assuage my concerns.

* As an aside, I have been super-impressed by the ID capabilities of iNat's AI algorithms, but they obviously haven't been trained on the millions of species worldwide and can be forgiven for bad choices on subtle IDs or mishits on poor images. For the time being, they should be accompanied by a strong warning label, IMHO!

Patrick Alexander

Jul 21, 2018, 2:27:55 AM

to iNaturalist

Hello Scott,

How are you all determining whether or not the ID of Research Grade observations is correct? Although "Research Grade" has a variety of issues, it's not clear to me what ID system we could apply at the scale of many thousands of observations that would have a lower error rate and be reasonably assumed to provide the correct ID. For identifying digital media, Research Grade IDs are at least in the running for the title of "worst system except for all the others we've tried". There's plenty of room for improvement, but a claim that there's another system available at this scale that we can call "correct" compared to those Research Grade IDs should prompt some serious skepticism.

My take on how far we should trust Research Grade IDs kind of boils down to: If the correct ID of particular observations is important to you, check them yourself or find someone with the expertise to do so. Don't trust IDs uncritically unless the ID task is trivial or accuracy is not that important to your use of the data.

Regards,

Patrick


Charlie Hohn

Jul 21, 2018, 10:40:52 PM

to inatu...@googlegroups.com

Yeah, when accuracy is important you have to evaluate all data yourself. That's true in the 'professional world' as well. But I think they used the blind ID study to evaluate research grade accuracy. My non-scientific observation is that the iNat research grade error rate is similar to that of other forms of biological data collected by land management agencies, conservation groups, etc. Which is to say not perfect, but good, and problem data is usually pretty easy to spot.

============================

Charlie Hohn

Montpelier, Vermont


Patrick Alexander

Jul 22, 2018, 2:37:24 AM

to inatu...@googlegroups.com

What's the blind ID study?


Charlie Hohn

Jul 22, 2018, 7:55:41 AM

to inatu...@googlegroups.com

Patrick Alexander

Jul 23, 2018, 3:21:38 AM

to iNaturalist

Interesting. I was assuming that the scale on the figure Scott posted above ruled out that kind of approach... guessing by the scale on the y-axis and the number of columns maybe 150,000 to 200,000 identifications. But, then, if it's been going on for 15 months or so and there've been a lot of folks signing up on that form, maybe that is feasible. I hope we can look forward to more detail on this or maybe being able to play with the data a bit. I've been looking at the Grant Van Horn et al. paper Scott linked a little bit, but I'm finding it fairly opaque so far. It's not clear if the iNaturalist-based portion of the paper is coming from the blind ID study or not.

Charlie Hohn

Jul 23, 2018, 7:39:16 AM

to inatu...@googlegroups.com

Yeah, I’m not sure if that’s where they are getting all their info from, just that it’s relevant.


Scott Loarie

Jul 23, 2018, 9:26:49 AM

to inatu...@googlegroups.com

For the 'ground truthed' true IDs, we've been using IDs made within the last year by 'experts' (for a location-clade) registered via the identification experiment (https://www.inaturalist.org/pages/identification_quality_experiment), and then rolling the clock back a year and using a snapshot of the database from then for analysis, so that the other IDs we're analyzing are independent of the expert's influence.

For example, imagine at time_now we know that observation_a is 'ground truthed' at species_a because of the existence of an ID made within the last year by expert_a. Then if we roll the clock back to time_1_year_ago, before expert_a made their ID, we can analyze observation_a independent of the influence of expert_a, but we know that the true ID of observation_a is species_a.
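To make the rollback idea concrete, here is a minimal Python sketch. It is an illustration only, not iNaturalist's actual analysis code: the record fields (`obs`, `user`, `taxon`, `created`), both function names, and the way the Research Grade taxon at the snapshot is supplied are assumptions made for the example.

```python
from datetime import date


def split_by_snapshot(identifications, experts, snapshot_date):
    """Separate later expert IDs (treated as ground truth) from the IDs
    that already existed when the snapshot was taken."""
    truth = {}    # obs -> taxon given by an expert after the snapshot
    earlier = []  # IDs visible in the snapshot, free of that expert's influence
    for ident in identifications:
        if ident["user"] in experts and ident["created"] > snapshot_date:
            truth[ident["obs"]] = ident["taxon"]
        elif ident["created"] <= snapshot_date:
            earlier.append(ident)
    return truth, earlier


def error_rate(research_grade_at_snapshot, truth):
    """Share of snapshot Research Grade observations whose taxon disagrees
    with the later expert ID. Observations without an expert ID are skipped."""
    checked = [obs for obs in research_grade_at_snapshot if obs in truth]
    if not checked:
        return None
    wrong = sum(1 for obs in checked if research_grade_at_snapshot[obs] != truth[obs])
    return wrong / len(checked)


# Hypothetical data: expert_a IDs two observations after the snapshot date.
identifications = [
    {"obs": "observation_a", "user": "user_1", "taxon": "species_a", "created": date(2017, 5, 1)},
    {"obs": "observation_a", "user": "expert_a", "taxon": "species_a", "created": date(2018, 3, 1)},
    {"obs": "observation_b", "user": "user_2", "taxon": "species_b", "created": date(2017, 6, 1)},
    {"obs": "observation_b", "user": "expert_a", "taxon": "species_c", "created": date(2018, 4, 1)},
]
truth, earlier = split_by_snapshot(identifications, {"expert_a"}, date(2017, 7, 23))
rate = error_rate({"observation_a": "species_a", "observation_b": "species_b"}, truth)
```

The sketch only scores observations that an expert later identified, so the resulting rate describes that checked subset rather than every Research Grade observation.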

Hope that helps,

Scott


Sam Kieschnick

Jul 23, 2018, 12:36:12 PM

to iNaturalist

Hey Scott,

I think that your explanation and Grant's research paper would warrant a journal post on iNat. I'd sure like to link out to it when I get messages asking me about the accuracy/relevance/explanation of 'research grade.'

I've printed out the CVPR paper to digest it... later.

Thanks!

~Sam


Patrick Alexander

Jul 23, 2018, 8:50:46 PM

to inatu...@googlegroups.com

Hello Scott,

That helps, thanks! I may need to sit down with Grant Van Horn's paper when I have more time to devote to it.

I'm also a little curious whether the form at https://www.inaturalist.org/pages/identification_quality_experiment offers a good way for a generalist to join. I have a pile of genera that I think I know as well (at least in my area) as anyone other than one or two taxonomists who specialize in that group... and a pile of genera that are on my to-do list, that I really need to nail down someday but don't have a great handle on yet... and most are somewhere in between. I think, perhaps incorrectly, that I at least know whether or not I know something! But I can't really nail it down to a clade; I can't say that I know Brassicaceae in New Mexico (mostly, but not Draba or Lepidium), or Poaceae in New Mexico (I'm good on the C4s, iffy on C3s), and so forth.

Regards,

Patrick


paloma

Oct 28, 2018, 7:24:50 PM

to iNaturalist

I noticed in a couple of other threads that comments re an internal reputation system are coming up again, so I was wondering if there is any update to Scott Loarie's message attached, re the "earned reputation" approach from Grant Van Horn's presentation?
