Calculation of frontend hours seems off by almost a factor of 2.

Per

May 14, 2012, 5:51:42 PM

to google-a...@googlegroups.com

Hi Google team,

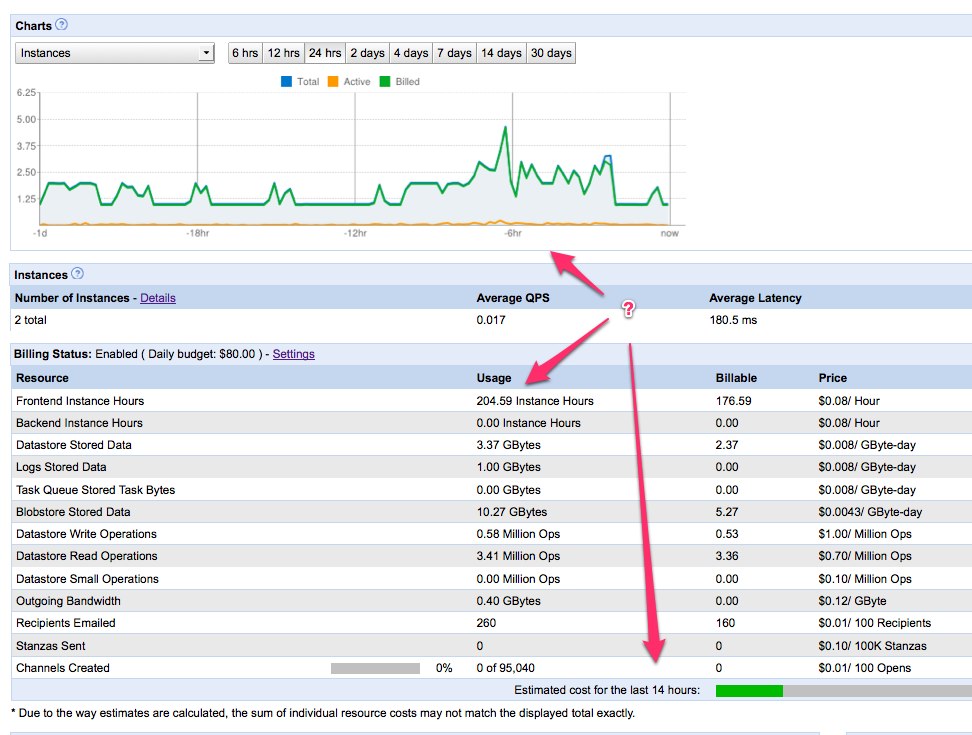

According to my understanding, F4 instances incur 4 instance hours per actual hour. Assuming an average of 2 instances over the past 14 hours, we should be at 2*14*4 = 112 instance hours, not 205. I understand that this data may not be exact, so maybe we should be at 125 or even 140 or so. Or am I mistaken?
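The arithmetic can also be run backwards: given the reported total, what average instance count would explain it? A minimal sketch, assuming the 4x billing multiplier for F4 instances stated above; the function names are illustrative, not part of any App Engine API.

```python
# Rough check of billed frontend instance hours.
# Assumption (from the post): one F4 instance running for one hour is billed
# as 4 instance hours.
F4_MULTIPLIER = 4


def expected_instance_hours(avg_instances: float, hours: float,
                            multiplier: float = F4_MULTIPLIER) -> float:
    """Billed instance hours for a steady average number of instances."""
    return avg_instances * hours * multiplier


def implied_avg_instances(billed_hours: float, hours: float,
                          multiplier: float = F4_MULTIPLIER) -> float:
    """Average instance count that would account for a given billed total."""
    return billed_hours / (hours * multiplier)


expected = expected_instance_hours(avg_instances=2, hours=14)
reported = 205
print(f"expected: {expected:.0f} instance hours")
print(f"ratio reported/expected: {reported / expected:.2f}")
print(f"implied average F4 instances: {implied_avg_instances(reported, 14):.2f}")
```

With these inputs the reported 205 instance hours is about 1.83 times the expected 112, which would correspond to roughly 3.7 F4 instances running on average rather than 2.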

This has been going on for about 2 weeks now, and our bills are getting very high, given that we rarely exceed 0.8 requests per second.

I raised two production issues (on Thursday and Friday), but I haven't even received confirmation of whether the issues have been created, so I'm trying the forum.

We used to be at $5 to $10 per day; now we're at $30 to $50. Some of it might be due to increased usage, but we certainly didn't quadruple the load. It would be great to get some insight into this.

Our app ID is small-improvements-hrd

Cheers,

Per

Gregory Nicholas

May 15, 2012, 5:01:26 AM

to google-a...@googlegroups.com

Not directly answering the question, but I'm posing another: why the eff are you running instances in such a manner for so little QPS?!

Also, at that low a QPS, I'd suggest you could substantially optimize the work you're doing to read data from the datastore, which would also help minimize the frontend instance workload.

Per

May 15, 2012, 9:17:13 AM

to google-a...@googlegroups.com

Hi Gregory,

If I'm doing something wrong here, then I'd love to learn how you do it. I tried setting the max idle instances to 1 (it didn't help, it only increased latency), and I set the pending queue to 1s, 2s and 3s (that didn't help either, but it increased the latency). If there is a way to limit the instances reliably, I would love to hear how you do it.

We have maybe 0.5 to 1 requests per second on average (the QPS shown in the screenshot above is only a snapshot of the past minute). Our average response time is around 300ms, occasionally more, occasionally less. It's not great, but it's still a lot lower than the 1s limit for continuous usage.

We do use memcache where we can, but it's limited to some 10MB to 30MB for us at the moment, and the hit ratio is always around 65%, so we have to access the datastore more than we'd like. We're also denormalising plenty of data for faster access, but it would substantially slow us down if we had to denormalise *everything* that is displayed on a page. I don't think it should be a problem to do 2 to 3 queries in a single request, maybe 5 datastore gets plus a few memcache gets, should it? Given that we're developing a complex application (www.small-improvements.com) and not just a number-crunching app, this seems not too bad. Performance-wise we're happy; it's just the cost that's prohibitive.
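As a rough sanity check on these load figures, Little's law (average concurrency = arrival rate x average response time) estimates how many requests are in flight at any moment. A minimal sketch, assuming steady arrivals and one request served at a time per instance; real traffic is burstier and instance start-up time is not captured, so treat the result as a lower bound rather than a prediction of how many instances get scheduled.

```python
def avg_concurrency(requests_per_second: float, response_time_s: float) -> float:
    """Little's law: L = lambda * W, the mean number of requests in flight."""
    return requests_per_second * response_time_s


AVG_RESPONSE_TIME_S = 0.3  # ~300ms average response time, from the post

for rps in (0.5, 0.8, 1.0):
    in_flight = avg_concurrency(rps, AVG_RESPONSE_TIME_S)
    # If an instance serves one request at a time, a value below 1.0 is also
    # the fraction of time a single instance would be busy on average.
    print(f"{rps} req/s x {AVG_RESPONSE_TIME_S} s -> "
          f"{in_flight:.2f} requests in flight on average")
```

Even at 1 request per second this comes to about 0.3 requests in flight, i.e. a single instance would on average be busy less than a third of the time.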

Anyway, I would love to hear how you do it, and maybe a screenshot of your system (and the ratio of requests to instances) would be interesting.

Cheers,

Per
