a z/OS port in the future

Bill O'Farrell

Matthew Dempsky

Now that Go is available for Linux on z (i.e. s390x), I'd like to give everyone a heads-up that we hope to have a z/OS port ready in the next few months. z/OS is the modern 64-bit mainframe operating system, with a lineage that goes back to MVS, first released in 1974. One of its biggest claims to fame is its stability. Some users haven't had any unplanned downtime in literally decades, which is probably why more than 90% of Fortune 500 companies run z/OS. It would be great to get Go running on this robust and (for business at least) ubiquitous platform. We've had great support from the community in the port to Linux on z, so I wanted to run this by everyone early in the process. It won't be ready in time for 1.8, but 1.9 could be a potential target. Comments appreciated.

--

You received this message because you are subscribed to the Google Groups "golang-dev" group.

To unsubscribe from this group and stop receiving emails from it, send an email to golang-dev+unsubscribe@googlegroups.com.

For more options, visit https://groups.google.com/d/optout.

Rob Pike

Dave Cheney


Keith Randall


Brad Fitzpatrick

Matthew Dempsky

The "os" is a stutter. GOOS=z.

Andrew Gerrand


Brad Fitzpatrick

Please. That naming was sooo last month.

It's macOS now. GOOS=mac.


Matthew Dempsky

John McKown

Neat. On a scale from Windows to Solaris, how POSIX-y is z/OS?

What's the long-term binary compatibility story like? Is there a stable kernel and/or userland ABI we can/should rely on?

Does GCC run on z/OS? Is there anything that will make supporting cgo extra exciting?

John McKown

mun...@ca.ibm.com


No GCC... The C compiler on z/OS is IBM's XL C. One exciting (?) thing is that XPLINK (the newest linkage convention on z/OS) stores the stack pointer in R4, rather than R15 like ELF does. It's not an enormous problem, but it does mean that the Go ABI (at least as it currently stands) will be a bit less compatible with the system linkage convention than it is on Linux. But at least the stack grows downwards in XPLINK...

Are you thinking GOOS=zos or something else?


Matthew Dempsky

Very long term :) LE provides a way to get at POSIX functions via a userland ABI, so we can use that for "system calls" and don't have to use cgo necessarily.


Matthew Dempsky

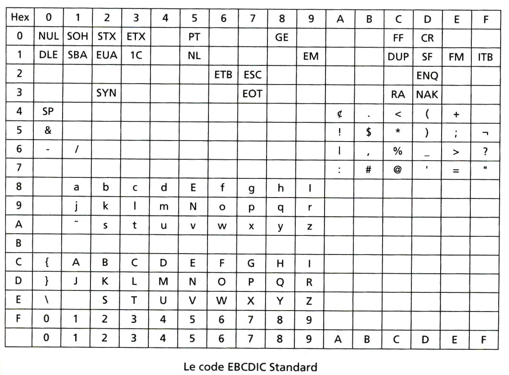

The single biggest PITA is going to be the character set. The native IBM z/OS character set is EBCDIC, and not just one variant: historic z/OS has used CP-037, while z/OS UNIX uses IBM-1047. They are generally compatible, but some code points differ for things such as []{} and other "special" characters, which requires some adjustments if a person is using a 3270 emulator. The reason this is a PITA is that, from what I can tell, Go is designed to use UNICODE only and likely won't work with EBCDIC. Many coding assumptions made in C with ASCII (UNICODE) are not true in EBCDIC. Case in point: the mappings of Ctrl-<letter> in EBCDIC are not contiguous like they are in ASCII, and their code points don't collate in the same order, so you can't map from a <letter> to a Ctrl-<letter> using a simple formula such as <letter>&0x1f. Another weirdness is that there is a "gap" in the code points between "I" and "J" and between "R" and "S", as well as between "i" and "j" and between "r" and "s". EBCDIC is a very weird encoding if you come from the ASCII world. It makes sense only if you come (as z/OS originally does) from the "Hollerith card" (aka "punched card") world. This was IBM's original world. When they made the original S/360 (the hardware progenitor of the current z series), they decided to be compatible with the equipment their customers already had. Bad decision, at least in today's world.
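To make the letter gaps concrete, here is a small Go sketch (illustrative only, not code from any actual port) that maps uppercase ASCII letters to their EBCDIC code points. It assumes the letter positions that CP-037 and IBM-1047 share: A-I at 0xC1-0xC9, J-R at 0xD1-0xD9 and S-Z at 0xE2-0xE9. The function names are hypothetical.

```go
package main

import "fmt"

// toEBCDICUpper maps an uppercase ASCII letter to its EBCDIC code point.
// CP-037 and IBM-1047 agree on these positions. The letters are split into
// three runs, so "next letter" is not simply x+1 across the run boundaries.
func toEBCDICUpper(r rune) (byte, bool) {
	switch {
	case r >= 'A' && r <= 'I':
		return byte(0xC1 + (r - 'A')), true
	case r >= 'J' && r <= 'R':
		return byte(0xD1 + (r - 'J')), true
	case r >= 'S' && r <= 'Z':
		return byte(0xE2 + (r - 'S')), true
	}
	return 0, false
}

// asciiCtrl applies the ASCII-only trick letter&0x1f, e.g. 'C' -> 0x03 (Ctrl-C).
// EBCDIC has no equivalent one-line formula.
func asciiCtrl(r rune) byte { return byte(r) & 0x1f }

func main() {
	i, _ := toEBCDICUpper('I')
	j, _ := toEBCDICUpper('J')
	r, _ := toEBCDICUpper('R')
	s, _ := toEBCDICUpper('S')
	fmt.Printf("I=%#x J=%#x (%d other code points between)\n", i, j, j-i-1)
	fmt.Printf("R=%#x S=%#x (%d other code points between)\n", r, s, s-r-1)
	fmt.Printf("ASCII Ctrl-C=%#x\n", asciiCtrl('C'))
}
```

The three-way switch is the whole point: crossing the I/J and R/S boundaries needs a table or range logic, whereas the ASCII Ctrl mapping needs only a mask.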

David Chase


Brad Fitzpatrick

andrey mirtchovski

John McKown

On the upside, Brad already has a CL to implement trigraph support.

mun...@ca.ibm.com

The Go spec says source files are UTF-8. How do we reconcile this on z/OS if the rest of the OS expects text files to be EBCDIC?

As for the Go source files themselves, they are defined to be UTF-8, so editors on z/OS will just need to switch character sets for those files. It's not too bad: filesystem tags can be used, or the files can be edited on a shared file system, which is what I tend to do.

Bill O'Farrell

Rob Pike


Bill O'Farrell


Rob Pike


Michael Jones

I’ve had the privilege of mentorship from both Bo Evans and Fred Brooks. I feel great admiration for IBM’s hardware team and many aspects of the mainframe software groups: channel controllers, RACF, VM/370 and beyond, John Cocke, Hursley House, Mike Cowlishaw, RTP, Henry Rich, etc. All magical.

One comment on EBCDIC’s glyph arrangement: it never made sense to me until I got the fold-out pocket reference card that showed the character layout on a 16x16 grid. In that format you can see that all of the logical groupings of symbols are tightly packed rectangles separated by whitespace. Only on this “virtual {hex}x{hex} cross-product typewriter” did it make some sense…

…but even this structure is made invisible by typical columnar layouts. You can also understand here the great woes of EBCDIC:

The difficult to comprehend gap in rectangle structure before the ‘s’ and ‘S’.

The debris of punctuation strewn down the left column and into the gap before the esses.

The 118 characters that are arranged to occupy more than the seven low-order bits of the byte, which Fred Brooks of the S/360 & OS/360 team chose to be 8 bits long before.

The typical at the time but now quaint notion that every special control specification needed its own symbol (“in band signaling”) rather than an escape mechanism and then subsequent normal characters to describe the control. This made logic decoding simpler in devices of yore, but wastes symbols on uses that are abandoned before the assignment is standardized. The choice was not wrong for the times, but the lesson is one for the ages.

EBCDIC has the interesting property that you can go from lower case to upper case with a constant offset, but cannot get from one letter to the next with x+1 or test for alphabetical with ‘a’ <= x <= ‘z’ || ‘A’ <= x <= ‘Z’.
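A sketch of both properties in Go, again assuming the CP-037/IBM-1047 letter positions (lowercase a-i at 0x81-0x89, j-r at 0x91-0x99, s-z at 0xA2-0xA9, with each uppercase letter exactly 0x40 higher). The helpers are hypothetical, not part of any standard package.

```go
package main

import "fmt"

// isEBCDICLower reports whether x is one of a-z in the CP-037/IBM-1047 layout.
func isEBCDICLower(x byte) bool {
	return (x >= 0x81 && x <= 0x89) || (x >= 0x91 && x <= 0x99) || (x >= 0xA2 && x <= 0xA9)
}

// isEBCDICUpper reports whether x is one of A-Z in the same layout.
func isEBCDICUpper(x byte) bool {
	return (x >= 0xC1 && x <= 0xC9) || (x >= 0xD1 && x <= 0xD9) || (x >= 0xE2 && x <= 0xE9)
}

// toEBCDICUpper uses the constant 0x40 offset between the two cases.
func toEBCDICUpper(x byte) byte {
	if isEBCDICLower(x) {
		return x + 0x40
	}
	return x
}

func main() {
	gap := byte(0x8A)                   // lies between 'i' (0x89) and 'j' (0x91)
	naive := gap >= 0x81 && gap <= 0xA9 // 'a' <= x <= 'z' using EBCDIC values
	fmt.Println(naive, isEBCDICLower(gap))   // true false
	fmt.Printf("%#x\n", toEBCDICUpper(0x96)) // 'o' -> 'O': 0xd6
}
```

The naive range test accepts a byte from the gap, while the three-range test rejects it; case conversion, by contrast, stays a single addition.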

Michael

Lucio De Re

think Russian/Cyrillic does that), rather than a distinct set of symbols, none of EBCDIC, ASCII or UNICODE got it right. Maybe Seymour Cray's Fieldata did :-). Or was the DoD actually responsible for that?

Nowhere is there the necessary elbow room to fix that, is there?

Lucio.

On 8/26/16, Michael Jones <michae...@gmail.com> wrote:


Rob Pike

mikioh...@gmail.com

mun...@ca.ibm.com

So that's easy, Go code on zOS is ASCII or maybe, one hopes, full UTF-8 and Unicode using some editor mode.

Yes, the idea is to use UTF-8 with the limitation that strings passed to system calls (filenames, hostnames etc.) will be restricted to ASCII in order to take advantage of the automatic conversion facilities.

But as for programs themselves, they must work on EBCDIC

We'll need to provide some sort of conversion library. It's not clear yet (to me anyway) whether it should live in the standard library or somewhere else (x/text maybe?). Either way, inside Go there will need to be a conversion facility available for tests and the linker, but personally I'm happy for that to live in an internal package.

I'm hoping (maybe I'm being naive) that if a developer wants to interact with an EBCDIC program or file, they will just need to use a shim implementing the io.Reader and io.Writer interfaces, in exactly the same way they would if they were interacting with some other file/stream encoding (e.g. gzip). Third-party libraries might not always work "out of the box", but this is true of Linux and Windows too. In my opinion, the UTF-8-centric nature of Go might well be very useful for providing web interfaces and other external communications.

mun...@ca.ibm.com

Sorry, to be clear: is it the kernel ABI or the userland ABI that has long-term support, or both?

http://www.ibm.com/support/knowledgecenter/SSLTBW_2.2.0/com.ibm.zos.v2r2.ceea600/olmc.htm

I don't know if there will be much need to interact with the kernel directly but I believe the compatibility guarantees are similar.

On Thursday, August 25, 2016 at 11:31:30 AM UTC-4, Matthew Dempsky wrote:

john...@gmail.com

John Roth

john...@gmail.com

A couple of minor points before getting into the meat. One, of almost no interest but mentioned in the thread, is that 1401 emulation was never officially supported by any operating system; it was always a standalone hardware option on the 360/30 and 360/40. I think there were a couple of heavily modified versions of DOS that supported it on a 360/40. There are quite competent 1401 interpreters that work today, so if playing with a simulated 1401 rocks your boat, have at it.

There’s a reason that the Language Environment doesn’t use R15: system calls use R0 and R1 and return a condition code in R15, so using R15 for the stack pointer would mean saving and restoring it around each and every system call.

On EBCDIC (Extended Binary Coded Decimal Interchange Code): it’s an 8-bit version of BCDIC (Binary Coded Decimal Interchange Code), which was used on earlier IBM systems; that in turn was an extension of the coding used on punched card systems. (And yes, I worked on some of those earlier systems.) In the 6-bit encoding, the vacant position in front of the letter S held the slash. The reason for the weird layout is that the designers decided to use the high-order bit to distinguish alphanumeric characters from special characters. That made some common character-manipulation tasks relatively easy, in Assembler. Once you realize that, EBCDIC is simply BCDIC with some twiddling in the two high-order bits.
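The punched-card heritage shows up directly in the bit patterns: the high nibble of an EBCDIC letter plays the role of the card's zone punch and the low nibble its digit punch. The Go sketch below (hypothetical helper, CP-037/IBM-1047 letter positions assumed) recovers the Hollerith punches from an uppercase letter; 0xE1 is rejected because the 0-1 combination that would precede S was the slash on the card.

```go
package main

import "fmt"

// zonePunch maps the EBCDIC zone nibble of an uppercase letter to the
// punched-card zone row: C -> 12 (A-I), D -> 11 (J-R), E -> 0 (S-Z).
var zonePunch = map[byte]string{0xC: "12", 0xD: "11", 0xE: "0"}

// cardPunches returns the Hollerith punches ("zone-digit") for an EBCDIC
// uppercase letter, e.g. 0xC1 ('A') -> "12-1". Non-letters report false.
func cardPunches(b byte) (string, bool) {
	zone, digit := b>>4, b&0x0F
	row, ok := zonePunch[zone]
	// 0-1 was the slash, so the 0-zone letters start at digit 2 (S).
	if !ok || digit < 1 || digit > 9 || (zone == 0xE && digit == 1) {
		return "", false
	}
	return fmt.Sprintf("%s-%d", row, digit), true
}

func main() {
	for _, b := range []byte{0xC1, 0xD1, 0xE2, 0xE9, 0xE1} {
		if p, ok := cardPunches(b); ok {
			fmt.Printf("%#x -> %s\n", b, p)
		} else {
			fmt.Printf("%#x -> not a letter\n", b)
		}
	}
}
```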

Stability isn’t the reason that companies have stuck with it; modern server operating systems are just as stable. The reason people have stuck with it, despite its problems and despite its being a real outlier among modern operating systems, is COBOL, and specifically the difficulty of converting legacy programs that are central to business operations, together with the file formats they require.

The last major gig I had before retiring was on a mixed Unix and mainframe team, and I can personally testify that the Unix people simply didn’t comprehend the mainframe side. It’s too different. That problem, by itself, will almost certainly relegate a zOS port of Go to toy status: the people doing the port most likely have no idea what the business side has to deal with. Operations and system administration, somewhat. Business, no.

Let’s start out with data types. Anything that deals with files formatted to work with COBOL has to deal with fixed length 8-bit EBCDIC character fields, as well as fixed point decimal arithmetic. If you expect Go to be anything other than a toy on that system, it has to handle those two data types. Now, if you look at the Wikipedia article on COBOL, it seems like there are alternatives. In actual practice, that is real programs doing real work, there aren’t. Those new-fangled data types simply haven't been adopted to any great extent.

Fixed-length 8-bit character fields are the reason that UTF-8 encoding is a non-starter. If a field is supposed to be 30 characters, it’s 30 bytes and that’s it. No more, no less. There’s no room for multi-byte characters, and there’s no silliness about the string being variable length ending with a null character. It’s got 30 characters, padded on the right with blanks.
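The layout described here can be sketched in Go as follows. `fixedField` is a hypothetical helper that produces a PIC X(width)-style field: exactly `width` bytes, blank-padded on the right, with the blank byte passed in because it is 0x40 in EBCDIC and 0x20 in ASCII. The second part of `main` shows why UTF-8 fits poorly: a string's byte length can exceed its character count.

```go
package main

import (
	"fmt"
	"unicode/utf8"
)

// fixedField lays s out as exactly width bytes, padded on the right with
// blank. s is assumed to already be in the target single-byte code page.
func fixedField(s string, width int, blank byte) ([]byte, error) {
	if len(s) > width {
		return nil, fmt.Errorf("%q is %d bytes, field holds %d", s, len(s), width)
	}
	f := make([]byte, width)
	copy(f, s)
	for i := len(s); i < width; i++ {
		f[i] = blank
	}
	return f, nil
}

func main() {
	f, _ := fixedField("SMITH", 10, ' ')
	fmt.Printf("%q\n", f) // "SMITH     "

	// In UTF-8, "ü" takes two bytes, so 6 characters occupy 7 bytes.
	name := "Müller"
	fmt.Println(utf8.RuneCountInString(name), len(name)) // 6 7
}
```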

Fixed decimal means the field is composed of decimal digits with an assumed decimal point that’s part of the data type. The field can be packed two digits per byte. This has hardware support, meaning that if you expect any speed out of it, a Go compiler that supports it will have to generate the appropriate instructions. Otherwise you’re stuck with format conversions to and from the disk record formats, and you can expect disk formats will have packed decimal fields. Packed fields can be as long as 31 decimal digits, and since a decimal digit is somewhat over 3 bits of information, it can be too long to put in a 63-bit integer. That’s not actually a practical issue, fortunately, and at least some versions of the zSystem have decimal floating point in hardware. Of course, that means the compiler is going to have to generate the correct instructions.
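Here is a hedged sketch of decoding such a field in software, i.e. the format-conversion route rather than generated decimal instructions. It assumes the standard packed layout: two BCD digits per byte, with the last nibble holding the sign (0xB or 0xD negative; 0xA, 0xC, 0xE or 0xF positive). Since log2(10) ≈ 3.32, a 31-digit field needs about 103 bits, while an int64 tops out near 9.2×10^18, so this sketch rejects fields longer than 9 bytes (17 digits) rather than risk overflow. `decodePacked` is a hypothetical name.

```go
package main

import (
	"errors"
	"fmt"
)

// decodePacked decodes a packed-decimal (COBOL COMP-3) field into an int64.
// The implied decimal point is not stored, so the caller applies the scale
// from the record layout.
func decodePacked(b []byte) (int64, error) {
	if len(b) == 0 {
		return 0, errors.New("empty packed field")
	}
	// n bytes hold 2n-1 digits; 10 bytes (19 digits) can exceed int64.
	if len(b) > 9 {
		return 0, fmt.Errorf("%d-byte field too long for int64", len(b))
	}
	var v int64
	for i, x := range b {
		hi, lo := x>>4, x&0x0F
		if hi > 9 {
			return 0, fmt.Errorf("invalid digit nibble %#x", hi)
		}
		v = v*10 + int64(hi)
		if i < len(b)-1 {
			if lo > 9 {
				return 0, fmt.Errorf("invalid digit nibble %#x", lo)
			}
			v = v*10 + int64(lo)
			continue
		}
		// Last low nibble is the sign.
		switch lo {
		case 0xB, 0xD:
			v = -v
		case 0xA, 0xC, 0xE, 0xF:
			// positive
		default:
			return 0, fmt.Errorf("invalid sign nibble %#x", lo)
		}
	}
	return v, nil
}

func main() {
	// A PIC S9(5)V99 COMP-3 field holding +123.45: digits 0012345, sign C.
	v, err := decodePacked([]byte{0x00, 0x12, 0x34, 0x5C})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(v) // 12345, i.e. 123.45 once the implied scale of 2 is applied
}
```

Leaving the scale to the caller mirrors the point above that the decimal point belongs to the data type, not to the stored bytes.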

Now for file types. Unix is based on the stream of bytes concept. zOS is based on a record and block concept. If I want to read a record from a sequential file, I ask for the next record. How many bytes do I ask for? I don’t. Doing application programming, I simply don’t care how big the record is. As long as it maps into the correct struct (FD in COBOL, and COBOL will take care of the record length for me), I’m good with it. If the records are variable length, I don’t even know how big it’s going to be - that length is kept in the first two bytes in binary. Records are not delimited by any form of record delimiter.

Now for the tool-chain. On that last gig, major business systems were written in macro Assembler. I hope nobody dislocated their jaw on that one! They may have converted it by now (I certainly hope so!), but the key here is that zOS programmers who are familiar with Assembler will not be happy with the assembler that comes with the Go toolchain. They’ll expect the existing High Level Assembler to integrate properly with Go programs. That is not going to be trivial.

With that as background, it should be fairly obvious why “essential” tools like the GNU utilities haven’t been ported officially, and why UTF-8 hasn’t taken over from the older code page character sets like it’s doing on UNIXy systems. It’s completely pointless. The scripting language that comes with TSO (Time Sharing Option) (which is not a UNIX shell) has no resemblance to UNIX shell scripting languages. Batch jobs are run using something called JCL (Job Control Language - of which the less said the better). If you’re going to go to the trouble of converting that mess, you might as well go all the way to a real UNIXy system and frankly, a large proportion of companies running zOS would like to, but the cost of conversion is way too high.

Back to Language Environment. Since it’s a user-land facility, you have to deal with a Unix-style stack, which means that calls to and from LE are most likely going to have to do the same kind of stack shifting you see with CGO. It’s also not compatible with the POSIX environment.

I’ve never dealt with the POSIX environment; it may have solutions for some of the problems. If it doesn’t, I’d suggest going straight to the SVC call interface to the operating system.

Turning Go into a reasonable tool for existing zOS shops would be an interesting experience, for some value of interesting.

John Roth

Lucio De Re

I'd like to add a teeny item of information that I have never seen highlighted. Back in 387 days, BCD calculations were part of the FPU instruction set. In fact, one version of Turbo Pascal allowed one to pick which format floating point computations would take place in. Of course, that is still there. Whether there's any scope outside of COBOL to use the BCD FPU capabilities of the i86 chips is debatable. I presume that neither MIPS nor ARM provides for it, in any event. PPC, maybe?

Lucio.

Bill O'Farrell

Note that UTF-8 isn't a problem on z/OS. Files can be tagged as UTF-8 and converted as necessary.

john...@gmail.com

On Monday, September 12, 2016 at 2:00:52 PM UTC-6, Bill O'Farrell wrote:

A couple of clarifications. I don't think anybody thinks that Go will be supplanting Cobol on z/OS. Rather, it will be interacting with existing "systems of record." There are important applications written in Go (e.g. HyperLedger and Docker) that would require a robust, complete Go implementation on z/OS. In that sense Go will certainly not be a toy. With CGO it could interface with other major applications (DB2, CICS) and things written in HLASM.


This begins to make sense. What you seem to be saying is that there's this neat system with oodles of industry bigwigs signed on that runs under Linux, and you think that porting it to zOS would be a good idea. Since it's written in Go, that's the first thing to port. Docker, of course, is an operating system utility, not an application framework. HyperLedger is an interesting concept if the financial industry and the financial regulators actually buy into it.

Being old enough that I've earned the right to be a bit cynical, I can say that I've seen these industry consortia pursuing the next big thing come and go without actually changing anything. All that the list of Big Names signed up to HyperLedger means to me is that a lot of companies are willing to devote some resources to it to make sure that they don't get left out in the cold if it takes off. Of course, sometimes one of these ideas does manage to catch fire and change some small part of the world, and I'm not going to indulge in prophecy.

I'd think that CGO is a requirement; you're not going to interface with Language Environment without being able to shift into an environment that supports C and C++, not to mention COBOL and PL/1. You can, of course, build the run-time library using the OS interfaces without needing to do that, and you're going to have to do a lot of that anyway: Language Environment does not provide I/O services to the languages it supports. Those are in the specific languages' run-time libraries.

John Roth