Sherry Lake

Mar 15, 2016, 10:56:14 AM

to Dataverse Users Community

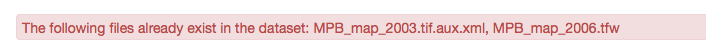

What are the criteria for duplicate file flagging on upload? A zip file with 30 items was uploaded to our UVa Dataverse and two were flagged as dups:

As far as I can tell these are not "really" dups. I am not sure exactly which files Dataverse thinks they are duplicates of, but I can guess based on filesize. Even if the contents are the same, I think it is safe to say that it is OK to have two files with the same contents and different file names in a dataset.

I've put the zip file here for someone to check out:

https://virginia.box.com/s/6jv4afrjjqssa10vktl7nifeeoffi7lb

Thanks

Sherry Lake

Philip Durbin

Mar 15, 2016, 11:00:48 AM

to dataverse...@googlegroups.com

Duplicate file detection is based on checksums. Please see this (open) issue:

Update File Ingest Documentation To Explain Duplicate File Handling · Issue #2956 · IQSS/dataverse - https://github.com/IQSS/dataverse/issues/2956
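To see which entries a checksum-based check would treat as duplicates, the files inside the zip can be hashed and grouped by digest. The sketch below is only an illustration: it assumes MD5 (the thread does not say which algorithm Dataverse uses), and `find_duplicate_entries` and `build_demo_zip` are hypothetical helper names, not part of Dataverse.

```python
import hashlib
import io
import zipfile
from collections import defaultdict


def find_duplicate_entries(zip_source, algorithm="md5"):
    """Group the file entries of a zip archive by content checksum.

    Returns a dict mapping checksum -> list of entry names, keeping only
    checksums shared by two or more entries. The algorithm is an assumption.
    """
    by_checksum = defaultdict(list)
    with zipfile.ZipFile(zip_source) as archive:
        for info in archive.infolist():
            if info.is_dir():
                continue  # directories have no content to hash
            digest = hashlib.new(algorithm)
            with archive.open(info) as entry:
                # Read in chunks so large files are not loaded into memory at once.
                for chunk in iter(lambda: entry.read(65536), b""):
                    digest.update(chunk)
            by_checksum[digest.hexdigest()].append(info.filename)
    return {c: names for c, names in by_checksum.items() if len(names) > 1}


def build_demo_zip():
    """Build a small in-memory zip with two identical files under different names."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("input_a.csv", "x,y\n1,2\n")
        archive.writestr("input_b.csv", "x,y\n1,2\n")  # same bytes, different name
        archive.writestr("readme.txt", "unrelated content\n")
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    for checksum, names in find_duplicate_entries(build_demo_zip()).items():
        print(checksum, names)
```

Passing a path to a real zip file instead of the demo buffer lists the groups of entries that share a checksum, which is more reliable than guessing from file size.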

--

You received this message because you are subscribed to the Google Groups "Dataverse Users Community" group.

To unsubscribe from this group and stop receiving emails from it, send an email to dataverse-commu...@googlegroups.com.

To post to this group, send email to dataverse...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/dataverse-community/c8ff83e4-a641-4e60-a9a1-19dc988433e3%40googlegroups.com.

For more options, visit https://groups.google.com/d/optout.

--

Philip Durbin

Software Developer for http://dataverse.org

http://www.iq.harvard.edu/people/philip-durbin

Sherry Lake

Mar 15, 2016, 11:10:22 AM

to Dataverse Users Community, philip...@harvard.edu

I am thinking that I am not in the business of questioning why a researcher has "duplicate" files with different file names in their dataset. So is there no workaround for Dataverse to accept these files?

Another possible workaround is to keep the zip file "zipped".

Is there a way for Dataverse NOT to unzip a zip file?

Thanks.

Sherry

Mercè Crosas

Mar 15, 2016, 7:18:06 PM

to dataverse...@googlegroups.com, Philip Durbin

Sherry,

The main reason it is generally not good practice to leave a file zipped when archiving it in Dataverse is that a zip file is not a good preservation format. In addition, if the file is not unzipped, we can't display information about each individual file on the dataset landing page. Providing this information to users is good for transparency and for understanding what's in the dataset before they download the file.

However, I can see that in some cases keeping a zip file might be useful. One solution would be to have an option upon upload that asks the depositor whether or not to unzip the file. If this would be useful to you, would you like to enter an issue on GitHub with this request?

Thanks,

Merce

Mercè Crosas, Ph.D.

Chief Data Science and Technology Officer, IQSS

Harvard University

Sherry Lake

Mar 16, 2016, 10:47:23 AM

to dataverse...@googlegroups.com

Mercè,

Thanks for the explanation. It makes sense. I'll think about specific use cases for keeping a zip file zipped on upload (such as uploading a zip file to preserve the directory structure, as a sort of workaround for the fact that Dataverse does not support a folder hierarchy: https://github.com/IQSS/dataverse/issues/2249).
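The directory structure mentioned here lives in the zip entry names themselves, which carry relative paths. The sketch below shows how that hierarchy can be read back from an archive; `entries_by_folder` and the demo file names are hypothetical and say nothing about how Dataverse handles folders.

```python
import io
import zipfile
from collections import defaultdict
from pathlib import PurePosixPath


def entries_by_folder(zip_source):
    """Map each folder path inside the archive to the file names it contains."""
    folders = defaultdict(list)
    with zipfile.ZipFile(zip_source) as archive:
        for info in archive.infolist():
            if info.is_dir():
                continue  # skip explicit directory entries
            path = PurePosixPath(info.filename)  # zip entry names use "/" separators
            folders[str(path.parent)].append(path.name)
    return dict(folders)


def build_demo_zip():
    """Build an in-memory zip with a small, made-up folder layout."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("scripts/run.R", "# analysis script\n")
        archive.writestr("data/raw/a.csv", "x,y\n1,2\n")
        archive.writestr("readme.txt", "top-level file\n")
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    for folder, names in entries_by_folder(build_demo_zip()).items():
        print(folder, names)
```

Files at the top level of the archive are reported under the folder `"."`.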

Mercè Crosas

Mar 16, 2016, 10:59:54 AM

to dataverse...@googlegroups.com

We plan to support the original directory structure in the near future. The SBGrid team at Harvard Medical School is working on this for their biomedical data.

Merce

Mercè Crosas, Ph.D.

Chief Data Science and Technology Officer, IQSS

Harvard University

Sherry Lake

Mar 16, 2016, 11:11:14 AM

to Dataverse Users Community, philip...@harvard.edu

Restating my concern over duplicate file flagging (actually not just "flagging" but preventing upload).

I am thinking that I am not in the business of questioning why a researcher has "duplicate" files with different file names in their dataset. So is there any workaround for Dataverse to accept these files?

If a researcher has two files that just happen to contain the same information (the same checksum), I don't think that should stop the file from being uploaded; maybe it could just be flagged. There may be a reason for different filenames with the same content (for example, the files are used in a script as part of the analysis, where the name of the file matters to the script and thus would be important for transparency and understanding of the methodology).

Thanks for listening; I welcome feedback and comments.

Sherry
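The "flag, don't block" behavior proposed above can be sketched as follows. This is a hypothetical illustration, not Dataverse code: `UploadReport` and `accept_with_flags` are invented names, and MD5 is assumed as the checksum.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class UploadReport:
    accepted: list = field(default_factory=list)  # file names that were kept
    warnings: list = field(default_factory=list)  # duplicate-content notices


def accept_with_flags(files):
    """Accept every file, but warn when its content matches an earlier file.

    `files` is an iterable of (name, content_bytes) pairs.
    """
    report = UploadReport()
    first_seen = {}  # checksum -> name of the first file with that content
    for name, content in files:
        checksum = hashlib.md5(content).hexdigest()  # MD5 is an assumption
        if checksum in first_seen:
            report.warnings.append(
                f"{name} has the same content as {first_seen[checksum]}"
            )
        else:
            first_seen[checksum] = name
        report.accepted.append(name)  # never rejected, only flagged
    return report


if __name__ == "__main__":
    demo = [
        ("train.csv", b"1,2\n"),
        ("baseline.csv", b"1,2\n"),  # same bytes under a different name
        ("notes.txt", b"hi\n"),
    ]
    report = accept_with_flags(demo)
    print(report.accepted)
    print(report.warnings)
```

Under this policy the depositor still sees which files share content, but a script that depends on both file names keeps working.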
