Re: Help in running a model

Sachin Joglekar

Jul 28, 2021, 4:30:11 PM

to TensorFlow Lite, buis...@gmail.com

Not sure if you have attached the model correctly? Can you share the TFLite converter output (a .tflite file), along with the snippet of code that you are running for model inference?

On Tuesday, July 27, 2021 at 4:52:19 PM UTC-7 buis...@gmail.com wrote:

Hi all,

I am just trying to run a different model instead of the one in the hello world project, so I changed the model.cc file accordingly. However, my new model doesn't run correctly with this change: it shows "aborted" on stdout and terminates. I figured out that the error arises at this line:

TfLiteStatus allocate_status = interpreter->AllocateTensors();

Here is my model architecture and model.cc file. Any help would be appreciated.

Thanks

Sachin Joglekar

Jul 28, 2021, 4:48:39 PM

to TensorFlow Lite, buis...@gmail.com, Nat Jeffries, Sachin Joglekar

Thanks for providing the model! CC'ing Nat, who knows our Micro APIs better, to take a look at your code and model.

On Wednesday, July 28, 2021 at 1:44:52 PM UTC-7 buis...@gmail.com wrote:

Here is the tflite model: https://drive.google.com/file/d/1nq6PXrq4B2AjZaekfoQHRknzDkqFYFwP/view?usp=sharing

Buisness Professionals

Jul 28, 2021, 5:25:19 PM

to Sachin Joglekar, TensorFlow Lite, Nat Jeffries

Thanks Sachin!

Buisness Professionals

Jul 28, 2021, 7:31:35 PM

to Nat Jeffries, Sachin Joglekar, TensorFlow Lite

Hi Nat,

I did increase the arena to about 2,000,000 and still, the same error occurs. I will try the debugging method you suggested to see if I can find a solution to the problem.

On Wed, 28 Jul 2021 at 14:37, Nat Jeffries <nj...@google.com> wrote:

Hi there. I took a quick look at the model and we should support all the layers in TFLM. It's likely you have not increased the arena sufficiently to run the model, since your model is quite a bit larger than the hello world example.

If you want to dig deeper, please build with BUILD_TYPE=debug and run gdb <binary>. The path to the binary should be printed out during the last stages of the build.

Thanks,

Nat

Buisness Professionals

Jul 28, 2021, 7:59:21 PM

to TensorFlow Lite, Buisness Professionals, Sachin Joglekar, TensorFlow Lite, Nat Jeffries

Hi Nat,

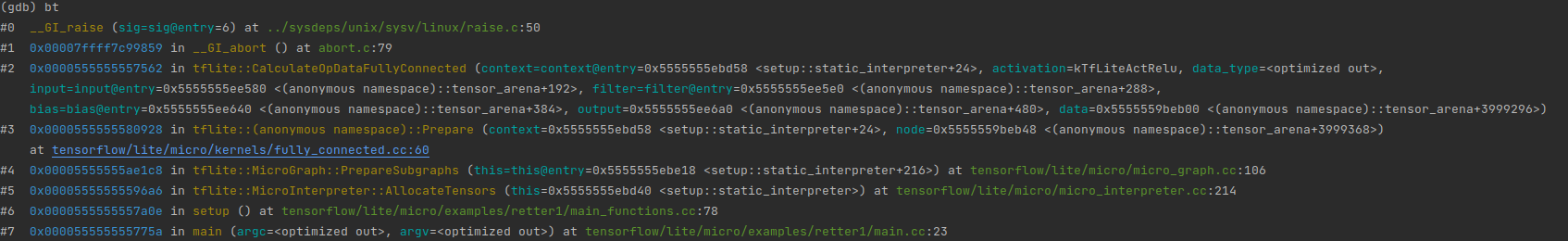

Thanks for the suggestion. I was able to Debug it and found out something isn't working with fullyconnected.cc file. Can you help me figure out the issue? Here is the error trace:

Same error trace in text format:

#0 __GI_raise (sig=sig@entry=6) at ../sysdeps/unix/sysv/linux/raise.c:50

#1 0x00007ffff7c99859 in __GI_abort () at abort.c:79

#2 0x0000555555557562 in tflite::CalculateOpDataFullyConnected (context=context@entry=0x5555555ebd58 <setup::static_interpreter+24>, activation=kTfLiteActRelu, data_type=<optimized out>,

input=input@entry=0x5555555ee580 <(anonymous namespace)::tensor_arena+192>, filter=filter@entry=0x5555555ee5e0 <(anonymous namespace)::tensor_arena+288>,

bias=bias@entry=0x5555555ee640 <(anonymous namespace)::tensor_arena+384>, output=0x5555555ee6a0 <(anonymous namespace)::tensor_arena+480>, data=0x5555559beb00 <(anonymous namespace)::tensor_arena+3999296>)

#3 0x0000555555580928 in tflite::(anonymous namespace)::Prepare (context=0x5555555ebd58 <setup::static_interpreter+24>, node=0x5555559beb48 <(anonymous namespace)::tensor_arena+3999368>)

at tensorflow/lite/micro/kernels/fully_connected.cc:60

#4 0x00005555555ae1c8 in tflite::MicroGraph::PrepareSubgraphs (this=this@entry=0x5555555ebe18 <setup::static_interpreter+216>) at tensorflow/lite/micro/micro_graph.cc:106

#5 0x00005555555596a6 in tflite::MicroInterpreter::AllocateTensors (this=0x5555555ebd40 <setup::static_interpreter>) at tensorflow/lite/micro/micro_interpreter.cc:214

#6 0x0000555555557a0e in setup () at tensorflow/lite/micro/examples/retter1/main_functions.cc:78

#7 0x000055555555775a in main (argc=<optimized out>, argv=<optimized out>) at tensorflow/lite/micro/examples/retter1/main.cc:23

Thanks

Buisness Professionals

Jul 29, 2021, 2:44:47 PM

to Nat Jeffries, TensorFlow Lite, Sachin Joglekar

Thanks for the information Nat!

On Wed, 28 Jul 2021 at 17:42, Nat Jeffries <nj...@google.com> wrote:

It looks like you're running into https://github.com/tensorflow/tflite-micro/issues/216 since you're trying to use uint8 quantization.

Please convert your model to use signed int8 quantization to avoid this issue. We switched over since TFLite and the TFLite converter both now default to int8 quantization.

Thanks,

Nat
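For readers landing on this thread, here is a minimal sketch of what the int8 conversion could look like with the standard TFLite converter in Python. It is not taken from the thread: it assumes the original model is available as a SavedModel, and the function name `convert_to_int8`, the input shape `(1, 32)`, and the random calibration data are all hypothetical placeholders. Real calibration should use representative samples from the training or validation data.

```python
def convert_to_int8(saved_model_dir, input_shape=(1, 32), num_samples=100):
    """Return the bytes of a fully int8-quantized .tflite model.

    Hypothetical helper: `input_shape` and the random calibration data
    below are placeholders, not values from the thread.
    """
    # Third-party imports live inside the function so this file can be
    # imported on machines that do not have TensorFlow installed.
    import numpy as np
    import tensorflow as tf

    def representative_dataset():
        # Assumption: random float32 inputs stand in for real samples.
        rng = np.random.default_rng(0)
        for _ in range(num_samples):
            yield [rng.random(input_shape, dtype=np.float32)]

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    # Enable post-training quantization, calibrated on the dataset above.
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.representative_dataset = representative_dataset
    # Restrict the converter to int8 builtin kernels so conversion fails
    # loudly if an op cannot be quantized.
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    # Use signed int8 (not uint8) for the model's input and output tensors.
    converter.inference_input_type = tf.int8
    converter.inference_output_type = tf.int8
    return converter.convert()
```

The returned bytes can be written to a .tflite file, after which model.cc needs to be regenerated from that file (for example with `xxd -i`, as the hello world example does) so the firmware embeds the int8 model.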

Buisness Professionals

Jul 30, 2021, 3:56:48 PM

to Nat Jeffries, TensorFlow Lite, Sachin Joglekar

Hi Nat,

Thanks again for directing me to the github issue. I was able to work that out.

I wanted to know if TFLM has any support for cross-compilation on Android like we have for TFLite (https://www.tensorflow.org/lite/guide/build_cmake).

Thanks
