Element-wise add and multiply operations run on CPU instead of GPU and cause overhead


Simon King

Oct 19, 2021, 2:39:21 PM

to TensorFlow Lite

Hi TFLite team and friends,

I built a model based on tf.function, and its inference performance at runtime is very important for us. While profiling the model's inference time on GPU with TensorBoard, I found that the element-wise add and multiply operations are performed on the CPU instead of the GPU, which causes a remarkable overhead. Here is the code for the element-wise operations in the model:

```python
import tensorflow as tf

state = tf.gather(input_text, indices=indices, axis=1)
state = tf.math.add(state, byte_indices)
box = tf.gather(box, indices=state)
state = tf.math.add(state, byte_indices)
box = tf.gather(box, indices=state)
# more tf.gather and bit-wise operations
```
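For anyone trying to reproduce this, here is a minimal self-contained sketch of the same gather → add → gather pattern inside a `tf.function`. The tensor values, shapes, and the `table` lookup tensor are hypothetical stand-ins (the post does not give them), and only one gather/add cycle is shown. `tf.debugging.set_log_device_placement(True)` prints the device each op is assigned to, which can be compared against what the TensorBoard profile shows.

```python
import tensorflow as tf

# Log the device each op is assigned to (printed as the ops execute).
tf.debugging.set_log_device_placement(True)

# Hypothetical stand-ins for the tensors in the snippet above; the real
# shapes and dtypes are not given in the post, so these are assumptions.
input_text = tf.constant([[0, 1, 2, 3]], dtype=tf.int32)  # shape (1, 4)
indices = tf.constant([1, 3], dtype=tf.int32)
byte_indices = tf.constant([[0, 4]], dtype=tf.int32)
table = tf.range(100, 116, dtype=tf.int32)  # hypothetical lookup table

@tf.function
def gather_add_gather(input_text, indices, byte_indices, table):
    state = tf.gather(input_text, indices=indices, axis=1)  # [[1, 3]]
    state = tf.math.add(state, byte_indices)                # [[1, 7]]
    return tf.gather(table, indices=state)                  # [[101, 107]]

# Pin the computation to the first GPU when one is visible, else the CPU.
device = "/GPU:0" if tf.config.list_physical_devices("GPU") else "/CPU:0"
with tf.device(device):
    result = gather_add_gather(input_text, indices, byte_indices, table)

print(result.numpy())
```

Note that the placement log reports the device an op is assigned to; a GPU kernel that keeps its tensors in host memory can still show up as CPU work and host/device copies in the profiler.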

To run the model on GPU, I used:

```python
strategy = tf.distribute.MirroredStrategy()

with strategy.scope():
    profile_inference()
```

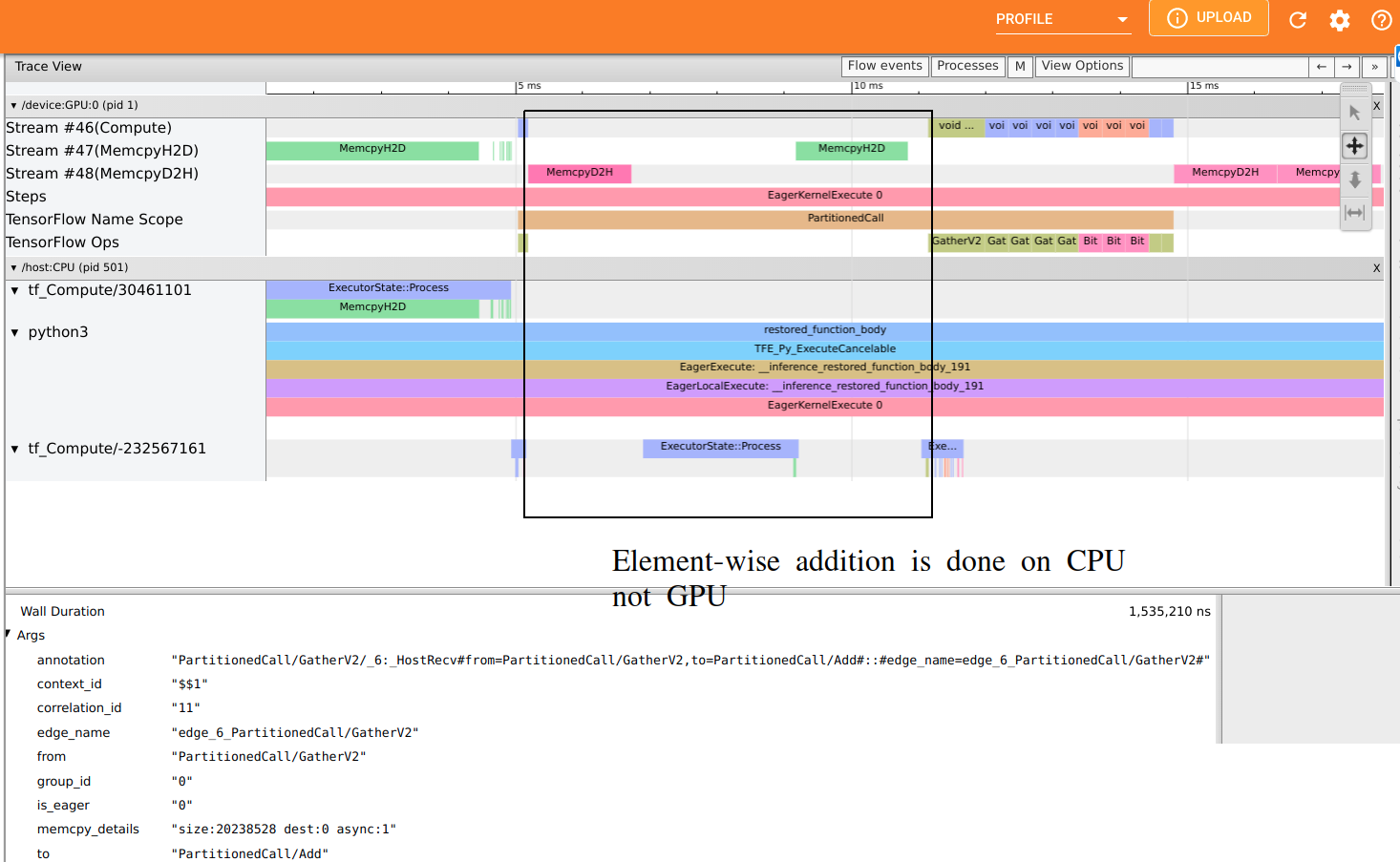

With Tensorboard, I got the profile UI as below. The state = tf.math.add(state, byte_indices) is done on CPU not GPU and it cause unexpected memory copy overhead.

Is there any way to force the element-wise operations, especially add and multiply, to run on the GPU?
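One thing worth checking, and this is an assumption since the post does not state the dtypes: if `state` and `byte_indices` are `int32`, TensorFlow registers its GPU kernel for int32 `Add` with its inputs and outputs in host memory, so the op effectively runs on the CPU even when it is placed on a GPU. The sketch below, with hypothetical operand values, computes the same add in int32 and in int64 (casting both operands first) so the two variants can be profiled side by side. `tf.gather` accepts int64 indices, so downstream gathers would still work; whether int64 actually removes the copies in this model is not confirmed here.

```python
import tensorflow as tf

# Hypothetical int32 operands standing in for `state` and `byte_indices`.
state = tf.constant([[1, 3]], dtype=tf.int32)
byte_indices = tf.constant([[0, 4]], dtype=tf.int32)

device = "/GPU:0" if tf.config.list_physical_devices("GPU") else "/CPU:0"
with tf.device(device):
    # int32 add: the variant suspected of running in host memory on GPU.
    out32 = tf.math.add(state, byte_indices)
    # The same add after casting both operands to int64.
    state64 = tf.cast(state, tf.int64)
    out64 = tf.math.add(state64, tf.cast(byte_indices, tf.int64))

print(out32.numpy(), out64.numpy())
```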

Simon King

Oct 19, 2021, 2:42:24 PM

to TensorFlow Lite, Simon King

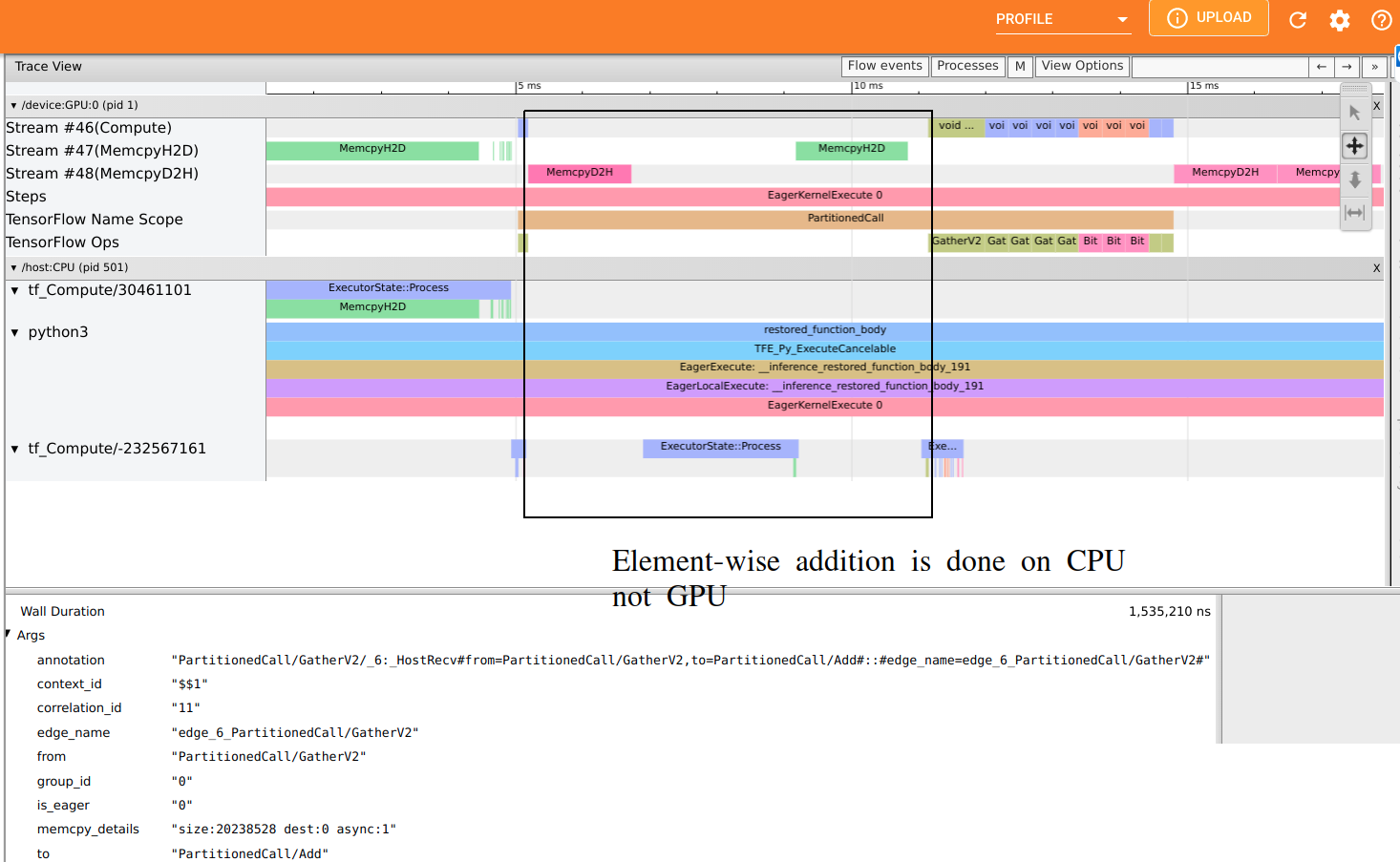

After commenting the element-wise add operation, I got another profile UI as follows:


As you can see, the overhead of the add operation disappears.

Sachin Joglekar

Oct 20, 2021, 12:50:45 PM

to TensorFlow Lite, Simon King

Hey Simon,

I don't think this issue pertains to TFLite, since it looks like you are trying to run a TensorFlow model on a desktop/server machine. Maybe file a GitHub issue?

Simon King

Oct 20, 2021, 3:29:02 PM

to TensorFlow Lite, Sachin Joglekar, Simon King

Right, I'm running the model on desktop.

Thanks for your reply. I will ask on GitHub.
