2.7 converter compatibility

Daniel Situnayake

model = Sequential()

model.add(Dense(16, activation='relu'))

model.add(Flatten())

model.add(Dense(classes, activation='softmax'))

Jean-michel DELORME

Hi Dan,

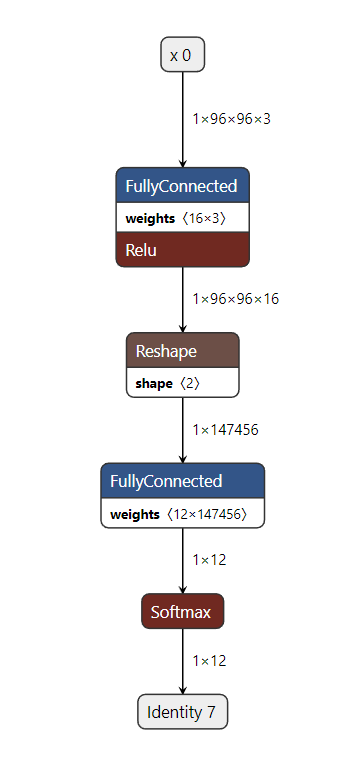

In your case, you can use a concrete function to fix the input shape.

As illustrated, the resulting graph is more efficient for TFLM “2.7”. Otherwise, you can always use the TOCO backend instead of the MLIR backend.

Br,

JM

import tensorflow as tf

model = tf.keras.Sequential()

model.add(tf.keras.Input(shape=(96,96,3)))

model.add(tf.keras.layers.Dense(16, activation='relu'))

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(12, activation='softmax'))

run_model = tf.function(lambda x: model(x))

concrete_func = run_model.get_concrete_function(
    tf.TensorSpec([1, 96, 96, 3], model.inputs[0].dtype))

converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_func])

tfl_model = converter.convert()

with open('sig_tfl_conv_2.tflite', "wb") as f:
    f.write(tfl_model)
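The TOCO fallback mentioned above could look roughly like the sketch below. It assumes the `experimental_new_converter` attribute that `tf.lite.TFLiteConverter` exposes in TF 2.x releases around 2.7 (later releases may ignore or drop it). The helper names `use_legacy_converter` and `convert_with_toco` are hypothetical and do not come from this thread.

```python
def use_legacy_converter(converter):
    """Ask a TFLiteConverter to use the legacy TOCO backend instead of MLIR.

    Assumption: the converter exposes `experimental_new_converter`, as
    tf.lite.TFLiteConverter does in TF 2.x releases around 2.7.
    """
    converter.experimental_new_converter = False
    return converter


def convert_with_toco(keras_model):
    """Convert a Keras model with the legacy backend (needs TensorFlow installed)."""
    import tensorflow as tf  # imported lazily so the helper above has no hard dependency

    converter = tf.lite.TFLiteConverter.from_keras_model(keras_model)
    return use_legacy_converter(converter).convert()
```

Calling `convert_with_toco(model)` on the model above would return the flatbuffer bytes, which can then be written to a `.tflite` file as in the snippet. Whether the legacy backend yields a simpler graph for a given model has to be checked case by case.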


Jean-Michel Delorme | TINA: 041 5105 | Tel: +33 4 76 58 51 05 | Mobile: +33 6 72 81 98 66

MDG/MCD | AI Solution – Senior SW designer


From: Daniel Situnayake <d...@edgeimpulse.com>

Sent: Tuesday, January 11, 2022 2:10 AM

To: SIG Micro <mi...@tensorflow.org>

Subject: 2.7 converter compatibility

Hi SIG Micro,

I'm upgrading our codebase to use TF2.7. For certain models, the converter in 2.7 outputs a highly mutated graph compared to earlier versions (we were previously using 2.4). For example, the following simple model with a 96x96 RGB input:

# input is 96x96x3

model = Sequential()

model.add(Dense(16, activation='relu'))

model.add(Flatten())

model.add(Dense(classes, activation='softmax'))

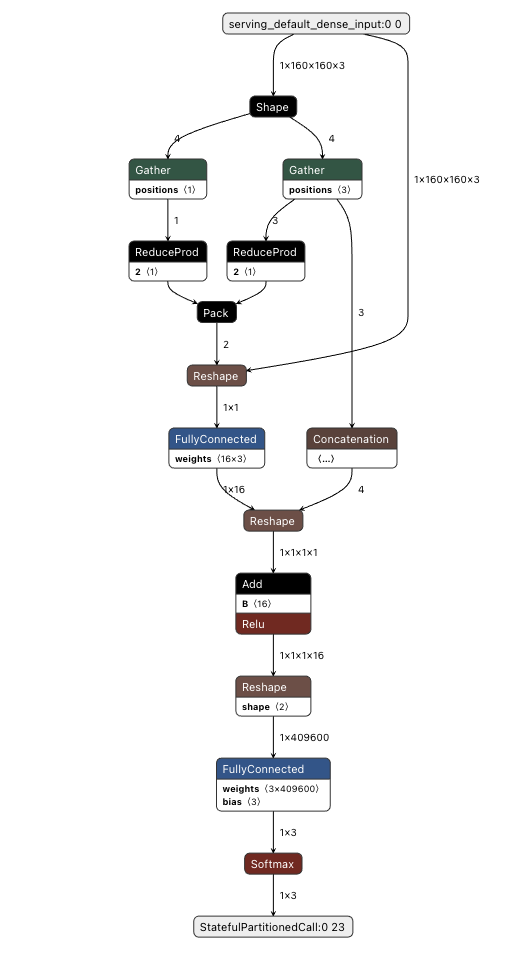

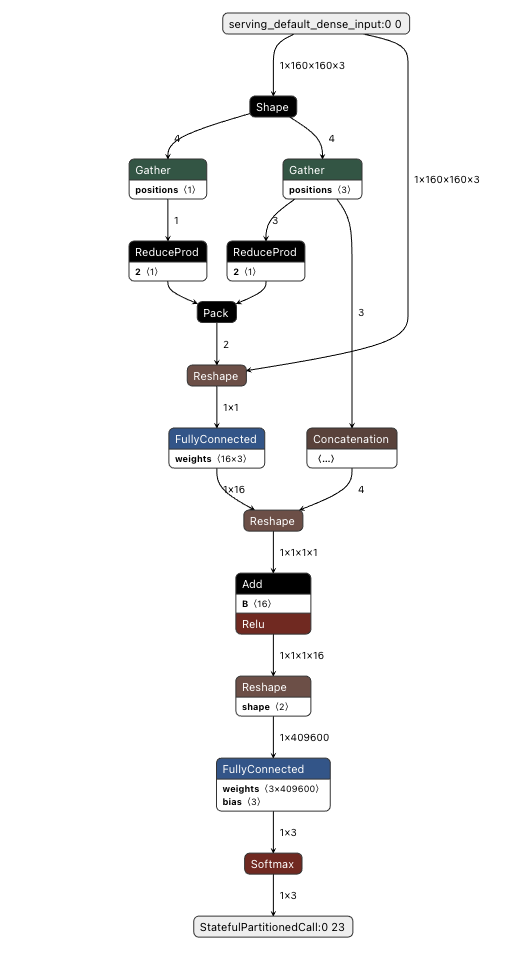

The resulting tflite graph is highly complex:

Some of the ops (such as ReduceProd) don't seem to be available in the latest TFLM; they are only in Lite.

This seems to happen whenever you follow a 2D tensor with more than one fully connected layer, even if you reshape/flatten down to a single dimension first.

Does anyone know if there is a way to force the converter to output a "simple" model that doesn't bring in these extra operators?

Warmly,

--

You received this message because you are subscribed to the Google Groups "SIG Micro" group.

To unsubscribe from this group and stop receiving emails from it, send an email to

micro+un...@tensorflow.org.

To view this discussion on the web visit

https://groups.google.com/a/tensorflow.org/d/msgid/micro/CAOu%3DFxatXnTt9jBOWzAp1B%2Bj7mXh78ooYBOPoGkVjoraK%3DPOCw%40mail.gmail.com.

ST Restricted

Daniel Situnayake


Jean-michel DELORME

Hi Dan,

Yes, I confirm: in my environment (TensorFlow 2.7.0, Python 3.7.9), with the code snippet and an input shape of 160x160, the output is the same (without the ReduceProd op). Using the concrete function seems to help the MLIR converter avoid generating the subgraph with the ReduceProd op, which is not needed here and is not supported in the current baseline of TFLM (although I have not really checked with the latest TFLM version).

However, you perhaps highlight a specific point: I have not yet found in the TF documentation (wiki or other) whether it is possible to specify constraints/options in the TFLiteConverter to produce a TFLite file that is more “compliant” with a TFLM runtime.
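In the absence of such a converter option, one possible workaround is to check the operator names of a converted model against an allow-list before deploying it. The sketch below is only an illustration: `ASSUMED_TFLM_OPS` is a small hypothetical subset, not the authoritative list of TFLM kernels, and the op names are assumed to have been read from the converted file by other means (for example a model viewer).

```python
# Illustrative subset only: NOT the authoritative list of TFLM kernels.
ASSUMED_TFLM_OPS = frozenset({
    "FULLY_CONNECTED",
    "RESHAPE",
    "SOFTMAX",
    "CONV_2D",
    "DEPTHWISE_CONV_2D",
})


def find_unsupported_ops(model_ops, supported_ops=ASSUMED_TFLM_OPS):
    """Return the op names used by the model that are missing from the allow-list.

    The result is de-duplicated and sorted so it is stable across runs.
    """
    return sorted(set(model_ops) - set(supported_ops))


# Hypothetical op list for a converted model that still contains ReduceProd.
example_ops = ["FULLY_CONNECTED", "REDUCE_PROD", "RESHAPE", "FULLY_CONNECTED", "SOFTMAX"]
missing = find_unsupported_ops(example_ops)
```

An empty result would suggest that the graph only uses ops from the allow-list; any name returned points at a subgraph worth inspecting before targeting TFLM.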

Warm regards,

JM


Daniel Situnayake

Daniel Situnayake

Chris Knorowski
