Audio Loudness Normalization

Lars Kiesow

Feb 27, 2018, 12:27:51 PM

to us...@opencast.org

Hi everyone

tl;dr: Use the following FFmpeg filter as part of your encoding

profiles for audio normalization in Opencast:

ffmpeg -i … -filter:a loudnorm=I=-23:LRA=1:dual_mono=true:tp=-1 …

For ETH, I had a look at including audio normalization into their

workflows to deal with fluctuations in their audio loudness (e.g. to

avoid having some very quiet recordings). Since 2014, Opencast has a

SoX integration for exactly this purpose which works quite fine:

https://docs.opencast.org/develop/admin/workflowoperationhandlers/normalizeaudio-woh/

Starting with a video recording, the normalization operation would

extract the recording's audio stream, have SoX analyze and normalize it

to a certain RMS dB value and then integrate the new audio stream again

into the original video container (there are a few more modes but that

is probably the most common use case).

While this works fine, current versions of FFmpeg make things quite a

bit easier by providing the `loudnorm` filter for EBU R128 loudness

normalization which can be used as part of any FFmpeg operation. Hence,

we can normalize the audio for example as part of generating the

distribution artifacts or the work files. Of course, you could also

still do it as a separate operation :)
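
As a rough sketch of what "part of any FFmpeg operation" can look like, the following Python snippet only assembles (it does not run) an encode command that carries the loudnorm filter from the tl;dr. The file names, the AAC codec choice and both helper names are illustrative assumptions, not taken from any Opencast encoding profile. The explicit `-ar 48000` is included because the FFmpeg documentation notes that loudnorm upsamples to 192 kHz in dynamic mode and recommends setting the output sample rate explicitly.

```python
import shlex


def loudnorm_filter(target_i=-23.0, lra=1.0, true_peak=-1.0, dual_mono=True):
    """Return a loudnorm filter string using the option names from the thread."""
    return (
        f"loudnorm=I={target_i:g}:LRA={lra:g}"
        f":dual_mono={'true' if dual_mono else 'false'}:tp={true_peak:g}"
    )


def encode_command(src, dst, audio_filter, sample_rate=48000):
    """Build (not run) an ffmpeg call that copies video and filters audio."""
    return [
        "ffmpeg", "-i", src,
        "-c:v", "copy",             # leave the video stream untouched
        "-filter:a", audio_filter,  # normalize audio while encoding
        "-c:a", "aac",              # assumed codec, pick what your profile uses
        "-ar", str(sample_rate),    # avoid ending up with 192 kHz output
        dst,
    ]


cmd = encode_command("in.mp4", "out.mp4", loudnorm_filter())
print(shlex.join(cmd))
```

Passing a different `target_i` (for example -16) is all it takes to try another target level with the same command.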

If you are now wondering what the hell EBU R128 is: EBU R128 is the name

of the European Broadcasting Union's recommendation for loudness

normalization and permitted maximum level of audio signals, an attempt

to avoid huge loudness fluctuations between different broadcasting

channels.

The most important thing relevant for Opencast is probably the

recommendation to have the audio normalized to a target level of -23.0

LUFS. There are more recommendations on which I based the filter

definition above. For details, read:

https://tech.ebu.ch/loudness

…and the actual R128 (which is quite short and easy to read):

https://tech.ebu.ch/docs/r/r128-2014.pdf

Less relevant to Opencast but still another interesting read when it

comes to audio normalization and EBU R128 is the following document. It

also outlines some reasoning behind the recommendation and possible

solutions for what can be done if broadcasters ignore the

recommendations to be the loudest out there:

https://tech.ebu.ch/docs/techreview/trev_2012-Q3_Loudness_van_Everdingen.pdf

Of course, it is always best if you need to make fewer adjustments

afterwards but can already get things right when recording the audio in

the first place. For that it is helpful to know the loudness levels of

your recordings, … which is what the `ebur128` FFmpeg filter can be used

for. Running a command like this will output all the interesting

numbers:

ffmpeg -nostats -i in.mp4 -filter:a ebur128=peak=true -f null -
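
If you want to collect these numbers automatically, the summary that the command above writes to stderr can be parsed with a few regular expressions. This is only a sketch: the sample text below is made up for illustration (it is not a measurement of a real recording), its layout is what I would expect from the filter's summary and should be checked against your FFmpeg version, and the function name is hypothetical.

```python
import re

# Made-up sample in the layout of the ebur128 summary block.
SAMPLE_SUMMARY = """\
[Parsed_ebur128_0 @ 0x0] Summary:

  Integrated loudness:
    I:         -27.4 LUFS
    Threshold: -38.1 LUFS

  Loudness range:
    LRA:         9.2 LU
    Threshold: -48.3 LUFS
    LRA low:   -33.0 LUFS
    LRA high:  -23.8 LUFS

  True peak:
    Peak:       -4.6 dBFS
"""


def parse_ebur128_summary(stderr_text):
    """Extract integrated loudness, loudness range and true peak."""
    number = r"(-?\d+(?:\.\d+)?)"
    patterns = {
        "integrated_lufs": rf"^\s*I:\s*{number} LUFS",
        "lra_lu": rf"^\s*LRA:\s*{number} LU\b",
        "true_peak_dbfs": rf"^\s*Peak:\s*{number} dBFS",
    }
    result = {}
    for key, pattern in patterns.items():
        matches = re.findall(pattern, stderr_text, flags=re.MULTILINE)
        if matches:
            # Use the last occurrence, which belongs to the final summary.
            result[key] = float(matches[-1])
    return result


print(parse_ebur128_summary(SAMPLE_SUMMARY))
```

In practice you would feed it the stderr captured from the ffmpeg call instead of `SAMPLE_SUMMARY`.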

Did anyone else look at audio normalization recently? Did I miss

anything important? Also, should we point to this in the normalization

workflow operation or even deprecate it?

I'm interested in your comments.

Best regards,

Lars

Stephen Marquard

Feb 27, 2018, 1:02:50 PM

to us...@opencast.org

Hi Lars,

Great, this is a good step forward, as using the ffmpeg filters will reduce the number of dependencies (sox) plus reduce workflow operations.

It would be great to include the ebur128 filter in the media analysis workflow operation by default, and add the loudnorm filter as a configurable workflow param for the videoeditor operation.

Assuming videoeditor is the right place to put it? The loudness should get adjusted based on the edited output, disregarding the parts of the video which have been cut.

We should definitely deprecate the sox WOHs once we've got the ffmpeg approach in the documentation and default workflows.

Regards

Stephen

From: Lars Kiesow <lki...@uos.de>

Sent: 27 February 2018 07:27:47 PM

To: us...@opencast.org

Subject: [OC Users] Audio Loudness Normalization

--

You received this message because you are subscribed to the Google Groups "Opencast Users" group.

To unsubscribe from this group and stop receiving emails from it, send an email to users+un...@opencast.org.

Disclaimer - University of Cape Town This email is subject to UCT policies and email disclaimer published on our website at http://www.uct.ac.za/main/email-disclaimer or obtainable from +27 21 650 9111. If this email is not related to the business of UCT, it

is sent by the sender in an individual capacity. Please report security incidents or abuse via https://csirt.uct.ac.za/page/report-an-incident.php.

Jan Koppe

Feb 27, 2018, 2:47:34 PM

to us...@opencast.org

Hello Lars,

your idea is not wrong, but I would not want to use this normalization

standard in this setting. I think that it would actually be harmful. EBU

R128 is intended for normalizing levels between different broadcasting

programs to avoid huge jumps in audio levels, or having some commercial

be "louder" (literally and figuratively) for some stupid reason.

Considering the setting in which the recordings in a typical Opencast

system will be played back, the reasons for EBU R128 are not really

valid anymore - Opencast administrators are pretty much in control of

the entire "program" and can (and most definitely should!!) normalize

audio levels between recordings. The drawback of EBU R128 is that it

still needs to allow for highly dynamic signals and therefore leaves a

lot of headroom in the signal to provide enough dynamic range. In the

lecture setting, overly high dynamic range is actually not something I

as a viewer would want. I always want to hear the speaker loud and

clear, preferably at a very constant volume level. Using EBU R128 would

sort of achieve that, minus being loud.

That's the main issue imho: The recordings will have the same level, but

will be a good amount quieter than they could be. Of course, you can

easily turn up the volume of your TV. But as a reality check: Tell that

to the student watching the lecture on a crappy little netbook in their

dorm. They can't just make it louder, if we're already throwing away

10-15 dB of maximum signal range. This can be a real issue.

What I would propose (as we've been doing at WWU for some time now with

pretty good results) is using this chain:

highpass=f=120,acompressor=threshold=0.3:makeup=3:release=50:attack=5:knee=4:ratio=10:detection=peak,alimiter=limit=0.95

This chain is equivalent to what I would set up as a sound engineer for

a conference event. It provides simple filtering of unwanted

rumbling-noises and a rather quick and hard dynamic compression, with

some final limiting to prevent clipping of the signal. This will result

in very loud, consistent levels that can be understood clearly even on

quiet playback devices.
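
To make the numbers in the chain above a little more tangible: as far as I can tell from the FFmpeg documentation, `acompressor` and `alimiter` take threshold, makeup and limit as linear amplitude factors, so it helps to convert them to dB. The snippet below does that and applies a simplified hard-knee compressor curve. Note that this curve is a textbook model used here as an assumption; it ignores the soft knee (`knee=4`) and the attack/release timing, so it is not FFmpeg's actual implementation.

```python
import math


def linear_to_db(amplitude):
    """Convert a linear amplitude factor to decibels."""
    return 20.0 * math.log10(amplitude)


def compressed_level_db(input_db, threshold_db, ratio):
    """Hard-knee static curve: the part above the threshold is divided by ratio."""
    if input_db <= threshold_db:
        return input_db
    return threshold_db + (input_db - threshold_db) / ratio


threshold_db = linear_to_db(0.3)   # about -10.5 dBFS
makeup_db = linear_to_db(3)        # about +9.5 dB
limit_db = linear_to_db(0.95)      # about -0.4 dBFS

for level in (-30.0, -10.0, -3.0, 0.0):
    compressed = compressed_level_db(level, threshold_db, ratio=10)
    print(f"{level:6.1f} dBFS in -> {compressed + makeup_db:6.2f} dBFS "
          f"after compression and makeup (limit {limit_db:.2f} dBFS)")
```

In this simplified model, everything above roughly -10.5 dBFS is squeezed into about 1 dB, and the makeup gain then lifts a full-scale input to just above 0 dBFS, which is where the final limiter comes in.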

Also: Yes, including this filterchain in your ffmpeg encoding steps is

the best way to do it imho. We're confident enough with this chain that

we apply it directly to our source recording on the fly (with pyCA)

before ingesting to Opencast. I think we tried using the SoX WOH at

first, but the results were pretty bad.

Regards,

Jan

--

Jan Koppe

eLectures / LearnWeb

Westfälische Wilhelms-Universität

Fliednerstraße 21 - Room 143c

48149 Münster/Westf. - Germany

E-mail: jan....@wwu.de

Sven Stauber

Feb 27, 2018, 3:47:16 PM

to Opencast Users

Hi all

As a general comment, please don't be too focused on lecture recordings as there is other audio-visual content, too ;-)

@Jan: How do you handle the different volumes of spoken language of different persons? The compressor won't address the issue of two different persons ("whispering" vs "shouting") in different recordings when comparing the "loudness" of those recordings, will it? That would require a guy adjusting the audio input level (or mic volume) during the recording (in addition to your filters), wouldn't it?

Best

Sven

Jan Koppe

Feb 28, 2018, 4:45:15 AM

to us...@opencast.org

On 02/27/2018 09:47 PM, Sven Stauber wrote:

> Hi all

>

> As a general comment, please don't be too focused on lecture

> recordings as there is other audio-visual content, too ;-)

good point.

> @Jan: How do you handle the different volumes of spoken language of

> different persons? The compressor won't address the issue of two

> different persons ("whispering" vs "shouting") in different recordings

> when comparing the "loudness" of those recordings, will it? That would

> require a guy adjusting the audio input level (or mic volume) during

> the recording (in addition to your filters), wouldn't it?

No, that's exactly what I'm using the compressor for. It has a very high

ratio with low threshold and moderate attack and release speed, which

pretty much does the job of a person adjusting a "fader". Different

speakers might be a tiny bit different in compression rate, but not too

much. You will maybe have to adjust the threshold and makeup-gain to

your situation, but if you're getting a somewhat sensible level going

into your capture device, it should work pretty well already.
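
If you prefer to think about threshold and makeup gain in dB when tuning this, a small helper can convert to the linear factors that `acompressor` appears to expect and rebuild the chain. The function name and the dB-based parameters are my own assumptions for illustration; the remaining values are simply copied from the chain posted earlier in the thread.

```python
def db_to_linear(db):
    """Convert decibels to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)


def speech_chain(threshold_db=-10.5, makeup_db=9.5, highpass_hz=120, limit=0.95):
    """Rebuild the highpass/compressor/limiter chain with dB-based settings."""
    threshold = db_to_linear(threshold_db)
    makeup = db_to_linear(makeup_db)
    return (
        f"highpass=f={highpass_hz},"
        f"acompressor=threshold={threshold:.3f}:makeup={makeup:.3f}"
        ":release=50:attack=5:knee=4:ratio=10:detection=peak,"
        f"alimiter=limit={limit}"
    )


# Defaults land close to the original threshold=0.3 and makeup=3.
print(speech_chain())
# A lower threshold with less makeup gain, e.g. for hotter input levels.
print(speech_chain(threshold_db=-20, makeup_db=6))
```

The resulting string can be passed to `-filter:a` in the same way as the original chain.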

Regards,

Jan

Lars Kiesow

Mar 1, 2018, 1:28:11 PM

to us...@opencast.org

Hi everyone,

sorry for the late reply! Let me try to answer your questions and get

rid of some misconceptions about EBU R128 as well as FFmpeg's loudnorm

filter.

First of all, the `loudnorm` filter chain I provided will do a dynamic

loudness normalization, possibly including audio compression and true

peak limitation if required. It should hence achieve the desired effect

that if a second speaker is louder than the first, the loudness of the

second one will be adjusted to appear equally loud. Of course, this

effect is configurable.

This may become a bit clearer when you have a look at my suggested

configuration:

I=-23

Set the overall target loudness to -23 LUFS

LRA=1

Set the loudness range to 1 LU

tp=-1

Set the true peak limiter to a target of -1 dBTP to avoid clipping.

This should produce a similar effect as a dedicated audio compression

filter with the benefit of having a similar output loudness regardless

of the input. Though you have certainly more control over a dedicated

compressor which, however, also means that you probably need to know

more about your content.

Note that the algorithm also includes ways of dealing with silence. Not

only as a fixed audio gate, but also as a dynamic gate measuring

relative loudness to previous content. That is also why small silent

parts in an audio stream should not heavily affect the normalization.

For details of how the algorithm works, take a look at this post by the

filter's author:

http://k.ylo.ph/2016/04/04/loudnorm.html

…as well as EBU TECH 3341 which describes in more detail how this

recommended audio normalization should work:

https://tech.ebu.ch/docs/tech/tech3341.pdf

What is important to know is that the filter configuration I provided

is the single-pass or `live` version. A two-pass normalization is

possible and should yield more accurate results.

An obvious scenario where a single-pass version may yield unwanted

results is a recording starting with a very silent signal (e.g.

recording with overhead microphone and some students mumbling in the

room). Since the normalization filter only knows about the signal at

the beginning, it would normalize the mumbling to -23 LUFS already and

not identify this as silence as the two-pass filter would due to the

huge relative loudness difference compared to the following signal.

Still, if we cut material or apply the normalization to the material

after the editor this should be no big problem for resulting Opencast

recordings. And having a single-pass does make things a lot easier :)

That said, implementing a two-pass version should also be relatively

easy if we store the first-pass loudness measurements as metadata in

the media package.
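
A two-pass run could be sketched roughly like this: the first pass runs loudnorm with `print_format=json` (for example with `-f null -` as output), and the second pass feeds the measured values back into the filter. The option names (`measured_I`, `measured_LRA`, `measured_TP`, `measured_thresh`, `offset`) are the ones listed in the FFmpeg loudnorm documentation. The helper names are hypothetical and the sample stderr text is invented for illustration, not taken from a real recording.

```python
import json

# Invented example of what the first pass prints to stderr.
SAMPLE_FIRST_PASS_STDERR = """\
[Parsed_loudnorm_0 @ 0x0]
{
    "input_i" : "-27.61",
    "input_tp" : "-4.47",
    "input_lra" : "18.06",
    "input_thresh" : "-39.20",
    "output_i" : "-23.05",
    "output_tp" : "-6.20",
    "output_lra" : "3.10",
    "output_thresh" : "-33.10",
    "normalization_type" : "dynamic",
    "target_offset" : "0.05"
}
"""


def base_options(target_i=-23.0, lra=1.0, true_peak=-1.0):
    """Target settings shared by both passes (same values as in the thread)."""
    return f"I={target_i:g}:LRA={lra:g}:dual_mono=true:tp={true_peak:g}"


def first_pass_filter(**targets):
    """Filter for the analysis pass; measurements are printed as JSON."""
    return f"loudnorm={base_options(**targets)}:print_format=json"


def parse_measurements(stderr_text):
    """Parse the last JSON object that loudnorm printed to stderr."""
    start = stderr_text.rindex("{")
    end = stderr_text.rindex("}") + 1
    return json.loads(stderr_text[start:end])


def second_pass_filter(measured, **targets):
    """Filter for the actual encode, fed with the first-pass measurements."""
    return (
        f"loudnorm={base_options(**targets)}"
        f":measured_I={measured['input_i']}"
        f":measured_LRA={measured['input_lra']}"
        f":measured_TP={measured['input_tp']}"
        f":measured_thresh={measured['input_thresh']}"
        f":offset={measured['target_offset']}"
    )


measured = parse_measurements(SAMPLE_FIRST_PASS_STDERR)
print(first_pass_filter())
print(second_pass_filter(measured))
```

The parsed dictionary is also the kind of data one could attach to a media package between the two passes.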

Maybe, I can generate a few audio samples to demonstrate these effects.

That would probably be helpful anyway if we create a proper

documentation from this thread.

Talking about the concern about „crappy devices“ and insufficient

loudness when you normalize content to -23 LUFS as recommended by EBU

R128, I found an AES recommendation for streaming content which

addresses exactly this concern (and which references EBU R128).

The AES recommendation still strongly discourages being as loud as

possible before clipping (applying strong compression and peak

limiters). Something I think is a very good idea because even if you

can usually get away with a very low dynamic range on Opencast

recordings, the students with crappy devices will probably still be

also playing things with a higher dynamic range which means that they

will need to make loudness adjustments if they jump between e.g.

Opencast and YouTube. That is something I always find very annoying.

In fact, the recommendation (AES TD1004.1.15-10) still outlines EBU

R128 as an optimal solution if only the loudness of mobile devices in

the EU weren't limited to prevent hearing loss… a very good idea, but

it also introduces a disadvantage ;)

In the end, to compensate for that, their recommendation is to

normalize all streams in the range of -16 LUFS to -20 LUFS to limit

loudness jumps when switching between content to an acceptable maximum.

By selecting a value in that range, you can either choose to be louder,

or have more room for a higher dynamic range, but you are not too loud or too

quiet.
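
The trade-off between a louder target and headroom is simple arithmetic, since a gain change of 1 dB shifts the loudness by 1 LU. The sketch below uses a hypothetical recording (the -27 LUFS and -6 dBTP figures are invented) and checks for a few targets whether a plain gain change would push the true peak above a -1 dBTP ceiling, in which case compression or limiting has to do the rest. The function names are my own.

```python
def static_gain_db(measured_lufs, target_lufs):
    """Gain needed to move the integrated loudness to the target."""
    return target_lufs - measured_lufs


def peak_after_gain(measured_peak_dbtp, gain_db):
    """True peak after applying a plain gain change."""
    return measured_peak_dbtp + gain_db


def needs_limiting(measured_lufs, measured_peak_dbtp, target_lufs,
                   ceiling_dbtp=-1.0):
    """True if plain gain alone would exceed the true peak ceiling."""
    gain = static_gain_db(measured_lufs, target_lufs)
    return peak_after_gain(measured_peak_dbtp, gain) > ceiling_dbtp


# Hypothetical recording: -27 LUFS integrated, true peak at -6 dBTP.
for target in (-23.0, -20.0, -16.0):
    gain = static_gain_db(-27.0, target)
    limited = needs_limiting(-27.0, -6.0, target)
    print(f"target {target:g} LUFS: gain {gain:+g} dB, needs limiting: {limited}")
```

For this made-up recording, -23 LUFS is reachable with gain alone, while -20 and -16 LUFS require reducing the peaks first.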

For more details on the recommendations as well as a background of why

these recommendations were chosen, have a look at the (relatively

short) AES document:

http://www.aes.org/technical/documents/AESTD1004_1_15_10.pdf

One additional interesting recommendation in this document we could

probably implement is to include the loudness levels into the stream's

meta-data to allow devices to automatically adjust their volume

accordingly. If that were properly implemented by everyone (I don't

think it is implemented anywhere) people could easily switch between

-23 LUFS broadcasting content and -16 LUFS streaming content without

having an annoying jump in loudness. Maybe, we could set a good example.

When reading the AES recommendations, I was also wondering if we should

select a value like -20 LUFS for normalization in Opencast. That would

make us compliant with the AES recommendations while switching to any

broadcasting channel on the same device would still be a tolerable

difference in loudness. Though from the content, we should certainly be

able to use -16 LUFS. As Jan pointed out, we do not need (probably not

even want) a high dynamic range.

Best regards,

Lars

sorry for the late reply! Let me try to answer your questions and get

rid of some misconceptions about EBU R128 as well as FFmpeg's loudnorm

filter.

First of all, the `loudnorm` filter chain I provided will do a dynamic

loudness normalization, possibly including audio compression and true

peak limitation if required. It should hence achieve the desired effect

that if a second speaker is louder than the first, the loudness of the

second one will be adjusted to appear equally loud. Of course, this

effect is configurable.

This may become a bit more clear when you have a look at my suggested

configuration:

I=-23

Set the overall target loudness to -23 LUFS

LRA=1

Set the loudness range to 1 LU

tp=-1

Set the true peak limiter to a target of -1 dBFS to avoid clipping.
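
If the filter definition is generated by a script rather than typed into an encoding profile, it could be assembled as in the following Python sketch. The helper names are hypothetical; the option names (I, LRA, tp, dual_mono) and default values are the ones used in the filter suggested in this thread.

```python
def loudnorm_filter(i: float = -23.0, lra: float = 1.0,
                    tp: float = -1.0, dual_mono: bool = True) -> str:
    """Build a single-pass loudnorm filter definition from the target values."""
    parts = [
        f"I={i:g}",                                      # integrated loudness target (LUFS)
        f"LRA={lra:g}",                                  # loudness range target (LU)
        f"dual_mono={'true' if dual_mono else 'false'}", # treat mono input as dual mono
        f"tp={tp:g}",                                    # maximum true peak
    ]
    return "loudnorm=" + ":".join(parts)


def ffmpeg_args(src: str, dst: str) -> list:
    """Argument list for an ffmpeg call that applies the filter to the audio stream."""
    return ["ffmpeg", "-i", src, "-filter:a", loudnorm_filter(), dst]


if __name__ == "__main__":
    print(loudnorm_filter())
```

With the defaults this produces the same filter string as the one at the top of the thread; changing the target, for example to -16 LUFS, only requires passing i=-16.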

This should produce a similar effect as a dedicated audio compression

filter with the benefit of having a similar output loudness regardless

of the input. Though you certainly have more control with a dedicated

compressor which, however, also means that you probably need to know

more about your content.

Note that the algorithm also includes ways of dealing with silence. Not

only as a fixed audio gate, but also as a dynamic gate measuring

relative loudness to previous content. That is also why small silent

parts in an audio stream should not heavily affect the normalization.
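
To illustrate the two gates, here is a simplified Python sketch of a gated loudness measurement. It assumes, as I understand EBU Tech 3341 and ITU-R BS.1770, an absolute gate at -70 LUFS and a relative gate 10 LU below the loudness of everything that passed the absolute gate. The per-block loudness values are taken as given (computing them from samples is left out), and this is not the code FFmpeg actually runs.

```python
import math

ABSOLUTE_GATE_LUFS = -70.0  # fixed gate: blocks below this are always ignored
RELATIVE_GATE_LU = -10.0    # dynamic gate, relative to the absolute-gated loudness


def mean_loudness(blocks):
    """Average block loudness values (in LUFS) in the power domain."""
    power = sum(10.0 ** (value / 10.0) for value in blocks) / len(blocks)
    return 10.0 * math.log10(power)


def integrated_loudness(blocks):
    """Gated loudness over a list of per-block loudness values."""
    loud = [value for value in blocks if value > ABSOLUTE_GATE_LUFS]
    if not loud:
        return float("-inf")  # nothing but silence
    relative_threshold = mean_loudness(loud) + RELATIVE_GATE_LU
    gated = [value for value in loud if value > relative_threshold]
    return mean_loudness(gated)


if __name__ == "__main__":
    # Two speech blocks and one much quieter block: the quiet one is gated out.
    print(integrated_loudness([-20.0, -20.0, -40.0]))
```

In the usage example the -40 LUFS block falls more than 10 LU below the rest and is dropped, so quiet passages do not drag the measured loudness down.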

For details of how the algorithm works, take a look at this post by the

filter's author:

http://k.ylo.ph/2016/04/04/loudnorm.html

…as well as EBU TECH 3341 which describes in more detail how this

recommended audio normalization should work:

https://tech.ebu.ch/docs/tech/tech3341.pdf

What is important to know is that the filter configuration I provided

is the single-pass or `live` version. A two-pass normalization is

possible and should yield more accurate results.

An obvious scenario where a single-pass version may yield unwanted

results is a recording starting with a very silent signal (e.g.

recording with overhead microphone and some students mumbling in the

room). Since the normalization filter only knows about the signal at

the beginning, it would normalize the mumbling to -23 LUFS already and

not identify this as silence as the two-pass filter would due to the

huge relative loudness difference compared to the following signal.

Still, if we cut material or apply the normalization to the material

after the editor this should be no big problem for resulting Opencast

recordings. And having a single-pass does make things a lot easier :)

That said, implementing a two-pass version, if we integrate the metadata

into the media package, should also be

relatively easy.

Maybe I can generate a few audio samples to demonstrate these effects.

That would probably be helpful anyway if we create proper

documentation from this thread.

Talking about the concern about „crappy devices“ and insufficient

loudness when you normalize content to -23 LUFS as recommended by EBU

R128, I found an AES recommendation for streaming content which

addresses exactly this concern (and which references EBU R128).

The AES recommendation still strongly discourages being as loud as

possible before clipping (applying strong compression and peak

limiters). Something I think is a very good idea because even if you

can usually get away with a very low dynamic range on Opencast

recordings, the students with crappy devices will probably also be

playing things with a higher dynamic range which means that they

will need to make loudness adjustments if they jump between e.g.

Opencast and YouTube. That is something I always find very annoying.

In fact, the recommendation (AES TD1004.1.15-10) still outlines EBU

R128 as an optimal solution if only the loudness of mobile devices in

the EU weren't limited to prevent hearing loss… a very good idea, but

it also introduces a disadvantage ;)

In the end, to compensate for that, their recommendation is to

normalize all streams in the range of -16 LUFS to -20 LUFS to limit

loudness jumps when switching between content to an acceptable maximum.

Oliver Müller

Mar 7, 2018, 4:42:25 AM3/7/18

to Opencast Users

Hi Lars,

I ran some tests with this kind of audio normalization some time ago and I think it is a good option to include in Opencast. What I found is that it seems important to use a two-pass approach to get the best results (see also http://k.ylo.ph/2016/04/04/loudnorm.html). That means in the first pass you supply your target loudness values to ffmpeg, e.g.:

ffmpeg -i in.wav -af loudnorm=I=-23:TP=-1.5:LRA=11 -ar 48k out.wav

The first ffmpeg run is for measurement. As output (e.g. in JSON format) you get the measured values for I, TP, LRA and some other parameters of the input file. In a second ffmpeg run you have to pass the measured values from the first run as input for some parameters (measured_I, measured_LRA, measured_TP, measured_thresh, offset):

ffmpeg -i in.wav -af loudnorm=I=-23:TP=-1.5:LRA=11:measured_I=-27.61:measured_LRA=18.06:measured_TP=-4.47:measured_thresh=-39.20:offset=0.58 -ar 48k out.wav

I think that means for Opencast that, to support this, you need a new or modified workflow operation handler that passes the measured values from the first run to the parameters of the second ffmpeg run. Another important thing is that you always have to specify the sampling rate in the ffmpeg command (otherwise it is too high, because the loudnorm filter upsamples to a rate higher than 48 kHz, which causes problems in some browsers).
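
A handler like that would mostly have to map the first-pass measurements onto the second-pass parameters. The following Python sketch shows one way this could look. The function names are hypothetical, and the keys input_i, input_lra, input_tp, input_thresh and target_offset are the names the loudnorm report uses as far as I know, so check them against your FFmpeg version.

```python
def second_pass_filter(measured: dict, i: float = -23.0,
                       tp: float = -1.5, lra: float = 11.0) -> str:
    """Build the second-pass loudnorm filter from first-pass measurements.

    `measured` is the first-pass report as a dict of strings; the values are
    passed through unchanged to avoid rounding differences.
    """
    return (
        f"loudnorm=I={i:g}:TP={tp:g}:LRA={lra:g}"
        f":measured_I={measured['input_i']}"
        f":measured_LRA={measured['input_lra']}"
        f":measured_TP={measured['input_tp']}"
        f":measured_thresh={measured['input_thresh']}"
        f":offset={measured['target_offset']}"
    )


def second_pass_args(src: str, dst: str, measured: dict,
                     sample_rate: str = "48k") -> list:
    """ffmpeg argument list; the sample rate is set explicitly to avoid upsampled output."""
    return ["ffmpeg", "-i", src, "-af", second_pass_filter(measured),
            "-ar", sample_rate, dst]


if __name__ == "__main__":
    example = {"input_i": "-27.61", "input_lra": "18.06", "input_tp": "-4.47",
               "input_thresh": "-39.20", "target_offset": "0.58"}
    print(" ".join(second_pass_args("in.wav", "out.wav", example)))
```

Run with the example values from this mail, the sketch prints the same second-pass command as quoted above.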

Best Regards

Oliver

Lars Kiesow

Mar 7, 2018, 5:19:57 AM3/7/18

to us...@opencast.org

Hi Oliver,

good point, yes, the filter upsamples to 192 kHz if I remember

correctly. That is something you likely want to downsample to a reasonable

rate again.

Adding two-pass encoding should not be too hard since the filter can

just output the values of the first pass as JSON which then is very

easy to parse: loudnorm=…:print_format=json
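
To give an idea of how little parsing is needed, here is a minimal Python sketch. It assumes that ffmpeg writes the report as a flat JSON object at the end of its stderr output, after its usual log lines; the function names and the target values in the measurement command are only examples.

```python
import json


def first_pass_args(src: str) -> list:
    """ffmpeg arguments for a measurement-only run (audio is decoded, nothing is written)."""
    return ["ffmpeg", "-i", src, "-af",
            "loudnorm=I=-23:TP=-1.5:LRA=11:print_format=json",
            "-f", "null", "-"]


def parse_loudnorm_report(stderr_text: str) -> dict:
    """Extract the JSON report from ffmpeg's stderr output.

    The report is assumed to be the last {...} block in the text and to
    contain no nested objects.
    """
    start = stderr_text.rfind("{")
    end = stderr_text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no loudnorm JSON report found")
    return json.loads(stderr_text[start:end + 1])


if __name__ == "__main__":
    sample = ('[Parsed_loudnorm_0 @ 0x0] \n{\n'
              '\t"input_i" : "-27.61",\n'
              '\t"target_offset" : "0.58"\n}\n')
    print(parse_loudnorm_report(sample))
```

In a real workflow the text would come from something like subprocess.run(first_pass_args(path), capture_output=True, text=True).stderr, and the resulting dictionary would then feed the second pass.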

Apart from the comments on the page you linked about the loudnorm

filter which I read and incidentally linked before (please read

through my second mail as well ;), did you run actual tests with

lecture recordings as input material to compare single-pass vs two-pass

normalization?

I have only run very few so far. The most problematic thing I noticed

about single-pass normalization was the raised volume in quiet parts at

the beginning of a recording. Though that would usually be cut away in

any case.

Best regards,

Lars

Oliver Müller

Mar 7, 2018, 6:27:02 AM3/7/18

to Opencast Users

Hi Lars,

>The most problematic thing I noticed

>about single-pass normalization was the raised volume in quiet parts at

>the beginning of a recording

Yes, that is the same problem I noticed (and the main reason why we would prefer two-pass normalization). In addition, two-pass normalization gives results that are (at least a little bit) better than those of single-pass normalization.

Best Regards

Oliver

Jeremias Lubberger

Mar 20, 2018, 5:22:49 AM3/20/18

to Opencast Users

Hi All,

sorry I'm a bit late to the party, but I just wanted to add another small piece of information in case it is relevant for someone.

Loudness manipulation in FFmpeg can not only be done with the "volume" and "loudnorm" filters, there is also a "dynaudnorm" filter. It's something between simple volume manipulation and dynamic range compression: The volume is changed in the same way as with the "volume" filter, but this is done on small chunks (<8s) of the audio at a time.

I know this sounds kinda odd at first, but it really works quite well. There are many parameters which handle possible problems; I think the documentation is quite helpful here:

https://ffmpeg.org/ffmpeg-filters.html#dynaudnorm

Of course, this is not possible in real-time, but you also don't need two passes. In my tests it works really well when used in post-processing. You can also add "classic" dynamic range compression to it as well, and it can also take care of DC offset problems (we have that in some settings).

The "expected problems", hectic gain changes and excessive boosting of silent parts, are taken care of by analysing a bigger number of frames at a time and smoothing the gain changes between them, as well as setting a maximum applicable gain to prevent boosting background noise to full volume.
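
The idea can be shown with a strongly simplified Python sketch: one gain per frame, capped at a maximum, then smoothed over neighbouring frames with a Gaussian window. This is not FFmpeg's implementation; the function names, the window handling and the default values are assumptions made for illustration only.

```python
import math


def frame_gains(frame_peaks, target_peak=0.95, max_gain=10.0):
    """Gain per frame that would bring its peak to target_peak, capped at max_gain."""
    gains = []
    for peak in frame_peaks:
        wanted = target_peak / peak if peak > 0 else max_gain
        gains.append(min(wanted, max_gain))  # the cap keeps near-silence from being boosted fully
    return gains


def gaussian_smooth(values, size=5):
    """Smooth values with a normalized Gaussian window, repeating the edge values."""
    half = size // 2
    sigma = max(half / 2.0, 1e-9)
    offsets = range(-half, half + 1)
    weights = [math.exp(-0.5 * (k / sigma) ** 2) for k in offsets]
    total = sum(weights)
    smoothed = []
    for i in range(len(values)):
        acc = 0.0
        for k, weight in zip(offsets, weights):
            j = min(max(i + k, 0), len(values) - 1)
            acc += weight * values[j]
        smoothed.append(acc / total)
    return smoothed


if __name__ == "__main__":
    peaks = [0.9, 0.8, 0.001, 0.2, 0.25, 0.9]  # one nearly silent frame, two quiet ones
    print(gaussian_smooth(frame_gains(peaks), size=3))
```

Without the cap, the nearly silent frame would ask for a gain of 950; with it, the gain stays at 10 and the smoothing spreads the change over the neighbouring frames instead of jumping.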

Maybe this is something worth considering!

Regards

Jeremias

Jan Koppe

Mar 20, 2018, 7:16:52 AM3/20/18

to us...@opencast.org

Hello Jeremias,

thanks for the tip - that sounds interesting, and is essentially what

I'm trying to do by abusing the regular compressor. That's what

happens when you try to shoot for your "usual" solution (as in what I

usually do while mixing, etc.) and don't take a look left and right. :)

Will need to try that one!

Maybe we could do some collaboration and each provide a few unprocessed

audio samples to compare all the different methods (ebu128, my simple

comp, dynaudnorm) and publish them for future reference? I'm sure this

will be interesting to many more organisations, especially newcomers

with little experience.

Maybe a do's/dont's or tips section with ffmpeg examples could be

helpful in the github.com/opencast/pyca wiki. This would be out of scope

for the regular opencast documentation I think.

Regards,

Jan

Lars Kiesow

Mar 20, 2018, 10:47:17 AM3/20/18

to us...@opencast.org

> Maybe we could do some collaboration and each provide a few

> unprocessed audio samples to compare all the different methods

Sounds like a plan :-D

Lars

Maximiliano Lira Del Canto

Oct 23, 2018, 10:52:41 AM10/23/18

to Opencast Users

Hi Opencasters:

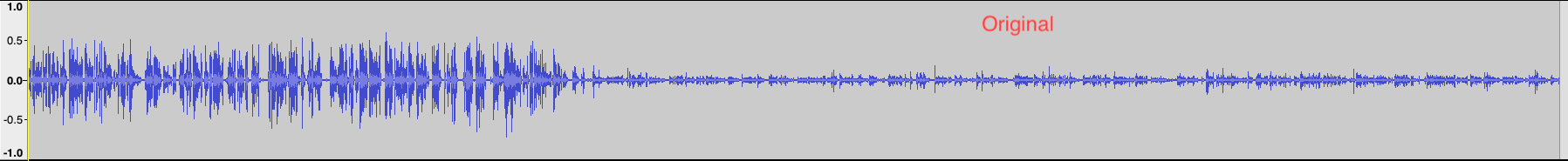

I have just started adding this feature here, and after some research and tests I have some examples to show:

This is a 10-minute sample of a presentation held here with two speakers: the first speaks very loudly and clearly, while the second speaks very quietly.

Here is how it looks after passing through the filters discussed here (with their default settings):

With loudnorm, all voices get louder on the same scale; the voice of the first speaker, which was already fine, is now even louder.

With the two-pass loudnorm strategy, the result is even louder, but the first voice sounds smoother. For the quiet voice, the background noise is amplified as well.

Finally, with dynaudnorm, the first speaker stays at almost the same volume, while the quiet speaker gets much louder, about as loud as the first one:

Quality aside (this is purely my personal opinion), every filter improves the volume of the speakers, but at the cost of raising the background noise, which worsens the quality of the recording.

The dynaudnorm filter is at least 4x faster than the loudnorm filter.

The two-pass loudnorm did not make much of a difference compared with the single pass.

itz.feli...@gmail.com

Dec 20, 2020, 9:28:27 PM12/20/20

to Opencast Users

I would really love to read the above findings in the docs. Applying normalization during a transcoding step instead of using the Normalize Audio WOH gives more flexibility. Especially for the self-recorded and unsupervised lectures this year, dynaudnorm seems like a good candidate.
